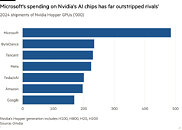

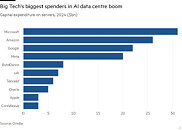

Microsoft is investing heavily in its corporate and cloud infrastructure to support the massive AI expansion. The Redmond giant has acquired nearly half a million NVIDIA "Hopper" family GPUs to support this effort. According to market research company Omdia, Microsoft was the biggest GPU buyer among hyperscalers, with data center CapEx and GPU expenditure reaching a record high. Omdia puts the company's purchases at 485,000 NVIDIA "Hopper" GPUs, including H100, H200, and H20, resulting in more than $30 billion spent on servers alone. To put things into perspective, this is about double the count of the next-biggest GPU purchaser, China's ByteDance, which acquired about 230,000 GPUs, made up of sanction-abiding H800s and regular H100s sourced from third parties.

Among US-based companies, the only ones that have come close to this GPU acquisition rate are Meta, Tesla/xAI, Amazon, and Google. They have acquired around 200,000 GPUs on average while significantly boosting their in-house chip design efforts. "NVIDIA GPUs claimed a tremendously high share of the server capex," Vlad Galabov, director of cloud and data center research at Omdia, noted, adding, "We're close to the peak." Hyperscalers like Amazon, Google, and Meta have been working on their own custom solutions for AI training and inference. For example, Google has its TPU, Amazon has its Trainium and Inferentia chips, and Meta has its MTIA. Hyperscalers are eager to develop in-house solutions, but NVIDIA's grip on the software stack, paired with timely product updates, seems hard to break. The latest "Blackwell" chips are projected to get even bigger orders, so only the sky (and the local power plant) is the limit.

View at TechPowerUp Main Site | Source
