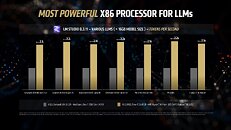

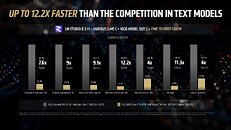

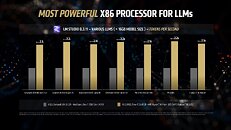

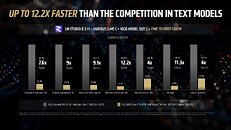

AMD's latest flagship APU, the Ryzen AI MAX+ 395 "Strix Halo," shows large performance advantages over Intel's "Lunar Lake" processors in large language model (LLM) inference workloads, according to benchmarks recently published on AMD's blog. Featuring 16 Zen 5 CPU cores, 40 RDNA 3.5 compute units, and over 50 AI TOPS via its XDNA 2 NPU, the processor achieves up to 12.2x faster response times than Intel's Core Ultra 7 258V in specific LLM scenarios. Notably, Intel's Lunar Lake has four P-cores and four E-cores, half the CPU core count of the Ryzen AI MAX+ 395, yet the performance difference is far larger than the 2x core gap. The delta grows with model size, particularly with 14-billion-parameter models that approach the limit of what standard 32 GB laptops can handle.

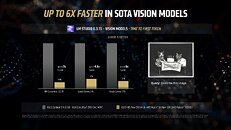

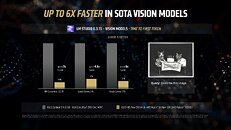

In LM Studio benchmarks using an ASUS ROG Flow Z13 with 64 GB unified memory, the integrated Radeon 8060S GPU delivered 2.2x higher token throughput than Intel's Arc 140V across various model architectures. Time-to-first-token results showed a 4x advantage in smaller models like Llama 3.2 3B Instruct, expanding to 9.1x with 7-8B-parameter models such as the DeepSeek R1 Distill variants. AMD's architecture particularly excels in multimodal vision tasks, where the Ryzen AI MAX+ 395 processed complex visual inputs up to 7x faster in IBM Granite Vision 3.2 3B and 6x faster in Google Gemma 3 12B compared to Intel's offering. The platform's support for AMD Variable Graphics Memory allows up to 96 GB to be allocated as VRAM on systems equipped with 128 GB unified memory, enabling the deployment of state-of-the-art models like Google Gemma 3 27B Vision. The processor's performance advantages extend to practical AI applications, including medical image analysis and coding assistance with the DeepSeek R1 Distill Qwen 32B model at higher-precision 6-bit quantization.
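For a rough sense of why memory capacity matters in the figures above, here is a back-of-the-envelope sketch of weight footprint versus parameter count and quantization width. It is not from AMD's blog: the function names, the nominal bits-per-weight values, and the 20% overhead allowance (for KV cache and runtime buffers) are all assumptions chosen for illustration, and real quantized files usually carry some extra bits per weight for scaling data.

```python
def weight_footprint_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits(params_billion: float, bits_per_weight: float,
         budget_gb: float, overhead_fraction: float = 0.2) -> bool:
    """Return True if weights plus an assumed overhead fit in budget_gb.

    overhead_fraction is a hypothetical allowance for KV cache and
    runtime buffers; it is not a measured value.
    """
    needed = weight_footprint_gb(params_billion, bits_per_weight)
    return needed * (1 + overhead_fraction) <= budget_gb


if __name__ == "__main__":
    # (label, parameters in billions, nominal bits per weight, memory budget in GB)
    cases = [
        ("14B @ 16-bit vs 32 GB laptop", 14, 16, 32),
        ("32B @ 6-bit vs 96 GB VRAM", 32, 6, 96),
        ("27B @ 16-bit vs 96 GB VRAM", 27, 16, 96),
    ]
    for label, params, bits, budget in cases:
        size = weight_footprint_gb(params, bits)
        print(f"{label}: ~{size:.1f} GB weights, fits={fits(params, bits, budget)}")
```

Under these assumptions, a 32B model at a nominal 6 bits per weight needs about 24 GB for weights alone, comfortably inside a 96 GB allocation, while a 14B model held at 16 bits would already crowd a 32 GB machine. The precision actually used in each of AMD's tests is not stated here, so treat the cases as illustrative only.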

View at TechPowerUp Main Site | Source
