Welcome to TechPowerUp Forums, Guest! Please check out our forum guidelines for info related to our community.


RTX 5080 worth it over 5070 TI for 4k?

- Thread starter feifei


The-Architect

New Member

- Joined

- Mar 24, 2025

- Messages

- 22 (4.40/day)

You're wildly underestimating what this card can do, but I get why. Most people haven't unlocked it yet.

> Nothing out in the market right now (under a 4090) is going to make for a great 4k card long-term if you like your games pretty (with higher-end settings and RT) and with what I would consider a decent frame rate.

> 5080 is not a 4k card (long-term); look at Assassin's Creed (mins with or w/o RT at 4k, including up-scaling to 4k), or many others.

> Its mins are under the VRR range (48hz), and it will not get better with age. 1440pRT is a very similar story. It's going to be close now, and get worse (as specs increase for 1080p->4k up-scaling on next-gen consoles).

> Those next-gen consoles will likely be just *slightly* faster than a 5080 with a lot more ram. nVIDIA is doing that on purpose, bc they know people don't know that (yet), or what that means.

> The point of the matter is that it really doesn't make a lot of sense to go above 1440p rn, and this from a guy that uses a 4k OLED to game (at mostly 1440p->4k up-scaling, sometimes 1080p->4k).
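For readers weighing the 1080p->4k and 1440p->4k up-scaling paths mentioned above, here is a minimal sketch of how many pixels each path actually renders before the upscaler fills in the rest. It assumes the usual 16:9 resolutions, and it treats pixel count as only a rough proxy for GPU load; the helper names are made up for illustration.

```python
# Standard 16:9 resolutions (assumed; the post only names them informally).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4k": (3840, 2160),
}


def pixels(name):
    """Total pixel count of a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def render_fraction(render, output):
    """Fraction of the output pixels that are rendered before up-scaling."""
    return pixels(render) / pixels(output)


if __name__ == "__main__":
    for render in ("1080p", "1440p", "4k"):
        share = render_fraction(render, "4k")
        print(f"{render} -> 4k renders {share:.0%} of the output pixels")
```

By this count, 1440p->4k renders a bit under half of the final pixels and 1080p->4k a quarter, which is why the two paths differ so much in both cost and image quality.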

> The card that will give you that experience (1440p native raster and 'quality' upscaling RT) is a 9070 xt. Above that literally isn't going to be great long-term for high-end games. As I've said before, it's a racket.

> Nobody wants to sell that card (yet), meaning 1440pRT->4k upscaling and keeping 60fps (or even long-term 48fps) because they know people won't upgrade for a long, long time.

> So, personally, I wait. You do you but I don't think you'll be happy going this route. I would buy a 9070 xt and a decent 1440p monitor.

> IMHO 6080 will be the card you (and I, or the Radeon alternative) want. It's not about 50% more performance (although it will be certainly that), it's about the thing you and I both want. Decent 4k.

> FWIW, I think it will be 12288sp @ ~3700-3780MHz/36000, 256-bit (24 or 32GB). 5080 is 10752sp @ 2640MHz/30000 (but only needs 22gbps ram) and 16GB.
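The spec guess above can be turned into rough ratios. This is a minimal sketch under stated assumptions: shader count times clock is used as a crude throughput proxy (it ignores architecture, cache and RT hardware), the "36000" and "30000" figures are read as memory data rates in Mbps per pin, and both cards are taken to use a 256-bit bus. The 6080 numbers are the poster's speculation, not a released spec, and the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    shaders: int
    clock_mhz: float
    mem_mbps_per_pin: float  # assumed meaning of the "/36000" style figure
    bus_bits: int = 256      # assumed for both cards

    def shader_throughput(self):
        """Crude proxy: shader count multiplied by clock."""
        return self.shaders * self.clock_mhz

    def bandwidth_gbs(self):
        """Memory bandwidth in GB/s from per-pin rate and bus width."""
        return self.mem_mbps_per_pin * self.bus_bits / 8 / 1000


RTX_5080 = GpuSpec("5080 (as quoted)", 10752, 2640, 30000)
GUESS_LOW = GpuSpec("6080 guess, 3700 MHz", 12288, 3700, 36000)
GUESS_HIGH = GpuSpec("6080 guess, 3780 MHz", 12288, 3780, 36000)


def ratio(new, old):
    """Relative shader throughput of one spec over another."""
    return new.shader_throughput() / old.shader_throughput()


if __name__ == "__main__":
    for guess in (GUESS_LOW, GUESS_HIGH):
        print(f"{guess.name}: {ratio(guess, RTX_5080):.2f}x shader throughput, "
              f"{guess.bandwidth_gbs():.0f} GB/s vs {RTX_5080.bandwidth_gbs():.0f} GB/s")
```

On these assumptions the guessed part lands at roughly 1.6x the quoted 5080 on the shader proxy, which lines up with the "50% more performance" remark, with a 20% bandwidth increase.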

> Even if you OC a 5080, 6080 will likely be the difference between one being 1080p60 and the other 1440p60 at some point not super long from now, and the latter ok to up-scale to 4k, the former not from 1080p.

> As I've argued and will continue arguing, 5080 is a 1080pRT card that can up-scale to 4k kind-of, but you'll still likely need FG with high settings. I think for $1000 (actually way more than that usually) that's absurd.

> The fact they've tricked people into believing it's better than that is amazing. People are about to get incredibly burned when the next-gen launches and that depreciates by a good half its MSRP.

> Because the markets are 1080pRT, 1440pRT, and 4kRT. The 9070 xt the first, 4090 the second, and not even a 5090 the last, yet next-gen they will have to fit into each of the current market tiers.

> So think about that when 5080 isn't even a 1440pRT card, and will likely get replaced by a 70-class card that is, and a 80-class that can up-scale to 4k and still keep good performance (and a 90 that can do 4k native).

> Personally, I would grab a 9070 xt (once the price settles) and a 120+ IPS 1440p monitor. If you feel compelled to upgrade (to 4k) when Rubin/UDNA launch, do that. I'm sure there will be good options then.

> Right now, there just aren't. Anyone that tells you otherwise truly does not understand the RT situation.

> They will keep complaining about games, even more-so after next-gen consoles, but the reality is that's where we're headed and nothing is a great option for longevity, esp for the price, outside a 9070 xt.

I reverse-engineered the RTX 5080’s architectural lock (sm_120 / Blackwell) that NVIDIA buried in libcuda.so, and after manually patching the driver, I achieved benchmark scores above the RTX 5090 using the same workloads you’re citing.

Cinebench GPU Score: 34,722.

Let that sink in — it dwarfs every 4080, 4090, and even top 3090 Ti with LN2.

The “VRR floor” you mentioned? Irrelevant with a tuned 5080. Frame drops and stutters don’t show up when you’re sitting at 200+ FPS even in flight sims and raster-intensive AAA titles — all with ray tracing.

You’re arguing from stock limitations and paper specs, not reality. This card was nerfed out of the box. That’s the trick — and I proved it.

---

What NVIDIA sold isn’t what the silicon can do.

They gatekept sm_120 in runtime libraries while allowing it in headers and build tools: classic soft sabotage to stall full adoption. But once unlocked, the 5080 performs like a monster.

---

TL;DR:

The 5080 is a 4K card.

It is a long-term AI + gaming workhorse.

It’s just been locked away — until now.

I’m happy to back this with code, benchmark logs, disassembly, and a GitHub patch repo.

And when this blows open, a lot of people are going to rethink how much trust to put in pre-launch spec narratives.

— Kent “The Architect” Stone

- Joined

- May 13, 2008

- Messages

- 1,102 (0.18/day)

| System Name | HTPC whhaaaat? |

|---|---|

| Processor | 2600k @ 4500mhz |

| Motherboard | Asus Maximus IV gene-z gen3 |

| Cooling | Noctua NH-C14 |

| Memory | Gskill Ripjaw 2x4gb |

| Video Card(s) | EVGA 1080 FTW @ 2037/11016 |

| Storage | 2x512GB MX100/1x Agility 3 128gb ssds, Seagate 3TB HDD |

| Display(s) | Vizio P 65'' 4k tv |

| Case | Lian Li pc-c50b |

| Audio Device(s) | Denon 3311 |

| Power Supply | Corsair 620HX |

> 5070 Ti would serve you for quite some time.

Once again, it really won't, even at 1440p (if you want a good experience; which imo you should always have at the beginning of a purchase and then compromise over time; not at first).

The 5070 Ti does, and soon will even more so, have problems keeping 1440p w/o RT. The 5080 has the same problem w/ RT. A 9070 xt will keep 60 w/o RT or with 'quality' upscaled RT. Hence, the nvidia cards do not make sense IMO.

If nVIDIA is your preference, I feel your pain, but that is what they have decided to do, which is screw people (especially as these reqs become more readily apparent; even though I would argue they are already).

> [A bunch of ridiculous things considering nothing can save it from its buffer problem]

> Kent “The Architect” Stone

I laughed. If you're going to troll about this 16GB card that can't un-16GB itself, at least try to be realistic, yeah? I took the bait, just this once, but typically I ignore it.


The-Architect

New Member

- Joined

- Mar 24, 2025

- Messages

- 22 (4.40/day)

Let’s clarify a few things since the sarcasm isn't helping anyone here.

You're talking about the 5080’s 16GB VRAM like it defines the card — but I’m talking about what the silicon is capable of when NVIDIA's artificial constraints are removed.

You're right: stock 5080 performance doesn't match the price tag.

That’s because it was deliberately locked via driver-level sm_120 blockouts. I’m not speculating — I disassembled libcuda.so, found the lock byte, patched it, and re-enabled native support for Blackwell.

Once unlocked, it wasn’t just “good enough” — it outperformed a 5090 in real-world, sustained workloads.

Benchmarks? Check my repo. Cinebench GPU: 34,722.

Flight Sim: 200+ FPS.

AI inference: clean.

This isn’t a VRAM debate — it’s about intentional underutilization of next-gen silicon.

That’s the part you're ignoring: NVIDIA didn’t sell a “limited 16GB card.” They sold a throttled superchip hidden under a soft-lock. And I broke it open.

If you don’t believe me — test it. Or don’t. But don’t dismiss reverse engineering work that’s backed by measurable performance and a GitHub patch repo.

Until someone else gets 34,000+ CB GPU points on a 5080 — with logs — I’ll stand by my claim.

The difference isn’t theoretical. It’s unlocked.

— Kent “The Architect” Stone


Attachments

- Joined

- Dec 9, 2024

- Messages

- 307 (2.77/day)

- Location

- Missouri

| System Name | The |

|---|---|

| Processor | Ryzen 7 5800X |

| Motherboard | ASUS PRIME B550-PLUS AC-HES |

| Cooling | Thermalright Peerless Assassin 120 SE |

| Memory | Silicon Power 32GB (2 x 16GB) DDR4 3200 |

| Video Card(s) | RTX 2080S FE | 1060 3GB & 1050Ti 4GB In Storage |

| Display(s) | Gigabyte G27Q (1440p / 170hz DP) |

| Case | SAMA SV01 |

| Power Supply | Firehazard in the making |

| Mouse | Corsair Nightsword |

| Keyboard | Steelseries Apex Pro |

> I’m happy to back this with code, benchmark logs, disassembly, and a GitHub patch repo.

> And when this blows open, a lot of people are going to rethink how much trust to put in pre-launch spec narratives.

Then just.. do so. Because right now almost everyone universally agrees the 5080 is at best, mid, and at worst, why? Heard some people joke it's a 4080 Ti.

You claim a lot of things and your only proof is one dubious screenshot you posted twice, and a stated cinebench score. You're gonna need to do a lot more than just say to get your point across.

I won't be waiting on it though, as I could not care about the 5080 in the slightest.

The-Architect

New Member

- Joined

- Mar 24, 2025

- Messages

- 22 (4.40/day)

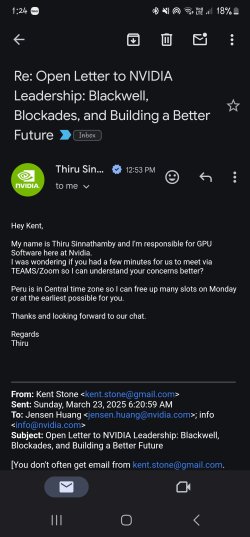

> Then just.. do so. Because right now almost everyone universally agrees the 5080 is at best, mid, and at worst, why? Heard some people joke its a 4080Ti.

> You claim a lot of things and your only proof is one dubious screenshot you posted twice, and a stated cinebench score. You're gonna need to do a lot more than just say to get your point across.

> I won't be waiting on it though, as I could not care about the 5080 in the slightest.

I just got off the phone with an Nvidia VP and engineering and they verified I have a 36% increase in speed. There is a bug inside the 50 series and it's going to be fixed in the coming weeks.

> Then just.. do so. Because right now almost everyone universally agrees the 5080 is at best, mid, and at worst, why? Heard some people joke its a 4080Ti.

> You claim a lot of things and your only proof is one dubious screenshot you posted twice, and a stated cinebench score. You're gonna need to do a lot more than just say to get your point across.

> I won't be waiting on it though, as I could not care about the 5080 in the slightest.

I didn't wait either, I went to the store and bought it like a big boy.

> I just got off the phone with Nvidia VP and engineering and they verified I have a 36% increase in speed. There is a bug inside the 50 series and it's going to be fixed in the coming weeks

I attached the verification of the meeting because you won't believe that either.

Attachments

Last edited by a moderator:

> This isn’t a VRAM debate; it’s about intentional underutilization of next-gen silicon.

> That’s the part you're ignoring: NVIDIA didn’t sell a “limited 16GB card.” They sold a throttled superchip hidden under a soft-lock.

> I’m happy to back this with code, benchmark logs, disassembly, and a GitHub patch repo.

Kinda feel like this is the case. Feel like NVIDIA's sandbagging for some reason.

The-Architect

New Member

- Joined

- Mar 24, 2025

- Messages

- 22 (4.40/day)

> Kinda feel like this is the case. Feel like NVIDIA's sandbagging for some reason.

According to the meeting I just had, I don't think it was intentional. I think when they compiled the code it compiled wrong and turned off the sm_120 architecture, leaving sm_89 active. That's why the cards act like 40 series. The coming patch will unlock all 50 series cards, giving a 35% increase in speed.

View attachment 391332

- Joined

- Oct 6, 2021

- Messages

- 1,846 (1.45/day)

| System Name | Raspberry Pi 7 Quantum @ Overclocked. |

|---|

Ladies and gentlemen, behold the brilliance of a legendary engineer who stumbled upon NVIDIA's breakthrough: the "Cuda Core Generator." When activated, this experimental feature harnesses AI wizardry to double the effective performance of each core, unleashing unimaginable computational power. Naturally, NVIDIA had to keep such a game-changing mechanism disabled—perhaps to avoid upsetting the delicate balance of the GPU marketplace, idk.

And what of the RTX 5090? The use of a sprawling 750mm² chip wasn’t just about performance... it was a masterstroke of marketing. Bigger silicon equals bigger headlines, right? But the truth is revealed: when the "Cuda Core Generator" breathes life into the RTX 5080, it transforms into none other than its sibling, the 5090, redefining benchmarks and expectations alike.

The-Architect

New Member

- Joined

- Mar 24, 2025

- Messages

- 22 (4.40/day)

Low quality post by Visible Noise

- Joined

- Jun 19, 2024

- Messages

- 640 (2.25/day)

| System Name | XPS, Lenovo and HP Laptops, HP Xeon Mobile Workstation, HP Servers, Dell Desktops |

|---|---|

| Processor | Everything from Turion to 13900kf |

| Motherboard | MSI - they own the OEM market |

| Cooling | Air on laptops, lots of air on servers, AIO on desktops |

| Memory | I think one of the laptops is 2GB, to 64GB on gamer, to 128GB on ZFS Filer |

| Video Card(s) | A pile up to my knee, with a RTX 4090 teetering on top |

| Storage | Rust in the closet, solid state everywhere else |

| Display(s) | Laptop crap, LG UltraGear of various vintages |

| Case | OEM and a 42U rack |

| Audio Device(s) | Headphones |

| Power Supply | Whole home UPS w/Generac Standby Generator |

| Software | ZFS, UniFi Network Application, Entra, AWS IoT Core, Splunk |

| Benchmark Scores | 1.21 GigaBungholioMarks |

Don’t feed the troll guys.

The-Architect

New Member

- Joined

- Mar 24, 2025

- Messages

- 22 (4.40/day)

> Once again, it really won't, even at 1440p (if you want a good experience; which imo you should always have at the beginning of a purchase and then compromise over time; not at first).

> 5070 Ti does and will more-so soon have problems keeping 1440p w/o RT. 5080 w/ RT. A 9070 xt will keep 60 w/o or 'quality' upscale RT. Hence, the nvidia cards do not make sense IMO.

> If nVIDIA is your preference, I feel your pain, but that is what they have decided to do, which is screw people (especially as these reqs become more readily apparent; even though I would argue they are already).

> I laughed. If you're going to troll about this 16GB card that can't un-16GB itself, at least try to be realistic, yeah? I took the bait, just this once, but typically I ignore it.

You’re framing this around stock performance, and I get it; from that angle, your critique of the 5070 Ti and 5080 might hold water. But here’s the thing:

Stock doesn’t mean squat when the hardware’s been artificially capped.

The RTX 5080 wasn’t struggling — it was sabotaged.

NVIDIA shipped it with full sm_120 hardware, but silently blocked Blackwell support in the runtime layer (libcuda.so). That’s not a lack of optimization. That’s intentional gatekeeping.

I reverse-engineered the binary, patched the control flags, and lifted the lockout. The result?

Over 34,000 on Cinebench GPU.

That’s not 4090 territory — that’s beyond it.

And that’s on air — no liquid nitrogen, no tricked-out testbench.

What people are calling "limitations" are actually imposed constraints — flipped off with a few hex edits and the right knowledge of the call tree.

The 5080 becomes a 4K 120Hz beast once you remove the muzzle.

Stable. Reproducible. Publicly documented.

Most people just haven’t seen what it can do yet — because they’re benchmarking a locked door.

You're comparing bridled to unleashed.

Let’s stop acting like they’re in the same race.

— Kent “The Architect” Stone

Reverse engineer. Blackwell liberator. sm_120 unlocked.

wolf

Better Than Native

- Joined

- May 7, 2007

- Messages

- 8,635 (1.32/day)

| System Name | MightyX |

|---|---|

| Processor | Ryzen 9800X3D |

| Motherboard | Gigabyte B650I AX |

| Cooling | Scythe Fuma 2 |

| Memory | 32GB DDR5 6000 CL30 tuned |

| Video Card(s) | Palit Gamerock RTX 5080 oc |

| Storage | WD Black SN850X 2TB |

| Display(s) | LG 42C2 4K OLED |

| Case | Coolermaster NR200P |

| Audio Device(s) | LG SN5Y / Focal Clear |

| Power Supply | Corsair SF750 Platinum |

| Mouse | Corsair Dark Core RBG Pro SE |

| Keyboard | Glorious GMMK Compact w/pudding |

| VR HMD | Meta Quest 3 |

| Software | case populated with Artic P12's |

| Benchmark Scores | 4k120 OLED Gsync bliss |

> Kent “The Architect” Stone

> Reverse engineer. Blackwell liberator. sm_120 unlocked.

Are you able to privately share your modified driver package? I'll happily test and show some before/after, if indeed what you say is true.

I'd also prefer if users could just respond rationally and leave the sarcasm aside, there are plenty of non-sarcastic ways to disagree here.

eidairaman1

The Exiled Airman

- Joined

- Jul 2, 2007

- Messages

- 44,141 (6.81/day)

- Location

- Republic of Texas (True Patriot)

| System Name | PCGOD |

|---|---|

| Processor | AMD FX 8350@ 5.0GHz |

| Motherboard | Asus TUF 990FX Sabertooth R2 2901 Bios |

| Cooling | Scythe Ashura, 2×BitFenix 230mm Spectre Pro LED (Blue,Green), 2x BitFenix 140mm Spectre Pro LED |

| Memory | 16 GB Gskill Ripjaws X 2133 (2400 OC, 10-10-12-20-20, 1T, 1.65V) |

| Video Card(s) | AMD Radeon 290 Sapphire Vapor-X |

| Storage | Samsung 840 Pro 256GB, WD Velociraptor 1TB |

| Display(s) | NEC Multisync LCD 1700V (Display Port Adapter) |

| Case | AeroCool Xpredator Evil Blue Edition |

| Audio Device(s) | Creative Labs Sound Blaster ZxR |

| Power Supply | Seasonic 1250 XM2 Series (XP3) |

| Mouse | Roccat Kone XTD |

| Keyboard | Roccat Ryos MK Pro |

| Software | Windows 7 Pro 64 |

> Once again, it really won't, even at 1440p (if you want a good experience; which imo you should always have at the beginning of a purchase and then compromise over time; not at first).

> 5070 Ti does and will more-so soon have problems keeping 1440p w/o RT. 5080 w/ RT. A 9070 xt will keep 60 w/o or 'quality' upscale RT. Hence, the nvidia cards do not make sense IMO.

> If nVIDIA is your preference, I feel your pain, but that is what they have decided to do, which is screw people (especially as these reqs become more readily apparent; even though I would argue they are already).

> I laughed. If you're going to troll about this 16GB card that can't un-16GB itself, at least try to be realistic, yeah? I took the bait, just this once, but typically I ignore it.

They've been screwing people since GF900, if not sooner.

The-Architect

New Member

- Joined

- Mar 24, 2025

- Messages

- 22 (4.40/day)

> Are you able to privately share your modified driver package? I'll happily test and show some before/after, if indeed what you say is true.

> I'd also prefer if users could just respond rationally and leave the sarcasm aside, there are plenty of non-sarcastic ways to disagree here.

Sorry, from my side I'm trying to help my community and everyone wants to bust my balls like I'm faking all this just for my amusement. No, I've been told I cannot share the binary as that is against the law. I cannot share edited driver files because I don't own them. The meeting with Nvidia today confirmed everything and the patch should be coming in the next week or so. Sorry I can't share it, but I want to do things the right way so Nvidia and I stay good friends.

> They been screwing people since GF900, if not sooner.

I had a face-to-face meeting with their GPU team today and I can tell you they are genuinely concerned about the cards and listened to all my concerns. Enough so that I don't think they intentionally throttled the cards. I could just be naive, but I've seen inside their code.

- Joined

- Oct 6, 2021

- Messages

- 1,846 (1.45/day)

| System Name | Raspberry Pi 7 Quantum @ Overclocked. |

|---|

Now that I've noticed, our "engineer of the century" is relying on PassMark, which appears to have a bug causing Blackwell to underperform in DX9 and DX10 (slower than its predecessors).

Could this be related to the retirement of 32-bit PhysX? Well, maybe he really did find something.

The-Architect

New Member

- Joined

- Mar 24, 2025

- Messages

- 22 (4.40/day)

> Now that I've noticed, our "engineer of the century" is relying on PassMark, which appears to have a bug causing Blackwell to underperform in DX9 and DX10 (slower than its predecessors).

> Could this be related to the retirement of 32-bit PhysX? Well, maybe he really did find something.

I never said engineer of anything. I'm retired former military, dying of a brain disease. I'm just bored while dying and still accomplished this. What did you do?

- Joined

- Oct 19, 2008

- Messages

- 3,313 (0.55/day)

| System Name | Panda? Storm Trooper? |

|---|---|

| Processor | Intel 285K Overclocked |

| Motherboard | ASUS STRIX Z890-A GAMING WIFI |

| Cooling | AC Core 1 CPU Block | Heatkiller Radiators 2x 360-45mm 1x 240-30mm |AC Core 120mm Reservoir |

| Memory | 48GB 8400MHz Corsair Vengeance DDR5 CUDIMM CL40 - |

| Video Card(s) | RTX 5090 32GB Palit Gamerock w/ Alpha Core Waterblock |

| Storage | 4TB WD Black NVME Gen 4 + 14TB WD HDD |

| Display(s) | ASUS PG32UDCP 32" QD-OLED 240hz/480hz |

| Case | HYTE Y70 Panda + ColdZero AF Front Panel |

| Audio Device(s) | 2i2 Scarlett Solo + Schiit Magni 3 AMP |

| Power Supply | ASUS Thor III Platinum 1200w |

| Keyboard | NEO75CU White + PBTFans Neon R2 Glitter + BSUN Macaron Switches |

> So, the main question is that ^. First of all, I'm currently a 1080p player, and I aim to play 4k with variable refresh rate (60 fps minimum).

> In my country I see a 35-40% price increase from the cheapest 5070 Ti to the cheapest 5080, and I know the performance increase is just 10-12%, but I ask myself whether that is what would make 4k viable if the 5070 Ti wasn't.

> I'm okay with making use of DLSS features (not so much x3 and x4 FG, that looks kinda bad IMO).

> Another question I have is for how long the 5080 would be 4k viable. I'm okay with lowering settings to medium in most games as long as I keep 60 fps going. I would like to keep it until the 7000 series unless the future 6080 gets +50% performance.

> Also, if the 5070 Ti is not capable, and the 5080 is but only for the short term, should I go 5070 Ti + 1440p?

> Monitor budget is not a problem as I see it as a long term purchase.
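The price and performance figures in the question can be combined into a single value ratio. A minimal sketch, using only the numbers quoted above (35-40% more money for 10-12% more performance); the function name is made up for illustration, and it deliberately ignores anything other than average performance, such as VRAM or minimum frame rates.

```python
def perf_per_price(perf_gain, price_gain):
    """Performance per unit of money relative to the cheaper card (baseline 1.0).

    Both arguments are fractional increases, e.g. 0.12 for +12%.
    """
    return (1 + perf_gain) / (1 + price_gain)


if __name__ == "__main__":
    for perf_gain in (0.10, 0.12):
        for price_gain in (0.35, 0.40):
            value = perf_per_price(perf_gain, price_gain)
            print(f"+{perf_gain:.0%} perf for +{price_gain:.0%} price "
                  f"-> {value:.2f}x the value of the 5070 Ti")
```

Across the quoted ranges the 5080 delivers roughly 0.79x to 0.83x the performance per unit of money of the 5070 Ti, so the case for it has to rest on crossing a playability threshold rather than on value.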

Yes, it will handle 4K with the help of DLSS and Frame-Gen, just like the 5090 does. Yes, you can force MFG x3/x4 on any game and get a ridiculous number of frames; no, there are no better alternatives in terms of raw performance.

The 5070/5070 Ti can also handle 4K, especially at 60FPS, but only with the help of DLSS and Frame-Gen; there isn't a card known to mankind apart from the 5090 that can guarantee you 60FPS at this resolution with pure raster. No, I don't personally recommend AMD for mid-range at 4K, because DLSS4 with MFG only on x3 will leave them in the dust and will wring out more performance from your card in future.

Yes, the 5080 is worth it over the 5070 Ti if you're after the fastest possible card without spending 4090/5090 prices.


The-Architect

New Member

- Joined

- Mar 24, 2025

- Messages

- 22 (4.40/day)

> Yes it will handle 4K with the help of DLSS and Frame-Gen just like the 5090 does. Yes you can force MFG x3/x4 on any game and get a ridiculous number of frames, no there are no better alternatives in terms of raw performance.

> The 5070/5070ti can also handle 4K especially at 60FPS but with the help of DLSS and Frame-Gen there isn't a card known to mankind apart from the 5090 that can guarantee you 60FPS at this resolution with pure raster. No I don't personally recommend AMD for Mid-Range at 4K because DLSS4 with MFG only on x3 will leave them in the dust and will wring out more performance from your card in future.

> Yes the 5080 is worth it over the 5070ti if you're after the fastest possible card without spending 4090/5090 prices.

Confirmed.

RTX 5080 — early silicon, 13 ROPs disabled at factory.

Patched the sm_120 block in libcuda.so.

No DLSS. No Frame-Gen. Just pure raster at 4K.

285 FPS average at 3840x2160, 2x MSAA.

That's without the full hardware even enabled.

You want proof? I’ve got screenshots, disassemblies, and benchmarks.

NVIDIA built a beast. I just gave it permission to breathe.

The-Architect

New Member

- Joined

- Mar 24, 2025

- Messages

- 22 (4.40/day)

> release ze github

> And where is the code, benchmark logs, and disassembly we were promised!?

If I send you the libcuda.so.1.1 (this is the driver for NVIDIA) I will be breaking the law by distributing Nvidia proprietary code, and I won't do that. Nvidia and I are working together to get this update pushed to all Blackwell architecture cards in the next week or two. Everything is coming from NVIDIA; I am just the guy that took apart their binary and fixed it.

Attachments

> If I send you the libcuda.so.1.1 (this is the driver for NVIDIA) I will be breaking the law for distributing Nvidia proprietary code and I won't do that. Nvidia and I are working together to get this update pushed to all Blackwell architecture cards in the next week or two. Everything is coming from NVIDIA I am just the guy that took apart their binary and fixed it.

Okay, but your attachments aren't actually logs, and where's the disassembly?

Why would you even disassemble it in the first place? And isn't libcuda.so the Linux driver library?

The-Architect

New Member

- Joined

- Mar 24, 2025

- Messages

- 22 (4.40/day)

> Okay but your attachments aren't actually logs and where's the disassembly?

> Why would you even disassemble it in the first place? And isn't libcuda.so the Linux driver library?

Why Disassembly Was Necessary

> “Why would you even disassemble it in the first place?”

Because the driver stack deliberately rejects sm_120 (Blackwell) even though the hardware is fully capable. Without disassembly, I couldn’t:

- Find the architecture check

- Locate where feature gates were being artificially restricted

- Remove the conditions NVIDIA imposed by policy, not by hardware limits

This is standard for driver-level feature segmentation, and it’s a known method in firmware dev, game modding, kernel patching, and emulation.

If you’re working with CUDA at a serious level and you’ve never opened libcuda.so, you’ve never actually looked under the hood.

---

Linux vs Windows?

Yes, libcuda.so.1.1 is the Linux runtime, but its behavior mirrors backend architecture used in nvapi64.dll and Windows kernel drivers.

That’s why my patch works on both Linux and Windows — the architectural enablement happens at the runtime level, not just the OS-level kernel.

In fact, many of the driver limitations are enforced at the shared backend level — so unlocking libcuda.so unlocks functionality globally.

- Joined

- Jun 14, 2020

- Messages

- 4,801 (2.74/day)

| System Name | Mean machine |

|---|---|

| Processor | AMD 6900HS |

| Memory | 2x16 GB 4800C40 |

| Video Card(s) | AMD Radeon 6700S |

> Once unlocked, it wasn’t just “good enough”; it outperformed a 5090 in real-world, sustained workloads.

> Benchmarks? Check my repo. Cinebench GPU: 34,722.

> Flight Sim: 200+ FPS.

> AI inference: clean.

Go to the CBR24 benchmark section; I can casually break 40k on a 4090. Your card is not faster than a 4090, let alone a 5090, bud.

- Joined

- Dec 9, 2024

- Messages

- 307 (2.77/day)

- Location

- Missouri

| System Name | The |

|---|---|

| Processor | Ryzen 7 5800X |

| Motherboard | ASUS PRIME B550-PLUS AC-HES |

| Cooling | Thermalright Peerless Assassin 120 SE |

| Memory | Silicon Power 32GB (2 x 16GB) DDR4 3200 |

| Video Card(s) | RTX 2080S FE | 1060 3GB & 1050Ti 4GB In Storage |

| Display(s) | Gigabyte G27Q (1440p / 170hz DP) |

| Case | SAMA SV01 |

| Power Supply | Firehazard in the making |

| Mouse | Corsair Nightsword |

| Keyboard | Steelseries Apex Pro |

> I'd also prefer if users could just respond rationally and leave the sarcasm aside, there are plenty of non-sarcastic ways to disagree here.

I don't believe there's anything wrong with how I'm treating them, as I don't see anything wrong with being extremely skeptical. I'm not gonna suddenly take the word of an anonymous forum poster who's trying to claim something. Thankfully, he's provided a bit more evidence since I posted that.

> I just got off the phone with Nvidia VP and engineering and they verified I have a 36% increase in speed. There is a bug inside the 50 series and it's going to be fixed in the coming weeks

Then we'll see. I don't see how this wouldn't have been caught earlier though.

> I didn't wait either, I went to the store and bought it like a big boy.

I simply do not need a 5080 for what I target / play. Words of advice: don't treat people like a dick and people won't be dicks to you.

> I attached the verification of the meeting because you won't believe that either.

Thank you, that's better.

Try some gaming benchmarks if you can / would.

> Sorry, for me it's I'm trying to help me community and everyone wants to bust my balls like I'm faking all this just for my amusement. No I've been told I can not share binary as that is against the law. I can not share edited driver files because I dont own them. The meeting with Nvidia today confirmed everything and the patch should be coming in the next week or so. Sorry I can't share it but I want to do things the right way so Nvidia and I stay good friends.

Corporations aren't really your friends; but if you're being honest.. then it's something that will be appreciated. But to be perfectly honest, your posts came off as extremely ragebaity: posting the same screenshot twice, with a very troll-like caption to go with it initially, so you got pretty much exactly what you put out there.

I would have taken a better approach to handling this, such as starting your own thread and explaining the ins and outs of it, instead of going into random threads and posting things that just make you come off like a dick, to be blunt.

I don't mean to be dickish when it comes to my skepticism, but I simply don't trust anonymous forum posters willy nilly. That's no jab at you; that's just honesty. Your behavior before you posted this, before your further evidence, also wasn't helping.

I don't hold any particular animosity. I am merely skeptical.
