I can include smiles too

My English is far from perfect, but I am pretty sure that "we didn't see manufacturers really using that standard" is somewhat different from "equals no support to you".

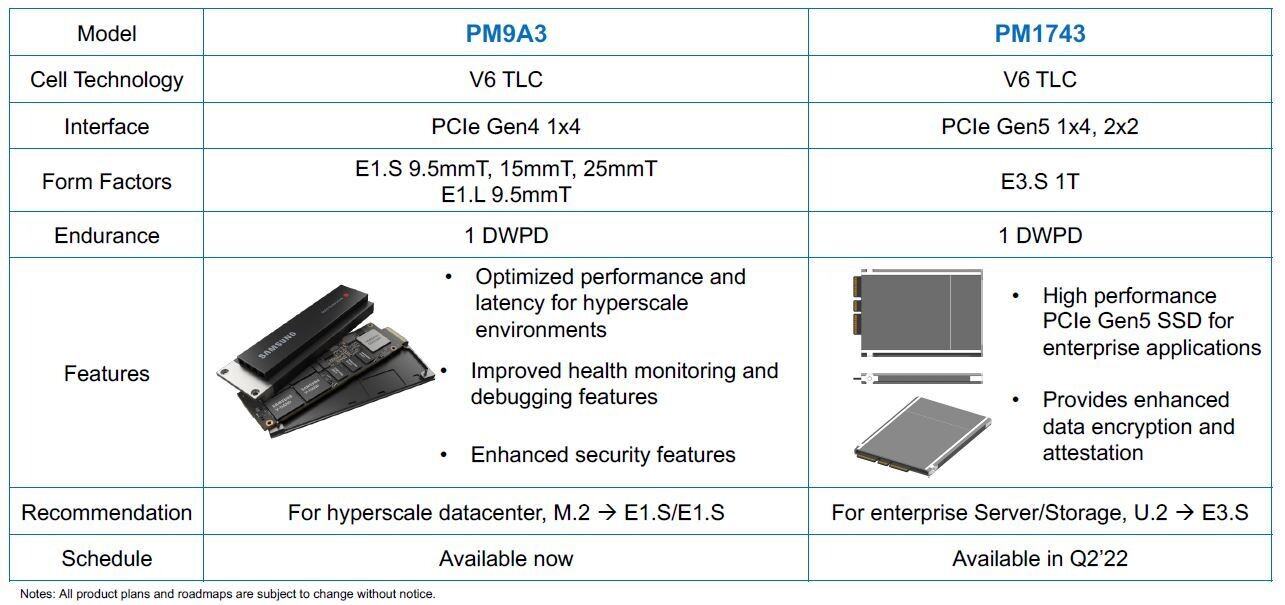

SSD manufacturers came up with PCIe 4.0 models that were not much better than PCIe 3.0 models, with the exception of sequential speeds. If I am not mistaken, Samsung didn't really rush to come out with PCIe 4.0 solutions either. And as for PCIe 4.0 with graphics cards, it's just normal for the manufacturers to use the latest version of the bus, but my point was that 4.0 doesn't offer much over 3.0 in 3D performance, which has usually been the case in recent years. Not just this last generation.

That is exactly my point. Now that Intel is coming out with PCIe 5.0 support, we will see how difficult it is to create PCIe 5.0 SSDs that are clearly faster than previous-generation SSDs in just about every benchmark. I won't remember your post by then, but I hope you will, and that you will mention me to tell me either that I was wrong or that I was probably right.

About that AQC113. Was it available after AM4 came out or after Intel supported PCIe 4.0? If it was way before Intel supported 4.0, then we have an example. But still I believe it is an exception, not the rule.

Finally, more smiles!!!!!

Came up with? I guess you don't understand how NAND flash works if that's how simple you think it is.

The issue with SSDs is not the controllers, but the NAND flash. We should see some improvements as the NAND flash makers stack more layers, but even so, the technology is quite limited if you want faster random speeds, and that's one of the reasons Intel was working on 3D XPoint memory with Micron, which later became Optane, no? It might not quite have worked out, but consumer SSDs are using a type of flash memory that was never really intended for what it's being used as today, yet it has scaled amazingly in what is just over a decade of SSDs taking over from spinning rust in just about every computer you can buy today.

Also, do you have any idea how long it takes to develop any kind of "chip" used in a modern computer or other device? It's not something you throw together in five minutes. In all fairness, the only reason there was a PCIe 4.0 NVMe controller so close to AMD's X570 launch was because AMD went to Phison and asked them to make something and gave them some cash to do it. It was what I'd call "cobbled together": it was technically a PCIe 3.0 controller with a PCIe 4.0 bus strapped on to it. Hence why it performed as it did. It was also produced on a node that wasn't really meant for something that has to handle the amount of data PCIe 4.0 can deliver, so it ran hot as anything.

How long have we used PCIe 3.0 and how long did it take until GPUs took advantage of the bus? We pretty much had to get to a GTX 1080 for it to make a difference against PCIe 1.1 at 16 lanes, based on testing by TPU. So we're obviously going to see a similar slow transition, unless something major happens in GPU design where they can take more advantage of the bus. So obviously the generational difference is going to be even smaller with 4.0 and 5.0 as long as everything else stays the same.

In this article, we investigate how performance of NVIDIA's GeForce GTX 1080 is affected when running on constrained PCI-Express bus widths such as x8 or x4. We also test all PCIe speed settings, 1.1, 2.0, and 3.0. One additional test checks on how much performance is lost when using the...

www.techpowerup.com

Did you even read the stuff that was announced these past few days? Intel will only have PCIe 5.0 for the PEG slot initially, so you won't see any SSD support for their first consumer platform, which makes this argument completely moot. So maybe end of 2022 or sometime in 2023 we'll see the first PCIe 5.0 consumer SSDs.

The AQC113 was launched a couple of months ago, but why does it matter if Intel supported PCIe 4.0 or not? We're obviously going to see a wider move towards PCIe 4.0 for many devices, of which one of the first will be USB4 host controllers, as you can see above. I don't understand why you think that only Intel can push the ecosystem forward, as they're far from the single driving force in the computer industry, as not all devices are meant for PC use only. There are plenty of ARM based server processors with PCIe 4.0 and Intel isn't even the first company with PCIe 5.0 in their processors.

I think you need to broaden your horizons a bit before making a bunch of claims about things you have limited knowledge about.

Motherboard prices went way up when Gen 4 was implemented. Scary to think how much Z690 is going to cost with a bleeding edge Gen 5 implementation. And all for something which is of no use for 99.99% of people who'll buy these desktop CPUs. Sticking with Gen 4 seems like the right move in the medium term.

For several reasons, not just because of PCIe 4.0. As for Z690, the single PCIe 5.0 slot isn't likely to increase cost in and of itself, as it shouldn't be far enough away from the CPU to require redrivers or any other kind of signal conditioners. The only thing that might increase costs marginally is if there needs to be a change to even higher-quality PCB materials to reduce noise, but I don't think that would be the case compared to a PCIe 4.0 board, as this was one of the other changes compared to PCIe 3.0 boards that increased costs.

I think integrated components like Ethernet controllers are one of the more realistic places to see this come true, as they don't have to care as much about backwards compatibility. With SSDs on the other hand, I would love to see 4.0 x2 NVMe become a thing for fast, cheap(er) drives. But those would be limited to 3.0 x2 on older boards/chipsets and thus lose significant performance (at least in benchmarks), and they would be pissed on by literally every reviewer out there for this ("yes, it's a good drive, but it sacrifices performance on older computers for no good reason", alongside the inevitable value comparison of a just-launched MSRP product vs. one that has 6-12 months of gradual price drops behind it). So I don't see it happening, even if they would be great drives and would allow for more storage slots per motherboard at the same or potentially lower board costs.

That's the problem with adopting new standards in open ecosystems with large install bases - if you break compatibility, you shrink your potential customer base dramatically.

Overall, I think this is a very good choice on AMD's part. The potential benefits of this are so far in the future that it wouldn't make sense, and the price increase for motherboards (and development costs for CPUs and chipsets) would inevitably be passed on to customers. No thanks. PCIe 4.0 is plenty fast. I'd like more options to bifurcate instead, but 5.0 can wait for quite a few years.

I guess the problem with SSDs is that PCIe 4.0 x2 gives you about the same performance as PCIe 3.0 x4 and the cost of developing a new controller is higher than continuing to use the already developed products that deliver similar performance. There ought to be benefits in things like notebooks though, as they have limited PCIe lanes. As PCIe 4.0 x2 would be considered a "budget" drive, it seems like the few controllers that are available, are DRAM-less, which isn't all that interesting to me at least.
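To put rough numbers on the "4.0 x2 is about the same as 3.0 x4" point, here is a minimal sketch that estimates one-direction link bandwidth from the per-lane transfer rate and line encoding of each PCIe generation. The function and table names are made up for illustration, and the figures ignore packet and protocol overhead, so real drives will land a bit lower.

```python
# Per-lane raw transfer rate (GT/s) and line-encoding efficiency for each
# PCIe generation. 1.x/2.0 use 8b/10b encoding, 3.0 and later use 128b/130b.
PCIE_GENERATIONS = {
    "1.1": (2.5, 8 / 10),
    "2.0": (5.0, 8 / 10),
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
    "5.0": (32.0, 128 / 130),
}


def link_bandwidth_gb_s(generation: str, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s (protocol overhead ignored)."""
    gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    # GT/s * efficiency gives usable Gbit/s per lane; divide by 8 for GB/s.
    return gt_per_s * efficiency / 8 * lanes


for gen, lanes in [("3.0", 4), ("4.0", 2), ("3.0", 2)]:
    print(f"PCIe {gen} x{lanes}: {link_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

The 3.0 x4 and 4.0 x2 links come out identical on paper, which is why a new x2 controller only pays off where lanes are scarce.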

As you point out, it's kind of frustrating that the PCIe interface couldn't have been designed in a way that it had "forward" compatibility too, in the sense that a PCIe 4.0 x2 device plugged into a PCIe 3.0 x4 interface could utilise the full bandwidth available, instead of only PCIe 3.0 x2.
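The fallback described here can be sketched in a few lines. This is a simplified model, not how link training is actually implemented: it just assumes the link settles on the highest generation and the widest lane count that both ends support, and it uses approximate per-lane figures. All names are hypothetical.

```python
# Approximate usable bandwidth per lane in GB/s (128b/130b encoding,
# protocol overhead ignored). Keys are the PCIe major generation.
GB_S_PER_LANE = {3: 0.985, 4: 1.969, 5: 3.938}


def negotiated_link(device_gen: int, device_lanes: int,
                    slot_gen: int, slot_lanes: int) -> tuple[int, int]:
    """Simplified model: both ends fall back to their common generation and width."""
    return min(device_gen, slot_gen), min(device_lanes, slot_lanes)


# A PCIe 4.0 x2 SSD plugged into a PCIe 3.0 x4 slot.
gen, lanes = negotiated_link(device_gen=4, device_lanes=2, slot_gen=3, slot_lanes=4)
print(f"Link runs at PCIe {gen}.0 x{lanes}: about {GB_S_PER_LANE[gen] * lanes:.2f} GB/s")
print(f"The slot could carry about {GB_S_PER_LANE[3] * 4:.2f} GB/s")
```

Speed and width are settled independently, so the two spare lanes in the slot can't make up for the lower per-lane rate, and the drive ends up with roughly half of what either side could do on its own.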

That's also why we're stuck with the ATX motherboard standard, even though it's not really fit for purpose any more and barely resembles what it did when it launched. That said, I do not miss AT motherboards...

It's always nice with new, faster interfaces, but in terms of PC technology, we've only just gotten a new standard and outside of NVMe storage, we're just scratching the surface of what PCIe 4.0 can offer. Maybe AMD made a "bad choice" by going with PCIe 4.0 if it's really set to be replaced by PCIe 5.0 so soon, but I highly doubt it, mainly due to the limitations of the trace lengths. PCIe 3.0 is the only standard that doesn't need additional redrivers to function over any kind of distance, at least until someone works out a better way of making motherboards. PCIe 4.0 is just about manageable, but 5.0 is going to have much bigger limitations and 6.0 might be even worse.

You'd need a different PSU, wouldn't you? Regardless, the motherboard manufacturers were pushing back against that standard, last I heard.

Motherboard manufacturers unite against Intel's efficient PSU plans | PC Gamer

Though, take it with a grain of salt, as always.

Actually, you can get a simple adapter from a current PSU to the ATX12VO connector; you just need to discount the 5 V and 3.3 V rails when you look at the total wattage of your PSU.

As such, you might have to discount 100 W or so from the total output of the PSU.
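As a back-of-the-envelope version of that rule of thumb, assuming the 5 V and 3.3 V share really is around 100 W (check the label on your own PSU for the actual combined rating), the sum looks like this. The function name and the 650 W example unit are hypothetical.

```python
def usable_12vo_watts(total_watts: float, minor_rail_watts: float = 100.0) -> float:
    """Rule-of-thumb capacity left after discounting the 5 V and 3.3 V rails.

    minor_rail_watts defaults to the rough 100 W figure from the post above;
    it is an assumption, not a value taken from any specification.
    """
    if minor_rail_watts > total_watts:
        raise ValueError("minor rail share cannot exceed the total rating")
    return total_watts - minor_rail_watts


# Hypothetical 650 W ATX unit used through an ATX12VO adapter.
print(f"Usable on 12 V: about {usable_12vo_watts(650):.0f} W")
```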