You want it in a format I can't provide. I cannot and will not transcribe an entire video for you.

I have TPU links below.

Yes it did. And after 8GB is obsolete and gone from new cards (60 series?) then the same will happen with 10GB cards next. Then 12GB etc. It's inevitable.

Games constantly get more demanding.

8GB is already in the critical zone. Only people like you are still in denial.

You're the one trying to prove 8GB is "youtuber drama". The onus is not on me to disprove your delusions.

Who cares what Nvidia compares. What matters is what consumers and reviewers compare. Nobody's buying Intel? Sure. Keep telling yourself that. I guess all those cards they produced just vanished from the shelves all by themselves?

Proving how? That he runs at Ultra settings without an accompanying frametime graph where 8GB cards get murdered?

I looked at all the performance benchmark reviews he has posted for this year's games.

11 games in total. At 1080p max settings (though not in all games, and without RT or FG) the average memory usage is 7614 MB.

7 games stay below 8GB at those settings. 4 games go over it.

6 games were run at lowest settings, 1080p, no RT/FG, and despite that half of them (3) still go over 8GB even at these low settings.

Anyone looking at these numbers and seeing how close the average is to the 8GB limit should really think twice before buying an 8GB card today.
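To put those numbers in perspective, here's a quick back-of-the-envelope sketch in Python. It only uses the figures quoted above. The one assumption is that "8GB" is treated as 8192 MB, and the variable names are just mine.

```python
# Figures quoted in this post (TPU reviews, 1080p, no RT/FG).
avg_usage_mb = 7614          # average usage at max settings
games_max, over_max = 11, 4  # games tested at max settings / games over 8GB
games_low, over_low = 6, 3   # games tested at lowest settings / games over 8GB

# Assumption: an "8GB" card is treated as 8 * 1024 = 8192 MB.
capacity_mb = 8 * 1024

# How much room is left on average before the buffer is full.
headroom_mb = capacity_mb - avg_usage_mb
headroom_pct = 100 * headroom_mb / capacity_mb

print(f"Average headroom: {headroom_mb} MB ({headroom_pct:.1f}% of the card)")
print(f"Over 8GB at max settings: {over_max}/{games_max} = {over_max / games_max:.0%}")
print(f"Over 8GB at lowest settings: {over_low}/{games_low} = {over_low / games_low:.0%}")
```

Under that assumption the average leaves well under 1 GB spare, and that's before RT or FG is turned on.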

Next year, likely more than half of the tested games will surpass 8GB even at 1080p low with no RT/FG, and you have to remember that RT and FG both increase VRAM usage even more. To say nothing of frametimes on those 8GB cards. Even if the entire buffer is not used up, the frametimes already take a nosedive, or in some cases textures simply refuse to load.

Links:

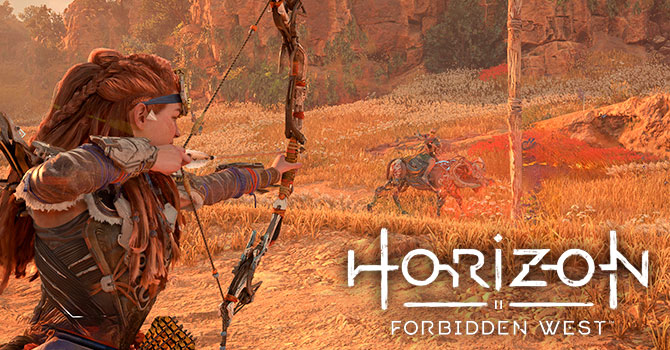

Horizon Forbidden West finally brings the PS5 Exclusive to the PC, with stunning visuals and an excellent gaming experience. There's also support for multiple upscalers and DLSS 3 Frame Generation. In our performance review, we're taking a closer look at image quality, VRAM usage, and...

www.techpowerup.com

Homeworld 3 is the latest installment in the epic space RTS series. Thanks to Unreal Engine, you get upgraded visuals and combat in real three-dimensional environments. In our performance review, we're taking a closer look at image quality, VRAM usage, and performance on a wide selection of...

www.techpowerup.com

Ghost of Tsushima is finally available for PC after being a PlayStation exclusive for four years. Thanks to the porting work by Nixxes, the game runs very well, even on weaker hardware. In our performance review, we're taking a closer look at image quality, VRAM usage, and performance on a wide...

www.techpowerup.com

In "Hellblade II," Senua returns, confronting her haunting past as one of gaming's most tragic heroes. Powered by Unreal Engine 5, the graphics are among the best we've ever seen, but hardware requirement are high, too. In our performance review, we're taking a closer look at image quality, VRAM...

www.techpowerup.com

Black Myth Wukong has over two million gamers playing the game. This souls-like action RPG is a huge hit, especially in China. Powered by Unreal Engine 5, the graphics look great, but hardware requirements are definitely not on the light side, we had to use upscaling. In our performance review...

www.techpowerup.com

Star Wars Outlaws is a new title in the iconic Star Wars universe, which lets you play as member of the criminal underworld. The game uses the Snowdrop engine, and delivers great graphics. In our performance review, we'll look at the game's graphics quality, VRAM consumption, and how it runs...

www.techpowerup.com

Space Marine 2 is the latest entry in the Warhammer 40K universe, where you step into the role of an Ultramarine and battle against the alien Tyranids. In our performance review, we'll look at the game's graphics quality, VRAM consumption, and how it runs across a range of contemporary graphics...

www.techpowerup.com

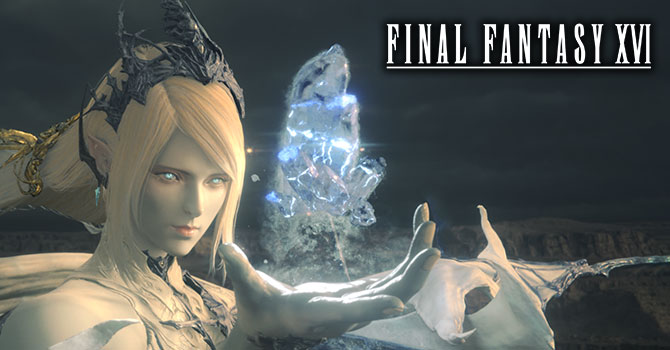

Final Fantasy XVI is finally available on PC. This is new fodder for lovers of the epic RPG series, now on a platform with good graphics and support for DLSS, FSR and FrameGen. In our performance review, we'll look at the game's graphics quality, VRAM consumption, and how it runs across a range...

www.techpowerup.com

Silent Hill 2 Remake lets you relive the iconic classic with modern graphics thanks to Unreal Engine 5. The PC version offers enhanced visuals, featuring support for cutting-edge technologies like DLSS, FSR and ray tracing. In our performance review, we'll look at the game's graphics quality...

www.techpowerup.com

Dragon Age: The Veilguard finally brings us back to the fantastic Dragon Age Universe. Built on Frostbite, the graphics are good, and there's support for RT and upscalers, too. In our performance review, we'll look at the game's graphics quality, VRAM consumption, and how it runs across a range...

www.techpowerup.com

Stalker 2 is out now and brings players back to the wasteland around the Chernobyl nuclear plant. Built using the cutting-edge Unreal Engine 5, it promises good graphics, but also high hardware requirements. In our performance review, we'll look at the game's graphics quality, VRAM consumption...

www.techpowerup.com

He's calling the 8GB 4060 Ti at $400 a joke. And it is. The 4060 8GB at $300 is not any better.

AMD had the RX 480 in 2016 with 8GB, and it's slower than the 1060. So 8GB even back then was not for semi-high-end or upper midrange like you claim.

The 1070 also cost only $379 for 8GB, which in 2016 was a good price for 8GB.

Eight years later, I and many other people expect more, because prices have risen but 8GB remains.

If you know a thing or two about this market, you should know you should avoid these cards.
