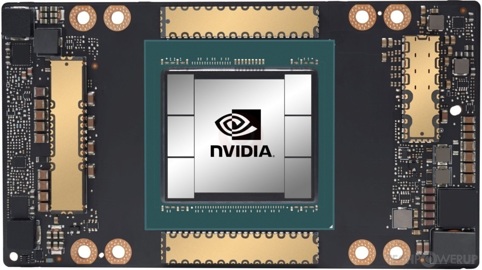

I know this is the wrong topic and all, but what the hell is Nvidia manufacturing on 7nm this year?

Both are using only a small part of 5nm, but on 7nm AMD continues with Zen3 (and possibly Zen3+) and RDNA2 at 27% of 7nm capacity, while Nvidia uses 21% for... something?

Good question. Maybe that is really just all other nodes?