I did read what you wrote. You are saying the upside to MCM is better performance and efficiency, without mentioning process and architecture improvements, which are more important. Almost everything positive you attribute to MCM is more likely due to being on 5nm and being a new architecture. Convenient that you used 4K data to show the performance difference between the 6900 XT and 7900 XTX, where the differences in bus width and memory speed are likely bigger factors than being MCM.

Also, the part in bold is not true: they have increased MSRP across every tier.

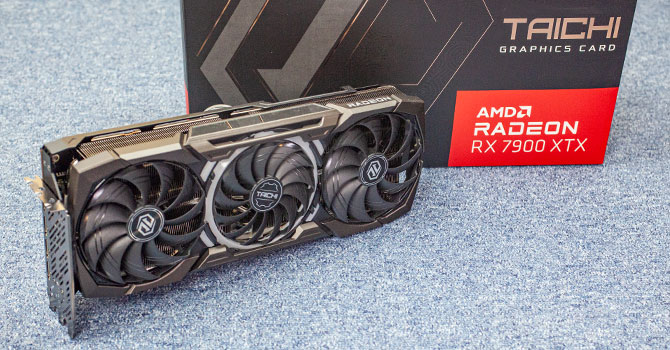

Come again? Exact same MSRP. Images taken from TPU's reference reviews for their respective releases.

Claims I’m strawmanning, then starts putting words in other people’s mouths.