Where's this magical land where the 9600K is only $220? It's $250, everywhere. The 9600K has been $220 before, but it's not right now. The 3600 is $200, everywhere. That is... 20% cheaper.

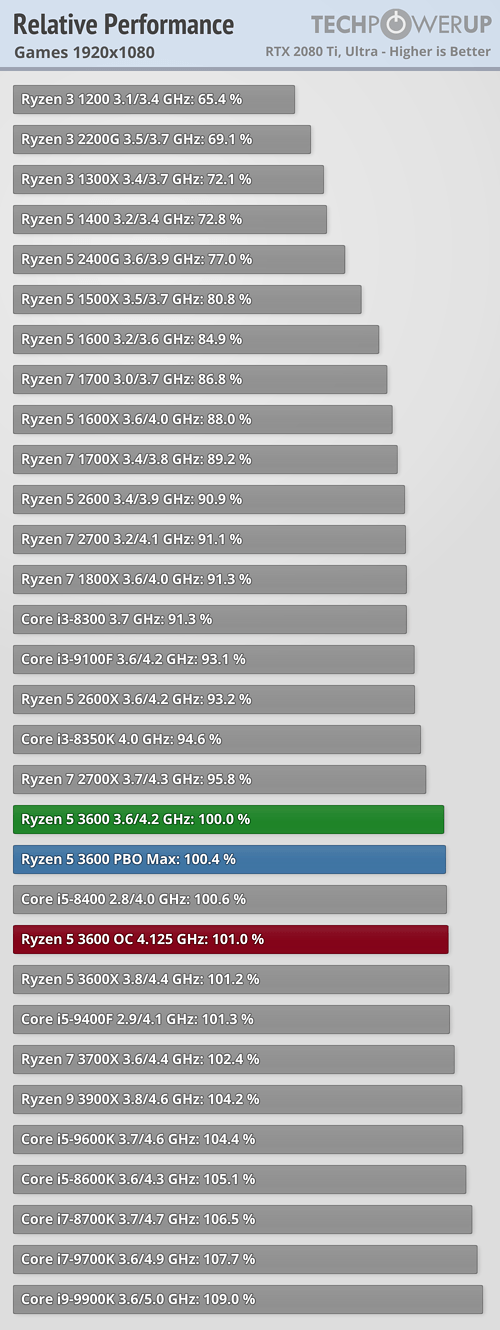

"Up to 20% faster" is just a way of ignoring that the 9600K is only 6.6% faster on average in the games tested right here on this site, at 720p with a 2080 Ti, no less. There will be outliers, which is why I said:

> Because of that, if someone absolutely has to game at 144 fps on a 144 Hz monitor, then Intel might be the right choice. For literally everyone else, Zen offers better value with near-equal performance in the majority of games.
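To put the value argument in numbers, here is a quick back-of-the-envelope sketch using only the figures quoted above ($250 vs $200, and the 9600K's 6.6% average lead). It assumes "performance" means that single average gaming figure, normalized so the 3600 = 1.0, and it ignores everything else (motherboard, cooler, other workloads).

```python
# Figures quoted in the post: current prices (USD) and the 9600K's
# average gaming lead at 720p with a 2080 Ti, normalized to 3600 = 1.0.
# Assumption: performance per dollar is a fair proxy for "value".
PRICE_9600K = 250.0
PRICE_3600 = 200.0
PERF_9600K = 1.066  # 6.6% faster on average
PERF_3600 = 1.0

# How much cheaper the 3600 is, relative to the 9600K's price.
discount = (PRICE_9600K - PRICE_3600) / PRICE_9600K

# How much more the 9600K costs, relative to the 3600's price.
premium = (PRICE_9600K - PRICE_3600) / PRICE_3600

# Average gaming performance per dollar for each chip.
value_9600k = PERF_9600K / PRICE_9600K
value_3600 = PERF_3600 / PRICE_3600
value_ratio = value_3600 / value_9600k

print(f"3600 is {discount:.0%} cheaper; 9600K costs {premium:.0%} more")
print(f"3600 gives {value_ratio - 1:.1%} more performance per dollar")
```

Put the other way around, you pay 25% more for 6.6% more average performance, which under these assumptions leaves the 3600 about 17% ahead on performance per dollar.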