AMD's Robert Hallock Confirms Lack of Manual CPU Overclocking for Ryzen 7 5800X3D

- Thread starter TheLostSwede

- Start date

- Joined

- Oct 13, 2021

- Messages

- 45 (0.03/day)

Incorrect. They said it themselves: the cache depends on the CPU core voltage. They couldn't design a separate voltage rail for it because of the compatible pin layout. So you go with what you have, really. It's an EPYC gimmick, and EPYCs were never tested against OCs, since those CPUs average clocks of 2 to 3.4 GHz.

Whether or not the extra cache can be powered down is irrelevant. It's a multi-layer cpu on a 7nm process and whether it was designed by AMD or Intel, it will always run hotter than a similar cpu design on a single layer.

- Joined

- May 31, 2016

- Messages

- 4,510 (1.38/day)

- Location

- Currently Norway

| System Name | Bro2 |
|---|---|
| Processor | Ryzen 5800X |
| Motherboard | Gigabyte X570 Aorus Elite |
| Cooling | Corsair h115i pro rgb |
| Memory | 32GB G.Skill Flare X 3200 CL14 @3800MHz CL16 |
| Video Card(s) | Powercolor 6900 XT Red Devil 1.1v@2400MHz |
| Storage | M.2 Samsung 970 Evo Plus 500GB / Samsung 860 Evo 1TB |
| Display(s) | LG 27UD69 UHD / LG 27GN950 |
| Case | Fractal Design G |
| Audio Device(s) | Realtek 5.1 |
| Power Supply | Seasonic 750W GOLD |
| Mouse | Logitech G402 |
| Keyboard | Logitech slim |
| Software | Windows 10 64 bit |

> I'm pretty sure there is a huge difference in all-core workloads. For example, I think I can hit 15k (that's how much a 5800X gets, right?) in CBR23 at around 45-50 W.

I thought you were talking about gaming, not CBR23.

Mussels

Freshwater Moderator

- Joined

- Oct 6, 2004

- Messages

- 58,412 (7.77/day)

- Location

- Oystralia

| System Name | Rainbow Sparkles (Power efficient, <350W gaming load) |
|---|---|
| Processor | Ryzen R7 5800x3D (Undervolted, 4.45GHz all core) |
| Motherboard | Asus x570-F (BIOS Modded) |
| Cooling | Alphacool Apex UV - Alphacool Eisblock XPX Aurora + EK Quantum ARGB 3090 w/ active backplate |
| Memory | 2x32GB DDR4 3600 Corsair Vengeance RGB @3866 C18-22-22-22-42 TRFC704 (1.4V Hynix MJR - SoC 1.15V) |
| Video Card(s) | Galax RTX 3090 SG 24GB: Underclocked to 1700MHz 0.750v (375W down to 250W) |
| Storage | 2TB WD SN850 NVME + 1TB Samsung 970 Pro NVME + 1TB Intel 6000P NVME USB 3.2 |
| Display(s) | Phillips 32 32M1N5800A (4k144), LG 32" (4K60), Gigabyte G32QC (2k165), Phillips 328m6fjrmb (2K144) |
| Case | Fractal Design R6 |
| Audio Device(s) | Logitech G560, Corsair Void pro RGB, Blue Yeti mic |
| Power Supply | Fractal Ion+ 2 860W (Platinum) (This thing is God-tier. Silent and TINY) |
| Mouse | Logitech G Pro wireless + Steelseries Prisma XL |
| Keyboard | Razer Huntsman TE (Sexy white keycaps) |
| VR HMD | Oculus Rift S + Quest 2 |
| Software | Windows 11 pro x64 (Yes, it's genuinely a good OS) OpenRGB - ditch the branded bloatware! |
| Benchmark Scores | Nyooom. |

> No big deal, since static OC on Ryzen CPUs is kind of pointless. Limiting the voltage to 1.3-1.35 V, I'm guessing, pretty much nerfs the boost clock override offset, but again it's no big deal since you get almost no gaming performance from it. The only thing worth doing on Ryzen CPUs is raising the PBO limits as far as your cooling allows, to keep higher all-core clocks if you need them.

It varies chip to chip.

My 5800X, for example, is one of the hotter-running ones, so a static OC gains me 200 MHz all-core and loses 500 MHz single-threaded, but also runs 30°C cooler (well, with 40°C ambients it did).

These are simply chips to be left on auto, or with minimal PBO tweaking (curve offset may remain), and that appeals to a MUCH larger userbase than the overclockers.

- Joined

- Nov 15, 2020

- Messages

- 1,010 (0.62/day)

| System Name | 1. Glasshouse 2. Odin OneEye |
|---|---|
| Processor | 1. Ryzen 9 5900X (manual PBO) 2. Ryzen 9 7900X |
| Motherboard | 1. MSI x570 Tomahawk wifi 2. Gigabyte Aorus Extreme 670E |
| Cooling | 1. Noctua NH D15 Chromax Black 2. Custom Loop 3x360mm (60mm) rads & T30 fans/Aquacomputer NEXT w/b |
| Memory | 1. G Skill Neo 16GBx4 (3600MHz 16/16/16/36) 2. Kingston Fury 16GBx2 DDR5 CL36 |
| Video Card(s) | 1. Asus Strix Vega 64 2. Powercolor Liquid Devil 7900XTX |
| Storage | 1. Corsair Force MP600 (1TB) & Sabrent Rocket 4 (2TB) 2. Kingston 3000 (1TB) and Hynix p41 (2TB) |
| Display(s) | 1. Samsung U28E590 10bit 4K@60Hz 2. LG C2 42 inch 10bit 4K@120Hz |
| Case | 1. Corsair Crystal 570X White 2. Cooler Master HAF 700 EVO |
| Audio Device(s) | 1. Creative Speakers 2. Built in LG monitor speakers |
| Power Supply | 1. Corsair RM850x 2. Superflower Titanium 1600W |
| Mouse | 1. Microsoft IntelliMouse Pro (grey) 2. Microsoft IntelliMouse Pro (black) |
| Keyboard | Leopold High End Mechanical |
| Software | Windows 11 |

Will this be 6nm or 7nm?

- Joined

- Jun 14, 2020

- Messages

- 4,968 (2.78/day)

| System Name | Mean machine |
|---|---|
| Processor | AMD 6900HS |
| Memory | 2x16 GB 4800C40 |
| Video Card(s) | AMD Radeon 6700S |

> I thought you were talking about gaming, not CBR23.

In gaming the difference is even bigger.

- Joined

- Aug 9, 2019

- Messages

- 1,812 (0.87/day)

| Processor | 7800X3D 2x16GB CO |
|---|---|
| Motherboard | Asrock B650m HDV |
| Cooling | Peerless Assassin SE |
| Memory | 2x16GB DR A-die@6000c30 tuned |
| Video Card(s) | Asus 4070 dual OC 2610@915mv |
| Storage | WD blue 1TB nvme |
| Display(s) | Lenovo G24-10 144Hz |
| Case | Corsair D4000 Airflow |
| Power Supply | EVGA GQ 650W |
| Software | Windows 10 home 64 |
| Benchmark Scores | Superposition 8k 5267 Aida64 58.5ns |

> In gaming the difference is even bigger.

Not to start another war, but I'm not so sure, since games generally utilize few cores most of the time. My 5600X in SOTTR (a game that utilizes many cores well) gets 230-240 fps CPU game avg at 1080p highest with a 76 W limit, but I get 215-225 fps with a 45 W limit, so only around a 5% fps loss last I checked. In both cases the I/O die uses 15-20 W. It may be more or less in other games, but generally less, since most games use fewer cores than SOTTR.

If you can test your 12th gen in SOTTR at 1080p highest with unlimited power and with a 45 W limit, it would be great.

Ryzen 5k scales poorly efficiency-wise in all-core loads if voltage is above 1.25 V-ish (meaning 4.5-4.75 GHz all-core depending on bin, CO value, etc.). I'm not sure where efficient scaling stops on 12th gen, but I wouldn't be surprised if it were around 1.2-1.3 V all-core like Ryzen 5k, maybe lower. My 12400F only runs all-core at 1.0 V, so I can't test scaling on that one.
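As a rough check on the 5600X numbers above, this Python sketch computes the relative fps loss and the fps per watt at each power limit. It assumes the midpoints of the quoted fps ranges (235 and 220 fps) are representative, the function names are made up for illustration, and the wattage is the configured limit, not measured draw.

```python
def relative_loss(baseline: float, limited: float) -> float:
    """Fraction of performance lost when going from baseline to limited."""
    return (baseline - limited) / baseline


def fps_per_watt(fps: float, watts: float) -> float:
    """Simple efficiency metric: frames per second per watt of power limit."""
    return fps / watts


# Midpoints of the fps ranges quoted in the post (assumption, not measured values).
fps_76w = (230 + 240) / 2   # 76 W limit
fps_45w = (215 + 225) / 2   # 45 W limit

loss = relative_loss(fps_76w, fps_45w)
print(f"fps loss at 45 W vs 76 W: {loss:.1%}")
print(f"efficiency at 76 W: {fps_per_watt(fps_76w, 76):.2f} fps/W")
print(f"efficiency at 45 W: {fps_per_watt(fps_45w, 45):.2f} fps/W")
```

With these midpoints the loss comes out at roughly 6%, close to the "around 5%" estimate, while fps per watt is clearly higher at the 45 W limit.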

- Joined

- Jun 14, 2020

- Messages

- 4,968 (2.78/day)

> If you can test your 12th gen in SOTTR at 1080p highest with unlimited power and with a 45 W limit, it would be great.

Unless we have the same card it's kind of pointless. I'll win in efficiency just because I have a 3090 that pumps 300 fps at 1080p. We could try locking the framerate to 150 or something and then check the power draw, I guess.

Igor's Lab and der8auer ran some tests, and ADL is insane in gaming efficiency.

- Joined

- Aug 9, 2019

- Messages

- 1,812 (0.87/day)

> Unless we have the same card it's kind of pointless. I'll win in efficiency just because I have a 3090 that pumps 300 fps at 1080p. We could try locking the framerate to 150 or something and then check the power draw, I guess.
>
> Igor's Lab and der8auer ran some tests, and ADL is insane in gaming efficiency.

No, you can compare: look at CPU game avg. This is what your CPU produces, and it will be equal no matter what GPU you use.

You may be right that you lose less perf when power limiting a 12th gen, but it would be nice to see how it is affected. You will get different numbers than me, but the point is to show how much lower perf gets when power limited.

- Joined

- Jun 14, 2020

- Messages

- 4,968 (2.78/day)

> No, you can compare: look at CPU game avg. This is what your CPU produces, and it will be equal no matter what GPU you use.
>
> You may be right that you lose less perf when power limiting a 12th gen, but it would be nice to see how it is affected. You will get different numbers than me, but the point is to show how much lower perf gets when power limited.

The CPU game figure is affected by the GPU, actually: downclock your GPU and you'll get higher numbers. I don't know why it works that way, but it does.

- Joined

- Aug 9, 2019

- Messages

- 1,812 (0.87/day)

> The CPU game figure is affected by the GPU, actually: downclock your GPU and you'll get higher numbers. I don't know why it works that way, but it does.

I have not seen that. I get the same CPU game avg running a UV or OC profile on the GPU, but your system might behave differently. Still, using the same GPU settings you can show how your CPU behaves, which is what I was curious about. Can you do that?

- Joined

- Jun 14, 2020

- Messages

- 4,968 (2.78/day)

> Still, using the same GPU settings you can show how your CPU behaves, which is what I was curious about. Can you do that?

Just got back home. I get 225 at 45 W with E-cores off. Too bored to try with E-cores on right now, maybe later.

- Joined

- Aug 9, 2019

- Messages

- 1,812 (0.87/day)

> Just got back home. I get 225 at 45 W with E-cores off. Too bored to try with E-cores on right now, maybe later.

But try at stock power and compare.

- Joined

- Jun 14, 2020

- Messages

- 4,968 (2.78/day)

> But try at stock power and compare.

At stock with no power limits I get around 330 if I remember correctly, but I've never checked how much it actually consumes.

- Joined

- Aug 9, 2019

- Messages

- 1,812 (0.87/day)

> At stock with no power limits I get around 330 if I remember correctly, but I've never checked how much it actually consumes.

Okay, you lose roughly a third of the perf then. How much power does it use in SOTTR when not power limited? I haven't tested the 5800X, but considering I only lose 5% fps going from 76 W to 45 W, I would be surprised if the 5800X lost a lot more. All this considered, it seems gaming is less impacted by a power limit than productivity, which for instance the 10900K test on HWUB showed.

- Joined

- Jun 14, 2020

- Messages

- 4,968 (2.78/day)

> Okay, you lose 33% perf then. How much does it use in SOTTR when not power limited?

I'll check, I never really paid attention. SOTTR, though, is kind of a weird game to test this with, because it is really memory and cache dependent more than it cares about the actual CPU.

The only game where I've noticed high power consumption is Cyberpunk with RT on at very low resolution to make it CPU bound. I've seen peaks at 170 W, which is insane for a game; usually it's under 100 W in most games.

- Joined

- Aug 9, 2019

- Messages

- 1,812 (0.87/day)

> I'll check, I never really paid attention. SOTTR, though, is kind of a weird game to test this with, because it is really memory and cache dependent more than it cares about the actual CPU.
>
> The only game where I've noticed high power consumption is Cyberpunk with RT on at very low resolution to make it CPU bound. I've seen peaks at 170 W, which is insane for a game; usually it's under 100 W in most games.

SOTTR scales with everything, which makes it weird but good for testing.

Cyberpunk has high CPU usage using DLSS or native low res, and it scales well with bandwidth (it loves DDR5 and performs much better than with DDR4). Too bad the built-in bench is inconsistent :/ If I remember correctly, CP has some sections that utilize AVX2, which might explain the high CPU usage.

- Joined

- Aug 9, 2019

- Messages

- 1,812 (0.87/day)

I just remembered that TPU did a power-scaling test:

It seems ADL is very efficient down to around a 75 W limit or maybe a bit below, but gets serious problems at 50 W, so somewhere between 50 and 75 W gaming performance tanks completely. The 5600X at a 76 W limit is as efficient as the 12900K at 75 W.

The 5800X does not behave the same way and scales well at 65 W vs stock 142 W: gaming perf at 65 W is 98-99% of that at 142 W :O How low you can go on the 5800X before performance tanks is a big question, but I'm sure it loses a lot less perf at 45 W than the 12900K, since that loses over 40% perf at 50 W, while I lose 5% at 45 W.

The voltage/frequency curve of Ryzen 5k is a bit weird: you get very good linear scaling up to around 1.1 V.

My 5600X tested at various speeds, lowest voltage/power usage in CB23:

| Clock (GHz) | Voltage (V) | Power (W) |
|---|---|---|
| 4.2 | 0.99 | 56 |
| 4.3 | 1.02 | 60 |
| 4.4 | 1.05 | 64 |
| 4.5 | 1.10 | 69 |
| 4.6 | 1.18 | 76 |
| 4.7 | 1.26 | 95 |
| 4.8 | 1.34 | 115 |

I think it is quite comparable to the 5800X. All these tests were done with 4000 RAM, so the I/O die uses a bit more power than on an average 5600X.

Even though the I/O die uses a bit of power (10-15 W under load with 3200 MHz RAM, 20-30 W with 4000 MHz RAM), the memory controller, I/O, etc. on ADL also use a fair amount of power, just on the same die as the cores.

I bet the 12600K will have better perf at low TDP due to fewer cores and less cache.
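To make the knee in the 5600X CB23 figures above easier to see, here is a small Python sketch that computes how many extra watts each additional 100 MHz costs. The data is copied from the post; the variable and function names are illustrative only.

```python
# (clock in GHz, core voltage in V, power in W) as listed in the post for CB23.
MEASUREMENTS = [
    (4.2, 0.99, 56),
    (4.3, 1.02, 60),
    (4.4, 1.05, 64),
    (4.5, 1.10, 69),
    (4.6, 1.18, 76),
    (4.7, 1.26, 95),
    (4.8, 1.34, 115),
]


def marginal_watts(points):
    """Return (start GHz, end GHz, extra watts) for each successive clock step."""
    return [
        (lo[0], hi[0], hi[2] - lo[2])
        for lo, hi in zip(points, points[1:])
    ]


steps = marginal_watts(MEASUREMENTS)
for start, end, extra in steps:
    print(f"{start:.1f} -> {end:.1f} GHz: +{extra} W")
```

Each step up to 4.6 GHz costs 4 to 7 W, while the steps to 4.7 and 4.8 GHz cost 19 and 20 W, which fits the observation that efficiency drops off above roughly 1.2 V.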

Low quality post by sillyman5454

sillyman5454

New Member

- Joined

- Mar 26, 2022

- Messages

- 2 (0.00/day)

Pure sh*t. 1 step forward, 1 step back.

Well, AMD did what it promised:

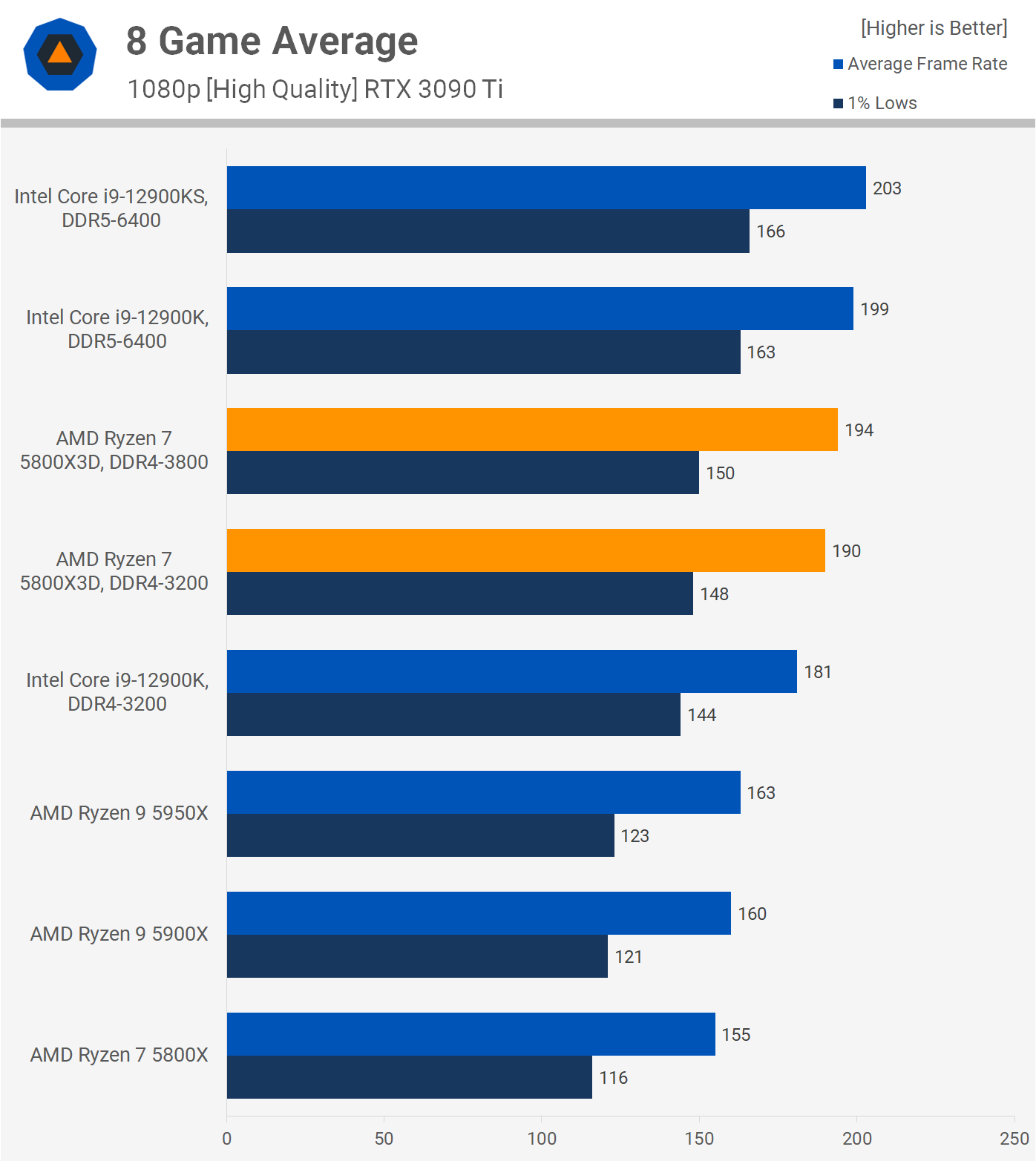

"From this small sample though, we found that the 5800X3D is slightly faster than the 12900K when using the same DDR4 memory.

It's a small 5% margin, but that did make it 19% faster than the 5900X on average, so AMD's 15% claim is looking good."

Quite an impressive comeback within the generation even without DDR5 support.

- Joined

- Jun 14, 2020

- Messages

- 4,968 (2.78/day)

> It seems ADL is very efficient down to around a 75 W limit or maybe a bit below, but gets serious problems at 50 W, so somewhere between 50 and 75 W gaming performance tanks completely.

That's because the voltage is set a bit too high by default on the 12900K. With a little undervolting I managed a 14k CBR23 score at 35 W. I can max out my 3090 with that power limit.

- Joined

- Aug 9, 2019

- Messages

- 1,812 (0.87/day)

> That's because the voltage is set a bit too high by default on the 12900K. With a little undervolting I managed a 14k CBR23 score at 35 W. I can max out my 3090 with that power limit.

One could argue that AMD does the same, and above 1.2 V the efficiency curve is garbage. It is probably the same on ADL.

Mussels

Freshwater Moderator

- Joined

- Oct 6, 2004

- Messages

- 58,412 (7.77/day)

- Location

- Oystralia

> That's because the voltage is set a bit too high by default on the 12900K. With a little undervolting I managed a 14k CBR23 score at 35 W. I can max out my 3090 with that power limit.

I can max out my 5800X + 3090 and stay under 400 W. The moment you tweak values, it's an unfair comparison.