Futuremark has released the latest addition to the 3DMark benchmark suite, the new "Time Spy" benchmark and stress test. All existing 3DMark Basic and Advanced users get limited access to "Time Spy"; existing 3DMark Advanced users can unlock its full feature set with an upgrade key priced at US $9.99. The price of 3DMark Advanced for new users has been raised from $24.99 to $29.99, as new 3DMark Advanced purchases include the fully unlocked "Time Spy." Futuremark also announced limited-period offers running until July 23, during which the "Time Spy" upgrade key for existing 3DMark Advanced users costs $4.99, and the 3DMark Advanced Edition (without "Time Spy") costs $9.99.

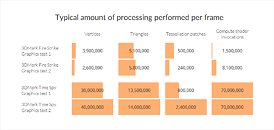

Futuremark 3DMark "Time Spy" was developed with input from AMD, NVIDIA, Intel, and Microsoft, and takes advantage of the new DirectX 12 API; for this reason, the test requires Windows 10. It imposes a far heavier 3D processing load than "Fire Strike," leveraging the low-overhead API features of DirectX 12 to present a graphically intense 3D test scene that can make any current gaming/enthusiast PC break a sweat. It can also run at several beyond-4K display resolutions.

DOWNLOAD: 3DMark with TimeSpy v2.1.2852

View at TechPowerUp Main Site
