T0@st

News Editor



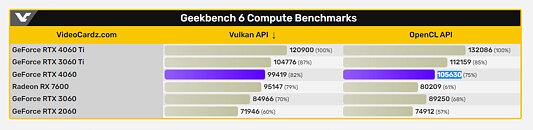

NVIDIA is launching its lower-end GeForce RTX 4060 graphics card series next week, but has kept schtum about the performance level of the smaller Ada Lovelace AD107 GPU. This more budget-friendly offering (MSRP $299) is rumored to have 3,072 CUDA cores, 24 RT cores, 96 Tensor cores, 96 TMUs, and 32 ROPs. It will likely sport 8 GB of GDDR6 memory across a 128-bit wide memory bus. Benchleaks has discovered the first set of test results via a database leak, and posted the details on social media earlier today. Two Geekbench 6 runs were conducted on a test system comprising an Intel Core i5-13600K CPU, ASUS Z790 ROG APEX motherboard, DDR5-6000 memory, and the aforementioned GeForce card.

The GPU Compute test utilizing the Vulkan API resulted in a score of 99,419, and another using OpenCL achieved 105,630. We are looking at a single sample here, so expect variations when other units get tested in Geekbench prior to the June 29 launch. The RTX 4060 is about 12% faster (in Vulkan) than its direct predecessor, the RTX 3060. The gap widens in OpenCL, where it offers an almost 20% jump over the older card. The RTX 3060 Ti is around 3-5% faster than the RTX 4060. We hope to see actual in-game benchmarking carried out soon.
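For readers who want to check the percentages, here is a minimal Python sketch of the arithmetic behind a "faster than" comparison. The two RTX 4060 scores come from the leak. The RTX 3060 figures are not measured results: they are back-calculated from the rounded "about 12%" and "almost 20%" uplifts quoted above, so treat them as rough estimates only. The function and variable names are made up for illustration.

```python
def percent_faster(score: float, baseline: float) -> float:
    """Return how much faster `score` is than `baseline`, in percent."""
    return (score / baseline - 1.0) * 100.0


def implied_baseline(score: float, uplift_percent: float) -> float:
    """Back out the baseline score implied by a quoted percentage uplift."""
    return score / (1.0 + uplift_percent / 100.0)


# RTX 4060 Geekbench 6 GPU Compute scores reported in the leak.
rtx_4060_vulkan = 99_419
rtx_4060_opencl = 105_630

# ASSUMPTION: rough RTX 3060 scores implied by the rounded uplift figures
# in the article. These are estimates, not benchmark results.
est_3060_vulkan = implied_baseline(rtx_4060_vulkan, 12.0)
est_3060_opencl = implied_baseline(rtx_4060_opencl, 20.0)

print(f"Implied RTX 3060 Vulkan score: ~{est_3060_vulkan:,.0f}")
print(f"Implied RTX 3060 OpenCL score: ~{est_3060_opencl:,.0f}")
```

Since the uplift figures are rounded and the leak covers a single sample, the implied baselines can easily be off by a few hundred points either way.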

View at TechPowerUp Main Site | Source
