Raevenlord

News Editor


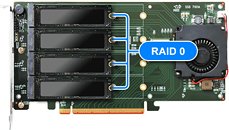

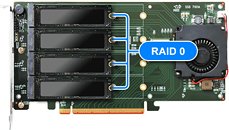

HighPoint has announced the release of a bootable quad M.2 NVMe RAID card. The SSD7102 features a PCIe x16 connector for plugging into a user's system, and is essentially an expansion card that can house up to four M.2 NVMe SSDs. Four x4 PCIe NVMe SSDs add up to 16 total PCIe lanes, so the math does check out. HighPoint has worked around CPU-bound limitations in recognizing multiple devices behind a single slot by using a PLX8747 PCIe bridge chip. Custom firmware allows these four drives in RAID to serve as a bootable device.

RAID 0 (all drives working in tandem for the best speed) and RAID 1 (drives set in pairs for data mirroring) are supported, as is single-drive boot in a JBOD configuration. In JBOD, any of the drives can be a boot volume, which means multiple OS installs are possible. The SSD7102 features a shroud and a single 50 mm fan to help cool your selection of drives (it supports drives from any vendor, though for RAID configurations it's best to pair identical drives). The card is supported under Windows 10, Server 2012 R2 (or later), Linux Kernel 3.3 (or later) and macOS 10.13 (or later). MTBF is rated at just under 1M hours, with 8 W typical power consumption, and the card will be available at an MSRP of $399.
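For anyone wondering how the lane count and the RAID modes translate into numbers, here is a rough Python sketch. It is not HighPoint's firmware logic: the function names are made up for illustration, and it assumes the usual conventions that a stripe set is limited by its smallest member, that RAID 1 mirrors drives in consecutive pairs, and that JBOD simply exposes each drive at full size.

```python
def lanes_needed(drive_count, lanes_per_drive=4):
    """Total PCIe lanes for a number of x4 NVMe drives."""
    return drive_count * lanes_per_drive


def usable_capacity_gb(sizes_gb, mode):
    """Estimate usable capacity (GB) for a list of drive sizes.

    Assumptions (not taken from HighPoint documentation):
    - raid0: every member contributes only as much as the smallest drive
    - raid1: drives are mirrored in consecutive pairs, each pair
      yielding the capacity of its smaller member
    - jbod: each drive is exposed independently at full size
    """
    if not sizes_gb:
        raise ValueError("at least one drive is required")
    if mode == "raid0":
        return len(sizes_gb) * min(sizes_gb)
    if mode == "raid1":
        if len(sizes_gb) % 2 != 0:
            raise ValueError("RAID 1 pairs need an even number of drives")
        pairs = zip(sizes_gb[0::2], sizes_gb[1::2])
        return sum(min(a, b) for a, b in pairs)
    if mode == "jbod":
        return sum(sizes_gb)
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    drives = [1000, 1000, 1000, 1000]  # four hypothetical 1 TB drives
    print("lanes:", lanes_needed(len(drives)))
    for mode in ("raid0", "raid1", "jbod"):
        print(mode, usable_capacity_gb(drives, mode), "GB")
```

Mixing drive sizes shows why identical drives are the sensible choice for RAID: under these assumptions, swapping one of the 1 TB drives for a 500 GB model halves the RAID 0 volume.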

View at TechPowerUp Main Site
