T0@st

News Editor

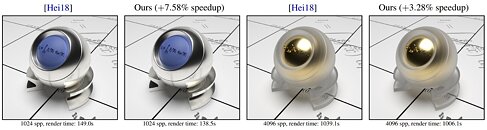

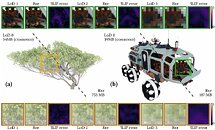

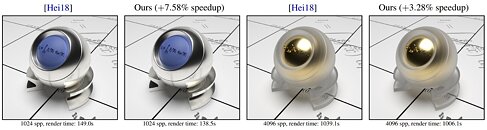

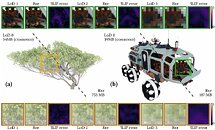

Intel's software engineers are working on path-traced light simulation and conducting neural graphics research, as documented in a recent company news article. Their aim is to make path tracing efficient enough to run on integrated graphics. The company's Graphics Research Organization is set to present its path-tracing optimizations at SIGGRAPH 2023, and its papers have already been showcased at the recent EGSR and HPG events. The team aims to get iGPUs running path tracing in real time by reducing the number of calculations required to simulate light bounces.

The article covers three different techniques, all designed to improve GPU performance: "Across the process of path tracing, the research presented in these papers demonstrates improvements in efficiency in path tracing's main building blocks, namely ray tracing, shading, and sampling. These are important components to make photorealistic rendering with path tracing available on more affordable GPUs, such as Intel Arc GPUs, and a step toward real-time performance on integrated GPUs." Although the article emphasizes Intel's in-house products, the company's "open source-first mindset" hints that its R&D could be shared with others. That could matter, since NVIDIA and AMD arguably still struggle to make ray tracing practical on their more modest graphics cards.

The article concludes: "We're excited to keep pushing these efforts for more efficiency in a talk in Advances in Real-time Rendering, SIGGRAPH's most attended course. During this talk, titled Path Tracing a Trillion Triangles, we demonstrate that with efficient algorithms, real-time path tracing requires a much less powerful GPU, and can be practical even on mid-range and integrated GPUs in the future. In the spirit of Intel's open ecosystem software mindset, we will make this cross-vendor framework open source as a sample and a test-bed for developers and practitioners."

View at TechPowerUp Main Site | Source