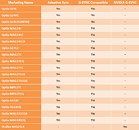

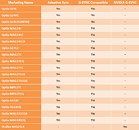

Following NVIDIA's announcement of its newest drivers, MSI monitors are effectively G-Sync Compatible! G-Sync Compatible allows G-Sync to be used on Adaptive Sync monitors. G-Sync, an anti-tearing, anti-flickering and anti-stuttering monitor technology designed by NVIDIA, was once exclusive to monitors that had passed NVIDIA certification. With its newest GPU driver release, NVIDIA now allows G-Sync to be used on monitors that support Adaptive Sync technology when they are connected to an NVIDIA graphics card.

At the moment, not all Adaptive Sync monitors on the market are fully G-Sync Compatible. MSI has been continuously testing Adaptive Sync monitors to determine whether they are G-Sync Compatible. Below are the test results.

*Models not listed are still under internal testing

Graphics cards used for the test:


- MSI RTX 2070 Ventus 8G

- MSI RTX 2080 Ventus 8G

- MSI GTX 1080 Gaming 8G

System requirements:

- Graphics card: NVIDIA 10 series (Pascal) or newer

- Graphics driver version 417.71 or later

- DisplayPort 1.2a (or above) cable

- Windows 10

- Only a single display is currently supported; multiple monitors can be connected, but no more than one display should have G-SYNC Compatible enabled
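
The requirements above can be written as a quick checklist. This is only a rough sketch: the `Setup` class, its field names and the `unmet_requirements` function are made up for illustration, the values are typed in by hand rather than read from the system, and it treats driver 417.71 itself as acceptable, which is an assumption based on that release being the one that introduced G-SYNC Compatible support.

```python
from dataclasses import dataclass
from typing import List

# DisplayPort revisions in ascending order, used only for comparison.
DP_VERSIONS = ["1.0", "1.1", "1.2", "1.2a", "1.3", "1.4", "2.0", "2.1"]
MIN_DRIVER = (417, 71)  # assumption: 417.71 itself counts as supported


@dataclass
class Setup:
    """Hypothetical, hand-filled description of a PC (not read from hardware)."""
    gpu_series: int           # 10 for GTX 10 series, 20 for RTX 20 series, ...
    driver_version: str       # e.g. "417.71"
    displayport_version: str  # e.g. "1.2a"
    os_name: str              # e.g. "Windows 10"
    gsync_displays: int       # displays with G-SYNC Compatible enabled


def unmet_requirements(s: Setup) -> List[str]:
    """Return the listed requirements that this setup does not meet."""
    problems = []
    if s.gpu_series < 10:
        problems.append("GPU is older than the 10 series (Pascal)")
    major, minor = (int(part) for part in s.driver_version.split("."))
    if (major, minor) < MIN_DRIVER:
        problems.append("driver is older than 417.71")
    if (s.displayport_version not in DP_VERSIONS
            or DP_VERSIONS.index(s.displayport_version) < DP_VERSIONS.index("1.2a")):
        problems.append("DisplayPort connection is below 1.2a")
    if s.os_name != "Windows 10":  # the list above names Windows 10 only
        problems.append("operating system is not Windows 10")
    if s.gsync_displays > 1:
        problems.append("more than one display has G-SYNC Compatible enabled")
    return problems


# Example: an RTX 20-series card on driver 417.71 with one enabled display.
print(unmet_requirements(Setup(20, "417.71", "1.2a", "Windows 10", 1)))  # → []
```

An empty list means every listed requirement is met; it says nothing about whether a particular monitor passed MSI's testing.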


Regardless. There are some arguments to be made here;
