At least with FG you know they're running the same game

By your own previous argument, it's running in a different way and should not be comparable.

I don't think it's even about fairness; it's just a shitty way of presenting those performance metrics. For the tiny percentage of people who would even care, wouldn't you want to know that this now supports a smaller data type and that you can now run smaller models?

The tiny percentage of people that care about it should be aware that those performance increases come from the extra bandwidth.

Be honest: when you saw that, did you assume it's the same data type, or did you magically understand there must be more to it before squinting at the footnotes (if you ever did before someone else pointed it out to you)? I, for one, admit I missed it until I saw someone else talk about it.

Being 100% honest, I hadn't even seen that Flux was in that graph at first lol

I just saw 1 game, then another one with DLSS stuff on top, and another one like that, and just stopped looking because I don't even care about games, and the comparison between DLSS stuff is kinda moot, as everyone here has already agreed.

I only noticed it when someone else brought up the FP4 vs FP8 stuff earlier. I got confused about what they were talking about until I noticed Flux was in there (and only for the 4090 vs 5090 comparison, not the others), so I was already aware of it by the time I saw it.

Does the AI industry even buy 5070/5080 level cards? I mean, home users getting their feet wet in AI, sure, but the wealthiest AI corps need a lot more oomph, don't they? That's who uber expensive professional cards are for. To them, everything you say about the 5070/5080 is meaningless.

5070s I don't think so, but 5080s would still be useful. If your model fits within 16GB, you can use these as cheap-yet-powerful inference stations.
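Whether a model "fits within 16GB" mostly comes down to parameter count times bytes per parameter, which is also why FP4 support matters for these cards. A rough sketch, assuming 2/1/0.5 bytes per parameter for FP16/FP8/FP4, a 16 GiB card, and a hypothetical 12B-parameter model; it counts weights only and ignores activations, caches, and other overhead:

```python
# Rough weight-only VRAM estimate at different precisions.
# Assumptions: bytes per parameter per format, a 16 GiB card (16384 MB),
# and no allowance for activations, caches, text encoders or framework
# overhead, all of which come on top of the weights in practice.

BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}
VRAM_GIB = 16


def weight_gib(num_params: float, fmt: str) -> float:
    """Size of the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[fmt] / 2**30


def fits(num_params: float, fmt: str, vram_gib: float = VRAM_GIB) -> bool:
    """True if the weights alone fit in vram_gib, with no headroom reserved."""
    return weight_gib(num_params, fmt) <= vram_gib


params = 12e9  # hypothetical 12B-parameter model
for fmt in BYTES_PER_PARAM:
    print(f"{fmt}: {weight_gib(params, fmt):.1f} GiB, fits in {VRAM_GIB} GiB: {fits(params, fmt)}")
```

For this hypothetical model, FP16 weights (about 22 GiB) don't fit, FP8 (about 11 GiB) does, and FP4 halves that again.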

Not a big company doing colocation in a big DC, but plenty of startups do something like that. Just take a look at vast.ai and you'll notice systems like this:

8x 4070S Ti on an Epyc node with 258GB of RAM.

No, they are buying B200s, which also used FP4 claims (vs FP8 for Hopper) in their marketing slides.

Look, the thing is, there was another company at CES that compared their 120W CPU against the competition's 17W chip. With no small print, btw. No one is talking about that being misleading, but we have 50 different threads, 20 pages long, complaining about Nvidia. Makes you wonder.

B200s would be for big tech; most others either buy smaller models (or even consumer GPUs) or just subscribe to some cloud provider.

Fair enough. Still wrong, imo, but as long as buyers are fine with it, who am I to argue.

They are more than fine; it means lots of VRAM savings and more perf.

Really? That's poor as well. I guess no one was really interested in that CPU. I don't even know which one you're talking about; it completely flew under my radar (although I admit I only looked at GPUs this time around).

That was AMD comparing Strix Halo against Lunar Lake (and it was the shitty 30W Lunar Lake model instead of the regular 17W one).

The RTX 4000 series broke the pattern of the next generation's near-top SKU beating the previous generation's top SKU. That no longer works, dudes.

Nvidia widened the gap between SKUs. The RTX 4080 has only about 60% of the 4090's compute units.

With the RTX 5000 series, the gap widens even further. The RTX 5080 will have only about 50% of the RTX 5090's compute units and just about 65% of the RTX 4090's.
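Those percentages are just core-count ratios. A quick check: the RTX 5080's 10752 cores come from the GB203 spec summary quoted in this thread, while the 4080 (9728), 4090 (16384) and 5090 (21760) counts are the commonly published figures, treated here as assumptions:

```python
# CUDA core counts. RTX 5080 = GB203 figure quoted in this thread;
# the others are commonly published figures (assumptions here).
CORES = {
    "RTX 4080": 9728,
    "RTX 4090": 16384,
    "RTX 5080": 10752,
    "RTX 5090": 21760,
}


def share(card: str, reference: str) -> float:
    """Fraction of the reference card's cores that `card` has."""
    return CORES[card] / CORES[reference]


for card, ref in [("RTX 4080", "RTX 4090"),
                  ("RTX 5080", "RTX 5090"),
                  ("RTX 5080", "RTX 4090")]:
    print(f"{card} has {share(card, ref):.0%} of the {ref}'s cores")
```

On those numbers the ratios come out at roughly 59%, 49% and 66%, in line with the rounded figures above.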

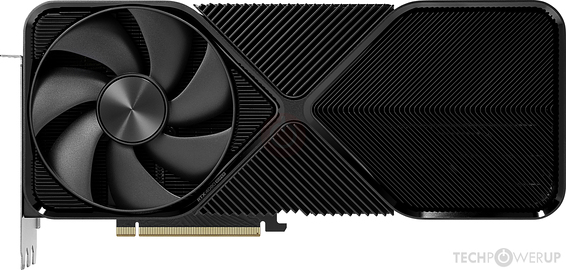

NVIDIA AD103 (RTX 4080 Super): 2550 MHz, 10240 cores, 320 TMUs, 112 ROPs, 16384 MB GDDR6X, 1438 MHz, 256-bit (www.techpowerup.com)

NVIDIA GB203 (RTX 5080): 2617 MHz, 10752 cores, 336 TMUs, 112 ROPs, 16384 MB GDDR7, 1875 MHz, 256-bit (www.techpowerup.com)

RTX 5080 is basically RTX 4080S with 5% more compute units and a bit higher clocks.
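A naive way to put "5% more compute units and a bit higher clocks" into one number is cores × boost clock. The 4080 Super and 5080 figures are from the spec summaries above; the RTX 4090's 16384 cores and 2520 MHz boost are commonly published specs, assumed here. This proxy ignores architectural changes, memory bandwidth and real clocks under load, so treat it as a sketch only:

```python
# Naive shader-throughput proxy: cores x boost clock (MHz).
# 4080 Super / 5080 values from the spec summaries in the thread;
# RTX 4090 values are assumed from commonly published specs.
SPECS = {
    "RTX 4080 Super": (10240, 2550),
    "RTX 5080": (10752, 2617),
    "RTX 4090": (16384, 2520),
}


def raw_throughput(card: str) -> int:
    """Cores multiplied by boost clock; a unitless proxy, not real FLOPS."""
    cores, boost_mhz = SPECS[card]
    return cores * boost_mhz


def relative(card: str, reference: str) -> float:
    """Proxy throughput of `card` relative to `reference`."""
    return raw_throughput(card) / raw_throughput(reference)


print(f"5080 vs 4080 Super: {relative('RTX 5080', 'RTX 4080 Super'):.3f}x")
print(f"5080 vs 4090:       {relative('RTX 5080', 'RTX 4090'):.3f}x")
```

By this crude measure the 5080 is under 8% ahead of the 4080 Super and well short of the 4090, which is the gap the argument below relies on.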

No room for any significant performance boost this time (no significant node change).

Seriously, do your math, people. I'll say it once more:

The RTX 5080 won't beat the RTX 4090 in native rendering (no DLSS or FG) because it lacks the hardware resources.

We should come back to this discussion after the 5080 reviews are up. I have no problem admitting I was wrong WHEN I was wrong.

As for the RX 9070 XT, no one really expects it to go toe to toe with the RX 7900 XTX; with the RX 7900 XT, maybe. (I'm not taking RT into account here.)

AMD clearly stated that they want to focus on making a mainstream card for the masses with vastly improved RT performance over the previous generation.

I personally estimate the RX 9070 XT to be 5% below the RX 7900 XT but to beat it in RT. Unfortunately, I don't give a f* about RT right now.

RT may become reasonable once it's less of a burden on the hardware, meaning that turning it on costs no more than 15-20% of performance.

Anything above that is just too much. My personal expectation is that with RDNA4, AMD will go from a 60% performance hit to a 30-35% hit.
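To make those percentages concrete, here's what each hit means in frame rate, using a hypothetical 100 fps raster baseline (the baseline is an assumption; the percentages are the ones above):

```python
# Frame rate left over after a given RT performance hit.
# The 100 fps raster baseline is hypothetical.


def fps_with_rt(raster_fps: float, hit: float) -> float:
    """Frame rate after losing `hit` (a fraction from 0 to 1) to RT."""
    return raster_fps * (1.0 - hit)


for hit in (0.60, 0.35, 0.30, 0.20, 0.15):
    print(f"{hit:.0%} hit: {fps_with_rt(100, hit):.0f} fps")
```

Going from a 60% to a 30-35% hit would take that hypothetical game from 40 fps to 65-70 fps with RT on.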

To be honest, even though the 4090 had almost 70% more cores than the 4080, that doesn't mean it had 70% more performance in games, in the same way that the 5090 won't have 100% higher perf than the 5080 in this scenario.

The 4090 was really bottlenecked by memory bandwidth for games, and the 5080 has a bandwidth pretty similar to it, so the gap between those two may not be as big as the difference in SMs.
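Peak memory bandwidth is bus width in bytes times the per-pin data rate. A sketch of that arithmetic: the 256-bit buses for the 4080 Super and 5080 are from the spec summaries above, while the 23 and 30 Gbps data rates and the 4090's 384-bit / 21 Gbps setup are commonly published specs, assumed here:

```python
# Peak memory bandwidth (GB/s) = bus width (bits) / 8 * per-pin rate (Gbps).
# Data rates and the RTX 4090 bus width are assumed from published specs.


def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s."""
    return bus_width_bits / 8 * data_rate_gbps


CARDS = {
    "RTX 4080 Super (GDDR6X)": (256, 23.0),
    "RTX 5080 (GDDR7)": (256, 30.0),
    "RTX 4090 (GDDR6X)": (384, 21.0),
}

for name, (bus, rate) in CARDS.items():
    print(f"{name}: {bandwidth_gb_s(bus, rate):.0f} GB/s")
```

On these assumptions the 5080 gets about 95% of the 4090's peak bandwidth while having only about 65% of its cores, which is why the SM gap alone may overstate the difference.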

Will it be faster or equal in games? I don't know; reviews should reveal that once they're available, but I wouldn't be surprised if it is (just as I wouldn't be surprised if it isn't). Game perf doesn't scale linearly with either memory bandwidth or compute units, so it's hard to estimate anything.