Raevenlord

News Editor


Until now, only users with Intel's latest Kaby Lake processors could enjoy 4K Netflix, owing to some strict DRM requirements. Now, NVIDIA is taking it upon itself to let users with one of its GTX 10 series graphics cards (absent the 1050, at least for now) enjoy some 4K Netflix and chillin'. You do have to jump through a few hoops to get there, but none of them should pose a problem.


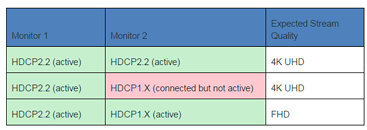

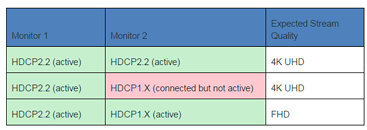


The requirements to enable Netflix UHD playback, as per NVIDIA, are as follows:

- NVIDIA Driver version exclusively provided via Microsoft Windows Insider Program (currently 381.74).

  - No other GeForce driver will support this functionality at this time.

  - If you are not currently registered for WIP, follow this link for instructions to join: https://insider.windows.com/

- NVIDIA Pascal based GPU, GeForce GTX 1050 or greater with minimum 3GB memory

- HDCP 2.2 capable monitor(s). Please see the additional section below if you are using multiple monitors and/or multiple GPUs.

- Microsoft Edge browser or Netflix app from the Windows Store

- Approximately 25Mbps (or faster) internet connection.
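For anyone who wants to sanity-check their own setup, the checklist above can be written down as a small Python sketch. Everything here is hypothetical: the `SystemInfo` fields and the `unmet_requirements` function are made up for illustration and do not query real hardware or any NVIDIA or Netflix API. The thresholds are simply the ones quoted in the list, and the exact-match driver check assumes 381.74 is still the only supporting driver.

```python
from dataclasses import dataclass
from typing import List

# Values quoted in NVIDIA's requirement list; the names are illustrative only.
REQUIRED_DRIVER = "381.74"          # assumption: exact match, the only driver listed
MIN_VRAM_GB = 3
MIN_BANDWIDTH_MBPS = 25
SUPPORTED_CLIENTS = {"edge", "netflix_app"}


@dataclass
class SystemInfo:
    """Hand-filled description of a system (nothing is detected automatically)."""
    driver_version: str
    gpu_is_pascal: bool
    vram_gb: float
    all_monitors_hdcp22: bool
    client: str
    bandwidth_mbps: float


def unmet_requirements(info: SystemInfo) -> List[str]:
    """Return the names of the listed requirements that the system fails."""
    problems = []
    if info.driver_version != REQUIRED_DRIVER:
        problems.append("driver")
    if not info.gpu_is_pascal:
        problems.append("gpu")
    if info.vram_gb < MIN_VRAM_GB:
        problems.append("vram")
    if not info.all_monitors_hdcp22:
        problems.append("hdcp")
    if info.client not in SUPPORTED_CLIENTS:
        problems.append("client")
    if info.bandwidth_mbps < MIN_BANDWIDTH_MBPS:
        problems.append("bandwidth")
    return problems


if __name__ == "__main__":
    rig = SystemInfo("381.74", True, 8, True, "edge", 50)
    print(unmet_requirements(rig) or "All listed requirements met")
```

An empty list means every listed requirement is satisfied; any entry names the item to fix first.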

Single or multi GPU multi monitor configuration

In case of a multi monitor configuration on a single GPU or multiple GPUs where GPUs are not linked together in SLI/LDA mode, 4K UHD streaming will happen only if all the active monitors are HDCP2.2 capable. If any of the active monitors is not HDCP2.2 capable, the quality will be downgraded to FHD.
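The multi-monitor rule quoted above boils down to an "all or nothing" check, which a short Python sketch makes explicit. The `stream_quality` function is a hypothetical helper written for illustration, not part of any NVIDIA or Netflix software, and it only covers the non-SLI case described in the quote.

```python
from typing import List


def stream_quality(active_monitors_hdcp22: List[bool]) -> str:
    """Return "UHD" only if every active monitor is HDCP 2.2 capable, else "FHD".

    Each list entry is True when that active monitor supports HDCP 2.2.
    Applies to a single GPU, or multiple GPUs not linked in SLI/LDA mode.
    """
    if not active_monitors_hdcp22:
        raise ValueError("at least one active monitor is required")
    return "UHD" if all(active_monitors_hdcp22) else "FHD"


if __name__ == "__main__":
    # One older monitor without HDCP 2.2 drags the stream down to FHD.
    print(stream_quality([True, True]))
    print(stream_quality([True, False]))
```

In practice this means a single non-compliant secondary display is enough to lose 4K, so disabling or unplugging it is the simplest workaround.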

What do you think? Is this enough to win you over to the green camp? Do you use Netflix on your PC?

View at TechPowerUp Main Site