NVIDIA SLI GeForce RTX 2080 Ti and RTX 2080 with NVLink Review

Introduction

We reviewed a bunch of GeForce RTX 20-series graphics cards last week, which left us with plenty of cards to test SLI performance. Since its introduction some 14 years ago, NVIDIA SLI (Scalable Link Interface) has become arguably the most popular multi-GPU technology, owing largely to the sheer popularity of the GeForce brand. Multi-GPU lets you team up multiple graphics processors to increase performance, so you can either build a bleeding-edge gaming rig with the fastest graphics cards or upgrade your graphics performance incrementally over time.

Until a few generations ago, SLI was supported across NVIDIA's product stack, from the high end through the mainstream, with only the cheapest sub-$100 graphics cards lacking it. NVIDIA has since narrowed its availability to only the most expensive graphics cards in its lineup. With the RTX 20-series, SLI support starts at $800 with the RTX 2080; not even the $500 RTX 2070 gets it, probably because the TU106 silicon it's based on physically lacks the NVLink interface.

Speaking of which, NVLink is a high-bandwidth, point-to-point interconnect that NVIDIA originally designed for the enterprise market to build meshes of AI compute GPUs. It lets an application span an abstract network of multiple GPUs and access all of their memory across a flat address space at very high bandwidth and very low latency. With its "Turing" silicon, NVIDIA is bringing NVLink to the consumer space, so up to two GPUs can talk to each other at 100 GB/s and extremely low latency without burdening the PCI-Express bus.

NVIDIA has always refrained from taking the bridgeless route for multi-GPU, unlike rival AMD. NVIDIA's multi-GPU philosophy prioritizes low-latency direct communication between the GPUs, so that problems inherent to multi-GPU setups, such as uneven display output or micro-stutter, can be alleviated. If you've read our recent GeForce RTX 2080 Ti PCI-Express scaling article, you'll know that the RTX 2080 Ti is able to saturate PCI-Express 3.0 x8; had NVIDIA taken AMD's approach of doing multi-GPU over PCIe, it could have ended badly.
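For a sense of scale, the following Python snippet works out PCIe 3.0 bandwidth from its 8 GT/s per-lane signalling rate and 128b/130b line encoding, and sets it against the NVLink figures quoted in this review. It is a back-of-the-envelope sketch: packet and protocol overhead are ignored, so real PCIe throughput is somewhat lower, and the helper name `pcie3_gbps_per_direction` is purely illustrative.

```python
# Back-of-the-envelope comparison of PCIe 3.0 link bandwidth with the
# NVLink figures quoted in this review. Only line-encoding overhead is
# modelled; packet headers and flow control are ignored, so real-world
# PCIe throughput is somewhat lower than these numbers.

PCIE3_TRANSFERS_PER_S = 8e9   # PCIe 3.0 signalling rate per lane (8 GT/s)
PCIE3_ENCODING = 128 / 130    # 128b/130b line-encoding efficiency


def pcie3_gbps_per_direction(lanes: int) -> float:
    """Usable PCIe 3.0 bandwidth in GB/s, one direction (illustrative helper)."""
    bits_per_s = PCIE3_TRANSFERS_PER_S * PCIE3_ENCODING * lanes
    return bits_per_s / 8 / 1e9  # bits -> bytes -> GB


# Bidirectional NVLink bandwidth as stated in this review.
NVLINK_BIDIR_GBPS = {"RTX 2080 Ti": 100.0, "RTX 2080": 50.0}

if __name__ == "__main__":
    for lanes in (8, 16):
        one_way = pcie3_gbps_per_direction(lanes)
        print(f"PCIe 3.0 x{lanes}: {one_way:.2f} GB/s per direction, "
              f"{2 * one_way:.2f} GB/s bidirectional")

    x8_bidir = 2 * pcie3_gbps_per_direction(8)
    for card, bw in NVLINK_BIDIR_GBPS.items():
        print(f"{card} NVLink: {bw:.0f} GB/s bidirectional "
              f"(~{bw / x8_bidir:.1f}x PCIe 3.0 x8)")
```

By this estimate, PCIe 3.0 x8 offers roughly 15.8 GB/s with both directions combined, so the RTX 2080 Ti's 100 GB/s NVLink is around six times that.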

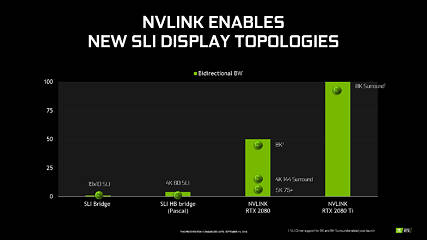

Whatever happened to SLI-HB, you ask? Good question. Introduced with "Pascal," SLI-HB doubled the interface bandwidth between GPUs so it wouldn't bottleneck the setup at higher resolutions, such as 4K. NVLink provides enough bandwidth for an SLI setup to cope with even higher display formats than SLI-HB can handle, such as 4K at 120 Hz, 5K at 60 Hz, or even 8K for the craziest enthusiasts out there. More importantly, according to NVIDIA, it makes 3D Vision Surround setups with higher-resolution monitors possible. The NVLink bridge is more complex in design than SLI-HB and is only sold separately, at $79 apiece.
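Why higher display formats stress the bridge can be estimated with simple arithmetic. The sketch below assumes 2-way alternate-frame rendering (AFR), in which the secondary GPU renders every other frame and hands it to the primary GPU driving the display, and uncompressed 4-byte pixels. Both are simplifying assumptions rather than a description of NVIDIA's driver, and `bridge_gbps` is a hypothetical helper.

```python
# Rough estimate of the frame data a multi-GPU bridge must carry.
# Assumptions (not NVIDIA specifications):
#   * alternate-frame rendering: the primary GPU renders 1/gpus of the frames
#     itself, and every other frame is sent to it over the bridge;
#   * uncompressed framebuffers at 4 bytes per pixel (8-bit RGBA).

BYTES_PER_PIXEL = 4  # assumption: 8-bit RGBA


def bridge_gbps(width: int, height: int, refresh_hz: int, gpus: int = 2) -> float:
    """Hypothetical helper: GB/s of finished frames crossing the bridge."""
    frame_bytes = width * height * BYTES_PER_PIXEL
    remote_fraction = (gpus - 1) / gpus  # frames rendered on secondary GPUs
    return frame_bytes * refresh_hz * remote_fraction / 1e9


MODES = {
    "4K 60 Hz": (3840, 2160, 60),
    "4K 120 Hz": (3840, 2160, 120),
    "5K 60 Hz": (5120, 2880, 60),
    "8K 60 Hz": (7680, 4320, 60),
}

if __name__ == "__main__":
    for name, (w, h, hz) in MODES.items():
        print(f"{name}: ~{bridge_gbps(w, h, hz):.2f} GB/s over the bridge")
```

Under these assumptions, 4K at 120 Hz needs about 2 GB/s and 8K at 60 Hz about 4 GB/s of frame traffic, which NVLink's 50 to 100 GB/s covers with a wide margin; any other inter-GPU traffic is not modelled here.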

In this review, we are testing 2-way SLI setups of the GeForce RTX 2080 Ti and GeForce RTX 2080, alongside a freshly re-benched GeForce GTX 1080 Ti SLI. Unlike with "Pascal" cards, 3-way and 4-way SLI setups aren't possible, not even unofficially. The RTX 2080 Ti SLI setup is put through our entire set of graphics card benchmarks to shape your expectations as an early adopter with very deep pockets. We are very curious to see how many of the AAA titles we added to our VGA bench over the past 20 months play well with SLI.

The NVLink SLI Bridge

NVIDIA's NVLink bridge shares some of its design language with the latest GeForce RTX "Founders Edition" graphics cards. It comes in two physical sizes, depending on the spacing between the PCIe slots your two graphics cards are installed in.

With "Volta" and "Turing," NVIDIA is implementing the 2nd generation NVLink protocol with up to two 8-lane sublinks. The TU102 supports two x8 sublinks making up one link (making it mesh-capable in its Quadro RTX avatar) and is theoretically capable of 300 GB/s, while the TU104 supports just one x8 link capable of up to 150 GB/s. On the GeForce RTX 20-series, NVIDIA is using either fewer lanes or lower clocks, since it doesn't envision setups with more than two GPUs. RTX 2080 Ti SLI hence gets 100 GB/s of bidirectional NVLink bandwidth, while RTX 2080 SLI gets 50 GB/s.
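The GeForce figures quoted above are consistent with every x8 sublink contributing the same bandwidth. Assuming that, the per-direction rate below is derived from this review's own numbers rather than taken from an NVIDIA specification, with $n$ the number of x8 sublinks (two on TU102, one on TU104):

$$
B_{\text{bidir}} = n \times 2 \times B_{\text{dir}}, \qquad
B_{\text{dir}} = \frac{100\ \text{GB/s}}{2 \times 2} = 25\ \text{GB/s}
$$

$$
\text{RTX 2080 Ti: } 2 \times 2 \times 25\ \text{GB/s} = 100\ \text{GB/s}, \qquad
\text{RTX 2080: } 1 \times 2 \times 25\ \text{GB/s} = 50\ \text{GB/s}
$$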

Disassembly

The NVLink bridge has a C-shaped design, unlike the rectangular SLI-HB bridges. The NVLink connector has a higher pin count (124 pins) than SLI-HB (52 pins), yet the fiberglass PCB looks narrower, probably because it's multi-layer and uses vias near the connectors. This would explain why you don't see flexible NVLink bridges printed on polyethylene substrates: those would need to be single-layer, running the entire width of the NVLink connector and resembling an ugly IDE-style ribbon cable that would hamper airflow for the Founders Edition axial-flow cooler.

There seem to be two tiny chips inside the shroud responsible for the LED lighting. This is purely cosmetic; at best, it could indicate that an SLI link is active between the two GPUs. The NVLink bridge is still a "dumb" piece of hardware, like a cable, with no real logic of its own. Asking $79 for it is quite outrageous.
