RTX 4090 & 53 Games: Ryzen 7 5800X vs Core i9-12900K

- Thread starter W1zzard

- Start date

- Joined

- May 21, 2009

- Messages

- 276 (0.05/day)

| Processor | AMD Ryzen 5 4600G @4300mhz |

|---|---|

| Motherboard | MSI B550-Pro VC |

| Cooling | Scythe Mugen 5 Black Edition |

| Memory | 16GB DDR4 4133Mhz Dual Channel |

| Video Card(s) | IGP AMD Vega 7 Renoir @2300mhz (8GB Shared memory) |

| Storage | 256GB NVMe PCI-E 3.0 - 6TB HDD - 4TB HDD |

| Display(s) | Samsung SyncMaster T22B350 |

| Software | Xubuntu 24.04 LTS x64 + Windows 10 x64 |

53 games!!!!!!!

And not one DX9!!!

"After hundreds of individual benchmarks, we present you with a fair idea of just how much the GeForce RTX 4090 "Ada" graphics card is bottlenecked by the CPU."

A question for the software/hardware experts out there.....

When it is bound by CPU, how much of that 'boundness' would be due to

-IPC

-Frequency

-Cache

-Architecture/IMC/ram speed/BIOS/OS (branch prediction, HPET, other OS oddities, etc.)

-Other (API/optimization, instruction sets, etc., misc., other?)

or basically impossible to say?
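One rough way to frame the question above is the textbook "iron law" split: per-frame CPU time is a core-bound part (instructions × CPI / frequency) plus a memory-stall part (cache misses × miss latency). The sketch below is only that, a sketch. Every number in `BASELINE` is invented for illustration and is not a measurement of the 5800X, the 12900K, or any game; the function and scenario names are hypothetical too.

```python
def frame_cpu_time_s(instructions, base_cpi, freq_hz, misses_per_instr, miss_latency_s):
    """CPU time for one frame: core-bound part plus memory-stall part."""
    # Core-bound work shrinks with better IPC (lower CPI) or higher clocks.
    core_time = instructions * base_cpi / freq_hz
    # Stall time shrinks with more cache (fewer misses) or lower memory latency.
    stall_time = instructions * misses_per_instr * miss_latency_s
    return core_time + stall_time


# Hypothetical baseline: all values are made up for illustration only.
BASELINE = dict(
    instructions=20e6,       # instructions the CPU retires per frame
    base_cpi=0.5,            # cycles per instruction with a perfect cache
    freq_hz=4.5e9,           # core clock
    misses_per_instr=0.002,  # misses that go all the way to RAM
    miss_latency_s=80e-9,    # time lost per such miss
)


def fps_with(**changes):
    """CPU-limited frames per second with some baseline values overridden."""
    return 1.0 / frame_cpu_time_s(**{**BASELINE, **changes})


scenarios = {
    "baseline": fps_with(),
    "+10% frequency": fps_with(freq_hz=BASELINE["freq_hz"] * 1.10),
    "+10% IPC": fps_with(base_cpi=BASELINE["base_cpi"] / 1.10),
    "half the cache misses": fps_with(misses_per_instr=BASELINE["misses_per_instr"] / 2),
}

for name, fps in scenarios.items():
    print(f"{name:>22}: {fps:6.1f} fps")
```

In this toy model IPC and frequency are interchangeable, and how much cache helps depends entirely on the miss rate you assume, which varies per game and per scene. That is a big part of why a clean percentage split between the factors is so hard to give.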

Yeah this will be good to add

- Joined

- Dec 19, 2008

- Messages

- 320 (0.05/day)

- Location

- WA, USA

| System Name | Desktop |

|---|---|

| Processor | AMD Ryzen 7945HX3D |

| Motherboard | BD790i X3D |

| Memory | 64GB DDR5 5600 |

| Video Card(s) | AMD RX 9070 XT |

| Display(s) | AOC CU34G2X |

| Power Supply | 850w |

This test uses the normal X, not the X3D. X3D lacks good clocks. That's why the Intel is faster overall. But the same Intel consumes roughly double the power to accomplish it.

Poor threading, ultimately. Remember when Microsoft was teasing DX12? Closer to the metal? How 2.3Ghz with multiple cores would be just fine, but years later it's still a Ghz battle? This is closely tied to the OS as well, of course.

- Joined

- Dec 22, 2011

- Messages

- 3,947 (0.80/day)

| Processor | AMD Ryzen 7 5700X3D |

|---|---|

| Motherboard | MSI MAG B550 TOMAHAWK |

| Cooling | Thermalright Peerless Assassin 120 SE |

| Memory | Team Group Dark Pro 8Pack Edition 3600Mhz CL16 |

| Video Card(s) | Sapphire AMD Radeon RX 9070 XT NITRO+ |

| Storage | Kingston A2000 1TB + Seagate HDD workhorse |

| Display(s) | Hisense 55" U7K Mini-LED 4K@144Hz |

| Case | Thermaltake Ceres 500 TG ARGB |

| Power Supply | Seasonic Focus GX-850 |

| Mouse | Razer Deathadder Chroma |

| Keyboard | Logitech UltraX |

| Software | Windows 11 |

Ouch, pretty brutal for the red team, help me Obi-wan 5800X3D-nobi, you're my only hope. Still for the price the 5800X does great... averaged out over all titles.

Yeah, we all know that the X3D version is the 'real' gaming CPU from AMD.

No 5800X3D? Is it on the menu (for later) at the very least?

Why not both?

5800X3D for sure me thinks

@W1zzard I know it's not very common for CPU comparisons, but I wonder if you could test a few games with ray-tracing on as well. I find RT to be a fairly CPU-intensive task, even at high-resolution, and the little CPU comparison data with RT enabled we do have from outlets like Eurogamer and Hardware Unboxed back this up. It's a real-world test case that's relevant to many people and might be useful to include in these comparisons as well. Cyberpunk 2077, Hitman 3, and Spider-Man Remastered with high object draw distance are three games that come to mind for really hammering the CPU in certain scenarios with RT on.


- Joined

- Jul 13, 2016

- Messages

- 3,757 (1.14/day)

| Processor | Ryzen 9800X3D |

|---|---|

| Motherboard | ASRock X670E Taichi |

| Cooling | Noctua NH-D15 Chromax |

| Memory | 64GB DDR5 6000 CL26 |

| Video Card(s) | MSI RTX 4090 Trio |

| Storage | P5800X 1.6TB 4x 15.36TB Micron 9300 Pro 4x WD Black 8TB M.2 |

| Display(s) | Acer Predator XB3 27" 240 Hz |

| Case | Thermaltake Core X9 |

| Audio Device(s) | JDS Element IV, DCA Aeon II |

| Power Supply | Seasonic Prime Titanium 850w |

| Mouse | PMM P-305 |

| Keyboard | Wooting HE60 |

| VR HMD | Valve Index |

| Software | Win 10 |

Then why not use DDR5-7200.

I mean you can't even buy 32GB of DDR4-4000 C14. You then have to OC it past XMP settings, you're playing silicon lotto at this point.

DDR4-4000 C16 is the best you can do on XMP, and only about 5% of Zen 3 will let you run IF 2000 1:1

Why not just use the standard test bench, which represents what probably 80%+ of people can actually do (DDR5-6000 and DDR4-3600 C14)?
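For anyone wondering where "IF 2000" comes from: DDR transfers data twice per clock, so DDR4-4000 has a 2000 MHz memory clock, and 1:1 mode on Zen 3 needs the Infinity Fabric clock to match it. A minimal sketch of that arithmetic follows. The 1900 MHz ceiling is an assumed value for a hypothetical chip, not a measured one, and the function names are made up for this example.

```python
def memory_clock_mhz(transfer_rate_mts):
    """DDR moves data twice per clock, so the memory clock is half the MT/s rating."""
    return transfer_rate_mts / 2


def can_run_one_to_one(transfer_rate_mts, max_stable_fclk_mhz):
    """True if the Infinity Fabric clock can match the memory clock (1:1 mode)."""
    return memory_clock_mhz(transfer_rate_mts) <= max_stable_fclk_mhz


# Hypothetical chip whose fabric stays stable up to 1900 MHz (assumed value).
MAX_STABLE_FCLK_MHZ = 1900

for rate in (3600, 4000):
    needed = memory_clock_mhz(rate)
    ok = can_run_one_to_one(rate, MAX_STABLE_FCLK_MHZ)
    print(f"DDR4-{rate}: needs FCLK {needed:.0f} MHz, 1:1 possible: {ok}")
```

On a chip like the assumed one, DDR4-3600 runs 1:1 without trouble while DDR4-4000 does not, which is the silicon lottery being described.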

To be fair hardly anyone is running a 4090 in general right now. The price precludes it from being in the majority of people's hands.

I do agree in regards to memory. OC memory should not be used unless the piece is specifically about memory scaling / is a section in a product review regarding memory OC.

That said, this comparison should have never been done with such a discrepancy in memory or CPU tier. If the intent is to show how much performance reasonable high-end systems can squeeze out of the 4090 then the comparison should have been the 5800X vs the 12700K, both with DDR4 memory. If the intent is to show how much the fastest from Intel and AMD can squeeze out of the 4090 the comparison should be the 5800X3D vs 7950X vs 12900K all with the fastest RAM that'll run reliably. For DDR5 that's DDR5 6000 CL30 and for DDR4 3600 CL14.
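To put the kits named in this thread on one scale, first-word CAS latency in nanoseconds is CL × 2000 / (MT/s). A quick sketch, with the caveat that this is only the CAS part and not total memory latency, which also depends on the memory controller, subtimings and fabric; the function name is just for illustration.

```python
def cas_latency_ns(transfer_rate_mts, cas_cycles):
    """First-word CAS latency in nanoseconds.

    One memory clock lasts 2000 / (MT/s) nanoseconds, because the clock
    runs at half the transfer rate.
    """
    return cas_cycles * 2000 / transfer_rate_mts


# Kits mentioned in the thread: (transfer rate in MT/s, CAS latency in cycles).
KITS = {
    "DDR4-3600 CL14": (3600, 14),
    "DDR4-4000 CL14": (4000, 14),
    "DDR4-4000 CL16": (4000, 16),
    "DDR5-6000 CL30": (6000, 30),
}

for name, (rate, cl) in KITS.items():
    print(f"{name}: {cas_latency_ns(rate, cl):.2f} ns")
```

So DDR4-3600 CL14 sits at roughly 7.8 ns and DDR5-6000 CL30 at 10 ns; DDR5 makes up for that with bandwidth rather than with CAS latency.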

You can tell the biggest reason for this comparison is a result of criticism pointed towards the RTX 4090 review where it was criticized that the original test platform with the 5800x was holding back the RTX 4090.

This is addressing that criticism and testing it rather than simply saying the 5800x is a good enough platform for such a high end card, particularly at 4k.

And this article validates that criticism. It is humble for Wizzard to publish this article.

All articles are not ads for AMD where AMD need to be shown in the best light possible.

Yes, different reviewers have different approaches to reviews that may impact the results. For example OS and RAM choice in addition to how data is gathered. It's important to look at many reviews and examine the results. If one review obtained abnormal results the objective thing would be to review methodology and look for ways to improve, assuming of course those differences are not the result of a difference in approach. Some reviewers for example prefer to appeal to average customers by using slower RAM and others like including 720p results for certain tests.

- Joined

- Jan 27, 2015

- Messages

- 1,912 (0.50/day)

| System Name | Legion |

|---|---|

| Processor | i7-12700KF |

| Motherboard | Asus Z690-Plus TUF Gaming WiFi D5 |

| Cooling | Arctic Liquid Freezer 2 240mm AIO |

| Memory | PNY MAKO DDR5-6000 C36-36-36-76 |

| Video Card(s) | PowerColor Hellhound 6700 XT 12GB |

| Storage | WD SN770 512GB m.2, Samsung 980 Pro m.2 2TB |

| Display(s) | Acer K272HUL 1440p / 34" MSI MAG341CQ 3440x1440 |

| Case | Montech Air X |

| Power Supply | Corsair CX750M |

| Mouse | Logitech MX Anywhere 25 |

| Keyboard | Logitech MX Keys |

| Software | Lots |


It wasn't any of those intents from what I read, it was to see what changing the GPU bench test setup TPU uses from a 5800X (the current standard) to a 12900K (one of the contenders at the time they went to 5800X) would reveal. It's in the verbiage of the article.

I just think they should have stayed with their normal DDR4-3600 setup.

- Joined

- Apr 17, 2014

- Messages

- 236 (0.06/day)

| System Name | 14900KF |

|---|---|

| Processor | i9-14900KF |

| Motherboard | ROG Z790-Apex |

| Cooling | Custom water loop: D5 |

| Memory | G-SKill 7200 DDR5 |

| Video Card(s) | RTX 4080 |

| Storage | M.2 and Sata SSD's |

| Display(s) | LG 4K OLED GSYNC compatible |

| Case | Fractal Mesh |

| Audio Device(s) | sound blaster Z |

| Power Supply | Corsair 1200i |

| Mouse | Logitech HERO G502 |

| Keyboard | Corsair K70R cherry red |

| Software | Win11 |

| Benchmark Scores | bench score are for people who don't game. |

Conclusion, AMD fan boy hearts be broken.

How so? The 5800X costs less than the 12900K and eats way less energy. The 5700X has almost the same performance and runs even cooler.

The 5800X was used with single-rank memory instead of dual-rank, while the 12900K was used with DDR5 memory.

Not to mention that the 5800X has a 5800X3D version, and these are different gens.

This test shows people that right now you don't need a super-duper CPU (unless you own the $2000 GPU), and you will be fine with a basic 5600X/5700X/5900X or 12400F/12600K/12700K. It has nothing to do with "Intel vs AMD".


Right. Thanks for taking time to do all those tests. Gordon from PC World said earlier today that his hair got grey from years of testing and he had to shave it. I hope this is not the case with you after so many tests ;-)

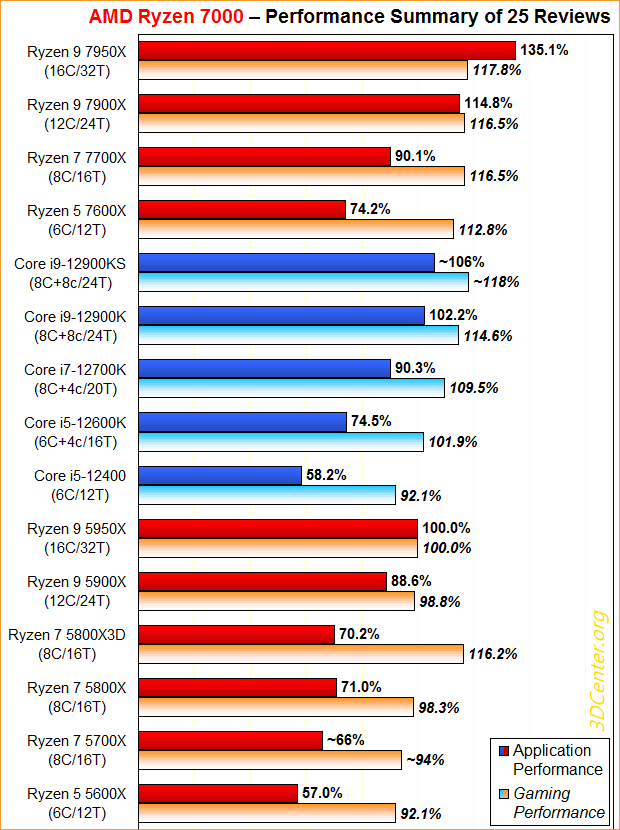

Back to business, this test with 4090 shows that nothing fundamentally has changed, following so many tests with Ampere cards. In 3D Center's meta-analysis from December 2021, 12900K is ~16% faster in 1080p gaming than 5800X, so within margin of error. This graph has added 7000 CPUs too.

By the way, 5800X, 5900X and 5950X have very similar performance in 1080p gaming, within less than 2% difference.

Recent testing of 7950X showed that gaming performance is even better when one CCD is switched off and gaming is faster with 8 cores only due to latency penalty between CCDs. So, testing with 5800X is fine.


It does not really matter. It was an academic probe. Nothing has changed that we did not know before.

Comparing an i9-12900K priced at 699,90€ with a 339,90€ Ryzen 7 5800X?? Should be a Ryzen 9 5950X (614,90€) maybe.
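The price point can be made concrete with a rough performance-per-euro sketch. It combines the two prices quoted above with the ~16% 1080p gap cited earlier from 3D Center's meta-analysis. Mixing those two sources is an assumption made purely for illustration; it is not a result from the TPU article, and the function name is hypothetical.

```python
def perf_per_euro(relative_perf, price_eur):
    """Relative gaming performance bought per euro spent on the CPU."""
    return relative_perf / price_eur


# Prices as quoted in the thread; performance normalized to the 5800X = 1.00.
# The 1.16 figure is the ~16% 1080p lead cited from the meta-analysis (assumed here).
r7_5800x = perf_per_euro(relative_perf=1.00, price_eur=339.90)
i9_12900k = perf_per_euro(relative_perf=1.16, price_eur=699.90)

value_ratio = r7_5800x / i9_12900k
print(f"5800X delivers {value_ratio:.2f}x the performance per euro of the 12900K")
```

Under those assumptions the 5800X comes out well ahead on value even though it loses on absolute frame rate, and the gap would be wider still at 4K where the performance difference shrinks.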


Please don't post nonsense on a platform with serious discussions. It's embarrassing. Step up the game.

- Joined

- Jan 20, 2019

- Messages

- 1,802 (0.76/day)

- Location

- London, UK

| System Name | ❶ Oooh (2024) ❷ Aaaah (2021) ❸ Ahemm (2017) |

|---|---|

| Processor | ❶ 5800X3D ❷ i7-9700K ❸ i7-7700K |

| Motherboard | ❶ X570-F ❷ Z390-E ❸ Z270-E |

| Cooling | ❶ ALFIII 360 ❷ X62 + X72 (GPU mod) ❸ X62 |

| Memory | ❶ 32-3600/16 ❷ 32-3200/16 ❸ 16-3200/16 |

| Video Card(s) | ❶ 3080 X Trio ❷ 2080TI (AIOmod) ❸ 1080TI |

| Storage | ❶ NVME/SATA-SSD/HDD ❷ <SAME ❸ <SAME |

| Display(s) | ❶ 1440/165/IPS ❷ 1440+4KTV ❸ 1080/144/IPS |

| Case | ❶ BQ Silent 601 ❷ Cors 465X ❸ S340-Elite |

| Audio Device(s) | ❶ HyperX C2 ❷ HyperX C2 ❸ Logi G432 |

| Power Supply | ❶ HX1200 Plat ❷ RM750X ❸ EVGA 650W G2 |

| Mouse | ❶ Logi G Pro ❷ Razer Bas V3 ❸ Razer Bas V3 |

| Keyboard | ❶ Logi G915 TKL ❷ Anne P2 ❸ Logi G610 |

| Software | ❶ Win 11 ❷ 10 ❸ 10 |

| Benchmark Scores | I have wrestled bandwidths, Tussled with voltages, Handcuffed Overclocks, Thrown Gigahertz in Jail |


Got a link to the above chart? Will we get to see individual game results too alongside test setup notes and display resolutions?

One of the things I admire about TPU charts is the wealth of info that comes with it... a second source with a similar break-up would be great.

15% slower on average is far away from "brutal". Nonsense. Plus, if someone plays in 4K, the difference in experience is pretty much negligible. However, your pocket will feel a real and deep difference by deciding to game with halo CPU 12900K.
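Side note on the numbers being thrown around: "X% slower" and "Y% faster" are not the same percentage, because each one uses a different CPU as the 100% baseline. A small sketch of the conversion follows; the function names are made up for this example.

```python
def slower_to_faster(pct_slower):
    """If B is pct_slower % slower than A, return how many % faster A is than B."""
    return (1 / (1 - pct_slower / 100) - 1) * 100


def faster_to_slower(pct_faster):
    """If A is pct_faster % faster than B, return how many % slower B is than A."""
    return (1 - 1 / (1 + pct_faster / 100)) * 100


print(f"15% slower  -> the other CPU is {slower_to_faster(15):.1f}% faster")
print(f"16% faster  -> the other CPU is {faster_to_slower(16):.1f}% slower")
```

So a CPU that is 15% slower faces a rival that is about 17.6% faster, and a 16% lead corresponds to being about 13.8% behind. Worth keeping in mind when comparing figures from different reviews.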

- Joined

- Mar 21, 2016

- Messages

- 2,761 (0.81/day)

Should I test 5800X3D or faster RAM speed?

Definitely a weird comparison with the 12900K. The locked Raptor Lake chips below the 13600K, when they arrive, and the older 12600K are closer comparisons for the 5800X.

On the other hand, comparisons with the 5800X3D, the 12700K, and also the 13600K on DDR4 would, I think, be the fairest. If you're comparing with DDR5, however, you'd probably have to compare a locked Raptor Lake chip below the 13600K eventually, or the previous-generation 12600K. The difference is the expected DDR5 price and platform cost relative to DDR4 price and platform cost. That's even factoring out the other variable of already owning DDR4 memory, which makes a value comparison with DDR5 even trickier between newer and older chips.

As for the 5800X3D or a 5800X with faster RAM: the former, since even a 5800X with faster memory is a really hard comparison against a 12900K. You'd have to be comparing a very premium, obscure DDR4 kit like 4800MT/s CL17 or 4000MT/s CL14 that most wouldn't be tempted to buy. That's exceptional binning for DDR4, though it comes with single-rank modules and limited capacity. The cost difference is too steep for it to be a practical comparison that's not heavily slanted.

A comparison of the 5800X3D with 4000MT/s CL14 against a 13600K with a more affordable Z690 board and the best DDR5 kit that matches the CPU/MB/RAM cost of the AMD configuration would be a good one to see. The 13600K should win at MT and could challenge the 5800X3D at gaming more than expected, but then again a crazy kit of very binned DDR4 might make the 5800X3D even more exciting where it already excels.

Seeing the same cost investment between the two would be a nice showdown: which comes out on top, in which scenarios, and how is the power draw on each? Keep it as money neutral as possible, within about +/- $20.


We know now that changing GPU from Ampere to Lovelace does not make a significant difference for Zen3 and Alder Lake CPU average performance in gaming. See #89

With the 3D SKU, I doubt we will find something novel with Nvidia GPUs, but I might be wrong. Testing the 5800X3D will be more interesting with RDNA3 GPUs, because we know from HUB tests that SAM works slightly better on AMD's systems and brings more gains.

RAM could be more exciting with 7700X or 7900X vs. 12900K.

3600MHz is a sweet spot for Zen3, but even testing with different RAM did not uncover anything that we did not know before. It's all roughly the same, within margin of error.

I'm more shocked that this is posted live to the world (and the fact that 53 games were tested with that bad RAM) and that someone who has so many systems didn't realize yet that 4000MHz is not good for Zen 3, especially with those really high timings. Just to be clear, I don't have a $500 board (I'm on a B550 Pro4, a $100 board), and I use 2x8 Ballistix 3600 CL16 (E-die) that I picked up for a bit more than 50€ on amazon.de. Actually, this is ONE of the reasons I don't like techpowerup, or at least, their "testers".

You are free not to like anyone and anything, but you could put some basic effort into polite communication and not post personal and sarcastic comments against staff who put a lot of time into this. You can always suggest changes in testing regime without being rude.

- Joined

- Dec 22, 2011

- Messages

- 3,947 (0.80/day)

| Processor | AMD Ryzen 7 5700X3D |

|---|---|

| Motherboard | MSI MAG B550 TOMAHAWK |

| Cooling | Thermalright Peerless Assassin 120 SE |

| Memory | Team Group Dark Pro 8Pack Edition 3600Mhz CL16 |

| Video Card(s) | Sapphire AMD Radeon RX 9070 XT NITRO+ |

| Storage | Kingston A2000 1TB + Seagate HDD workhorse |

| Display(s) | Hisense 55" U7K Mini-LED 4K@144Hz |

| Case | Thermaltake Ceres 500 TG ARGB |

| Power Supply | Seasonic Focus GX-850 |

| Mouse | Razer Deathadder Chroma |

| Keyboard | Logitech UltraX |

| Software | Windows 11 |


Average does tend to soothe the pain. I'm sure a cheaper 12600 would also leave the 5800X behind, but hey, I get your point... percentages shrink to nothing with my 3700X at 4K too.

Devils indeed do cry.

Here. 12600 did not have any 'oomph' to offer either, at any resolution.

- Joined

- Dec 22, 2011

- Messages

- 3,947 (0.80/day)

| Processor | AMD Ryzen 7 5700X3D |

|---|---|

| Motherboard | MSI MAG B550 TOMAHAWK |

| Cooling | Thermalright Peerless Assassin 120 SE |

| Memory | Team Group Dark Pro 8Pack Edition 3600Mhz CL16 |

| Video Card(s) | Sapphire AMD Radeon RX 9070 XT NITRO+ |

| Storage | Kingston A2000 1TB + Seagate HDD workhorse |

| Display(s) | Hisense 55" U7K Mini-LED 4K@144Hz |

| Case | Thermaltake Ceres 500 TG ARGB |

| Power Supply | Seasonic Focus GX-850 |

| Mouse | Razer Deathadder Chroma |

| Keyboard | Logitech UltraX |

| Software | Windows 11 |

So basically you're upset with these results, I get it.

Why would I be upset? And, results have not changed.

- Joined

- Dec 22, 2011

- Messages

- 3,947 (0.80/day)

| Processor | AMD Ryzen 7 5700X3D |

|---|---|

| Motherboard | MSI MAG B550 TOMAHAWK |

| Cooling | Thermalright Peerless Assassin 120 SE |

| Memory | Team Group Dark Pro 8Pack Edition 3600Mhz CL16 |

| Video Card(s) | Sapphire AMD Radeon RX 9070 XT NITRO+ |

| Storage | Kingston A2000 1TB + Seagate HDD workhorse |

| Display(s) | Hisense 55" U7K Mini-LED 4K@144Hz |

| Case | Thermaltake Ceres 500 TG ARGB |

| Power Supply | Seasonic Focus GX-850 |

| Mouse | Razer Deathadder Chroma |

| Keyboard | Logitech UltraX |

| Software | Windows 11 |

Just a hunch. I looked at all the green bars to the right and wondered if anyone was bothered by the results. Hey, AMD will be fine.

- Joined

- Apr 18, 2013

- Messages

- 1,260 (0.28/day)

- Location

- Artem S. Tashkinov

The amount of work put into this review is staggering.

Thank you @W1zzard.

The only issue is that the RTX 4090 is a luxury card for like 0.5% of gamers, and the GTX 1060 still rocks the world. The past 3-4 years of GPUs have been insane. There's nothing even close to the GTX 1060 in terms of price/performance. Probably the best/most popular GPU that's ever been released.


- Joined

- May 3, 2018

- Messages

- 2,881 (1.10/day)

Things will get real interesting when we have Zen 4 V-Cache CPUs vs the 13700K and 13900K. The 13700K should handily beat the 12900K across the board; basically it's an improved version in all ways. The 13900K is going to win quite a few productivity tests against the 7950X just for having 24 cores, but will lose quite a few too, I expect. Clocks will win it for Intel in gaming in a lot of cases against standard Zen 4, but I expect the V-Cache models to rule the roost.