Now you're changing your tune? You flat-out denied that the same happened with 2GB, 4GB and 6GB. Now you're saying that it's natural evolution and you agree with me that it will happen with 10GB, 12GB etc. in the future? Stop flip-flopping and make up your mind.

So this means you don't even know what you're talking about, since you've never experienced this yourself.

Only a denier would look at 7.6GB on an 8GB card and say, yeah, that oughta be enough...

Did how? You provided zero links to disprove those YouTubers. Who are people gonna believe?

A random guy with a few hundred posts on a forum he joined a few weeks ago, or a person with decades of experience and millions of subs?

Only an Nvidia fanboy would say something as moronic as that. I think everyone who's rational would agree that Nvidia is ahead, but Intel is not generations behind with "primitive software". They have all the same features as Nvidia. What holds Intel back is its own management and the low volume of those cards.

Oh, so now they're poor, or 1080p is an edge case. Didn't you say earlier that 1080p is the most used resolution? Now suddenly it's an edge case...

Oh, so you looked at average framerate numbers and concluded that the 4060 has zero issues.

What about 1% lows? Frametimes? What about image quality (textures not loading in), etc.?

Average framerate is only one metric, and by that measure even ancient SLI was totally OK with zero issues...
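To illustrate why average fps alone can hide stutter, here is a minimal Python sketch that computes both average fps and a 1% low from a list of frametimes. Reviewers use several slightly different definitions of "1% low"; this sketch assumes the average fps over the slowest 1% of frames. The frametime data is made up for illustration and not taken from any review.

```python
# Minimal sketch: average fps vs. 1% low fps from frametimes (in milliseconds).
# ASSUMPTION: "1% low" = average fps over the slowest 1% of frames; reviewers
# use several slightly different definitions.


def average_fps(frametimes_ms: list[float]) -> float:
    """Frames rendered divided by total elapsed time."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)


def one_percent_low_fps(frametimes_ms: list[float]) -> float:
    """Average fps over the slowest 1% of frames (at least one frame)."""
    slowest = sorted(frametimes_ms, reverse=True)
    n = max(1, len(slowest) // 100)
    return 1000.0 * n / sum(slowest[:n])


# Made-up example: 990 smooth frames at 10 ms plus 10 hitches at 100 ms.
frames = [10.0] * 990 + [100.0] * 10
print(f"average: {average_fps(frames):.1f} fps")   # 91.7 fps
print(f"1% low:  {one_percent_low_fps(frames):.1f} fps")  # 10.0 fps
```

The average still looks like a comfortable 90+ fps, while the 1% low exposes the hitches, which is exactly what an average-only chart doesn't show.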

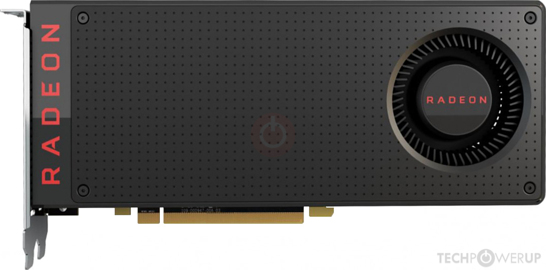

From TPU's own chart: the RX 480 is 4% slower than the GTX 1060 6GB and 7% faster than the GTX 1060 3GB. I guess that's competitive.

It is you who has nothing to contribute to this discussion: calling 1080p an edge case, ignoring mounting evidence of 8GB problems and cheering for Nvidia.

I hope at least your green check clears.

And even others are mocking you for your delusions. But sure. Go on buying your high-end cards and believing 8GB is enough.

I'm done arguing with a fanboy. And don't bother replying. You're on my blocklist now.

I'm not going to keep going around in circles with you on arguments you have 100% lost. By the arguments provided, and even by the links and proof I was talking about from the beginning, you were firmly wrong; now you're only deflecting, and I have no time for this senseless, endless debate. For you this is just about "being right" now, not about technology, interest and technical facts. 8 GB is 100% enough for even most 1440p games today, so it will easily be enough for 1080p for the foreseeable future. End of story. Also, Nvidia would 100% not risk an 8 GB card if you were right, but, surprise, you're obviously not right. Otherwise, debate this with @W1zzard perhaps. It's his data. Maybe you'd listen to him.

edit: to make this a bit clearer, I'm gonna explain what the game review data shows for the RTX 4060, and what it does not show:

- all games are tested at Ultra settings, so in a lot of cases *beyond* the 4060's sweet spot; in other words, settings that shouldn't be used on a 4060, or only with DLSS enabled. A worst-case scenario, you could say.

- despite running beyond its sweet spot, the card never had terrible fps (< 30 or even < 10 fps), which is usually a very obvious sign that the vram buffer is running short.

- the only "problem" the card had was that in some games at 1080p (and I'm only talking about 1080p for this card) it had less than 60 fps.

- the min fps scaled with the average fps it had in that game, so if the avg fps was already under 60 fps, obviously the min fps wouldn't be great either; that's not related to vram.

- also, there are games like Starfield that generally have min fps issues, visible in that review and not only with the RTX 4060. Also not related to vram.

- the card generally behaved as it should; it was *not* hampered by 8 GB of vram in any of the games, which proves that what I said all along is true.

- furthermore, 8 GB of vram has also proven mostly stable even at 1440p+, as vram requirements barely increase with resolution alone. A variety of parameters increase vram usage, for example world size, ray tracing, texture complexity and optimisation issues. A lot of games don't even have problems at 4K (!) with 8 GB of vram. That is the funny thing here.

The problems start when the parameters get more exotic, so to speak.

- so saying 8 GB of vram isn't enough today for 1080p or even 1440p is just wrong. Can it have select issues? Yes. It's not perfect; if you go beyond 1080p it will have more issues, but it will still mostly be fine. At 1080p, which this discussion was about, it's 99.9% fine, making this discussion unnecessary.

- as it's still easily enough for 1080p, it will also be enough for a new low-end card for the foreseeable future.
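A rough back-of-the-envelope sketch of the point above that resolution alone adds relatively little vram: the snippet computes the size of screen-sized render targets at a few resolutions. The bytes-per-pixel value and the number of targets are hypothetical assumptions for illustration, not figures from any specific game, engine or TPU's data. Real engines allocate more resolution-dependent buffers than this, so treat it as an illustration of the scaling, not a full vram budget.

```python
# Rough sketch: memory taken by screen-sized render targets at a given resolution.
# ASSUMPTION: bytes_per_pixel and the target count below are hypothetical
# example values, not measurements from any real game or engine.

MIB = 1024 ** 2


def render_targets_mib(width: int, height: int,
                       bytes_per_pixel: int = 4, targets: int = 1) -> float:
    """Size in MiB of `targets` buffers of width x height pixels."""
    return width * height * bytes_per_pixel * targets / MIB


VRAM_MIB = 8 * 1024  # an 8 GB card, treated as 8 GiB for simplicity

resolutions = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}
for name, (w, h) in resolutions.items():
    # Hypothetical setup: 6 render targets at 8 bytes per pixel
    used = render_targets_mib(w, h, bytes_per_pixel=8, targets=6)
    print(f"{name}: {used:.1f} MiB ({100 * used / VRAM_MIB:.1f}% of 8 GiB)")
```

Going from 1080p to 4K quadruples these buffers, but under these assumptions they still stay in the hundreds of MiB, which lines up with the claim that things like textures, world size and ray tracing weigh more on vram than resolution by itself.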

There are rumors that a number of games will push beyond the 4090's 24GB limit. If true, then 32GB will be relevant.

Haha, now let's stay in reality. What I can tell you is this: if you have a ton of vram, it can be used. It can be useful even in games that don't *need* it, simply because your PC never has to reload vram. It's basically a luxury: less stutter than cards with, for example, 16 GB. I experienced this when switching from my older GPU to the new one; Diablo 4 ran that much smoother, with basically no reloading anymore while zoning.

You're also completely wrong and think you're smarter than you actually are, but I digress. Not going to bother arguing with someone who quote-replies literally everyone.

The issue here is that you're comparing two different companies. AMD used to do an "I give you extra vram" thing for marketing against Nvidia. The fact is that, historically, Nvidia's upper-mid-range GTX 1070 and semi-high-end GTX 1080 used 8 GB back then. Whether AMD put a ton of vram on a mid-range card doesn't change that.

The same AMD chip originally launched with 4 GB, as everyone can see from the link you provided yourself. AMD also used marketing tactics like this on the R9 390X, so you can go even further back to GPUs that never needed 8 GB in their relevant lifetime. When the R9 390X "needed" 8 GB, it was already too slow to really utilise it, making 4 GB the sweet spot for that GPU, not 8 GB (averaged over its lifetime, of course).

And as was already said by multiple people, not just me, Nvidia's vram management is simply better than AMD's. This is also a historical fact, since it has been true for a very long time now, making an AMD vs Nvidia comparison kinda moot. AMD will historically always need a bit more vram to do the same things Nvidia does (without lag or stutter). As someone said, this is probably due to software optimisation.

You seem to lack footing in reality.