- Joined: Dec 26, 2006
- Messages: 4,164 (0.62/day)
- Location: Northern Ontario Canada

| Component | Specification |
|---|---|
| Processor | Ryzen 5700x |
| Motherboard | Gigabyte X570S Aero G R1.1 BiosF5g |
| Cooling | Noctua NH-C12P SE14 w/ NF-A15 HS-PWM Fan 1500rpm |
| Memory | Micron DDR4-3200 2x32GB D.S. D.R. (CT2K32G4DFD832A) |
| Video Card(s) | AMD RX 6800 - Asus Tuf |
| Storage | Kingston KC3000 1TB & 2TB & 4TB Corsair MP600 Pro LPX |
| Display(s) | LG 27UL550-W (27" 4k) |
| Case | Be Quiet Pure Base 600 (no window) |
| Audio Device(s) | Realtek ALC1220-VB |
| Power Supply | SuperFlower Leadex V Gold Pro 850W ATX Ver2.52 |
| Mouse | Mionix Naos Pro |
| Keyboard | Corsair Strafe with browns |
| Software | W10 22H2 Pro x64 |

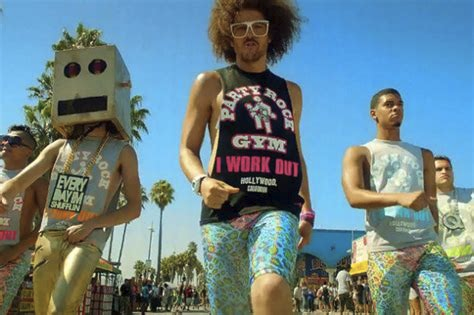

Nice-looking card.