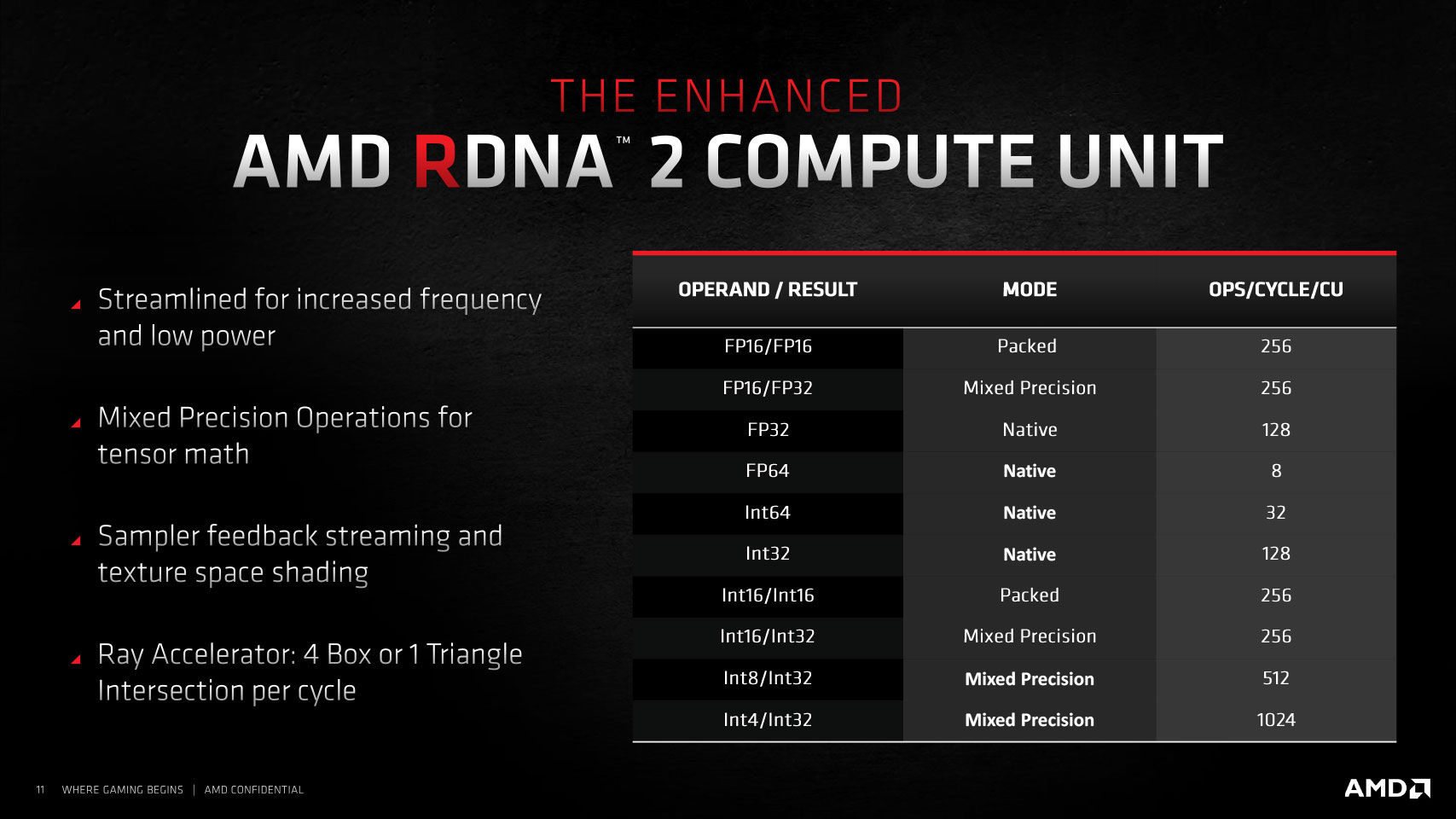

Actually, you don't. AMD in fact HAD to go for a feature like Infinity Cache. It was not an option; it was a requirement, because of their raytracing solution. If you look at the RDNA2 presentation slides, there is a clear line that says:

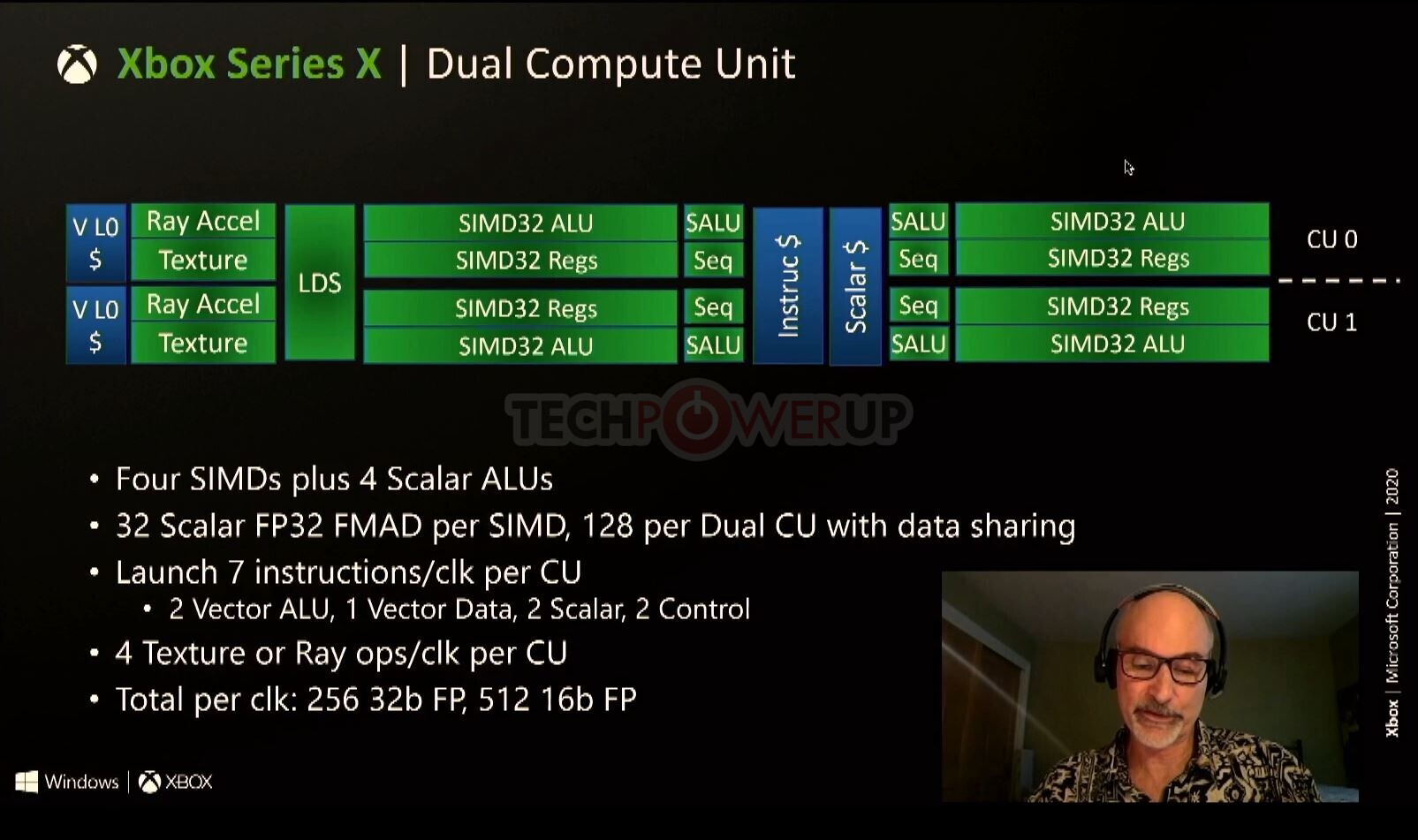

"4 texture OR ray ops/clock per CU"

Now what do you think that means? I'll enlighten you. Remember that there is such a thing as a bounding volume hierarchy (BVH). It is a big chunk of data, as it holds the bounding boxes for all objects in the scene to speed up ray intersection tests. Unfortunately for AMD, as you can see in the slide, their CUs cannot perform raytracing operations at the same time as texturing operations, unlike in Nvidia's design. Even worse, the BVH sits in the same memory (as AMD has repeatedly stated) and goes through the same caches as texture data. If AMD GPUs did not have Infinity Cache, they would be in huge trouble: their per-CU caches would keep thrashing, evicting BVH data to make room for texture data (and vice versa). And you can see Big Navi paying the price for that at 4K and in raytracing.
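The thrashing argument can be illustrated with a toy model. This is a minimal Python sketch, not AMD's actual cache hierarchy: it assumes a single fully associative LRU cache shared by texture and BVH data, and the `LRUCache`/`simulate` names, capacities and working-set sizes are all hypothetical. When the two working sets together exceed the cache and the GPU alternates between them, every line gets evicted before it is reused; a bigger cache (the role the post attributes to Infinity Cache) lets both fit.

```python
from collections import OrderedDict


class LRUCache:
    """Toy fully associative LRU cache that counts hits and misses (hypothetical model)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.lines = OrderedDict()  # address -> None, ordered least to most recently used
        self.hits = 0
        self.misses = 0

    def access(self, addr: int) -> None:
        if addr in self.lines:
            self.lines.move_to_end(addr)  # mark as most recently used
            self.hits += 1
        else:
            self.misses += 1
            self.lines[addr] = None
            if len(self.lines) > self.capacity:
                self.lines.popitem(last=False)  # evict the least recently used line

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def simulate(capacity: int, texture_lines: int, bvh_lines: int, passes: int) -> float:
    """Alternate between a texture working set and a BVH working set in one shared cache."""
    cache = LRUCache(capacity)
    texture = range(0, texture_lines)
    bvh = range(1_000_000, 1_000_000 + bvh_lines)  # disjoint made-up addresses for BVH nodes
    for _ in range(passes):
        for addr in texture:  # texturing work touches only texture lines
            cache.access(addr)
        for addr in bvh:  # ray traversal work touches only BVH lines
            cache.access(addr)
    return cache.hit_rate()
```

With these made-up sizes, `simulate(64, 48, 48, 10)` gets no hits at all, because the combined 96-line working set never fits in 64 lines, while `simulate(128, 48, 48, 10)` misses only on the first pass and reaches a 0.9 hit rate. Real GPU caches are set associative and not strictly LRU, so this only shows the qualitative effect.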

AMD will continue to show improved numbers versus Nvidia in future next-gen titles.