
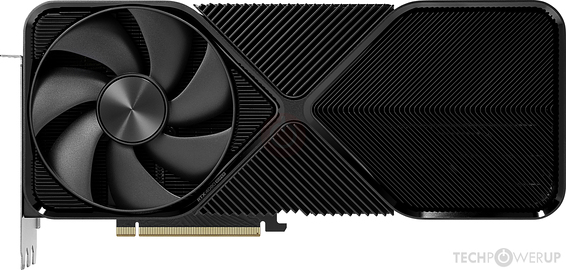

Just an example from Nvidia's Computex presentation regarding the B200.

Fair enough. Still wrong, imo, but as long as buyers are fine with it, who am I to argue.

Really? That's poor as well. I guess no one was really interested in that CPU. I don't even know which one you're talking about, it completely missed the spot with me (although I admit, I only looked for GPUs this time around).

The CPU in question was Strix Point (390Ai). But you know, it's AMD, so it's not trying to mislead us.