Plenty of Genshin Impact gamers with 4090s....

Do people really pay $2000 to play crappy 1080p upscaled games?


Welcome to TechPowerUp Forums, Guest! Please check out our forum guidelines for info related to our community.


NVIDIA GeForce RTX 5090 Founders Edition

- Thread starter W1zzard

- Start date

- Joined

- Feb 24, 2023

- Messages

- 3,950 (4.95/day)

- Location

- Russian Wild West

| System Name | D.L.S.S. (Die Lekker Spoed Situasie) |

|---|---|

| Processor | i5-12400F |

| Motherboard | Gigabyte B760M DS3H |

| Cooling | Laminar RM1 |

| Memory | 32 GB DDR4-3200 |

| Video Card(s) | RX 6700 XT (vandalised) |

| Storage | Yes. |

| Display(s) | MSi G2712 |

| Case | Matrexx 55 (slightly vandalised) |

| Audio Device(s) | Yes. |

| Power Supply | Thermaltake 1000 W |

| Mouse | Don't disturb, cheese eating in progress... |

| Keyboard | Makes some noise. Probably onto something. |

| VR HMD | I live in real reality and don't need a virtual one. |

| Software | Windows 11 / 10 / 8 |

| Benchmark Scores | My PC can run Crysis. Do I really need more than that? |

GTX 770 came one year after the 670 and offered a 13% edge. The 970 offered at least a 33% advantage.

GTX 770 refresh scenario.....

The 5070 came two years after the 4070 and is expected to offer 25% at the very most.
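Spreading each uplift over the time between launches makes the comparison a bit fairer. A minimal sketch, assuming the uplift compounds yearly and taking the 13% and 25% figures above at face value:

```python
def annualized_uplift(total_uplift: float, years: float) -> float:
    """Convert a total generational uplift (0.25 = +25%) into a compound yearly rate."""
    return (1.0 + total_uplift) ** (1.0 / years) - 1.0

# Figures as quoted in the post.
gtx770 = annualized_uplift(0.13, 1)   # GTX 670 -> GTX 770, one year apart
rtx5070 = annualized_uplift(0.25, 2)  # RTX 4070 -> RTX 5070, two years apart, best case

print(f"GTX 770: {gtx770:.1%} per year")
print(f"RTX 5070: {rtx5070:.1%} per year")
```

Even the best-case 25% works out to roughly 11.8% a year, slightly below the GTX 770's one-year refresh.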

Triple-A gaming in the 2020s is like waterboarding in the sense that it only sounds cool until you know what it actually is.

I prefer boogie boarding to water boarding

- Joined

- Dec 28, 2012

- Messages

- 4,378 (0.97/day)

| System Name | Skunkworks 3.0 |

|---|---|

| Processor | 5800x3d |

| Motherboard | x570 unify |

| Cooling | Noctua NH-U12A |

| Memory | 32GB 3600 mhz |

| Video Card(s) | asrock 6800xt challenger D |

| Storage | Sabrent Rocket 4.0 2TB, MX 500 2TB |

| Display(s) | Asus 1440p144 27" |

| Case | Old arse cooler master 932 |

| Power Supply | Corsair 1200w platinum |

| Mouse | *squeak* |

| Keyboard | Some old office thing |

| Software | Manjaro |

Still incorrect to label it as "inefficient". It's more efficient than the 4090 and blows every single AMD card out of the water.

RTX 4090 was not the most efficient card, based on W1zzard's reviews:

View attachment 381454

(TPU's RTX 4080 Super review here.)

Same here: the RTX 5090 is not more efficient than the previous most efficient card, the RTX 4080 (Super).

Still, remember, guys, those results are based on just one game, Cyberpunk 2077. Efficiency varies between games, just so you know.

Unfortunately, the GN video compares efficiency in only 3 games, which is still more than the single game tested on TPU:

It would be nice to have a bigger statistical sample: 10 games at least, with the same settings and the same rest of the hardware, RTX 4090 vs RTX 5090. The more games, the more accurate the results. German colleagues have already tested this: they limited the RTX 5090 to 450 W (the RTX 4090's level) and saw an 11-15% performance improvement. That means an RTX 5090 limited to 450 W is indeed more efficient than the RTX 4090. At the full 575 W TGP, I don't think so. I'd say they are pretty much on par, though the RTX 4090 might be very slightly more efficient. Of course, an undervolted RTX 5090 might be a totally different story, similar to the undervolted RTX 4090 story.
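The reasoning boils down to performance divided by power. A rough sketch, assuming each card actually draws its power limit (real in-game draw is often lower) and the 4090 runs at 450 W:

```python
def perf_per_watt_ratio(perf_ratio: float, power_new_w: float, power_old_w: float) -> float:
    """Efficiency of the new card relative to the old one (>1.0 means better perf/W).

    perf_ratio is new performance / old performance, e.g. 1.13 for +13%.
    """
    return perf_ratio / (power_new_w / power_old_w)


def break_even_uplift(power_new_w: float, power_old_w: float) -> float:
    """Uplift the new card needs just to match the old card's perf/W."""
    return power_new_w / power_old_w - 1


# 5090 capped to 450 W vs a 4090 at 450 W, using the 11-15% range quoted above.
for uplift in (0.11, 0.15):
    ratio = perf_per_watt_ratio(1 + uplift, 450, 450)
    print(f"+{uplift:.0%} at 450 W -> {ratio:.2f}x the 4090's perf/W")

# At 575 W, this is the uplift needed over a 450 W 4090 just to break even.
print(f"break-even uplift at 575 W: {break_even_uplift(575, 450):.1%}")
```

Under these assumptions, the 5090 at 575 W needs about 28% more performance than the 4090 just to tie on perf/W. That is why "pretty much on par" is a reasonable guess when the measured uplift sits around that level.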

- Joined

- May 29, 2017

- Messages

- 771 (0.27/day)

- Location

- Latvia

| Processor | AMD Ryzen™ 7 7700 |

|---|---|

| Motherboard | ASRock B650 PRO RS |

| Cooling | Thermalright Peerless Assassin 120 SE + Arctic P12 |

| Memory | XPG Lancer Blade 6000Mhz CL30 2x16GB |

| Video Card(s) | ASUS Prime Radeon™ RX 9070 XT OC Edition |

| Storage | Lexar NM790 2TB + Lexar NM790 2TB |

| Display(s) | HP X34 UltraWide IPS 165Hz |

| Case | Lian Li Lancool 207 + Arctic P12/14 |

| Audio Device(s) | Airpulse A100 |

| Power Supply | Sharkoon Rebel P20 750W |

| Mouse | Cooler Master MM730 |

| Keyboard | Krux Atax PRO Gateron Yellow |

| Software | Windows 10 Pro |

Those are some strong words when the new AMD cards haven't even been tested yet. IPC will be easier to test with the RTX 5080 vs RTX 4080, since both use the same 256-bit bus. Apples vs apples, not apples vs oranges!

It's more efficient than the 4090 and blows every single AMD card out of the water.

- Joined

- Oct 30, 2020

- Messages

- 468 (0.28/day)

- Location

- Toronto

| System Name | GraniteXT |

|---|---|

| Processor | Ryzen 9950X |

| Motherboard | ASRock B650M-HDV |

| Cooling | 2x360mm custom loop |

| Memory | 2x24GB Team Xtreem DDR5-8000 [M die] |

| Video Card(s) | RTX 3090 FE underwater |

| Storage | Intel P5800X 800GB + Samsung 980 Pro 2TB |

| Display(s) | MSI 342C 34" OLED |

| Case | O11D Evo RGB |

| Audio Device(s) | DCA Aeon 2 w/ SMSL M200/SP200 |

| Power Supply | Superflower Leadex VII XG 1300W |

| Mouse | Razer Basilisk V3 |

| Keyboard | Steelseries Apex Pro V2 TKL |

Still better than sharing flat-out incorrect info, I suppose. When Der8auer contacted Nvidia to ask why the hotspot temperature was removed, their reply was something along the lines of "oh, that sensor was bogus, but we added memory temperatures now!" We've had memory temperatures for ages.

The CUDA cores can now all execute either INT or FP; on Ada only half had that capability. When I asked NVIDIA for more details on the granularity of that switch, they acted dumb, gave me an answer to a completely different question, and said "that's all that we can share".

Also, the FE cooler seems pretty bad at cooling the memory, at 94-96 °C on test benches, just like the 3090 FE's memory temperatures. Once people put these in a case and they accumulate a bit of dust, memory throttling is a real possibility down the line, but with so much memory bandwidth I doubt it'll be much of an issue.

Now, with the hotspot temperature removed, how does one figure out whether their TIM/LM application is good or whether the block is slightly misaligned? Since the core temperature is an average of sorts, I feel removing the hotspot wasn't a great idea: we just lost another data point that was actually useful in these scenarios.
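One possible workaround, sketched under assumptions: compare how far the averaged core temperature rises above ambient per watt of board power, at the same steady load, before and after a remount. This won't pinpoint a misaligned corner the way a hotspot sensor could, and the function name and sample logs below are hypothetical:

```python
from statistics import mean

def degrees_per_watt(samples):
    """Average core-temperature rise over ambient per watt of board power.

    samples: list of (core_temp_c, ambient_c, board_power_w) tuples logged
    under the same steady load. Lower is better; a clear jump after a
    remount hints that the paste/LM application or block seating got worse.
    """
    return mean((core - ambient) / power for core, ambient, power in samples)

# Hypothetical logs, for illustration only.
before = [(62.0, 24.0, 450.0), (63.0, 24.0, 455.0), (62.5, 24.5, 452.0)]
after = [(70.0, 24.0, 450.0), (71.0, 24.5, 453.0), (70.5, 24.0, 451.0)]

delta = degrees_per_watt(after) - degrees_per_watt(before)
print(f"change after remount: {delta:+.4f} °C/W")
```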

- Joined

- Feb 1, 2019

- Messages

- 3,946 (1.73/day)

- Location

- UK, Midlands

| System Name | Main PC |

|---|---|

| Processor | 13700k |

| Motherboard | Asrock Z690 Steel Legend D4 - Bios 13.02 |

| Cooling | Noctua NH-D15S |

| Memory | 32 Gig 3200CL14 |

| Video Card(s) | 4080 RTX SUPER FE 16G |

| Storage | 1TB 980 PRO, 2TB SN850X, 2TB DC P4600, 1TB 860 EVO, 2x 3TB WD Red, 2x 4TB WD Red |

| Display(s) | LG 27GL850 |

| Case | Fractal Define R4 |

| Audio Device(s) | Soundblaster AE-9 |

| Power Supply | Antec HCG 750 Gold |

| Software | Windows 10 21H2 LTSC |

The 50

An issue has cropped up since the 4000-series launch. I noticed it when trying to make a voltage curve like the one my 3080 had: in 3D mode the cards can't go below about 0.910 V, which is pretty high, and the clocks have a high minimum speed as well. The downside is that a card with a high core count now has a higher power-draw floor.
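The core-count point can be illustrated with the textbook approximation for CMOS switching power, P ≈ C·V²·f, assuming the switched capacitance C scales with the number of cores. It's a simplification (no leakage, memory or board power), and the 1000 MHz floor clock and 0.80 V comparison point are hypothetical values, not measured ones:

```python
def relative_dynamic_power(cores: int, volts: float, clock_mhz: float,
                           ref_cores: int, ref_volts: float, ref_clock_mhz: float) -> float:
    """Rough dynamic-power ratio using P ~ cores * V^2 * f.

    Ignores leakage, memory and board power; only shows how the floor scales.
    """
    return (cores * volts**2 * clock_mhz) / (ref_cores * ref_volts**2 * ref_clock_mhz)

# Same 0.910 V floor and same (hypothetical) clock, RTX 5090 vs RTX 4090 CUDA core counts.
print(relative_dynamic_power(21760, 0.910, 1000, 16384, 0.910, 1000))  # ~1.33

# How much lower the floor could be if the curve were allowed down to a hypothetical 0.80 V.
print(relative_dynamic_power(21760, 0.80, 1000, 21760, 0.910, 1000))
```

With voltage and clock pinned at the floor, the only lever left in this model is core count, so the bigger chip idles higher in 3D mode.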

5090 is basically an "I give no ***** about being economical" card. Worse under load, massively worse at idle.

A bit more apples to apples:

4090 idle: 22W

5090 idle: 30W, +36%

4090 multi monitor: 27W

5090 multi monitor: 39W, +44%

4090 video playback: 26W

5090 video playback: 54W, +108%

It's quite horrible. AMD "We'll fix it in drivers (but doesn't)" horrible.
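The percentages check out. A quick sketch recomputing them from the wattages listed:

```python
# Low-load power figures as listed above, in watts: (RTX 4090, RTX 5090).
readings = {
    "idle": (22, 30),
    "multi monitor": (27, 39),
    "video playback": (26, 54),
}

def pct_increase(old: float, new: float) -> float:
    """Percentage increase from old to new."""
    return (new - old) / old * 100

for state, (rtx4090, rtx5090) in readings.items():
    print(f"{state}: {rtx4090} W -> {rtx5090} W ({pct_increase(rtx4090, rtx5090):+.0f}%)")
```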

But excusing Nvidia by saying this card isn't meant for gamers or home users is silly. Nvidia spent quite a big chunk of its RTX 5090 presentation on how good it is in gaming, since it's apparently the only card that will have any significant performance uplift over its Lovelace equivalent without using "frame quadrupling". Relegate this card to the "Quadro" lineup, or a "home and small business AI accelerator" lineup, and what are you left with? Cards within 10-15% of their predecessors? That's within overclocking margin, as measly as that is now.


That's scary. The UK regulated tariff (what we call the SVR), at current exchange rates, falls between the bottom two examples you listed.

Anyone that can afford a 5090 probably isn't overly concerned about the cost to run it for gaming.

If you game 4 hours a day, that's 28 hours a week.

If the GPU draws a continuous 600 W while gaming, you end up with 16.8 kWh a week.

If you pay $0.10 / kWh = $1.68 a week

If you pay $0.20 / kWh = $3.36 a week

If you pay $0.30 / kWh = $5.04 a week

If you pay $0.70 / kWh = $11.76 a week

Remember, this assumes the GPU draws a sustained, continuous 600 W for all 4 hours of gaming. It all depends on the game, resolution, settings and so on. Also remember that the V-Sync power chart shows the GPU pulling about 90 W. The above numbers are for top-end power draw scenarios.
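The math behind those numbers: energy (kWh) = power (kW) × hours, times the tariff. A minimal sketch that reproduces the list above and also prices the ~90 W V-Sync case, assuming constant draw for the whole session:

```python
def weekly_cost(power_w: float, hours_per_day: float, price_per_kwh: float) -> float:
    """Energy cost per week for a constant load."""
    kwh_per_week = power_w / 1000 * hours_per_day * 7
    return kwh_per_week * price_per_kwh

for price in (0.10, 0.20, 0.30, 0.70):
    print(f"${price:.2f}/kWh: ${weekly_cost(600, 4, price):.2f} a week")

# Same formula for the ~90 W V-Sync case, at $0.30/kWh.
print(f"V-Sync, $0.30/kWh: ${weekly_cost(90, 4, 0.30):.2f} a week")
```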

Personally I wouldn't want a GPU that can suck 600W for gaming. Not to mention the fact that this GPU is priced nearly 3x over what I'm comfortable in spending on a GPU, so I'm not the target for this product. If I had oodles of money and no brains, I'd get one, but I've got a meager amount of money and brains so I won't be getting one.

- Joined

- Dec 28, 2012

- Messages

- 4,378 (0.97/day)

| System Name | Skunkworks 3.0 |

|---|---|

| Processor | 5800x3d |

| Motherboard | x570 unify |

| Cooling | Noctua NH-U12A |

| Memory | 32GB 3600 mhz |

| Video Card(s) | asrock 6800xt challenger D |

| Storage | Sabrent Rocket 4.0 2TB, MX 500 2TB |

| Display(s) | Asus 1440p144 27" |

| Case | Old arse cooler master 932 |

| Power Supply | Corsair 1200w platinum |

| Mouse | *squeak* |

| Keyboard | Some old office thing |

| Software | Manjaro |

Well, given the last 3 generations of AMD struggled in the efficiency game and the actual architectural improvements have been near nonexistent, I'mma make an educated guess and say RDNA 4 isn't going to set that efficiency graph on fire.

Those are some strong words when new AMD cards are not even tested yet.

It's also a correct statement. Every AMD card on that list is below the 5090 in efficiency. You don't need to be bold to state a fact. There are future Nvidia cards too; you'll always have to factor that in, unless it's announced that no new GPUs will ever be made.

No idea why IPC was brought up. Apples vs oranges indeed.

IPC will be easier to test on RTX 5080 vs RTX 4080 due to the same 256-bit bus. Apples vs apples, not apples vs oranges!

Yeah, but those costs assume 600 W continuously, 4 hours a day, every day.

That's scary. UK regulated tariff, what we call SVR, at current exchange rates is in between those bottom 2 examples you listed.

Who does that? If you're a gaming enthusiast, you won't see those numbers sustained for very long. If you're running AI workloads, you're either an enthusiast, in which case the power use is a non-issue, or you're making money on it (see above).

The power use argument has just never made any sense.

- Joined

- May 29, 2017

- Messages

- 771 (0.27/day)

- Location

- Latvia

| Processor | AMD Ryzen™ 7 7700 |

|---|---|

| Motherboard | ASRock B650 PRO RS |

| Cooling | Thermalright Peerless Assassin 120 SE + Arctic P12 |

| Memory | XPG Lancer Blade 6000Mhz CL30 2x16GB |

| Video Card(s) | ASUS Prime Radeon™ RX 9070 XT OC Edition |

| Storage | Lexar NM790 2TB + Lexar NM790 2TB |

| Display(s) | HP X34 UltraWide IPS 165Hz |

| Case | Lian Li Lancool 207 + Arctic P12/14 |

| Audio Device(s) | Airpulse A100 |

| Power Supply | Sharkoon Rebel P20 750W |

| Mouse | Cooler Master MM730 |

| Keyboard | Krux Atax PRO Gateron Yellow |

| Software | Windows 10 Pro |

384-bit (4090) vs 512-bit (5090) is technically not the same. For power and IPC testing, 256-bit vs 256-bit will be more accurate.

No idea why IPC was brought up. Apples vs oranges indeed.
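Worth keeping in mind that bus width is only half of memory bandwidth; the per-pin data rate is the other half. A sketch using the commonly listed reference memory speeds for each card (double-check against the official spec sheets):

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps

# Bus widths from the thread; data rates are the commonly listed launch specs.
cards = {
    "RTX 4080 (256-bit, 22.4 Gbps GDDR6X)": (256, 22.4),
    "RTX 5080 (256-bit, 30 Gbps GDDR7)": (256, 30.0),
    "RTX 4090 (384-bit, 21 Gbps GDDR6X)": (384, 21.0),
    "RTX 5090 (512-bit, 28 Gbps GDDR7)": (512, 28.0),
}
for name, (bus, rate) in cards.items():
    print(f"{name}: {bandwidth_gb_s(bus, rate):.0f} GB/s")
```

So even on the same 256-bit bus, the 5080 gets roughly a third more bandwidth than the 4080 from faster GDDR7, which is worth factoring in when reading an IPC comparison.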

- Joined

- Sep 19, 2015

- Messages

- 47 (0.01/day)

| Processor | AMD Ryzen 9 7900 |

|---|---|

| Motherboard | Gigabyte B650M AORUS Elite AX |

| Video Card(s) | AMD Radeon RX 6700 XT |

Recall that for us European consumers, once VAT is included, the card costs approximately €2,300-2,400.

If you pay $0.10 / kWh = $1.68 a week

If you pay $0.20 / kWh = $3.36 a week

If you pay $0.30 / kWh = $5.04 a week

If you pay $0.70 / kWh = $11.76 a week

- Joined

- Oct 19, 2022

- Messages

- 458 (0.49/day)

- Location

- Los Angeles, CA

| Processor | AMD Ryzen 7 9800X3D (+PBO 5.4GHz) |

|---|---|

| Motherboard | MSI MPG X870E Carbon Wifi |

| Cooling | ARCTIC Liquid Freezer II 280 A-RGB |

| Memory | 2x32GB (64GB) G.Skill Trident Z Royal @ 6200MHz 1:1 (30-38-38-30) |

| Video Card(s) | MSI GeForce RTX 4090 SUPRIM Liquid X |

| Storage | Crucial T705 4TB (PCIe 5.0) w/ Heatsink + Samsung 990 PRO 2TB (PCIe 4.0) w/ Heatsink |

| Display(s) | AORUS FO32U2P 4K QD-OLED 240Hz (DP 2.1 UHBR20 80Gbps) |

| Case | CoolerMaster H500M (Mesh) |

| Audio Device(s) | AKG N90Q w/ AudioQuest DragonFly Red (USB DAC) |

| Power Supply | Seasonic Prime TX-1600 Noctua Edition (1600W 80Plus Titanium) ATX 3.1 & PCIe 5.1 |

| Mouse | Logitech G PRO X SUPERLIGHT |

| Keyboard | Razer BlackWidow V3 Pro |

| Software | Windows 10 64-bit |

On Blackwell all the CUDA cores can do FP32 or INT32, but games use about 35% of the cores for INT32 (some Nvidia employees confirmed it), so the 5090 is only a ~110 FP32 gaming GPU! That's a lot of FP32 performance left on the table... I wonder if they could give each core a "dual instruction mode", i.e. doing FP32 + INT32 at the same time, in next-gen architectures. That could give them a huge boost just by changing the way the architecture works.

The CUDA cores can now all execute either INT or FP, on Ada only half had that capability. When I asked NVIDIA for more details on the granularity of that switch they acted dumb and gave me an answer to a completely different question and mentioned "that's all that we can share"
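One way to read the ~110 figure is as SM-equivalents. A sketch assuming 128 CUDA cores per SM, a ~2.41 GHz reference boost clock, one FMA (2 FLOPs) per core per clock, and the poster's ~35% INT32 share applied uniformly (real shaders vary a lot):

```python
CORES_PER_SM = 128  # CUDA cores per SM on Ada and Blackwell consumer GPUs

def fp32_share(total: float, int_share: float) -> float:
    """Portion of a resource left for FP32 if int_share of it goes to INT32 work."""
    return total * (1 - int_share)

cuda_cores = 21760                            # RTX 5090
sms = cuda_cores // CORES_PER_SM              # 170 SMs
peak_tflops = cuda_cores * 2 * 2.41 / 1000    # FMA = 2 FLOPs, ~2.41 GHz boost

int_share = 0.35  # the poster's estimate, not a measured figure
print(f"SM-equivalents left for FP32: {fp32_share(sms, int_share):.1f} of {sms}")
print(f"FP32 TFLOPS left: {fp32_share(peak_tflops, int_share):.1f} of {peak_tflops:.1f}")
```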

The 5090 has a 512-bit bus and 33% more CUDA cores... and they had to lower core clocks compared to the 4090 to avoid using "too much" power... Also, they're on a TSMC 4nm node, so they can't get much more out of it than that.

A bit more apples to apples:

4090 idle: 22W

5090 idle: 30W, +36%

4090 multi monitor: 27W

5090 multi monitor: 39W, +44%

4090 video playback: 26W

5090 video playback: 54W, +108%

It's quite horrible. AMD "We'll fix it in drivers (but doesn't)" horrible.

But excusing Nvidia by saying this card isn't meant for gamers or home users is silly. Nvidia spent quite a big chunk of its RTX 5090 presentation on how good it is in gaming, since it's apparently the only card that will have any significant performance uplift over its Lovelace equivalent without using "frame quadrupling". Relegate this card to the "Quadro" lineup, or a "home and small business AI accelerator" lineup, and what are you left with? Cards within 10-15% of their predecessors? That's within overclocking margin, as measly as that is now.

Blackwell was supposed to be on a TSMC 3nm node, but Nvidia decided to cheap out by relying on AI to do the heavy lifting.

If the RTX 6090 releases in Q1 or Q2 2027, they could definitely go for a TSMC 2nm node and get much better efficiency right away. But they might need to work on their architecture's efficiency too.

I feel like Ampere, Lovelace and Blackwell all have similar IPC. Lovelace was just on a much better node than Ampere, but core for core we didn't see much improvement, and the same goes for Blackwell (at least as of now).


- Joined

- Feb 1, 2019

- Messages

- 3,946 (1.73/day)

- Location

- UK, Midlands

| System Name | Main PC |

|---|---|

| Processor | 13700k |

| Motherboard | Asrock Z690 Steel Legend D4 - Bios 13.02 |

| Cooling | Noctua NH-D15S |

| Memory | 32 Gig 3200CL14 |

| Video Card(s) | 4080 RTX SUPER FE 16G |

| Storage | 1TB 980 PRO, 2TB SN850X, 2TB DC P4600, 1TB 860 EVO, 2x 3TB WD Red, 2x 4TB WD Red |

| Display(s) | LG 27GL850 |

| Case | Fractal Define R4 |

| Audio Device(s) | Soundblaster AE-9 |

| Power Supply | Antec HCG 750 Gold |

| Software | Windows 10 21H2 LTSC |

So the DLSS 4 drivers (570.xx) aren't out yet? Are they reviewer-only?

- Joined

- Jun 2, 2017

- Messages

- 9,828 (3.40/day)

| System Name | Best AMD Computer |

|---|---|

| Processor | AMD 7900X3D |

| Motherboard | Asus X670E E Strix |

| Cooling | In Win SR36 |

| Memory | GSKILL DDR5 32GB 5200 30 |

| Video Card(s) | Sapphire Pulse 7900XT (Watercooled) |

| Storage | Corsair MP 700, Seagate 530 2Tb, Adata SX8200 2TBx2, Kingston 2 TBx2, Micron 8 TB, WD AN 1500 |

| Display(s) | GIGABYTE FV43U |

| Case | Corsair 7000D Airflow |

| Audio Device(s) | Corsair Void Pro, Logitech Z523 5.1 |

| Power Supply | Deepcool 1000M |

| Mouse | Logitech g7 gaming mouse |

| Keyboard | Logitech G510 |

| Software | Windows 11 Pro 64 Steam. GOG, Uplay, Origin |

| Benchmark Scores | Firestrike: 46183 Time Spy: 25121 |

Well, I watched the OC3D review of MSI's 5090 Suprim. When I saw the power draw was 836 W, I was blown away. Just about all reviewers are focusing on price, but no matter how you spin it, 836 W from one component in a PC is insane.

Rich boys' video card.

Well, I watched the OC3D review of MSI's 5090 Suprim. When I saw the power draw was 836 W, I was blown away. Just about all reviewers are focusing on price, but no matter how you spin it, 836 W from one component in a PC is insane.

- Joined

- May 20, 2011

- Messages

- 209 (0.04/day)

- Location

- Columbus, OH

Well, I watched the OC3D review of MSI's 5090 Suprim. When I saw the power draw was 836 W, I was blown away. Just about all reviewers are focusing on price, but no matter how you spin it, 836 W from one component in a PC is insane.

Are you sure that isn't total system draw? I haven't seen a review go that high yet, unless they were showing total system power instead of just the GPU.

- Joined

- Jun 2, 2017

- Messages

- 9,828 (3.40/day)

| System Name | Best AMD Computer |

|---|---|

| Processor | AMD 7900X3D |

| Motherboard | Asus X670E E Strix |

| Cooling | In Win SR36 |

| Memory | GSKILL DDR5 32GB 5200 30 |

| Video Card(s) | Sapphire Pulse 7900XT (Watercooled) |

| Storage | Corsair MP 700, Seagate 530 2Tb, Adata SX8200 2TBx2, Kingston 2 TBx2, Micron 8 TB, WD AN 1500 |

| Display(s) | GIGABYTE FV43U |

| Case | Corsair 7000D Airflow |

| Audio Device(s) | Corsair Void Pro, Logitech Z523 5.1 |

| Power Supply | Deepcool 1000M |

| Mouse | Logitech g7 gaming mouse |

| Keyboard | Logitech G510 |

| Software | Windows 11 Pro 64 Steam. GOG, Uplay, Origin |

| Benchmark Scores | Firestrike: 46183 Time Spy: 25121 |

I am pretty sure it was FurMark.

Are you sure that isn't total system draw? I haven't seen a review go that high yet, unless they were showing total system power instead of just the GPU.

- Joined

- Sep 26, 2022

- Messages

- 2,539 (2.68/day)

- Location

- Braziguay

| System Name | G-Station 2.0 "YGUAZU" |

|---|---|

| Processor | AMD Ryzen 7 5700X3D |

| Motherboard | Gigabyte X470 Aorus Gaming 7 WiFi |

| Cooling | Freezemod: Pump, Reservoir, 360mm Radiator, Fittings / Bykski: Blocks / Barrow: Meters |

| Memory | Asgard Bragi DDR4-3600CL14 2x16GB |

| Video Card(s) | Sapphire PULSE RX 7900 XTX |

| Storage | 240GB Samsung 840 Evo, 1TB Asgard AN2, 2TB Hiksemi FUTURE-LITE, 320GB+1TB 7200RPM HDD |

| Display(s) | Samsung 34" Odyssey OLED G8 |

| Case | Lian Li Lancool 216 |

| Audio Device(s) | Astro A40 TR + MixAmp |

| Power Supply | Cougar GEX X2 1000W |

| Mouse | Razer Viper Ultimate |

| Keyboard | Razer Huntsman Elite (Red) |

| Software | Windows 11 Pro, Garuda Linux |

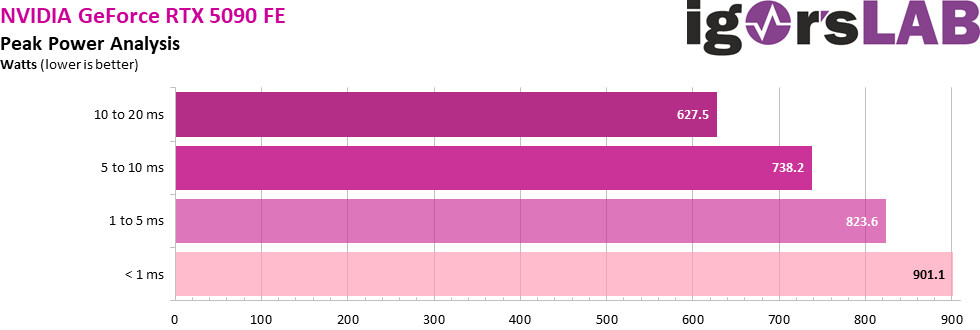

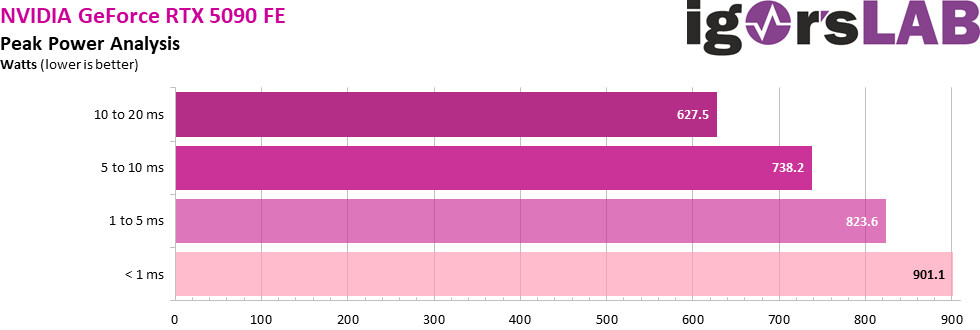

I mean, there's an article here on TPU about Igor's Lab measuring 901 W spikes.

Are you sure that isn't total system draw? I haven't seen a review go that high yet, unless they were showing total system power instead of just the GPU.

GeForce RTX 5090 Power Excursions Tested: Can Spike to 901W Under 1ms

Igor's Lab conducted an in-depth analysis of the power management system of the new NVIDIA GeForce RTX 5090 graphics card, including the way the card draws peak power within the tolerances of the ATX 3.1 specification. This analysis should prove particularly useful for those still on older ATX...

But that's what it is: spikes. And still covered by ATX 3.1 specs.

- Joined

- May 20, 2011

- Messages

- 209 (0.04/day)

- Location

- Columbus, OH

I mean, there's an article here on TPU about Igor's Lab measuring 901 W spikes.

GeForce RTX 5090 Power Excursions Tested: Can Spike to 901W Under 1ms

Igor's Lab conducted an in-depth analysis of the power management system of the new NVIDIA GeForce RTX 5090 graphics card, including the way the card draws peak power within the tolerances of the ATX 3.1 specification. This analysis should prove particularly useful for those still on older ATX...

www.techpowerup.com

But that's what it is: spikes. And still covered by ATX 3.1 specs.

Thanks, I had missed that article. Seems like a nothingburger if it's a 1 ms spike that the spec already accounts for, rather than the card pulling almost 850 W continuously.
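For energy use, a 1 ms excursion really is negligible. A sketch assuming a hypothetical 575 W sustained draw with one 901 W, 1 ms spike per second (the PSU still has to ride through the spike itself, which is what the ATX 3.1 tolerances are about):

```python
def average_power(baseline_w: float, spike_w: float, spike_s: float, window_s: float) -> float:
    """Average power over a window containing one rectangular spike."""
    return (baseline_w * (window_s - spike_s) + spike_w * spike_s) / window_s

# Hypothetical: 575 W sustained, one 901 W spike lasting 1 ms in every 1 s window.
print(f"{average_power(575, 901, 0.001, 1.0):.3f} W")
```

The spike adds about a third of a watt to the average in this scenario.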

- Joined

- Jun 2, 2017

- Messages

- 9,828 (3.40/day)

| System Name | Best AMD Computer |

|---|---|

| Processor | AMD 7900X3D |

| Motherboard | Asus X670E E Strix |

| Cooling | In Win SR36 |

| Memory | GSKILL DDR5 32GB 5200 30 |

| Video Card(s) | Sapphire Pulse 7900XT (Watercooled) |

| Storage | Corsair MP 700, Seagate 530 2Tb, Adata SX8200 2TBx2, Kingston 2 TBx2, Micron 8 TB, WD AN 1500 |

| Display(s) | GIGABYTE FV43U |

| Case | Corsair 7000D Airflow |

| Audio Device(s) | Corsair Void Pro, Logitech Z523 5.1 |

| Power Supply | Deepcool 1000M |

| Mouse | Logitech g7 gaming mouse |

| Keyboard | Logitech G510 |

| Software | Windows 11 Pro 64 Steam. GOG, Uplay, Origin |

| Benchmark Scores | Firestrike: 46183 Time Spy: 25121 |

Well, the Asus card here draws over 600 W. I am not trying to bash the card, but the power draw is insane, no matter how good the cooling solution is. 1200 W PSUs are not cheap.

Thanks, I had missed that article. Seems like a nothingburger if it's a 1 ms spike that the spec already accounts for, rather than the card pulling almost 850 W continuously.

- Joined

- Feb 24, 2015

- Messages

- 34 (0.01/day)

- Location

- Romania

| System Name | PC / NB |

|---|---|

| Processor | i7-13700k / Ultra 9-185H |

| Motherboard | Z790 Aorus Elite AX / Yoga Pro 7 14IMH9 |

| Cooling | NH-D15 / inside |

| Memory | Corsair 2x32GB 6000 MT/s CL30 / 32GB LPDDR5x |

| Video Card(s) | RTX4070 Gaming OC / Intel ARC |

| Storage | 2TB 990 pro, 2TB XPG SX8200 Pro, 2TB 870 EVO, 2TB 870 QVO, 3x8TB Exos 7E10, 6TB N300 / 1TB+2TB |

| Display(s) | Dell S2522HG / 14.5" 3K (3072x1920) Multi-touch IPS |

| Case | Fractal Define R6 TG / Thunderbolt 4 dock |

| Audio Device(s) | Creative AE-5 plus + 2 x Presonus Eris E8 XT / Creative G3 + 2 x Presonus Eris E5 |

| Power Supply | Corsair RM750i / 100W charger |

| Mouse | Logitech G403 / Logitech M650 L BT |

| Keyboard | Corsair K70 TKL MX Speed / Fnatic MiniStreak TKL Speed |

| Software | win10 pro + win11pro + fedora / win11pro |

| Benchmark Scores | https://www.3dmark.com/spy/44211099 https://www.3dmark.com/spy/52803246 |

... and the electricity bill won't be cheap: 800 W (entire PC while gaming) for 4 hours a day… I'll let you do the math.
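Doing the math, as a sketch: this assumes 30 days a month and a constant 800 W for the whole session, and the two tariffs are placeholders to swap for your own:

```python
def monthly_kwh(system_w: float, hours_per_day: float, days: int = 30) -> float:
    """Energy used per month by a constant load."""
    return system_w / 1000 * hours_per_day * days

energy = monthly_kwh(800, 4)  # whole PC while gaming, as in the post
for price in (0.15, 0.30):    # hypothetical tariffs per kWh
    print(f"{energy:.0f} kWh/month at {price:.2f}/kWh -> {energy * price:.2f} per month")
```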

- Joined

- May 29, 2017

- Messages

- 771 (0.27/day)

- Location

- Latvia

| Processor | AMD Ryzen™ 7 7700 |

|---|---|

| Motherboard | ASRock B650 PRO RS |

| Cooling | Thermalright Peerless Assassin 120 SE + Arctic P12 |

| Memory | XPG Lancer Blade 6000Mhz CL30 2x16GB |

| Video Card(s) | ASUS Prime Radeon™ RX 9070 XT OC Edition |

| Storage | Lexar NM790 2TB + Lexar NM790 2TB |

| Display(s) | HP X34 UltraWide IPS 165Hz |

| Case | Lian Li Lancool 207 + Arctic P12/14 |

| Audio Device(s) | Airpulse A100 |

| Power Supply | Sharkoon Rebel P20 750W |

| Mouse | Cooler Master MM730 |

| Keyboard | Krux Atax PRO Gateron Yellow |

| Software | Windows 10 Pro |

A good PC case is also needed to cool down that oven. The original cooler is bad: ~40 dBA is not acceptable at that price.....

No matter how good the cooling solution is. 1200 Watt PSUs are not cheap.

Thunderslat

New Member

- Joined

- Jan 24, 2025

- Messages

- 1 (0.01/day)

Anyone that can afford a 5090 probably isn't overly concerned about the cost to run it for gaming.

If you game 4 hours a day, that's 28 hours a week.

If the GPU draws a continuous 600 W while gaming, you end up with 16.8 kWh a week.

If you pay $0.10 / kWh = $1.68 a week

If you pay $0.20 / kWh = $3.36 a week

If you pay $0.30 / kWh = $5.04 a week

If you pay $0.70 / kWh = $11.76 a week

Remember, this assumes the GPU draws a sustained, continuous 600 W for all 4 hours of gaming. It all depends on the game, resolution, settings and so on. Also remember that the V-Sync power chart shows the GPU pulling about 90 W. The above numbers are for top-end power draw scenarios.

Personally I wouldn't want a GPU that can suck 600W for gaming. Not to mention the fact that this GPU is priced nearly 3x over what I'm comfortable in spending on a GPU, so I'm not the target for this product. If I had oodles of money and no brains, I'd get one, but I've got a meager amount of money and brains so I won't be getting one.

It isn't really the energy cost. It's the amount of heat it puts into your room, making it less comfortable, and then the cost of cooling the room.
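Putting rough numbers on that: essentially all the power a PC draws ends up as heat in the room, and an air conditioner then spends extra electricity to pump it out. A sketch assuming a 600 W load and an air conditioner with a coefficient of performance (COP) of 3, which is a placeholder since real units vary:

```python
WATT_TO_BTU_PER_HOUR = 3.412  # 1 W of continuous heat is about 3.412 BTU/h

def heat_btu_per_hour(power_w: float) -> float:
    """Heat dumped into the room by a load of power_w watts."""
    return power_w * WATT_TO_BTU_PER_HOUR

def ac_power_w(heat_w: float, cop: float) -> float:
    """Extra electrical power an air conditioner with the given COP needs to remove that heat."""
    return heat_w / cop

gpu_w = 600
print(f"{gpu_w} W -> {heat_btu_per_hour(gpu_w):.0f} BTU/h")
print(f"extra AC draw at an assumed COP of 3: {ac_power_w(gpu_w, 3.0):.0f} W")
```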

- Joined

- Feb 24, 2015

- Messages

- 34 (0.01/day)

- Location

- Romania

| System Name | PC / NB |

|---|---|

| Processor | i7-13700k / Ultra 9-185H |

| Motherboard | Z790 Aorus Elite AX / Yoga Pro 7 14IMH9 |

| Cooling | NH-D15 / inside |

| Memory | Corsair 2x32GB 6000 MT/s CL30 / 32GB LPDDR5x |

| Video Card(s) | RTX4070 Gaming OC / Intel ARC |

| Storage | 2TB 990 pro, 2TB XPG SX8200 Pro, 2TB 870 EVO, 2TB 870 QVO, 3x8TB Exos 7E10, 6TB N300 / 1TB+2TB |

| Display(s) | Dell S2522HG / 14.5" 3K (3072x1920) Multi-touch IPS |

| Case | Fractal Define R6 TG / Thunderbolt 4 dock |

| Audio Device(s) | Creative AE-5 plus + 2 x Presonus Eris E8 XT / Creative G3 + 2 x Presonus Eris E5 |

| Power Supply | Corsair RM750i / 100W charger |

| Mouse | Logitech G403 / Logitech M650 L BT |

| Keyboard | Corsair K70 TKL MX Speed / Fnatic MiniStreak TKL Speed |

| Software | win10 pro + win11pro + fedora / win11pro |

| Benchmark Scores | https://www.3dmark.com/spy/44211099 https://www.3dmark.com/spy/52803246 |

The 5090 FE's memory runs too hot, at 94-95 °C, which is risky, plus there's the noise...

- Joined

- May 20, 2011

- Messages

- 209 (0.04/day)

- Location

- Columbus, OH

Well the Asus card here draws over 600 Watts. I am not trying to bash the card but the power draw is insane. No matter how good the cooling solution is. 1200 Watt PSUs are not cheap.

Oh, no disagreement there at all; the power draw is insane. The only thing that gave me pause in my original comment was the mention of 850 W for a single component, which I haven't seen as a continuous draw in the reviews I've seen or read (as opposed to total system draw). 1200 W PSUs are not cheap (I have a 1000 W EVGA power supply that I feel confident in), but nonetheless it's an insane amount of power for a single card to draw, especially considering the performance increase is pretty linear between generations.