I ran into something relevant to this when deep-diving monitor-related tech in another thread.

This all boils down to Vsync and how various games, engines and displays all handle it differently.

None of this will help you if your stutter is caused by hardware thermal/power throttling or by software (crap like Wallpaper Engine).

If you run Vsync without VRR (be it adaptive sync, freesync or gsync) or go outside of your VRR range, this can occur

The only true fix is a situation where neither CPU nor GPU end is the bottleneck, usually by an FPS cap - but even that can go wrong, if your display can't handle the capped frame rate you're feeding it or drops below that limit regularly.

If your FPS doesn't divide neatly into your refresh rate, situations can occur where every single frame has a different latency to the one before it - or the odd ones can be spread out (every 3rd, 5th, 10th frame etc).

Some techniques, like a larger render-ahead queue, alleviate this by keeping the latency constantly high instead of letting it vary.

If you settle for 2 frames of render-ahead, every user gets double the latency they should, but hey - it let us spend less time optimising the game!

Nvidia's low latency mode lowers the render-ahead queue to 1 or '0', which can trade the higher latency for stuttering - those modes only help if you can always sustain the FPS you're using.

Using 100Hz for easy math here: the display can show an image every 10ms.

At 90FPS the frames are ready every 11.1ms, so what's going to happen is simple: the frames don't fit. They have to be delayed by one refresh, so each frame is 21.1ms old when it's sent out.

Vsync off would get you 11.1ms, with tearing. You can see why Vsync off is popular with the crowd who can't reach their refresh rate, or can't sustain it.

The thing is a 50FPS cap here would be 20ms and fit perfectly - so you'd get a flat 20ms every time (displaying the same frame twice)

(Adjusting for the render ahead queue, 48 or 49fps)
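The arithmetic above can be sketched in a few lines of Python. This is just a rough helper for checking whether a frame rate lines up with a refresh rate; the function names are made up for this post and it ignores the render-ahead adjustment mentioned above.

```python
from fractions import Fraction

def frame_interval_ms(rate):
    """Milliseconds between frames (or refreshes) at the given rate."""
    return Fraction(1000, rate)

def refreshes_per_frame(fps, refresh_hz):
    """How many refresh intervals one rendered frame spans, as an exact fraction."""
    return Fraction(refresh_hz, fps)

def divides_evenly(fps, refresh_hz):
    """True when every frame covers a whole number of refreshes."""
    return refresh_hz % fps == 0

# Compare a few frame rates against the 100Hz example display.
for fps in (100, 90, 66, 50):
    print(f"{fps}FPS: {float(frame_interval_ms(fps)):.2f}ms per frame, "
          f"fits 100Hz evenly: {divides_evenly(fps, 100)}")
```

Only the rates where `divides_evenly` is true (100 and 50 here) can give a flat, repeating frame-to-refresh pattern with Vsync on.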

If you had 66FPS (15.15ms), things would get weird - and stuttery.

The display can only push out an image every 10ms, so that first image ends up being rendered by the CPU (let's say at 0ms)

Trying to explain this is difficult, so I'll stick to some numbers and show how they need to line up.

Excusing that it's not to scale, you'll see that some frames have a double gap - they've got twice the display latency of the others. That's your stutter.

Each frame displayed has varying input latency, which also adds to the 'feel' of the stutter.

Some are 0.9ms delayed while some are 9.7ms delayed
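To put numbers on that, here's a rough simulation of the 66FPS on 100Hz case. It assumes a very simplified model that is my own, not how any particular driver works: frame k is ready at exactly k × 15.15ms, and it gets shown at the next 10ms refresh tick. No render-ahead queue, no frame time variance.

```python
from fractions import Fraction
from math import ceil

def vsync_schedule(fps, refresh_hz, frames):
    """Return (ready_ms, shown_ms, wait_ms) per frame under a simplified model:
    frame k is ready at k * (1000 / fps) and is shown at the next refresh tick."""
    frame_ms = Fraction(1000, fps)
    tick_ms = Fraction(1000, refresh_hz)
    rows = []
    for k in range(frames):
        ready = k * frame_ms
        # Round up to the next refresh tick (a frame ready exactly on a tick is shown on it).
        shown = ceil(ready / tick_ms) * tick_ms
        rows.append((ready, shown, shown - ready))
    return rows

rows = vsync_schedule(66, 100, 34)
for ready, shown, wait in rows[:8]:
    print(f"ready {float(ready):6.2f}ms  shown {float(shown):6.2f}ms  "
          f"waited {float(wait):5.2f}ms")

# Time between consecutive frames actually appearing on screen.
gaps = [later[1] - earlier[1] for earlier, later in zip(rows, rows[1:])]
print("display gaps (ms):", sorted({float(g) for g in gaps}))
```

In this model the on-screen gaps flip between 10ms and 20ms while the wait before display wanders anywhere from 0 up to roughly 9.7ms. Run it with 50FPS instead and every gap comes out at a flat 20ms.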

The problem is that people diagnosing this will look at render latency (purely GPU) or frametimes from Afterburner (which are literally 1000ms divided by framerate), and neither shows when a frame actually reaches the display.

Game devs try to fix this by using render-ahead from the CPU, but that only works if your CPU is much faster than your GPU - and you're willing to tolerate the extra latency in EVERY frame, such as 33ms for every single frame vs varying between 15 and 30.

This is where I think people are going wrong by copying guides from people giving an incomplete picture or "this worked for me" answers, because the answer varies depending on the monitor and setup used in so many ways - Vsync, Gsync, VRR ranges, refresh rates, frame rates and even the render queue in the game or forced in the driver.

You could have stutter caused by an external source, software or hardware - or just by running a refresh rate with a framerate that doesn't match up well.

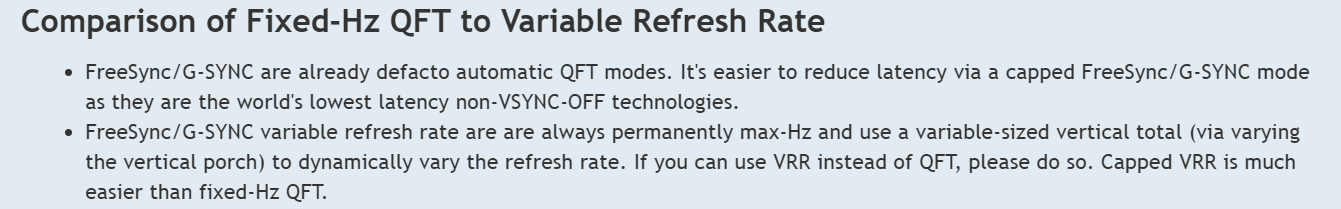

If you can't use VRR or your frame rates go outside your VRR range, run your FPS cap at a binary division of your refresh rate (1/2, 1/4 and so on), or lower the refresh rate.
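If you want to see what those caps are for your own display, a tiny sketch like this lists them. The function name and the 30FPS floor are arbitrary choices of mine for the example, not anything from a driver or tool.

```python
def binary_division_caps(refresh_hz, min_fps=30):
    """List refresh_hz, refresh_hz/2, refresh_hz/4, ... stopping before min_fps.
    min_fps is an arbitrary cutoff chosen for this example."""
    caps = []
    cap = float(refresh_hz)
    while cap >= min_fps:
        caps.append(cap)
        cap /= 2
    return caps

for hz in (60, 100, 144, 240):
    print(f"{hz}Hz: {binary_division_caps(hz)}")
```

Pick the highest value in the list that your hardware can hold without dipping.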

(Bonus points: if it's within your VRR range, running 1/2 or 1/4 FPS gives you the same latency as the full FPS - so 120FPS on a 240Hz monitor will actually give you better input latency than 121FPS, as the signal can be sent in the latter half of the Vsync interval instead of waiting for the start of the next one.)

LFC is also helpful here when you go below your VRR range, since it treats the duplicated frame as two separate frames - this means if the PC sends a new frame before the duplicate needs to be displayed, the display can show that instead.

This is why some people can see the stutter and others cannot - some of their monitors can recover in half the time, and others never display it (Gsync Ultimate displays have a 1Hz minimum, vs 48+ on 'compatible' displays).

Here's one example: an otherwise amazing display with a high VRR minimum could have issues if you have games, or hardware, that cause FPS drops.

Better not play Skyrim with its 60Hz engine limitation on that 240Hz display!

Where this seemingly inferior display would give you the stutter- and latency-free dream:

Sadly LFC only works fully with displays that have a larger VRR range - this was later made a requirement for all Freesync 2 monitors, so it's becoming more common... but people disable their unofficial Gsync compatibility because cheaper displays have flickering issues or were not fully compatible, making them trade one issue for another.

Low Framerate Compensation only works on FreeSync monitors in which the maximum refresh rate is at least 2.5 times greater than its minimum refresh rate. For example, if you’ve got a newer FreeSync monitor with a 40Hz to 144Hz range, then you are good to go
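That rule is easy to check against a monitor's spec sheet. A minimal sketch, with the 2.5x ratio taken straight from the quote above (the function name is my own, and the actual requirement may differ by FreeSync tier or vendor):

```python
def supports_lfc(vrr_min_hz, vrr_max_hz, ratio=2.5):
    """True if the VRR range is wide enough for LFC under the quoted 2.5x rule.
    The ratio is an assumption taken from the quote, not from a spec."""
    return vrr_max_hz >= ratio * vrr_min_hz

for vrr_min, vrr_max in ((40, 144), (48, 144), (48, 75), (48, 60)):
    verdict = "LFC should work" if supports_lfc(vrr_min, vrr_max) else "range too narrow"
    print(f"{vrr_min}-{vrr_max}Hz: {verdict}")
```

A 48-75Hz or 48-60Hz panel fails the check, which is exactly the kind of cheaper display that falls out of VRR the moment the frame rate dips.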

60Hz users Vsync off tip: On my 4k 60Hz displays, in games that use a render ahead of 2, they get tearing with a 60FPS cap. At 65Hz, the same happens at 65FPS. Setting an FPS limit to 58/63 gives me no tearing at all, without any of the latency issues - unless the FPS dips as a result of something drastic in game, in which case it can recover at full speed with no Vsync related delay.

Using Nvidia's Ultra low latency mode lets me drop that to 1FPS below Vsync, but many of those settings - including fast Vsync - only work in DX9/10/11, not in DX12, Vulkan or OpenGL.