I think purchasing high quality graphics cards for gaming is a good decision. It's not an investment, it's a cost. Buying shares of NVDA is an investment.

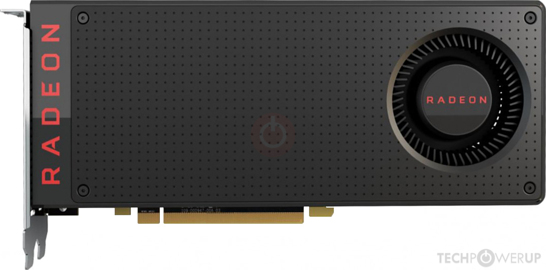

Basically any card nowadays (except the frying RTX 3090s) is well made. The only differences are price, performance and power consumption. There hasn't been a properly priced, fast-for-the-money and reasonably efficient card in a while. The last attempt was the GTX 1660 series, but it lacked performance for its price, so the last truly good all-around card was the AMD Radeon RX 480 8GB (the 4GB version is e-waste). It was cheap, fast and efficient: more than half of Vega 64's performance for a third of the price. It's good and it lasts forever, since it is based on the GCN architecture, it has 8GB of VRAM, and now with FSR it's essentially an immortal card for another 4 years. nVidia's last great card was the GTX 1060 6GB, but it will fare worse than the RX 480 8GB in the long term.
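To put "more than half of Vega 64's performance for a third of the price" into a single number, here is a tiny performance-per-dollar sketch. The inputs are hypothetical normalized values taken from that rough claim (Vega 64 = 1.0 performance at price 3.0, RX 480 8GB = 0.5 performance at price 1.0), not benchmark data, and since it's "more than half", 0.5 is a lower bound.

```python
def perf_per_dollar(relative_perf: float, price: float) -> float:
    """Performance units bought per unit of price."""
    return relative_perf / price


def value_ratio(perf_a: float, price_a: float, perf_b: float, price_b: float) -> float:
    """How many times more performance per dollar card A gives than card B."""
    return perf_per_dollar(perf_a, price_a) / perf_per_dollar(perf_b, price_b)


# Hypothetical normalized inputs (assumption, not measured):
# RX 480 8GB = 0.5 perf at price 1.0, Vega 64 = 1.0 perf at price 3.0.
ratio = value_ratio(0.5, 1.0, 1.0, 3.0)
print(f"RX 480 8GB gives ~{ratio:.1f}x the performance per dollar")  # ~1.5x
```

So even at exactly half the performance, a third of the price works out to roughly 1.5x the value.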

Games look better at Ultra settings if you haven't noticed. A decade ago there was no ray-tracing either.

Only sometimes. It's hard to say if high and ultra look any different at all.

And I was benching an RX 580 in Unigine Tropics yesterday. I went through every setting combination, and many settings made little visual difference. Often even medium was nearly indistinguishable from high. For example, shader quality past medium made no visual difference but reduced fps. AA past 4x made such a tiny difference that it just didn't matter. Disabling tessellation made zero visual difference. I'm still mystified as to what the refractions setting does. Ambient occlusion makes a subconscious quality difference (I couldn't tell whether the demo had it on or not, but when it was on it felt a little better, though I still can't point to where and why), but it costs quite a bit of fps.
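Going "through every setting combination" grows multiplicatively with each option. A minimal sketch of generating the full test matrix with `itertools.product`; the option names and levels below are hypothetical placeholders based on the settings mentioned above, and the real Unigine Tropics menu may differ.

```python
from itertools import product

# Hypothetical option lists (assumption: the actual benchmark menu may differ).
SETTINGS = {
    "shaders": ["low", "medium", "high"],
    "anti_aliasing": ["off", "2x", "4x", "8x"],
    "tessellation": ["off", "on"],
    "refractions": ["off", "on"],
    "ambient_occlusion": ["off", "on"],
}


def all_configs(settings: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return every combination of settings as a list of {name: value} dicts."""
    names = list(settings)
    return [dict(zip(names, values)) for values in product(*settings.values())]


configs = all_configs(SETTINGS)
print(len(configs))  # 3 * 4 * 2 * 2 * 2 = 96 benchmark runs
print(configs[0])    # every setting at its first (lowest) level
```

Even this small menu means 96 runs, which is why most people only spot-check a few settings.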

It's the same story in games: though the visual difference is greater, it still isn't big enough for me to care.

A lot of the more sophisticated graphics settings have developed over the years and weren't around in the first decade of this millennium.

That's mostly due to the transition from the fixed-function pipeline to programmable (and later unified) shader architectures. After that switch, game devs can write their own shaders (graphics effects) the way they want, not in ways predefined by the graphics card. Other than that, as long as a card has unified shaders and supports the required API versions, there isn't any visual setting difference between two cards. They render exactly the same image, just perhaps at different fps. The only big innovation is ray tracing, but it's still somewhat too early for it: it's still too darn slow, current architectures for it aren't that great, and it's far too cost-prohibitive to truly become mainstream. Even if it is the future, currently it's not much better than other technologies like 3D Vision, ATi TruForm or PhysX, which eventually became irrelevant and died. A technology only stays for good once an updated version that fixes the initial issues rolls out (if that ever happens). Before that, I think something like FSR will catch on a lot faster, as it solves a very relevant problem for many gamers instead of offering nice visual candy that adds nothing to gameplay.

Heck, one simply needs to look at the evolution of anti-aliasing algorithms.

Not at all. The main reason there are so many of them is that full supersampling anti-aliasing (SSAA) already exists and looks as good as it possibly could, but it is insanely heavy even on powerful hardware. An RX 580 can hold a consistent 60 fps with it in Colin McRae Rally 2005 at 1280x1024 (by that I mean the average fps is a lot higher, but the game never drops below 60 fps). Let's say the most powerful card today is 4 times faster than an RX 580; that gives enough of a boost to run a roughly 5-year-old AAA game at the same resolution with 8x SSAA. That's simply not fast enough, and that's why many AA techniques exist today. Even the ones that get close to SSAA still look somewhat worse than it, but they offer many times better performance. Those AA methods certainly don't exist because SSAA can't achieve the best visual quality; they exist only because of the limited performance of current hardware. And here's one dirty secret: MSAA (multisampling AA) pretty much achieved a perfect balance of quality and performance already in the early 2000s, and there's not much point in other AAs unless even MSAA is a bit too heavy. But then you inevitably end up with some kind of blurry anti-aliasing that just looks like the game is running at a lower resolution, which kind of defeats the whole purpose of anti-aliasing in the first place.
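A quick way to see why SSAA is so heavy: under a simplified model where SSAA shades every sample, 8x SSAA at 1280x1024 runs the pixel shader more times per frame than native 4K. The MSAA line is also a simplification (assumption: shading runs roughly once per pixel, a bit more along triangle edges, while the extra samples mostly cost memory and bandwidth). This ignores geometry, bandwidth and everything else, so treat it as a rough sketch only.

```python
def shaded_samples(width: int, height: int, ssaa_factor: int = 1) -> int:
    """Simplified SSAA model: the pixel shader runs once per sample,
    so shading cost scales linearly with the sample count."""
    return width * height * ssaa_factor


def msaa_shaded_samples(width: int, height: int) -> int:
    """Simplified MSAA model: shading runs roughly once per pixel; the extra
    coverage/depth samples mainly cost memory and bandwidth, not shading."""
    return width * height


native_4k = shaded_samples(3840, 2160)
sxga_8x_ssaa = shaded_samples(1280, 1024, 8)
sxga_8x_msaa = msaa_shaded_samples(1280, 1024)

print(native_4k)     # 8294400
print(sxga_8x_ssaa)  # 10485760
print(f"{sxga_8x_ssaa / native_4k:.2f}x the shading work of native 4K")  # 1.26x
print(sxga_8x_ssaa // sxga_8x_msaa)  # 8: SSAA shades 8x more than MSAA here
```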

There's also the notion of screen resolutions increasing at a faster rate than years ago. Heck, you don't even need to look at PC hardware to recognize this. Today's smartphones are shooting 4K Dolby Vision in low light conditions that would have stymied the top-of-the-line smartphones from ten years ago.

Phones aren't PCs. In the PC space, resolution is growing faster than ever, but it is also stagnating more than ever, because most people are still on 1080p and don't upgrade from it. The upgrade is a massive expense that is no longer justified the way it was 15 years ago, when hardware was cheaper and generational gains were actually big.

Just as important: dickering with each game's setting to find adequate performance is laborious and time consuming. From a time perspective, it makes more sense just buying quality hardware, setting everything to Ultra and not wasting my limited free time futzing around with game configuration.

Once you get more skilled at it, it takes no more than half an hour to find the perfect settings config. And your free time is financially free, as you wouldn't be working (or generating money some other way) during it anyway.
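One way to make that half hour systematic is a greedy pass: start everything at Ultra, then repeatedly lower whichever setting saves the most frame time per unit of visual quality lost, until the fps target is met. This is only a sketch: the base cost, per-level costs and "quality" scores below are made-up placeholders you'd fill in from your own quick A/B tests, the additive frame-time model is an assumption, and greedy search is one heuristic, not a guaranteed optimum.

```python
from dataclasses import dataclass, field


@dataclass
class Setting:
    name: str
    cost_ms: list[float]   # extra frame time per level, lowest level first (hypothetical)
    quality: list[float]   # subjective visual value per level, lowest level first (hypothetical)
    level: int = field(init=False)

    def __post_init__(self) -> None:
        self.level = len(self.cost_ms) - 1  # start at the highest level ("Ultra")


def frame_time(base_ms: float, settings: list[Setting]) -> float:
    """Simple additive model: base cost plus the cost of each setting's current level."""
    return base_ms + sum(s.cost_ms[s.level] for s in settings)


def tune(base_ms: float, settings: list[Setting], target_fps: float) -> dict[str, int]:
    """Greedily lower settings until the modeled frame time fits the fps target."""
    budget_ms = 1000.0 / target_fps
    while frame_time(base_ms, settings) > budget_ms:
        best, best_score = None, float("-inf")
        for s in settings:
            if s.level == 0:
                continue  # already at the lowest level
            saved = s.cost_ms[s.level] - s.cost_ms[s.level - 1]
            lost = s.quality[s.level] - s.quality[s.level - 1]
            score = saved / max(lost, 1e-6)  # ms saved per unit of quality given up
            if score > best_score:
                best, best_score = s, score
        if best is None:
            break  # everything is at minimum; the target can't be reached
        best.level -= 1
    return {s.name: s.level for s in settings}


BASE_MS = 8.0  # hypothetical cost of everything not listed below
options = [
    Setting("shaders", cost_ms=[1.0, 2.0, 4.0], quality=[1.0, 3.0, 3.2]),
    Setting("ambient_occlusion", cost_ms=[0.0, 3.0], quality=[0.0, 1.0]),
    Setting("anti_aliasing", cost_ms=[0.0, 1.5, 3.0, 6.0], quality=[0.0, 2.0, 2.8, 3.0]),
]
result = tune(BASE_MS, options, target_fps=60)
print(result)  # {'shaders': 1, 'ambient_occlusion': 1, 'anti_aliasing': 2}
print(frame_time(BASE_MS, options))  # 16.0
```

With these placeholder numbers it drops 8x AA to 4x and shaders from high to medium, which matches the kind of "barely visible" cuts described earlier in the thread.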

Just because something is marketed as something doesn't automatically mean it will deliver. Look at Cyberpunk 2077. The world is full of "over promise, under deliver" and not just the PC hardware market.

Same can be said for the RTX 3090: it's the Cyberpunk of cards. Many of them fry, and you really can't run games at 8K without some trickery that makes the game render at a much lower resolution and then upscales it to 8K. It can't do that honestly; without DLSS it's dead meat at 8K, unless you play older games at lower settings and are sometimes fine with lower fps than you usually get. The RX 550, however, delivered what it was advertised to do, which is to be a replacement for an older card, a replacement for an IGP, or a card good for running esports titles. It does all that and a lot more. That was a proper card release.
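For a sense of how much "8K" is actually rendered with that trickery: as far as I know, DLSS Ultra Performance (the mode NVIDIA pitched for 8K) upscales by 3x per axis. Taking that factor as an assumption, a small sketch:

```python
def internal_resolution(out_w: int, out_h: int, scale_per_axis: float) -> tuple[int, int]:
    """Render resolution before upscaling, given a per-axis upscale factor."""
    return round(out_w / scale_per_axis), round(out_h / scale_per_axis)


out_w, out_h = 7680, 4320  # 8K output
# Assumption: 3.0x per axis, i.e. the DLSS Ultra Performance ratio.
in_w, in_h = internal_resolution(out_w, out_h, 3.0)
share = (in_w * in_h) / (out_w * out_h)

print(in_w, in_h)  # 2560 1440
print(f"{share:.0%} of the output pixels are actually rendered")  # 11%
```

So the card is really rendering 1440p and reconstructing the other roughly 89% of the 8K pixels.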

Unfortunately, the TPU operators have already let console discussions happen, so you're too late complaining.

That's of no relevance here. A PC isn't a console and a console isn't a PC; they can't really be directly compared, as they are completely different products. I don't care whether mods give bans for that or not.

Sorry, US prices here are very relevant because THAT'S THE CURRENCY IN WHICH AMD AND NVIDIA ANNOUNCE THEIR PRODUCTS. When Nvidia CEO Jensen Huang takes the stage to announce a new GeForce product, he quotes US dollars.

That same Jensen dude also announces products in the US. Sure as hell, he won't announce prices in Chinese yuan or Brazilian reais, as the crowd wouldn't understand how much that is. But that's it. Maybe his side of the business is also done in USD, but beyond that, cards aren't immune to taxes and other factors that change their price. Also, that's the MSRP, the Manufacturer's Suggested Retail Price. He doesn't say "this is the GTX Ultra and you sell it for 500 dolleroos, or else you are going to be straightened out." Nah, MSRP is a soft suggestion and is only useful for knowing roughly how much you will be able to buy the card for. And if you don't want a very loud and hot version, you will pay more too.
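A rough sketch of how a US MSRP turns into a local shelf price. US MSRPs are quoted before sales tax, while many countries show prices with VAT included. The exchange rate, VAT rate and partner-card premium below are hypothetical inputs, and the model ignores import duties, retailer margins and supply shortages.

```python
def street_price(msrp_usd: float, fx_rate: float, vat_rate: float,
                 partner_premium_usd: float = 0.0) -> float:
    """Rough local price: US MSRP (pre-tax) plus any premium for a quieter or
    cooler partner card, converted to local currency, then local VAT added."""
    return (msrp_usd + partner_premium_usd) * fx_rate * (1.0 + vat_rate)


# Hypothetical example: $500 MSRP, 1:1 exchange rate, 21% VAT, +$50 for a better cooler.
print(round(street_price(500, 1.0, 0.21, 50), 2))  # 665.5
```

Even before any shortage markup, the "500 dolleroos" card ends up well above 500 in local money.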

There are some great new games that demand a lot of hardware performance and there are terrible new games that demand a lot of hardware. There are great new games that have modest hardware requirements and there are terrible new games that require modest hardware. There are tons of older games that fit all the same description.

That's correct, and I just pick titles from certain eras and play them. The latest and greatest isn't much more valuable than the classics. There are plenty of old games that never got a newer version that is better in every way.

You clearly don't realize that all games were new at one point. Don't you remember the whole "But can it play Crysis?" era? Now it's a meme from an era before they were known as memes. And yes, plenty of today's systems can play Crysis; back when it was released, very few could. There are games that had a rough start and got better. There are games that were better before and then got worse.

I can say that I didn't know much about computers in that era and probably didn't know about that meme until 2013 or 2014. I only completed the game in 2019 or 2020, just to see what the big deal was, and the game itself ended up being a pretty boring sci-fi shooter. I don't even know how it got so popular while being such a mediocre game that brought nothing new or fun. I'm not saying that it can't be enjoyed, it can be, but it really is quite generic and there are tons of similar games that you can play instead. It's also surprising that the franchise didn't die after the Crysis hardware spec disaster. For many games that would mean tons of lost sales, but they even made Crysis 2 and Crysis 3. It's hard to believe that the Crysis franchise was even a modest financial success.

I doubt that Crysis got better over time; I had a poor time with it on my GTX 650 Ti. It could only play it at 1024x768 with pretty much everything set to low or medium, and even then the average fps was okay, but it tended to drop often. Kepler was released in 2012, about 5 years after Crysis came out, and even then you could only expect to run it at a sub-native resolution. That's garbage optimization. And finally a glorious day came when the RTX 2080 Ti launched. It was the first single card that could max out Crysis at 4K and average 60 fps (it still dropped below that). No matter how I look at it, Crysis seems to have been a shoddy game launch.

Crysis 2 was a lot better, but it was still a scandal because of tessellation. That one setting killed performance on GCN 1 cards. It wasn't the fault of the game itself; it just exposed a fault of the GCN 1 architecture, which had a really weak tessellator (as was shown in other games and benches), but it was enough for many idiots to say that nVidia gimped AMD performance, and for some to say that Crysis 2 might be as brutal to run as Crysis.

However, if you have very capable new hardware, you can play anything the game studios can pump out without spending any effort trying to figure out how to run it satisfactorily. That's the point of premium gaming hardware.

Perhaps that's a valid reason, but for a while now you haven't needed much of a card to max out games at, say, 1080p. I go the other way: I buy lower-end hardware and run games at a higher resolution than recommended. And I found out that tweaking settings costs minimal fidelity while giving higher resolution and much better fps than what reviewers reported. I even found out that an RX 560 could run AAA games at 1440p, while reviewers said it couldn't always manage even 1080p. The only problem was that after a year or two it could no longer do that, and I needed low settings and 1600x900 to make things run on that card, so it was upgraded to an RX 580.
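For scale, here is how much pixel work the resolutions mentioned in this thread are relative to 1080p. Pixel count is only a first approximation of GPU load (fps rarely scales exactly with it), so treat this as a rough sketch.

```python
BASELINE = (1920, 1080)  # 1080p


def pixel_ratio(width: int, height: int, base: tuple[int, int] = BASELINE) -> float:
    """Pixels per frame relative to the baseline resolution (1080p by default)."""
    return (width * height) / (base[0] * base[1])


for w, h in [(1600, 900), (1920, 1080), (2560, 1440), (3840, 2160)]:
    print(f"{w}x{h}: {pixel_ratio(w, h):.2f}x the pixels of 1080p")
# 1600x900: 0.69x, 1920x1080: 1.00x, 2560x1440: 1.78x, 3840x2160: 4.00x
```

So dropping from 1440p to 1600x900 cuts the pixel load by more than half, which is why it buys so much headroom on an older card.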

I never said that newer games were better than older games (in fact, I mentioned that I play a bunch of older games). However, newer games will push hardware farther than older games.

One thing is for certain: you have much lower standards than some others here.

Everyone has that choice, just like the shoes you put on your feet or what you put on your dinner plate.

Perhaps that's due to me using an nVidia GeForce FX 5200 128MB 128-bit for a long time. For a long time I also had a very limited budget for computer hardware, so I basically had to live with any poor choices I made. My hardware buying rationale has always been to get a lot of bang for the buck and get stuff that will last for a long time. If after 3 years my computer couldn't play games at 1080p, I would have to play at 1600x900 or 1280x1024 and then wait a long time until there was enough money for something better. Such conditions certainly made me rather skeptical of ultra settings and many other things. I only cared about things that make a big impact and are inexpensive, and ultra settings fail at that. The first card that was completely mine was a GTX 650 Ti, and from the start I had to make it work at 1080p, as it certainly couldn't do ultra. Right now I'm less constrained by money, but I haven't forgotten what I learned back then. Being super stingy is no longer a necessity; it's just that beyond a certain threshold there isn't anything that much better that I would really care about. And to be honest, I probably care about gaming much less than I used to. I think I like learning about hardware much more than actually using it. Right now I'm more interested in GPU architectures and why some of them perform better than others, especially after the fixed-function pipeline era. I might even be stupid enough to buy a GTX 560 Ti just for benching.