NVIDIA to Acquire Mellanox Technologies for $6.9 Billion

NVIDIA and Mellanox today announced that the companies have reached a definitive agreement under which NVIDIA will acquire Mellanox. Pursuant to the agreement, NVIDIA will acquire all of the issued and outstanding common shares of Mellanox for $125 per share in cash, representing a total enterprise value of approximately $6.9 billion. Once complete, the combination is expected to be immediately accretive to NVIDIA's non-GAAP gross margin, non-GAAP earnings per share and free cash flow.

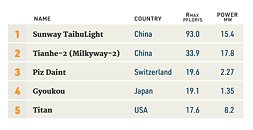

The acquisition will unite two of the world's leading companies in high-performance computing (HPC). Together, NVIDIA's computing platform and Mellanox's interconnects power over 250 of the world's TOP500 supercomputers, and the two companies count every major cloud service provider and computer maker as customers. The data and compute intensity of modern workloads in AI, scientific computing and data analytics is growing exponentially and has put enormous performance demands on hyperscale and enterprise datacenters. While computing demand is surging, CPU performance advances are slowing as Moore's law has ended. This has led to the adoption of accelerated computing with NVIDIA GPUs and Mellanox's intelligent networking solutions.
