Call of Duty Modern Warfare Benchmark Test, RTX & Performance Analysis

Test System

| Component | Specification |
|---|---|
| Processor | Intel Core i9-9900K @ 5.0 GHz (Coffee Lake, 16 MB Cache) |
| Motherboard | EVGA Z390 DARK (Intel Z390) |
| Memory | 16 GB DDR4 @ 3867 MHz, 18-19-19-39 |
| Storage | 2x 960 GB SSD |
| Power Supply | Seasonic Prime Ultra Titanium 850 W |
| Cooler | Cryorig R1 Universal, 2x 140 mm fans |
| Software | Windows 10 Professional 64-bit, Version 1903 (May 2019 Update) |
| Drivers | AMD: Radeon Software 19.11.1 Beta; NVIDIA: GeForce 441.12 WHQL |
| Display | Acer CB240HYKbmjdpr 24" 3840x2160 |

We tested the public Battle.net release of Call of Duty Modern Warfare, not a press pre-release. We also installed the latest AMD and NVIDIA drivers, both of which include game-ready support for the title.

Graphics Memory Usage

Using a GeForce RTX 2080 Ti, which has 11 GB of VRAM, we measured the game's memory usage at its highest settings.

Just like previous Call of Duty titles, the game seems very memory-hungry at first glance, but the performance results of our weaker cards, which have less VRAM, show that these allocations are made preemptively rather than out of necessity. The philosophy here seems to be "fill up the VRAM as much as possible; maybe we'll need the data later".

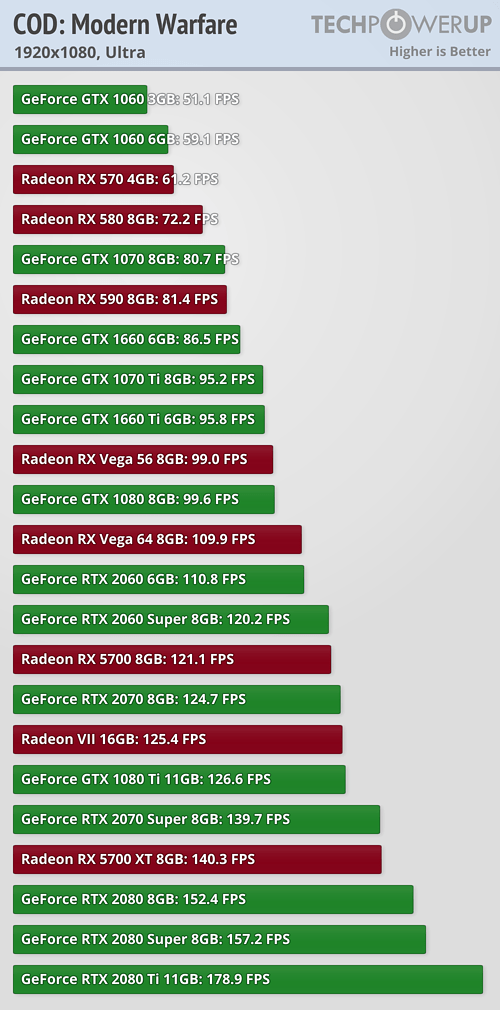
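Why can high reported VRAM usage coexist with good performance on cards that have less memory? A toy model of opportunistic caching helps illustrate it. This is a minimal sketch under loudly hypothetical assumptions: the asset names, sizes, budgets and access pattern are invented, and nothing here describes how the Call of Duty engine actually manages memory. The cache keeps everything it has ever loaded until it runs out of budget, then evicts the least recently used assets. Allocated memory grows with the budget, while misses (stream-ins that would cost performance) only rise once the budget drops below the per-frame working set.

```python
from collections import OrderedDict


class OpportunisticCache:
    """Hypothetical LRU cache that fills its whole budget before evicting."""

    def __init__(self, budget_mb: int):
        self.budget_mb = budget_mb
        self.resident = OrderedDict()  # asset name -> size in MB, oldest first
        self.used_mb = 0
        self.misses = 0  # each miss stands in for an upload / stream-in

    def request(self, asset: str, size_mb: int) -> None:
        if asset in self.resident:
            self.resident.move_to_end(asset)  # mark as most recently used
            return
        self.misses += 1
        # Evict least recently used assets only when the new one would not fit.
        # (Toy simplification: an asset larger than the whole budget is still admitted.)
        while self.used_mb + size_mb > self.budget_mb and self.resident:
            _, evicted_size = self.resident.popitem(last=False)
            self.used_mb -= evicted_size
        self.resident[asset] = size_mb
        self.used_mb += size_mb


def simulate(budget_mb: int) -> OpportunisticCache:
    cache = OpportunisticCache(budget_mb)
    # Hypothetical: ten 500 MB assets touched once and never needed again.
    for i in range(10):
        cache.request(f"old{i}", 500)
    # Hypothetical: every frame needs the same 2000 MB working set.
    working_set = [(f"asset{i}", 500) for i in range(4)]
    for _ in range(100):
        for name, size in working_set:
            cache.request(name, size)
    return cache


if __name__ == "__main__":
    for budget in (11000, 4000, 1500):
        c = simulate(budget)
        print(f"budget {budget} MB: {c.used_mb} MB allocated, {c.misses} misses")
```

In this toy run, the 11000 MB and 4000 MB budgets end up with very different allocated totals but the same number of misses; only the 1500 MB budget, which is smaller than the working set, starts missing on every frame.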

GPU Performance

FPS Analysis

In this new section, we compare each card's performance in this game against its average FPS across our graphics card reviews, which is based on a mix of 22 games. That average should be a realistic baseline because it covers a wide range of APIs, engines, and genres.
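The comparison boils down to a per-card ratio: FPS in this game divided by the same card's review-average FPS, where 1.0 means "performs as usual", above 1.0 means the game favors that card, and below 1.0 means it is at a disadvantage. The sketch below assumes that simple ratio; the helper name `relative_performance`, the card names, and all FPS values are hypothetical placeholders, not our measured results or our exact method.

```python
def relative_performance(game_fps: dict[str, float],
                         average_fps: dict[str, float]) -> dict[str, float]:
    """Return game_fps / average_fps for each card (hypothetical helper).

    1.0 = the card performs as it does on average across the review games,
    >1.0 = it runs this game relatively faster, <1.0 = relatively slower.
    """
    ratios = {}
    for card, fps in game_fps.items():
        baseline = average_fps.get(card)
        if baseline is None or baseline <= 0:
            # Skip cards without a usable baseline instead of dividing by zero.
            continue
        ratios[card] = fps / baseline
    return ratios


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    game = {"Card A": 120.0, "Card B": 75.0}
    average = {"Card A": 100.0, "Card B": 100.0}
    for card, ratio in relative_performance(game, average).items():
        print(f"{card}: {ratio:.2f}x ({(ratio - 1) * 100:+.0f}%)")
```

Normalizing each card against its own baseline is what makes cards of very different speed classes comparable on one chart.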

We can clearly see that NVIDIA's Pascal generation of GPUs is at a large disadvantage here, while Turing does much better. It seems that NVIDIA focused its optimization efforts on Turing, possibly by taking advantage of Turing's ability to execute floating-point and integer instructions concurrently.
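A simplified model shows why concurrent FP+INT execution could favor Turing. If floating-point and integer instructions share the same units (the simplified assumption here for pre-Turing designs such as Pascal), they take turns, costing FP + INT issue slots; with separate pipes running side by side, the busier pipe sets the pace, costing max(FP, INT). The ideal speedup is therefore (FP + INT) / max(FP, INT). This is a sketch under hypothetical assumptions (one instruction per pipe per cycle, no memory stalls, no dependencies), and the 36:100 INT-to-FP mix below is only an example value, not something we measured in this game.

```python
def serial_cycles(fp_ops: int, int_ops: int) -> int:
    # Shared units: floating-point and integer instructions take turns.
    return fp_ops + int_ops


def concurrent_cycles(fp_ops: int, int_ops: int) -> int:
    # Separate FP and INT pipes run side by side; the busier pipe sets the pace.
    return max(fp_ops, int_ops)


def ideal_speedup(fp_ops: int, int_ops: int) -> float:
    """Upper-bound speedup from overlapping FP and INT work (idealized model)."""
    if fp_ops < 0 or int_ops < 0 or fp_ops + int_ops == 0:
        raise ValueError("need a non-negative, non-empty instruction mix")
    return serial_cycles(fp_ops, int_ops) / concurrent_cycles(fp_ops, int_ops)


if __name__ == "__main__":
    # Example mixes (hypothetical): no integer work, 36 INT per 100 FP, and 1:1.
    for fp, int_ in [(100, 0), (100, 36), (100, 100)]:
        print(f"FP={fp}, INT={int_}: up to {ideal_speedup(fp, int_):.2f}x")
```

Real-world gains are much smaller because shaders also wait on memory, texture units and instruction dependencies; the model only shows why a shader with a meaningful share of integer work could run relatively better on Turing.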

On the AMD side, the gains look fairly consistent across generations, which suggests that AMD took a more general optimization approach. Only the Radeon VII falls behind, and we're not sure why.
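Statements like "fairly consistent across generations" and "only one card falls behind" can be checked mechanically once each card has a relative-performance ratio (game FPS divided by its review-average FPS). The sketch below groups ratios by vendor, takes the median as the typical value, and flags cards more than a tolerance below it. The function name `vendor_summary`, the 0.10 tolerance, and the sample data are hypothetical choices for illustration, not our actual analysis.

```python
from statistics import median


def vendor_summary(results, tolerance=0.10):
    """Summarize relative-performance ratios per vendor (hypothetical helper).

    results: iterable of (card, vendor, ratio) tuples.
    Returns {vendor: (median_ratio, [cards more than `tolerance` below it])}.
    """
    by_vendor = {}
    for card, vendor, ratio in results:
        by_vendor.setdefault(vendor, []).append((card, ratio))
    summary = {}
    for vendor, entries in by_vendor.items():
        typical = median(ratio for _, ratio in entries)
        laggards = [card for card, ratio in entries if ratio < typical - tolerance]
        summary[vendor] = (typical, laggards)
    return summary


if __name__ == "__main__":
    # Placeholder data for illustration only.
    sample = [
        ("Card A", "Vendor X", 1.15),
        ("Card B", "Vendor X", 1.12),
        ("Card C", "Vendor X", 1.14),
        ("Card D", "Vendor X", 0.95),
    ]
    for vendor, (typical, laggards) in vendor_summary(sample).items():
        print(f"{vendor}: median {typical:.2f}, falling behind: {laggards}")
```

Using the median rather than the mean keeps a single outlier from dragging the "typical" value toward itself.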
