Wednesday, April 23rd 2025

Parts of NVIDIA GeForce RTX 50 Series GPU PCB Reach Over 100°C: Report

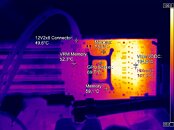

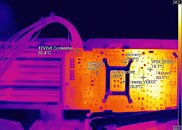

Igor's Lab has run independent testing and thermal analysis of NVIDIA's latest GeForce RTX 50-series graphics cards, including add-in card partner designs of the RTX 5080, 5070 Ti, and 5060 Ti, which are now attracting attention for surprising thermal "hotspots" on the back of their PCBs. These hotspots are simply areas of the PCB that get hot under load; they are not the GPU "Hot Spot" sensor reading that NVIDIA removed with the RTX 50 series. Infrared tests have shown temperatures climbing above 100°C in the power delivery region, even though the GPU die stays below 80°C. The problem isn't the silicon but concentrated heating in clusters of thin copper planes and via arrays. Cards from Palit, PNY, and MSI all show the same issue, since these makers closely follow NVIDIA's reference PCB layout and use similar cooler mounting. A large part of the trouble comes down to PCB designers and cooler engineers working separately.

NVIDIA's Thermal Design Guide gives AIC partners detailed power-loss budgets, listing worst-case dissipation for the GPU, memory, NVVDD and FBVDDQ rails, inductors, MOSFETs, and other components, and it recommends the ideal thermal interface materials and mounting pressures. The guide assumes even heat spreading and perfect wind-tunnel airflow, but real consumer PCs don't match those conditions. Multi-layer PCBs force high currents through 35 to 70 µm copper layers, which converge at tight via clusters under the VRMs. Without dedicated thermal bridges or reinforced vias, these areas become bottlenecks where heat builds up, and the standard backplate plus heat-pipe layout can't pull it away fast enough.

This hotspot issue isn't limited to the newest Blackwell GPUs. Even the previous-generation GeForce RTX 4090, which was engineered for up to 600 W of heat dissipation with multiple vapor chambers and a three-slot cooler, showed a similar pattern. Thermographic snapshots of prototype Ada architecture boards revealed rear-side VRM zones reaching the mid-70s Celsius while the GPU die sat in the low 60s. Internal versions of NVIDIA's guide were redacted to protect internal details, and they included no specific note about reinforcing the backplate in that spot. As a result, partners assumed the standard backplate contact area was sufficient and didn't add any extra cooling measures.

Fortunately, simple tweaks can make a big difference. In Igor's Lab tests, placing a thin thermal pad or a small amount of thermally conductive putty between the hotspot area and the backplate dropped peak VRM temperatures by 8 to 12°C under the same load.

Sources:

Igor's Lab, via Tom's Hardware
78 Comments on Parts of NVIDIA GeForce RTX 50 Series GPU PCB Reach Over 100°C: Report

My Palit RTX3070Ti used to have a hotspot of 107C; suffice it to say, it broke soon after. I got it replaced with an ASUS RTX3070Ti TUF, which never went above 88C. That was much better, although still a bit high. My brother has one too with the same behavior; his hasn't broken yet, but it suffers from extreme performance throttling.

The ASUS 5070Ti should be fine from what I saw.

Not to take a dump on your reviews @W1zzard, as they are very thorough, but this is why we previously requested FLIR imaging in your reviews; thankfully, for the moment we can get that on Guru3D. For most of the RTX5000 AIBs, the PCBs look very cool under operation, but let's face it, AIBs don't send every model they make to reviewers.

Anyway, I do expect extremely high hotspot temps on Founders Edition models, especially the 5090; with so much circuitry to cool in such a small surface area, it's to be expected.

What Igor is reporting are PCB temperatures, and in this context "hotspot" means "hottest measurement in the FLIR image", it has nothing to do with the GPU or the sensors in it.

Hope that makes sense

I wouldn't discount the probability that GPU die hotspot will kill some cards though, given that customers are no longer able to see that info and there are always cards that come out of the factory with paste or mounting issues.

It's a bad choice of words. Most people thinking of hotspot temp will indeed think of the GPU die hotspot. The title should be amended to include "VRM" before the word hotspot to make this clear.

Just think: those temps were measured outside of a case, with no extra radiated heat from your CPU/MB VRMs and SSD affecting the card, and it isn't covered in 2 years' worth of dust either. And we won't even mention the whole thing running in a 27°C room...

Hopefully AMD make a strong enthusiast tier comeback with UDNA because we definitely need more options to choose from.

The hardware needs to last until the warranty is over anyway.

The repair YouTube channels I sometimes watch have very new cards on the repair table. A graphics card costing over 600€ should last much longer.

I'd like to see the marketing claims for that particular graphics card. Every graphics card advertises how fabulous and awesome its cooling solution is.

I agree with you that these expensive cards should have had higher build quality and wider safety tolerances, but unfortunately we now live in a world where profit comes first.

The 5070 has fewer VRM phases than the 5080, so relative to the load, each phase is stressed more.

Of course this is just one variable, but probably one of the most important, if not the most important.

Extrapolating from this, it seems that lower-tier GPUs (5070 and downwards) are more likely than higher-tier GPUs (5070 Ti and upwards) to have VRMs that are undersized relative to their power draw.

Going further, it seems that the 5070 Ti is probably the most fortunate card in this lineup.

There are 5070 Ti variants that (apparently) use the same PCB as the corresponding 5080 variants, which means the same number of phases for a lower power draw. One example is the ASUS TUF -> 5070 Ti & 5080.

Other variants are even better, sharing not only the same PCB but also the same cooler, which, as the review shows, gives better results on the 5070 Ti. One example is the Palit Gamerock -> 5070 Ti & 5080.

Of course the AIBs are still to blame: looking at TPU reviews for 5070 Tis and 5080s, the usual increase over MSRP is about $250. Yes, this does include other stuff like RGB, dual BIOS, factory OC, whatever. But the biggest reason for the increase is the cooling solution, and not everyone is including thermal pads on the backplate, when it would cost literally pennies to do so. The profit margins are large enough to allow the whole back of the PCB to be padded.

The greed knows no limits.