Monday, August 17th 2020

Intel Xeon Scalable "Ice Lake-SP" 28-core Die Detailed at Hot Chips - 18% IPC Increase

In the opening presentation of the Hot Chips 32 virtual conference, Intel detailed its next-generation Xeon Scalable "Ice Lake-SP" enterprise processor. Built on the company's 10 nm silicon fabrication process, "Ice Lake-SP" is the first non-client, non-mobile deployment of the company's new "Sunny Cove" CPU core, which delivers higher IPC than the "Skylake" core that has powered Intel microarchitectures since 2015. While the "Sunny Cove" core itself is largely unchanged from its implementation in 10th Gen Core "Ice Lake-U" mobile processors, it has been adapted to the cache hierarchy and tile silicon topology of Intel's enterprise chips.

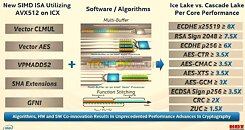

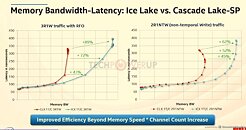

The "Ice Lake-SP" die Intel talked about in its Hot Chips 32 presentation had 28 cores. The "Sunny Cove" CPU core is configured with the same 48 KB L1D cache as its client-segment implementation, but a much larger 1280 KB (1.25 MB) dedicated L2 cache. The core also receives a second fused multiply/add (FMA-512) unit, which the client-segment implementation lacks. It also receives a handful of new instructions exclusive to the enterprise segment, including AVX-512 VPMADD52, Vector-AES, Vector Carry-less Multiply, GFNI, SHA-NI, Vector POPCNT, Bit Shuffle, and Vector BMI. In one of the slides, Intel also detailed the performance uplifts from the new instructions compared to "Cascade Lake-SP".

Besides higher IPC from the "Sunny Cove" cores, an equally big selling point for "Ice Lake-SP" is its improved and modernized I/O. To begin with, the processor comes with an 8-channel DDR4 interface for the 28-core die. This is accomplished using four independent memory controller tiles, each with two memory channels. The previous-generation 28-core "Cascade Lake-SP" die features two memory controller tiles, each with three memory channels, making up its 6-channel interface. Each of the memory controllers features fixed-function hardware for total memory encryption using the AES-XTS 128-bit algorithm without CPU overhead. Improvements were also made to the per-channel scheduling.

Besides the 8-channel memory interface, the other big I/O feature is the PCI-Express Gen 4.0 bus. The 28-core "Ice Lake-SP" die features four independent PCIe Gen4 root-complex tiles. Intel enabled all enterprise-relevant features of PCIe Gen4, including I/O virtualization, and a P2P credit fabric to improve P2P bandwidth. The "Ice Lake-SP" silicon is also ready for Intel's Optane Persistent Memory 200 "Barlow Pass" series. The die also features three UPI links for inter-socket or inter-die communication, driven by independent UPI controllers that have their own mesh interconnect ring stops.

The main interconnect on the "Ice Lake-SP" die is a refined second-generation mesh interconnect, which, coupled with improved floor-planning and new features, significantly reduces interconnect-level latencies compared to previous-generation dies. Intel also worked to improve memory access and latencies beyond simply dialing up memory channels and frequency.

Besides benefiting from the switch to the 10 nm silicon fabrication process, "Ice Lake-SP" introduces several new features that reduce power-management latency, including Fast Core Frequency Change and Coherent Mesh Fabric Drainless Frequency Change. Effort also appears to have gone into improving CPU core frequencies under AVX-512 workloads.

Intel Speed Select Technology is a unique new feature that allows admins to power-gate CPU cores and spend the processor's TDP budget on higher frequencies for the remaining cores. This allows you to reconfigure the 28-core die into something like a 16-core or even 8-core die, on the fly, with a more aggressive clock speed profile. This feature is also used to power-gate cores when not needed, to minimize the server's power draw.

Intel is expected to launch its Xeon Scalable "Ice Lake-SP" processor in the second half of 2020.
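
The memory-interface figures in the article reduce to simple arithmetic, which a short Python sketch can make explicit. The tile and channel counts are taken from the article. The DDR4 transfer rate is an assumption chosen purely for illustration (the presentation summary above does not state one), and the function names are hypothetical.

```python
# Sketch of the memory-channel arithmetic described in the article.
# Tile and channel counts come from the article; the DDR4 transfer rate
# is an ASSUMED parameter, not a figure stated in the presentation summary.

BYTES_PER_TRANSFER = 8  # a DDR4 channel has a 64-bit (8-byte) data bus


def total_channels(controller_tiles: int, channels_per_tile: int) -> int:
    """Number of memory channels exposed by the die."""
    return controller_tiles * channels_per_tile


def peak_bandwidth_gbs(channels: int, megatransfers_per_s: int) -> float:
    """Theoretical peak bandwidth in GB/s (decimal), ignoring all overheads."""
    return channels * megatransfers_per_s * BYTES_PER_TRANSFER / 1000


ice_lake_sp = total_channels(controller_tiles=4, channels_per_tile=2)
cascade_lake_sp = total_channels(controller_tiles=2, channels_per_tile=3)

# Hypothetical DIMM speed, applied to both dies so that only the
# channel count differs in the comparison.
ASSUMED_MTS = 3200

print("Ice Lake-SP channels:", ice_lake_sp)
print("Cascade Lake-SP channels:", cascade_lake_sp)
print("Ice Lake-SP peak GB/s:", peak_bandwidth_gbs(ice_lake_sp, ASSUMED_MTS))
print("Cascade Lake-SP peak GB/s:", peak_bandwidth_gbs(cascade_lake_sp, ASSUMED_MTS))
```

At equal DIMM speed, going from six to eight channels raises theoretical peak bandwidth by one third. Real-world gains depend on the supported memory speeds and the scheduling improvements mentioned above, which this sketch does not model.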

13 Comments on Intel Xeon Scalable "Ice Lake-SP" 28-core Die Detailed at Hot Chips - 18% IPC Increase

Frequency?

TDP?

Price?

As for core count, I predict Intel only has up to 28 cores per die, with which it will achieve SKUs with core counts ≤28 using a single die, and >28 cores using a 2-die MCM for up to 56 cores.

Hot Chips is more of a technical deep-dive conference. They seldom talk productization/segmentation in these sessions.

Intel is still pursuing monolithic dies. Having experienced a couple of years of EPYC servers, and seeing where the server market is headed (even if that's not currently the direction my servers are headed), it seems boneheaded to be chasing monolithic dies at this point.

I don't know the exact details but surely the cost of making a single HUGE die with 28 cores and that much cache without defects is exponentially difficult. Meanwhile AMD is successfully glueing lots of dirt-cheap 8C chiplets together with excellent yields, a fantastic interconnect, and doing so with linear cost and power increases. If you have a real need for cores, 28C simply doesn't cut it, and the minute you move to a 2P solution, all of the performance benefits of monolithic dies vanish, because the inter-socket interconnects and RAM latency are way worse than the inter-die interconnects on an Epyc chip.
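
To put rough numbers on the yield argument, here is a minimal sketch using the textbook Poisson yield model, where the fraction of defect-free dies is exp(-defect density × die area). Every number below (defect density, die areas, chiplet count) is a hypothetical placeholder, not a figure from Intel or AMD, and the function names are made up for illustration.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Expected fraction of defect-free dies under a Poisson defect model."""
    area_cm2 = die_area_mm2 / 100.0  # 100 mm^2 = 1 cm^2
    return math.exp(-defects_per_cm2 * area_cm2)


def silicon_per_good_die(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Average wafer area (mm^2) consumed to obtain one defect-free die."""
    return die_area_mm2 / poisson_yield(die_area_mm2, defects_per_cm2)


# All values are HYPOTHETICAL, chosen only to show the shape of the curve.
DEFECTS_PER_CM2 = 0.2   # assumed defect density
MONOLITHIC_MM2 = 600.0  # assumed area of one large monolithic die
CHIPLET_MM2 = 75.0      # assumed area of one small chiplet
CHIPLETS = 8            # chiplets needed to match the monolithic die's area

mono_cost = silicon_per_good_die(MONOLITHIC_MM2, DEFECTS_PER_CM2)
chiplet_cost = CHIPLETS * silicon_per_good_die(CHIPLET_MM2, DEFECTS_PER_CM2)

print(f"monolithic yield: {poisson_yield(MONOLITHIC_MM2, DEFECTS_PER_CM2):.1%}")
print(f"chiplet yield:    {poisson_yield(CHIPLET_MM2, DEFECTS_PER_CM2):.1%}")
print(f"silicon per good monolithic die: {mono_cost:.0f} mm^2")
print(f"silicon per {CHIPLETS} good chiplets:     {chiplet_cost:.0f} mm^2")
```

The model ignores things that matter in practice: partially defective dies can be sold with cores disabled, and a chiplet design also needs an I/O die and more complex packaging. It only shows why yield falls off exponentially with die area while chiplet silicon cost grows roughly linearly with core count.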

There's a non-zero chance that by the time Intel goes mainstream with Ice Lake-SP 28-core products, AMD will be taping out their 128-core next-gen.

As for the guy before me, you'll need some time to see what Intel actually has in store... Sapphire Rapids will be the first actually competitive product. Ice Lake-SP is just so 2018 and they know it. As for a 128-core next-gen part from AMD, that won't happen any time soon. I am pretty sure Milan will still be 64 cores max, and maybe Zen 4 will be able to up the core count thanks to TSMC 5nm.

However, this isn't using the 10SF process but 10+, which has clock issues at the high end. That's not such a problem for servers, where base clock matters more, but it might be a yield problem. Let's see when it hits the market in quantity. I doubt that will be this year, except maybe a limited launch to tell the investors they managed it.

My speculation about Milan's successor is based on a hunch, but a realistic one - here's my 'logic' if you want to call it that.

Zen2 scales very well from 1, 2, 4, 8 chiplets. Sure, there are losses and latencies, but in the grand scheme of things the infinity fabric works well enough that scaling to 16 chiplets is plausible.

Zen3's major change is that a chiplet contains a unified CCD that combines both 4-core CCXs of Zen2 into a single 8-core block. There's nothing to stop AMD reverting Zen4 to a pair of 8-core Zen3 CCXs to make a 16-core CCD, and then having 8 of those.

Speculation, take with a pinch of salt etc., but the core count race has been on for a few years now, and if AMD feels there's a market for 128-core parts, it doesn't have to do anything technically radical to achieve that. Also, the 8C chiplet for Zen2 has spent a year serving the entire market by itself, so the 3100/3300X wouldn't have been possible with a 16C chiplet. That's changed now that Renoir (and the upcoming Cezanne APUs) serve the lower half of the consumer market, freeing AMD to double the size of its chiplet without leaving big gaps in its product stack. We will see 4C and 6C Zen3 chips, but we may get double the core counts with Zen4, especially now that Intel has nearly doubled its thread count for consumers despite being stuck on a dated architecture and process node.

Ice Lake-SP loses a lot less clock speed during heavy AVX workloads, so there are substantial gains beyond just IPC.

Ice Lake-SP features the same cache system as Tiger Lake, so it should feature the performance benefits from this change. Ice Lake-SP should offer 18% IPC gains over Skylake-SP, not Skylake client.

While Ice Lake-SP might be delayed, how is it "2018"? It offers performance per core beyond any competitor on the market (as of right now).

I think many of you guys in here are far too fixated on specs and theoretical benchmarks. Servers are nearly always built for a specific purpose, and the hardware is chosen based on that purpose. For many workloads, including heavy AVX workloads, Intel is still king. So it's not really just about core count or cores per dollar. Servers are not built to get Cinebench or Geekbench scores.

But the interesting part will be Ice Lake-X vs. Zen 3, which will become more relevant for many of us.