Tuesday, April 1st 2014

Radeon R9 295X2 Pictured in the Flesh, Specs Leaked

Here it is, folks! The first pictures of what you get inside the steel briefcase AMD ships the Radeon R9 295X2 in. AMD overcame the hurdle of cooling two 250 W GPUs with a single two-slot cooling solution by making it an air+liquid hybrid. The cooler appears to have been designed by one of the major water-cooling OEMs (such as Asetek or Akasa), and most likely consists of a pair of pump-blocks plumbed to a single 120 x 120 mm radiator over a single coolant loop. The coolant channel, we imagine, could be identical to that of ASUS's ROG ARES 2. There's also a 90 mm fan, but that probably cools the heatsinks covering the memory, VRM, and PCIe bridge. The card draws power from two 8-pin PCIe power connectors, which, as you'll soon find out, are running off-spec.
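To put the "off-spec" remark in numbers, here's a back-of-the-envelope sketch. It assumes the standard PCI Express power ratings of 75 W from the x16 slot and 150 W per 8-pin connector (these ratings come from the PCIe spec, not from the leak) and reuses the 250 W per-GPU figure above; actual board power is unknown until clock speeds are confirmed.

```python
# Back-of-the-envelope power budget for a dual-GPU card.
# Assumed: standard PCIe ratings (75 W from the slot, 150 W per 8-pin connector).
# The 250 W per-GPU figure comes from the article; real draw depends on clocks.

PCIE_SLOT_W = 75   # PCIe x16 slot rating
EIGHT_PIN_W = 150  # rating per 8-pin PCIe power connector


def in_spec_budget(num_8pin: int) -> int:
    """Maximum in-spec board power for a card with the given number of 8-pin connectors."""
    return PCIE_SLOT_W + num_8pin * EIGHT_PIN_W


def overage(gpu_watts: int, num_gpus: int, num_8pin: int) -> int:
    """Watts by which the combined GPU power exceeds the in-spec budget."""
    return gpu_watts * num_gpus - in_spec_budget(num_8pin)


if __name__ == "__main__":
    budget = in_spec_budget(num_8pin=2)
    extra = overage(gpu_watts=250, num_gpus=2, num_8pin=2)
    print(f"In-spec budget: {budget} W")              # In-spec budget: 375 W
    print(f"Two 250 W GPUs exceed it by {extra} W")   # Two 250 W GPUs exceed it by 125 W
```

If the card really draws more than 375 W, the excess would have to come through the two 8-pin connectors above their rated load, which is presumably what "off-spec" refers to here.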

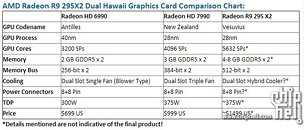

The Radeon R9 295X2, codenamed "Vesuvius," runs a pair of 28 nm "Hawaii" chips, routed to a PLX PEX8747 PCIe bridge. Each of the two has all 2,816 stream processors enabled, for a total of 5,632. Between them, the two also have 352 TMUs and 128 ROPs. Each GPU's full 512-bit memory bus is enabled, and each GPU is wired to 4 GB of memory, for 8 GB on the card. Clock speeds remain a mystery and probably hold the key to a lot of things, such as power draw and cooling. Lastly, there's the price. AMD could price the R9 295X2 at US $1,499, half the price of NVIDIA's GeForce GTX TITAN-Z. With that price difference (heck, even with just $500 of it), you could probably buy yourself a full-coverage water block and a full-fledged loop, complete with a meaty 3 x 120 mm radiator.
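For reference, here's how the per-GPU numbers add up to the card-level totals, plus the price gap. This is a minimal sketch: the per-GPU TMU and ROP counts (176 and 64) are simply the leaked totals halved, and the TITAN-Z price of US $2,999 is NVIDIA's announced figure rather than something from this leak.

```python
# Card-level totals for a dual-"Hawaii" board, built from per-GPU figures.
# Per-GPU TMU/ROP counts are the leaked totals (352, 128) halved.
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuConfig:
    stream_processors: int
    tmus: int
    rops: int
    memory_gb: int


def card_totals(gpu: GpuConfig, count: int) -> GpuConfig:
    """Sum per-GPU resources across all GPUs on the board."""
    return GpuConfig(
        stream_processors=gpu.stream_processors * count,
        tmus=gpu.tmus * count,
        rops=gpu.rops * count,
        memory_gb=gpu.memory_gb * count,
    )


HAWAII = GpuConfig(stream_processors=2816, tmus=176, rops=64, memory_gb=4)

if __name__ == "__main__":
    totals = card_totals(HAWAII, count=2)
    print(totals)
    # GpuConfig(stream_processors=5632, tmus=352, rops=128, memory_gb=8)

    rumored_295x2 = 1499  # rumored R9 295X2 price, USD
    titan_z = 2999        # NVIDIA's announced TITAN-Z price, USD (not from the leak)
    print(f"Price gap: ${titan_z - rumored_295x2:,}")  # Price gap: $1,500
```

Keep in mind that the 8 GB is a sum of two separate 4 GB pools; under CrossFire each GPU typically holds its own copy of the working set, so games effectively see 4 GB.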

Sources:

ChipHell, WCCFTech


72 Comments on Radeon R9 295X2 Pictured in the Flesh, Specs Leaked

The 3870X2 was my last ATI/AMD card. I remember the price was right, it performed well, and it's still looking good.

Why can't they drop the price of a single 290X and price the dual-GPU card at a good price/performance ratio?

Because people still buy it, whatever the price is.

This stupid mining shit is getting ridiculous.

295X2 = 375 W

Leaked Benchmark

Benchmark Source Courtesy of DarkTech-Reviews.com

14% more performance than two single cards in CrossFire?!?! That seems at odds with AMD's own slide showing the 295X2 with a 60-65% speed-up over a single card in Fire Strike.

That chart also looks suspiciously like an MS Paint-edited version of Hilbert's.

Plus, where the hell does Xzibit get this stuff? He's a Google master. I clicked the link; it's Arabic?

o_O

Actually, you know what? Maybe it's 'stolen' from Guru3D, inasmuch as Hilbert has done the review and someone has posted some results before the NDA lifted. That would explain the identical scores.

ThinkComputers

WCCFTech

Overclock.net

etc...

I was just catching you guys up.

AMD should focus on improving its CPUs to compete with Intel. As for graphics, all manpower should go to the next generation.

The dual-GPU card design keeps proving less than ideal: currently Thief (a game AMD proudly promotes) flickers in CrossFire, so you want the option to disable CrossFire. You can't disable CrossFire on a dual-GPU card. Not on the 4870X2, at least; maybe something's changed, idk...

Thief's Mantle path doesn't support CrossFire, so I suppose on this card it just runs on one GPU. But for Thief, or other games where CrossFire has issues under DirectX or doesn't work at all under Mantle, no one needs this card.

I feel like they could have done more intensive engineering work and done something like what NVIDIA did with the TITAN-Z.