Thursday, May 14th 2015

Intel Core i7-5775C "Broadwell" Scrapes 5 GHz OC on Air

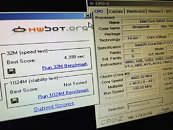

Intel's parting gifts to the LGA1150 platform, the Core i5-5675C and the Core i7-5775C, are shaping up to be a pleasant surprise to overclockers. Built on the 14 nm "Broadwell" silicon, the two quad-core chips come with an extremely low rated TDP of 65W for products in their segment. We weren't sure whether those energy savings would translate into massive overclocking headroom. It turns out there's hope. Toying with a Core i7-5775C chip on an ASRock Z97 OC Formula, Hong Kong-based HKEPC found that the chip was able to reach 5.00 GHz clock speeds with ease on air-cooling, at a core voltage of 1.419V. At 4.80 GHz, the i7-5775C crunches 32M wPrime in 4.399 seconds.

Sources:

HKEPC, Expreview

70 Comments on Intel Core i7-5775C "Broadwell" Scrapes 5 GHz OC on Air

Also, a lot of the tech press shills for advertisers; only a few independent outlets are left. Btw, it was a funny read, thanks.

The i5 system is there for my visitors to game with. It will game as well as I need it to.

The same goes for the i7-4790K.

My wife will be fine with the 2600K. She's a teacher and uses a dual monitor setup for lesson plans, documents, and internet. She also writes books.

That's what I'm doing and if you disagree, that's OK too.

I agree that the 980 is still a strong, powerful CPU, but the reason I sold mine and moved on was its performance with GPUs.

I am on a 4690K, and it would take a lot more than Skylake to get me to move up, say PCIe 4.0, and with that will come much faster CPUs, so win-win.

Intel isn't being evil or anything; it's simply that adding more cores or more threads does not affect the average machine. If you want a nice, value-oriented machine, go get a simple i5-44xx, pair it with a good H97 or Z97 motherboard, drop in a good GPU and you'll be hard pressed to find games that run better on more expensive chips. Intel knows that because they have very, very extensive validation labs running all kinds of software, as well as a very, very large network of partners asking them for different things in their next CPU. Gamedevs also know that (after all, they build said games), and they react appropriately lazily, because concurrency (the hard part in multithreading anything) is hard, yo. To see what I mean for gaming, just compare a 5930K (6C/12T) to a 4690K (4C/4T): <2fps difference in most tests, and in most of them, the 5930K is slower than the 4690K, simply because it can't turbo boost as high ( www.anandtech.com/bench/product/1261?vs=1316 ). Much the same story with the 5960X (8C/16T) vs 4690K (4C/4T) ( www.anandtech.com/bench/product/1261?vs=1317 ).
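A rough way to see why extra cores barely move game benchmarks is Amdahl's law: if only a fraction p of the work runs in parallel, the speedup on n cores is 1 / ((1 - p) + p / n). The sketch below is a toy model, not anything AnandTech measured: the parallel fraction p = 0.3 is a hypothetical value, HyperThreading is ignored, and the clocks assumed are the chips' rated max turbo speeds (3.9 GHz for the 4690K, 3.7 GHz for the 5930K).

```python
def amdahl_speedup(p, n):
    """Speedup on n cores when a fraction p of the work is parallelisable."""
    return 1.0 / ((1.0 - p) + p / n)


def relative_perf(cores, ghz, p):
    """Toy throughput model: clock speed times Amdahl speedup.

    Assumes identical per-clock performance per core and ignores
    HyperThreading, cache and memory differences.
    """
    return ghz * amdahl_speedup(p, cores)


P = 0.3  # hypothetical parallel fraction of a game's per-frame work
quad = relative_perf(cores=4, ghz=3.9, p=P)  # 4690K-like: 4C, 3.9 GHz turbo
hexa = relative_perf(cores=6, ghz=3.7, p=P)  # 5930K-like: 6C, 3.7 GHz turbo
print(f"4C @ 3.9 GHz: {quad:.2f}  6C @ 3.7 GHz: {hexa:.2f}")
```

With p this small, the two extra cores buy less than the 0.2 GHz of turbo clock they cost, so the quad-core comes out slightly ahead. Push p towards 0.9 and the six-core pulls clearly ahead, which is the virtualization/database case.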

So, if games and general-purpose computing don't really scale with cores (unlike virtualization and databases, for example), what can we try? Oh I know, let's clock the chips faster! Oh, wait... we tried that one, and it turns out you can't really raise clock speeds on mainstream chips much higher than they already are, due to thermals and error rates. Intel learnt this the hard way with NetBurst (remember the launch when they promised 10-50GHz?). AMD mistakenly thought they could do better with Bulldozer - they couldn't. The 5GHz chip they push now has a laughable 220W TDP compared to its 4.2GHz brethren at 125W, and ships with a CLC. Sure, it looks fast on paper, but a similarly-priced 4690K (at launch at least) matches the FX-9590 quite easily (scroll down for gaming benchmarks): www.anandtech.com/bench/product/1261?vs=1289 . In the tests AT runs, the 9590 wins so few benchmarks, and ones so niche for most people, that they're basically irrelevant. I mean, how often is file archival CPU-bound for you? How often are you rendering and encoding video?
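The thermal wall follows from the usual first-order model of CMOS dynamic power, P ≈ C·V²·f: clock speed enters linearly, but the voltage needed to keep a higher clock stable enters squared. As a crude back-of-the-envelope sketch, assuming rated TDP tracks dynamic power alone (it doesn't exactly; leakage and rating conventions muddy it) and the same effective capacitance C, the 125W/4.2GHz vs 220W/5GHz figures above imply roughly how much extra voltage the 5GHz part needs:

```python
import math


def implied_voltage_ratio(p_old, f_old, p_new, f_new):
    """Solve P ~ C * V^2 * f for V_new / V_old, with C held constant.

    Crude assumption: treats rated TDP as if it were pure dynamic power.
    """
    return math.sqrt((p_new / p_old) / (f_new / f_old))


def relative_power(f_ratio, v_ratio):
    """Dynamic power relative to baseline for given clock and voltage ratios."""
    return f_ratio * v_ratio ** 2


v = implied_voltage_ratio(p_old=125, f_old=4.2, p_new=220, f_new=5.0)
print(f"clock +{5.0 / 4.2 - 1:.0%}, implied voltage +{v - 1:.0%}, "
      f"power +{relative_power(5.0 / 4.2, v) - 1:.0%}")
```

A roughly 19% clock bump costing roughly 76% more power is the whole argument in one line; treat the implied voltage figure as illustrative only, since the real chips also differ in binning.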

So... now that's over, what HAS Intel been doing since they decommissioned the NetBurst-based space-heater cores (did you know they managed to make dual-core variants of Prescott hit 3.73GHz on air at 65nm?)? Let's have a look:

2006: Core 2. Conroe was introduced. Based on an updated dual-core variant of the Pentium M, it gained instruction-set parity with the Pentium 4s and was finally fit for duty as the main platform. Clock speeds went down from the typical 3.2-3.6GHz to 2.4-3.0GHz, depending on variant and core count (1, 2 or 4).

2007: Penryn launch. A die-shrunk Conroe, among the first chips to hit 4GHz on air, it added SSE4.1 instructions, 50% more cache and other minor tweaks. Very similar to the CPU-die changes from SB -> IVB, for example.

2008: Nehalem got pushed out. Here, we find Intel implementing the first third of its platform upgrade: the memory controller was moved onto the CPU die, leaving the Northbridge (renamed to an I/O Hub) but a shell of its former glory, now nothing more than a glorified PCI Express controller. It also introduced triple-channel RAM. Together with the IMC-CPU integration, this made the memory system of Nehalem much faster, and finally competitive with the similar approach AMD had implemented first with their K8 (S754 and S940, later S939, then Phenom) CPUs many years ago. The x86 core itself was an updated Penryn/Conroe core, itself an updated P6 core as used in the Pentium Pro, 2 and 3. Nehalem also saw the return of HyperThreading, not seen since the Pentium 4. It was a nice performance boost at the time.

On the very high-end server market, Intel introduced the hilariously expensive Dunnington 6-core chip (Xeon 7400 series). Interestingly, Dunnington is based on Penryn. Over the years, we'd end up seeing more and more of the high-end chips launch later than the mainstream laptop and desktop chips.

2009: Lynnfield: a smaller, cheaper variant based around Nehalem cores. This implemented the second piece of Intel's platform evolution by moving the PCIe controller onto the CPU itself. This left only the southbridge as an external component (now renamed the Platform Controller Hub, or PCH), itself connected via a modified PCIe link Intel called DMI.

2010: Arrandale, Clarkdale, Westmere and Nehalem-EX. Arrandale, Clarkdale and Westmere were 32nm die-shrinks of the Nehalem chips. The Westmere core was an updated Nehalem core, adding a few more instructions, the most interesting being the AES-NI instruction set, which does AES crypto very, very fast compared to software implementations.

Arrandale and Clarkdale were the mobile and desktop dual-core variants. With these chips, Intel finally integrated its iGPU into the CPU package. Fun fact: the GPU was 45nm while the rest was 32nm on these chips.

Westmere was the desktop chip, only two variants of which were ever launched under the i7 moniker: the 980 and 990X, most notable for bringing 6-core CPUs to the desktop, but not much else.

The Nehalem-EX (aka Beckton) on the other hand was Intel's new crowning jewel, with 8 HyperThreaded cores, lots of cache, a quad-channel memory controller, a brand new socket (LGA1567, to fit the pins needed for the increased I/O) and compatibility with 8 sockets and beyond (for the 7500 series at least). After 2 years, Dunnington was finally put to rest, and really high-end servers finally got the much improved memory controller and I/O Hub design.

2011: Sandy Bridge (LGA1155), Westmere-EX.

Sandy Bridge was the third and final piece of Intel's platform evolution, introducing a brand-new core built from the ground up, in sharp contrast to what had so far (outside of a brief stint with NetBurst) been a long, long line of repeatedly extended P6 cores. The results of this new architecture showed themselves immediately: available in up to quad-core, hyperthreaded variants, Sandy Bridge was a fair bit faster than the higher-end i7-900 series above it. Sadly for the server people, the immense validation timescales needed for server chips meant that by this stage, the desktop platform was basically a generation ahead of the servers, and thus while desktop users enjoyed the benefits of Sandy Bridge, servers made do with Westmere-EP. Thanks to this new architecture, Intel was also able to push TDP quite far down (to 17W, in fact) and launched the Ultrabook initiative to push thin, light, stylish laptops in an effort to revitalize the PC market.

Westmere-EX: a comparatively minor update to the MP platform, adding more cores (now up to 10 HyperThreaded cores) and the newer, updated instructions of the Westmere core.

2012: Sandy Bridge-E/EP, Ivy Bridge.

Ivy Bridge was a simple instruction-set update and a heftier-than-expected iGPU upgrade, together with a 22nm die shrink from the 32nm used on Westmere and Sandy Bridge. Fairly simple stuff: reduced power consumption, slightly improved performance. Thanks to the extremely low power and much improved GPU, IVB made the Ultrabook form factor far, far less compromised than the previous SB-based machines. IVB-Y CPUs were also introduced, allowing for 7W chips, in the hopes of seeing use in tablets. Due to die-cracking issues, Intel was forced to use TIM inside their heatspreader rather than solder, and still has to even now on the smaller chips. This reduces thermal conductivity and makes overclocking the K-series chips harder if one does not wish to delid their CPU.

Sandy Bridge-E/EP: introduced the LGA2011 socket, bringing quad-channel RAM and the shiny new SB cores to the mainstream server market and finally replacing the aging i7-900 series of chips. These came in variants ranging from 4 cores all the way up to 8 cores. They did not find their way into the MP platform, leaving MP users to slog onwards with the aging Nehalem-EX and Westmere-EX chips, though 4-socket variants of SB-E were released.

2013: IVB-E/EP, Haswell.

And now we reach the end of the line, with Haswell. Haswell is a CPU entirely focused on reducing power consumption to the minimum possible, though there is still a performance improvement of 10-20% overall, with much bigger improvements possible when using the AVX2 instructions. The TSX instructions were expected to provide a large improvement for multi-threaded tasks by making concurrent systems easier to program. Sadly, these were bugged, and Intel released a microcode update to disable the instructions.

IVB-E/EP were direct die-shrink upgrades to SB-E/EP, allowing for even more cores (up to 12 now) and higher clockspeeds.

2014: IVB-EX, HSW-E/EP, Broadwell-Y

IVB-EX finally launches (January), bringing with it yet another new socket, LGA2011-1. Thanks to moving part of the memory controller onto an external module, the CPUs support much larger amounts of RAM. Add an increased core count of up to 15 cores per CPU, plus higher IPC and clock speeds than Westmere-EX, and DBAs and Big Data analysts are overjoyed at being able to put huge amounts of data into much faster RAM.

HSW-E/EP launched later in the year (September), bringing with it more cores (now up to 18 cores per CPU), memory improvements from moving to DDR4 (requiring a new socket, LGA2011-3, along the way), as well as new instruction set extensions, most notably AVX2. HSW-E is also notable for being the first consumer 8-core CPU.

BDW-Y (launched as Core M) also launches in September, providing very low-power CPUs for tablet usage. These are pure die-shrinks, so there are almost no performance improvements. Due to low 14nm yields, no other CPUs based on BDW are launched.

2015: BDW-U, BDW-H, BDW-C, BDW-E/EP/EX, Xeon-D, HSW-EX, Skylake

BDW-U, the low-power Ultrabook-optimised CPUs, launch in February. Most laptops, now powered by Ultrabook CPUs regardless of size class, update to it.

BDW-H, now primarily targeted at SFF machines (though still usable in high-performance laptops, with a maximum TDP of 47W), and BDW-C (full-sized desktops) are repeatedly delayed. Some market placement changes mean that the unlocked BDW-C CPUs now have the top-of-the-line iGPU, including a large eDRAM cache. These are expected to be short-lived on the market, as Skylake launches soon after.

BDW-E/EP/EX: much like the rest of the BDW family, these are expected to be pure die-shrinks and drop-in replacements for HSW-E/EP/EX. Expect the server variants to add even more cores.

The Xeon-D SoC also launches in 2015, without much fanfare. These have 4 or 8 BDW cores with HT and dual-channel DDR4 support, and integrate the PCH onto the same die as the CPU. The CPU is BGA-only, but with an 80W TDP, it far outpaces the Xeon E3 platform for CPU-bound tasks, uses less power and simplifies motherboard design. It also integrates 4 Ethernet controllers (2 gigabit and 2 10-gigabit), 24 PCIe 3.0 lanes from the CPU and 8 PCIe 2.0 lanes from the integrated PCH.

HSW-EX: the final server segment Intel hadn't launched HSW for. Basically, it brings up core counts, adds the new, fixed TSX-NI instructions and brings DDR4 support to the 4+ socket server market. Thanks to the external memory modules (called buffers by Intel) being, well, external, these can also run DDR3 memory when fitted with DDR3 memory risers.

Skylake: another mobile-focused release, with the focus still on reducing power consumption. Aside from the socketed desktop and server platforms, all Skylake CPUs are now SoCs, with the PCH integrated into the CPU package, with the expectation of reduced power consumption and simpler board design.

In conclusion: desktop performance improvements slowed down when Sandy Bridge was launched, because all the obvious improvements had been implemented by then. What remained was to improve power efficiency as far as it could go, so Intel went just there. The fact that they were able to extract 5-20% of performance improvement (depending on which generational jump you look at) while also dropping power consumption across the board, AND improving power efficiency to the point where a full Broadwell core can be used in a fanless environment like a tablet, is simply a testament to the sheer excellence of Intel's engineering teams. On the server side, we've seen a steady, relentless increase in core counts every generation. Combined with the improvements in IPC, this means that a modern 2699 v3 CPU is easily over 4 times as fast as the Dunnington chip that brought Intel into the 8-socket market to compete with IBM POWER (and now OpenPOWER) and SUN (now Oracle) SPARC. Oddly enough, this has made those machines cheaper as a direct result of providing serious competition (yes, Intel providing competition to lower prices?! Who woulda thought!).
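The "over 4 times as fast" claim can be sanity-checked with a simple throughput model: chip throughput ≈ cores × clock × per-core, per-clock throughput (IPC, with HyperThreading folded in). The specs used here are assumptions added for this sketch, not from the comment: an 18-core E5-2699 v3 at its 2.3 GHz base clock versus a 6-core Dunnington Xeon X7460 at 2.66 GHz, ignoring turbo and memory effects.

```python
def required_per_core_gain(target_speedup, cores_new, ghz_new, cores_old, ghz_old):
    """Per-core, per-clock throughput gain needed to reach target_speedup.

    Model: chip throughput ~ cores * clock * per-core-per-clock throughput.
    """
    core_ratio = cores_new / cores_old
    clock_ratio = ghz_new / ghz_old
    return target_speedup / (core_ratio * clock_ratio)


# Assumed specs: E5-2699 v3 (18 cores, 2.3 GHz base) vs Xeon X7460 (6 cores, 2.66 GHz).
gain = required_per_core_gain(4.0, cores_new=18, ghz_new=2.3, cores_old=6, ghz_old=2.66)
print(f"needed per-core, per-clock gain: {gain:.2f}x")
```

So under this model the claim holds if per-core, per-clock throughput improved by roughly 1.54x between Penryn-class and Haswell cores. The generational gains discussed later in this thread make that plausible, but the model alone can't prove it.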

Now sure, I can be a whiny crybaby and whine about how Intel isn't doing anything, resting on their laurels, fucking the desktop market over and not improving performance, but I have to look at the big picture (mostly because I have to in order to choose which server and server CPU I need for my dual-socket server), and in the big picture, Intel has been continuously improving. As much as I'd love to see a socketed, overclockable variant of the Xeon-D (preferably with ECC memory support), I also completely understand why Intel hasn't introduced such a CPU yet: it's simply unneeded for the vast, vast majority of people, from the most basic facebook poster all the way up to the dual-GPU gamers. For those who want even more, we've had the LGA1366, then LGA2011, then LGA2011-3 platforms, PLX-equipped motherboards (for up to quad-card setups) and dual-GPU graphics cards available to us. Sure, Intel could price everything lower, but I ask you, honestly, if you were in Intel's position, would you not charge more for higher-performing parts?

So sure, I am excited about next-gen, more for the high-end server chips than anything else, but Xeon-D and Skylake integrating the PCH into the CPU is also very exciting to me - for the first time ever we might have a completely passive x86 chip, but with good graphics! How is that not exciting?! How is it not exciting that we'll have x86 CPUs in our phones that we can drop into a dock and have the PHONE drive full Windows desktop apps? Or do full dual-boot between multiple OSes?! I am excited, and I'm really happy I am; it's just that my current excitement is over slightly different things than 12-year-old me would be excited about.

Finally, a little note about Mantle, DX12 and Vulkan: see my above comment about concurrency being hard. A direct consequence of that is that optimizing the API is easier than writing high-performance concurrent code. And you won't find your average game studio, releasing a new game every 2-3 years, investing anywhere near as much time into making their games scale well with threads as, say, the people working on PostgreSQL or MapReduce or Ceph, especially at the rate gamedevs are paid and the hours they work.

EDITS: Corrected S940 to K8, as pointed out by newtekie1, various spelling corrections, clarifications and added HSW-EX (which I completely forgot about)

I read it all, but I was lost for most of it. LOL!

I mean, everyone has a laptop or phone of their own these days...

Three of us can play at a time, and in a pinch, I could take my FX-9590 out of the box and set it up too.

So they don't all get daily use. I usually use two at a time. (one for movies and music, the other for myself to surf with)

:toast:

With the rate smartphone chips are going (30% increase annually -- which is where desktop CPUs should be), it won't be long before they catch up to desktop CPUs. That's how much Intel has ruined the desktop market in the last 5 years, by notoriously taking advantage of the monopoly it has.
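How soon "it won't be long" arrives depends on compounding. If phone chips grow at a rate g_phone per year and desktop chips at g_desktop, a performance gap of G closes after log(G) / log((1 + g_phone) / (1 + g_desktop)) years. In the sketch below, the 4x gap and the 5% desktop rate are hypothetical placeholders; only the 30% figure comes from the comment.

```python
import math


def years_to_close(gap, g_fast, g_slow):
    """Years until a chip growing at g_fast per year catches one growing at
    g_slow, starting `gap` times slower. Assumes both rates stay constant."""
    if g_fast <= g_slow:
        return math.inf  # the slower-growing chip is never caught
    return math.log(gap) / math.log((1 + g_fast) / (1 + g_slow))


# Hypothetical inputs: a 4x gap, phones +30%/yr, desktops +5%/yr.
print(f"{years_to_close(4.0, 0.30, 0.05):.1f} years")
```

The result is very sensitive to the growth rates staying constant, which is exactly the assumption a later reply in this thread disputes.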

Something tells me that when AMD releases its Zen CPUs, and if they end up as good as AMD claims, Intel will give its CPUs an unusual extra performance bump the following generation (albeit a small one, just enough to stay ahead of AMD). All just out of random coincidence, of course, as something-something this-or-that allowed Intel to improve its technology further at exactly that moment in time...

It was heady stuff. I learned something every day that I went to work.

(All numbers sourced from AnandTech, because they're easy to get, reliable and pretty accurate)

Conroe (Core 2 65nm) to Penryn (Core 2 45nm): 5-15%, depending on test. Most of this can be directly attributed to the larger cache size and possibly increased memory bandwidth (most Penryn desktops came with faster DDR3 memory). On more "pure" CPU-bound tests, and on more memory-intensive work where the data no longer fits in the cache, the improvement is about 5%. On stuff like the Excel Monte Carlo test, which fits more nicely in the cache, the boost is larger.

Penryn to Nehalem: 15-30%, depending on test. The big gains in memory-sensitive items like video rendering and multithreading benchmarks point to moving the memory controller onto the CPU as a large contributor to the improved performance. Other benchmarks like Excel Monte Carlo show that the x86 core itself didn't improve that much that generation. With HyperThreading, the gap grows even larger, more than 40% in some cases. What this shows you quite clearly is that the x86 core is already not being fully loaded by typical workloads, even if the usage graph shows 100%: it simply can't get enough instructions to keep its execution units busy. For cases like gaming, this is not particularly relevant (concurrency is hard, yo), but for render farms, databases, virtualization, HPC, big data, and low-power cores (non-exhaustive list here, ofc, and you should always test your own loads anyway), HT makes a lot of sense.

Nehalem to Westmere: getting benchmarks for this is somewhat harder. Anyway, we still have single-threaded benchmarks on AT, so let's use those (you can also do some math on the multithreaded benchmarks to get the 4->6 core boost out, though then other factors like memory and disk performance are no longer equalised). We see basically a 2% improvement in pure x86 performance. Pretty much irrelevant, but you're not buying Westmere for IPC improvements, you're buying it to get more cores, which is why, aside from the 980X and 990X CPUs, these were server-only.

Westmere to Sandy Bridge: 0-30% improvement, with an average of about 20%. This is what happens when you get to redesign the entirety of the CPU core. Not much else to say, really... The much-loved, much extended P6 architecture that's been trudging on since the Pentium Pro in 1995 (16 years of pretty much continuous use in 2011, when SB launched) is finally put to rest.

Sandy Bridge to Ivy Bridge: 2-10%, around 3% overall. Just a straight die-shrink and, in the case of LGA1155 and laptop CPUs, a much improved GPU. At this point, Intel is well into its pretty firm tick-tock cycle. The real benefit of IVB was the power consumption, of course, and on the server side, the 8->12 core jump works wonders where it's used.

Ivy Bridge to Haswell: 0-3% typical, with peaks of 10-15% in places. With Haswell being a purely power-consumption-focused design, Intel made its required improvement-per-watt ratio even harsher (basically twice as hard, though I can't remember if it's %/W or W consumed vs W consumed by the previous arch). In practice, the lack of IPC improvements on the tock affects only the small gaming/higher-end desktop market, which is a pretty small market for Intel: most of the market has either moved to laptops (unless you need lots of performance, which most users browsing facebook don't, laptops are just more practical than a desktop), doesn't mind paying the premium for high-end chips (the big, sometimes dual-CPU Xeon machines) or lives in serverland, where the cost of adding more cores and/or CPUs is basically irrelevant compared to RAM, dev time, bandwidth, licensing, support or any combination thereof.
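Per-generation gains compound multiplicatively rather than add, though at these sizes the difference is tiny. A minimal sketch, taking the roughly 3% quoted above for SNB -> IVB and either end of the 0-3% range for IVB -> HSW:

```python
import math


def cumulative_gain(per_gen_gains):
    """Total fractional gain after applying each generation's gain in turn."""
    return math.prod(1 + g for g in per_gen_gains) - 1


print(f"{cumulative_gain([0.03, 0.03]):.1%}")  # SNB -> IVB -> HSW, best case
print(f"{cumulative_gain([0.03, 0.00]):.1%}")  # same, with no Haswell gain
```

The best case lands right at about 6% total since SNB.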

So, since SNB, we've seen around 6% of IPC improvement, along with some rising clock speeds and more cores. Why's that, you ask? Well, let's do some educated speculation (warning: nitty-gritty details follow, and external research is expected if you don't understand something):

CPUs these days, beyond a certain point of performance (basically once you're out of the truly low-power embedded market), are all superscalar architectures, because having multiple EUs (Execution Units, as Intel calls them) in your pipeline is an obvious way to improve performance. Of course, in order to fully use a superscalar architecture, you have to be able to keep all the EUs loaded and executing, not just sitting there idle. As you add more EUs to a fixed in-order pipeline, it gets harder to keep them all loaded, because the next instruction wants an EU that's already in use, even though there are more instructions behind it that could already be executing.

The solution, quite obviously, is to dynamically re-order the instructions during execution, then put the results back into program order once they complete (retirement) before committing them, and thus Intel built their first Out-of-Order (OoO) core in 1995 with the Pentium Pro's P6 core.
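The in-order stall and the OoO fix described above can be made concrete with a toy cycle counter. This is a deliberately simplified sketch, not a model of the P6 or any real core: instructions are assumed independent (no data hazards), there is exactly one unit of each type, units are not pipelined, and the OoO scheduler is idealised to pick any ready instruction from an 8-entry window. The `Instr` and `run` names are made up for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    unit: str     # execution-unit type this instruction needs
    latency: int  # cycles the unit stays busy (units assumed non-pipelined)


def run(program, units, issue_width, out_of_order, window=8):
    """Return the cycle at which the last instruction completes."""
    busy_until = {u: 0 for u in units}  # cycle at which each unit is free again
    pending = list(program)
    cycle = finish = 0
    while pending:
        # In-order issue only ever takes from the head of the queue; the OoO
        # scheduler may pick from the oldest `window` instructions.
        candidates = pending[:window] if out_of_order else pending
        issued = set()
        for i, ins in enumerate(candidates):
            if len(issued) == issue_width:
                break
            if busy_until[ins.unit] <= cycle:
                busy_until[ins.unit] = cycle + ins.latency
                finish = max(finish, cycle + ins.latency)
                issued.add(i)
            elif not out_of_order:
                break  # a stalled instruction blocks everything behind it
        pending = [ins for i, ins in enumerate(pending) if i not in issued]
        cycle += 1
    return finish


MUL, ALU = Instr("mul", 3), Instr("alu", 1)
program = [MUL, MUL, ALU, ALU, ALU, ALU]
units = {"mul", "alu"}
print("in-order:", run(program, units, issue_width=2, out_of_order=False))
print("out-of-order:", run(program, units, issue_width=2, out_of_order=True))
```

On this six-instruction program with a 2-wide issue stage, the in-order core needs 7 cycles because the second multiply blocks the ALU work queued behind it, while the OoO core slips the ALU instructions into the multiplier's shadow and finishes in 6.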

Alternatively, you can add SMT (simultaneous multithreading; HyperThreading is Intel's implementation of SMT). The POWER8 architecture, for example, runs 8 threads per core and is competitive with the equivalent Intel chips. Interestingly enough, SMT works well when paired with OoO architectures as well, which is why Intel implemented it, first on NetBurst, then later with a new implementation on Nehalem.
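Why SMT helps keep EUs fed can be shown with a similar toy model: each hardware thread still issues strictly in order, but in any cycle the core may fill its issue slots from whichever thread has an instruction whose unit is free. Again a simplified sketch with hypothetical names, not how HyperThreading or POWER8 actually arbitrate: no data hazards, one non-pipelined unit per type, and a fixed thread priority for simplicity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    unit: str     # execution-unit type this instruction needs
    latency: int  # cycles the unit stays busy (units assumed non-pipelined)


def run_smt(threads, units, issue_width):
    """Cycle at which the last instruction completes.

    Each hardware thread issues strictly in order, but each cycle the core
    fills up to `issue_width` slots from any thread whose next instruction
    finds its unit free (threads are tried in a fixed priority order).
    """
    busy_until = {u: 0 for u in units}
    queues = [list(t) for t in threads]
    cycle = finish = 0
    while any(queues):
        slots = issue_width
        for q in queues:
            # Issue from this thread until it stalls or the slots run out.
            while q and slots and busy_until[q[0].unit] <= cycle:
                ins = q.pop(0)
                busy_until[ins.unit] = cycle + ins.latency
                finish = max(finish, cycle + ins.latency)
                slots -= 1
        cycle += 1
    return finish


MUL, ALU = Instr("mul", 3), Instr("alu", 1)
thread_a = [MUL] * 3  # multiply-heavy thread
thread_b = [ALU] * 6  # ALU-heavy thread
units = {"mul", "alu"}

serial = run_smt([thread_a + thread_b], units, issue_width=2)  # one thread, back to back
shared = run_smt([thread_a, thread_b], units, issue_width=2)   # two SMT threads
print(f"back to back: {serial} cycles, SMT: {shared} cycles")
```

Run back to back, the ALU sits idle while thread A waits on the multiplier (12 cycles); with two SMT threads, thread B's ALU work fills those idle slots (9 cycles).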

Even when you have your EUs fully loaded (ideal case), you can still make the core faster: faster EUs, even more EUs, faster/better OoO re-order logic and queues, new instructions to do specific things better, all things Intel did over the years with the Pentium 2, 3, M, Core, Core 2, Nehalem and Westmere. Eventually, though, all this extending hits its limit, and Intel hit it with Westmere.

Consequently, with Sandy Bridge Intel built a new core from scratch, with all the lessons they learnt over the years, and the 20% average improvement is the result of that.

Now, where do we go from there? Well, we do some tweaking (that's how we get a 3% improvement every gen), but by and large, Intel has its optimisation right up there at the limit for the obvious cases. For more, you would need either more expensive EUs (in power and die area), more EUs, or new instructions paired with new EUs.

If Intel forcibly went ahead with more expensive EUs, it would end up producing less performance on its very large-die, high-core-count chips, purely because it would not be able to fit in as many cores, and that would make it lose competitiveness against the POWER- and SPARC-based CPUs, something Intel does not want, especially after it and AMD have spent some 15 years or so basically shunting PPC and SPARC clear out of the datacenter. Thanks to AMD not producing anything competitive on the server side of things, Intel pretty much owns the server market right now, and anything that threatens its domination of the server market simply cannot be left unaddressed. And so, more expensive EUs generally aren't added, because they don't meet the performance-improvement-per-watt ratio Intel demands.

The other option is to add more targeted instructions, and the EUs to go with them. Intel did exactly that with AVX, for example. AVX2 is an interesting example, because it uses a lot of power. So much so that Intel basically has two TDPs for its AVX2 chips, one with AVX2 use and one without. This is allowed because the AVX2 variant of a program that can use AVX2 runs twice as fast as the non-AVX2 version, so the 30% increased heat load is perfectly acceptable.
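The trade-off is just energy = power × time. Taking the comment's figures (2x speedup for about 30% more heat) as given, and assuming the higher power level holds for the whole job, the AVX2 path uses about 35% less energy per job:

```python
def energy_per_task(speedup, power_ratio):
    """Energy for a fixed job relative to the baseline.

    energy = power * time, and time scales as 1 / speedup.
    """
    return power_ratio / speedup


def perf_per_watt(speedup, power_ratio):
    """Throughput per watt relative to the baseline."""
    return speedup / power_ratio


# Figures from the comment: AVX2 code ~2x faster at ~30% more power.
print(f"energy per job: {energy_per_task(2.0, 1.3):.2f}x")
print(f"perf per watt:  {perf_per_watt(2.0, 1.3):.2f}x")
```

Any extension that speeds code up by more than it raises power wins on both metrics, which is why a hotter unit can still pass a perf-per-watt bar.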

This rounds up how you do core improvements in the CPU core itself.

Now, we have platform improvements. For most modern CPUs, wire bandwidth limitations come into play, so the single biggest improvement you can make is to move the memory controller into the CPU. This shortens the length of the connection to the CPU cores massively. The next step will be bringing in much larger near-CPU memory, through stuff like HMC and HBM, and that will happen on GPUs first.

When Intel did it, the FSB (1.6GT/s at most, with 1.06GT/s and 1.33GT/s being far more common) gave way to QPI links running at up to 6.4GT/s on the first-gen Nehalem cores, with the memory itself hanging directly off the on-die memory controller. It also reduced memory latency by a fair chunk, which is always good for reducing cache pressure.
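Transfer rates in GT/s only turn into bandwidth once multiplied by the bytes moved per transfer, so FSB and QPI figures aren't directly comparable. A small sketch, assuming the standard widths: a 64-bit (8-byte) FSB data bus, 16 data bits (2 bytes) per QPI transfer in each direction, and 8 bytes per DDR3 channel, with DDR3-1066 approximated as 1.066GT/s.

```python
def bandwidth_gbs(gigatransfers_per_s, bytes_per_transfer, links=1):
    """Peak bandwidth in GB/s = transfer rate x bytes per transfer x links."""
    return gigatransfers_per_s * bytes_per_transfer * links


fsb = bandwidth_gbs(1.6, 8)                       # 1.6GT/s FSB, 64-bit bus
qpi_each_way = bandwidth_gbs(6.4, 2)              # 6.4GT/s QPI, one direction
qpi_both_ways = 2 * qpi_each_way                  # QPI links are full-duplex
ddr3_1066_x3 = bandwidth_gbs(1.066, 8, links=3)   # triple-channel DDR3-1066

print(f"FSB {fsb:.1f} GB/s | QPI {qpi_each_way:.1f} GB/s each way, "
      f"{qpi_both_ways:.1f} GB/s total | 3x DDR3-1066 {ddr3_1066_x3:.1f} GB/s")
```

Per direction, QPI at 6.4GT/s roughly matches the fastest FSB; on the single-socket desktop parts, the bigger win is that triple-channel DDR3 feeds the on-die controller directly.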

So to conclude: performance improved fast from Pentium Pro to Core 2 because of clock speeds. Performance improved fast from Core 2 to SNB because of platform and core improvements. Now that Intel is pretty much at the top, it has one extremely high-margin market, the server market, and one very large market, the mobile market. Both can be served using the same design approach, cores that are as low-power as possible: the mobile market because we genuinely, truly have enough performance with a piddly dual-core for 4K video streaming and just about anything else I'd want to do on a laptop or tablet, the server market because most server workloads either scale quite well with cores or can be made to.

Now let's have a look at ARM. ARM has been an embedded processor design ever since it got shunted out of the desktop market quite quickly (back when it was still called "Acorn RISC Machine"). The iPhone, as well as all the old Windows Mobile and Symbian phones, used ARM because it was very low-power in absolute terms and easy enough to hand-optimise. In terms of IPC, they sucked even compared to what we consider incredibly poor on the desktop, the Pentium 4.

Since the iPhone came along, and thanks to increased competition from Android, vendors left, right and center, as well as ARM itself, started working on high-performance, high-clocked ARM CPUs, largely by integrating the various bits of tech the desktop machines have been steadily, steadfastly improving for a very long time.

Much like the desktop, they are hitting their limits too, right about now in fact: the Cortex-A57 cores (a scratch-built, fully OoO core with dual-channel memory and an embedded memory controller; does this remind you of another Intel chip? Cause it reminds me of SNB of all things) overheat in the average device they're put in, because you simply can't passively cool more than around 4W in a phone, especially if you don't have a direct metallic connection from the CPU to the chassis. Samsung's 14nm chips do better, but they're right at the limit themselves.

The Cortex-A72 is hoping to fix the power consumption and heat output issues, but by and large, the improvement rate in phones is also going to start slowing down quite quickly because the quick, obvious and large jumps (wide superscalar (more than 2-issue/EU), OoO, SMT and SMP (multi-core and multi-CPU)) have been made. Some more work will happen, but the ARM legion is about to hit their wall quite soon and when that happens, bye-bye 30% a year improvements.

If AMD Zen is the panacea AMD hopes it will be, Intel will just make some pricing changes. A new architecture is highly unlikely IMO, not with the way the market is going and the OEM success of Core M at breaking into the tablet market, where the good OEMs want the SoC to provide compelling performance (battery life, smoothness) to users above all else, which is why the HSW Y-series (the predecessor to Core M) saw almost no use: it was too power-hungry.

Thank you.

From what I can understand, you're trying to make the point that Intel actually is doing the best it can, listing a long line of "what to do" options for improvement and explaining why they are not realistically feasible. But isn't growth the whole point of technology and innovation, whether through modification, reversal, or any other solution that leads to advancement? Take the Space Race during the Cold War. Engineers on both sides could talk and write about what needed to be done to reach their potential, and where they were already restricted in the early 60s. 10 years later they could be applying certain standards and technology that conflicted with the "limitations" mentioned earlier, while at the same time a whole new generation of "challenges, limitations, can-dos and can't-dos" would have appeared. Also, take a look at the magnitude of achievements reached during those 30-40 years of racing between two superpowers, and compare it to the 30 years that have followed since it ended. Why do you think that is?

Also, what you say about smartphone chips seems quite odd to me. The Exynos 7420 made an extreme bump in performance this year, and Samsung is expected to make an equally big jump next year with its rumored custom "Mongoose" cores (somewhere around a 40% increase in single-core performance, according to rumours). The same is the case with Qualcomm. The Snapdragon 810, despite being a catastrophe of sorts, made a 40% increase in performance over the SD801, and Qualcomm is expected to continue making big increases with the Snapdragon 820 (its first 14nm chip). There are several players producing chips in that market, and I attribute the pace to that wide range of competitors. The accelerated innovation is also seen in other areas: display technology, camera technology, memory, sound, etc. It was only 2 years ago that Samsung's AMOLED displays were butchered for being bad in every aspect but viewing angles and black levels. Today they are vastly superior to anything else. DisplayMate, an industry standard in display technology, went as far as calling the display "visually indistinguishable from perfect, and very likely considerably better than your living room TV". What does this tell us, when they compare the screen of such a small and cheap device to much larger and more expensive televisions, whose single purpose is display quality? We can sit here and make a list of why this is the case based on factual details. But the reality is that the most important factor is the stimulus for growth and technology that is forced to make its way into customers' hands in an increasingly competitive market.

Intel's R&D budget is immense, to say the least, and yet the "alternative" paths away from the stagnation of CPU technology have been provided by smartphone manufacturers or by companies much smaller than Intel: heterogeneous systems or Graphics Core Next, by AMD and ARM, for example. I find that really atypical, given the enormous difference in size between Intel and those companies. Or take the fact that every year, Intel CPUs get performance bumps just barely large enough to justify their sale; releases they could have skipped, mind you. Or what about the constant new sockets forcing customers to keep buying new hardware? Are there any logical reasons (reasons, not pretexts) for those?

I don't know about you, but I have the feeling that Intel processors will give us bigger increases once other companies (ARM) and their technologies and solutions start threatening Intel in the desktop and laptop market. Skylake seems to be the first of these. What do you think?

As for why Intel makes minor bumps on the desktop, it's mostly so they can retire their older fabs for retooling to produce newer generation chips. If it were more economically feasible to keep producing older generation chips and update less often, they'd do just that. Westmere is a good example of that line of logic: they passed on updating any quad-core Nehalem CPUs on the consumer side. Same story for why there wasn't a consumer variant of Dunnington. It's a bit of a kick in the teeth, but it simply wasn't worth the effort because it wouldn't sell for long, or sell very much. At the end of the day, desktops and laptops are commodities, and aside from us enthusiasts, nobody is asking for more performance, so they get small incremental upgrades every year as Intel shrinks and tweaks for servers and its mobile aspirations, highly reminiscent of the releases of ever so slightly faster Pentium 4 and Athlon chips every few months back in the day.

On the ARM side, the Exynos 7420 is a nice boost in performance, but it also has most of the benefits of a 14nm node (the interconnects are 20nm, even if the transistors are 14nm), which allow it to run cooler than the competition. The S810 vs S801 comparison under thermal throttling is the interesting one, since you can see the power-performance tradeoff curve quite directly: given the same cooling, the S810 is barely any faster than the S801. I also think the 7420 is barely competitive with Atom at the moment, but I'm still waiting on an Asus ZenFone 2 review from somewhere decent like AT to get a good look at its performance vs the 7420 in the SGS6.

On the forthcoming architectures side, the A72 looks to be around 30% faster, but then you're potentially (but hopefully not) back into the power/heat argument. At the end of the day, though, ARM is still moving fast because they're still catching up to bleeding-edge x86/POWER designs. I expect them to slow down sometime in the next 2-5 years as ARM polishes their OoO designs: A15/A57 are by and large first-generation designs, where correctness and reliability are the absolute priority rather than pure performance. Next come the incremental improvements to their OoO parts. At the same time, Intel is becoming quite successful at scaling absolute power consumption down on their big x86 cores (see Core M). The "alternative" path, as you put it, isn't particularly alternative; it's just that Intel and AMD have walked that road since 1995, while ARM joined in around 2009, with their first true high-performance core shipped in 2012. No matter how you view it, 17 years is enough to get the obvious innovations out and optimized as perfectly as possible for a given typical load. IBM was the first to use OoO, in POWER1 in 1990, and despite the 5-year head start is currently pretty much on par with Intel, which is quite obvious in hindsight: they have very similar die sizes, with the biggest Xeon at 662mm² and the biggest POWER8 at 649mm². Power consumption is worse on the POWER8 machine, but then again, the Xeon can't hope to catch up to POWER8's memory implementation.

Overall design isn't really relevant, but I think I can see what you're getting at: companies breaking the established status quo by improving regardless of necessity. On a phone it's easy to improve things like screens (much easier on small panels than on large ones), but that's driven more by an incessant need to one-up the competition than by a desire to provide a better experience.

For the multiple actors thing, it's not as big a deal as it seems: there's QComm, who by and large own the Western mobile market, while MediaTek, Allwinner and other Taiwanese/Chinese vendors own the low end. On the sidelines you have Samsung, who often go for a QComm chip for integration and battery life reasons even though they produce Exynos chips; Nvidia, who have exited the mobile market in favour of high-performance embedded systems like those in cars; LG, who haven't shipped anything of their own (to my knowledge); and Apple, who don't sell components. Everything else ARM is so far away from the consumer market it's not really worth talking about.

Personally, what I expect to see when AMD (or ARM, though they're unlikely to affect anything desktop-/laptop-side) puts up something competitive with Intel for the desktop and server market (I assume they will, eventually, even if only barely competitive) is Intel enabling HT on the i5s and upping the i7s to 6-core designs on the mainstream socket, something I view as a simple market reshuffle rather than a major improvement. Sure, six cores instead of four is 50% faster under the right conditions, but it's not really much R&D effort compared to redesigning an ALU, or to making the chip reliable at higher clocks without relying purely on binning and allowing more heat.
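The "50% faster under the right conditions" figure is simply the ratio of six cores to four; how much of it a real program sees depends on how much of its work actually runs in parallel, which ties back to the gaming benchmarks mentioned earlier in the thread. A minimal sketch using Amdahl's law, assuming equal clocks and ignoring turbo, memory bandwidth and cache effects (the function names are hypothetical, chosen only for illustration):

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup over a single core per Amdahl's law (idealized: equal clocks, no memory limits)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


def six_vs_four(parallel_fraction: float) -> float:
    """Relative gain of a hypothetical 6-core part over a 4-core part at the same clock."""
    return amdahl_speedup(parallel_fraction, 6) / amdahl_speedup(parallel_fraction, 4)


if __name__ == "__main__":
    # Perfectly parallel work gets the full 1.5x; mostly serial work gets far less.
    for p in (1.0, 0.9, 0.5):
        print(f"parallel fraction {p:.1f}: 6-core is {six_vs_four(p):.2f}x the 4-core")
```

Under these assumptions only a perfectly parallel workload reaches the full 1.5x; at 90% parallel the gain drops to about 1.3x, and at 50% it is only around 7%.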