Monday, January 22nd 2018

AMD Reveals Specs of Ryzen 2000G "Raven Ridge" APUs

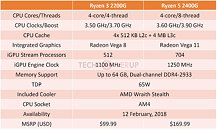

AMD today revealed specifications of its first desktop socket AM4 APUs based on the "Zen" CPU micro-architecture, the Ryzen 2000G "Raven Ridge" series. The chips combine a quad-core "Zen" CPU with an integrated graphics core based on the "Vega" graphics architecture, with up to 11 NGCUs, amounting to 704 stream processors. The company is initially launching two SKUs, the Ryzen 3 2200G and the Ryzen 5 2400G. Besides clock speeds, the two are differentiated by the Ryzen 5 featuring CPU SMT and more iGPU stream processors. The Ryzen 5 2400G is priced at $169, while the Ryzen 3 2200G goes for $99. Both parts will be available on the 12th of February, 2018.

The Ryzen 5 2400G features a 4-core/8-thread CPU clocked at 3.60 GHz, with a boost frequency of 3.90 GHz; 2 MB of L2 cache (512 KB per core) and 4 MB of shared L3 cache; and Radeon Vega 11 graphics (the 11 denoting the NGCU count), featuring 704 stream processors. The iGPU engine clock is set at 1250 MHz. The integrated memory controller supports up to 64 GB of dual-channel DDR4-2933 memory. The Ryzen 3 2200G is a slightly cut-down part. Lacking SMT, its 4-core/4-thread CPU ticks at 3.50 GHz, with 3.70 GHz boost. Its CPU cache hierarchy is unchanged; the iGPU features only 8 out of 11 NGCUs, which translates to 512 stream processors. The iGPU engine clock is set at 1100 MHz. Both parts feature unlocked CPU multipliers, have their TDP rated at 65 W, and include AMD Wraith Stealth cooling solutions.
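The stream-processor counts above follow from a simple multiplication, and the same spec-sheet numbers give rough theoretical ceilings. The following is a minimal Python sketch, assuming 64 stream processors per Vega NGCU, one fused multiply-add (2 FLOPs) per stream processor per clock, and 64-bit DDR4 channels. The helper names are hypothetical, and the results are paper peaks derived from the listed clocks, not benchmark figures.

```python
# Hypothetical helpers (not from AMD): derive headline figures from the spec sheet.
SP_PER_NGCU = 64  # assumption: each Vega NGCU contains 64 stream processors


def stream_processors(ngcus: int) -> int:
    """Total stream processors for a given NGCU count."""
    return ngcus * SP_PER_NGCU


def peak_fp32_gflops(stream_procs: int, clock_mhz: int) -> float:
    """Theoretical FP32 peak: one FMA (2 FLOPs) per stream processor per clock."""
    return stream_procs * 2 * clock_mhz / 1000


def ddr4_bandwidth_gbs(channels: int, mts: int, bus_bits: int = 64) -> float:
    """Theoretical memory bandwidth in GB/s for `channels` x `bus_bits`-bit DDR4 at `mts` MT/s."""
    return channels * bus_bits / 8 * mts / 1000


# (NGCU count, iGPU engine clock in MHz) as listed in the article
parts = {
    "Ryzen 5 2400G": (11, 1250),
    "Ryzen 3 2200G": (8, 1100),
}

for name, (ngcus, clock) in parts.items():
    sp = stream_processors(ngcus)
    print(f"{name}: {sp} SPs, ~{peak_fp32_gflops(sp, clock):.0f} GFLOPS FP32")

print(f"Dual-channel DDR4-2933: ~{ddr4_bandwidth_gbs(2, 2933):.1f} GB/s")
```

Run as-is, this prints 704 SPs and about 1760 GFLOPS for the 2400G, 512 SPs and about 1126 GFLOPS for the 2200G, and about 46.9 GB/s for dual-channel DDR4-2933. That memory bandwidth is shared between the CPU and the iGPU, which is one reason fast RAM matters more on these APUs than on CPUs paired with a dGPU.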

97 Comments on AMD Reveals Specs of Ryzen 2000G "Raven Ridge" APUs

So yes, it might be up to the motherboards, but the Pro line is not an argument.

The Bulldozer chips supported it too, and I'm not aware of any Bulldozer Pro line.

It's going to be the best IGP, save for the Intel+Vega combo, which isn't sold as a standalone product for DIY clients, and the Nintendo Switch, which is a console.

If you expect anything more from it, that's on you. You want 1080p ultra gaming? Go for a dedicated video card; don't ask a small CPU+GPU combo limited to a 65 W envelope to do that.

Other than that, you're pretty much right, even if you could express it slightly less aggressively :p

Now I want a Threadripper with a full Vega IGP with HBM.

You can compare which is smoother or better looking from a user-experience point of view, but it IS NOT, and CANNOT be, used as a performance benchmark unless the graphical details are 100% the same on both platforms (and that gets even more complicated for games that use different settings for the various hardware models of each console).

Threadripper isn't necessary though, it's pretty useless for gaming. Full Ryzen 8c16t die with on-package 24CU Vega Mobile GPU and HBM, though? Yes please.

For me, there's a clear cut: the IGP is for desktop work, and for games you need at least a mid-range dGPU. Anything in between is just money down the drain: it will not play games well, and it will not make Office or your browser of choice perform any better.

Let's just wait for benchmarks and see where this stands, instead of passing definitive judgment based on pictures and impressions, OK?

Still, given the good CPU performance we know Zen cores deliver, paired with a "good enough for esports" iGPU, these chips would make for amazing budget-level gaming boxes with the option of adding a dGPU down the line. This argument was also made for the previous A-series, but those fell flat because their weak CPU performance left them unable to drive anything but a bargain-bin dGPU. I'm hoping this manages to live up to that expectation (i.e. run DOTA, CS:GO, RL and Overwatch at 1080p medium or so), as that could be a great market-share win for AMD. Which, again, is why I find it worth my time to speculate on and discuss. Of course, we can't know until we see benchmarks, so the discussion is ultimately meaningless. That doesn't mean it's a waste of time, though :P

This is just plain misunderstood. Of course you can compare them even if they have different graphical settings. It's even quite simple, as they both have fixed (non-user-adjustable) graphical settings. It simply adds another variable, meaning that you have to stop asking "which performs better at X settings?" and start asking "which can deliver the best combination of performance and graphical fidelity for a given piece of software?". This does of course mean that the answers might also become more complex, with possibilities such as better graphical fidelity but worse performance. But how is that really a problem? This ought to be far easier for the average user to grasp than the complexities of frame timing and 99th-percentile smoothness, after all.

You seem to say that performance benchmarks are only useful if they can provide absolute performance rankings. This is quite oversimplified: the point of a game performance benchmark is not ultimately to rank absolute performance, but to show which components/systems can run [software X] at the most ideal settings for the end user. Ultimately, absolute performance doesn't matter to the end user; perceived performance does. If a less powerful system lowers graphical fidelity imperceptibly and manages to match a more powerful system, the two are for all intents and purposes identical to the end user. To put it this way: if a $100 GPU lets you play your favourite games at ~40 fps and a $150 GPU runs them at ~60 fps, that difference is perceivable for most users. On the other hand, if a $120 GPU runs them at ~45 fps, is there any reason to buy the more expensive GPU? Arguably not.

Absolute performance doesn't matter unless it's perceivable. Of course, this also brings into question the end user's visual acuity and sensitivity to various graphical adjustments, which is highly subjective (and at least partially placebo-ish, not entirely unlike the Hi-Fi scene), but that doesn't change if you instead try measuring absolute performance. The end user still has to know their own sensitivity to these things, and ultimately decide what matters to them. That's not the job of the reviewer. The job of the reviewer is to inform the end user in the best way possible, which multi-variable testing arguably does better than absolute performance rankings (as those have a placebo-reinforcing effect, and ignore crucial factors of gameplay).

- Gigabyte A320M-S2H-CF motherboard
- 8 GB Patriot DDR4-2400
- ADATA M.2 SSD, 128 GB (fast Win10 boot)
- RECADATA Industrial MLC Series SATA3 SSD, 128 GB

$220 system

I'm amazed at the all-round performance for this price.

The Ryzen 3 2200G's single-thread and multi-thread performance is impressive,

especially when compared with the previous-generation FX chips, which are priced about the same at $99.

The upgradability of AM4 is great IMO.

The Vega graphics do a decent job at gaming for the price of this APU.

Adding a discrete video card will boost the value even more.

So far so good for the AMD Ryzen series.

*Please note the above is just my opinion, based on one month of Windows 10 gaming/multimedia use.

Had time to try Ark (the requested game) and both Persona 5 and Breath of the Wild (I love to benchmark with console emulators). The PS3 emulator ran great, apart from wanting more threads (the PS3 is an eight-core monster, and you have to emulate that); it even performed better than my 1200 + 270X combo or my brother's G4560 + 1030, thanks to the IGP sharing the RAM, which means less internal latency when emulating the PS3 components. It was a different story with Breath of the Wild: the tri-core emulation, full GPU usage and the bad AMD OpenGL drivers pushed the CPU into power throttling, so performance was worse than the G4560 + 1030 in this case because of the reduced CPU frequency. The same happens with the old FX APUs, but you get 30 FPS with Zen instead of 11.

The weird thing is, it was an A320 motherboard, but it did have overclocking and voltage options.