Monday, January 28th 2019

MSI Monitors are Now G-Sync Compatible

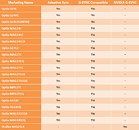

Following NVIDIA's announcement of its newest drivers, MSI monitors are effectively G-Sync Compatible! This technology allows G-Sync to be used on Adaptive Sync monitors. G-Sync, an anti-tearing, anti-flickering and anti-stuttering monitor technology designed by NVIDIA, was once exclusive to monitors that had passed NVIDIA certification. With the newest release of the NVIDIA GPU driver, NVIDIA now allows G-Sync to be used on monitors that support Adaptive Sync technology when they are connected to an NVIDIA graphics card.

At the moment, not all Adaptive Sync monitors on the market are perfectly G-Sync Compatible. MSI, on the other hand, has been constantly testing Adaptive Sync monitors to determine if they are G-Sync Compatible. Below are the test results.

*Models not listed are still under internal testing

Graphics cards used for the test:

- MSI RTX 2070 Ventus 8G

- MSI RTX 2080 Ventus 8G

- MSI GTX 1080 Gaming 8G

System requirements for G-Sync Compatible mode:

- Graphics card: NVIDIA 10 series (Pascal) or newer

- Graphics driver version 417.71 or later

- DisplayPort 1.2a (or above) cable

- Windows 10

- Only a single display is currently supported; multiple monitors can be connected, but no more than one display should have G-SYNC Compatible enabled
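
The requirements above can be read as a simple checklist. The following is a minimal sketch of that checklist in Python, not an MSI or NVIDIA tool: `SystemInfo`, `parse_version` and `unmet_requirements` are hypothetical names, the values are entered by hand rather than detected, and treating "1.2" the same as "1.2a" is a simplification.

```python
from dataclasses import dataclass

# Thresholds taken from the requirements list in the article.
MIN_GPU_SERIES = 10          # GeForce 10 series (Pascal)
MIN_DRIVER = (417, 71)       # 417.71 itself is accepted here (assumption)
MIN_DISPLAYPORT = (1, 2)     # "1.2a"; the letter suffix is ignored (simplification)
REQUIRED_OS = "windows 10"


@dataclass
class SystemInfo:
    """Hypothetical, hand-filled description of a system."""
    gpu_series: int              # e.g. 10 for GTX 1080, 20 for RTX 2070
    driver_version: str          # e.g. "417.71"
    displayport_version: str     # e.g. "1.2a" or "1.4"
    os_name: str                 # e.g. "Windows 10"
    gsync_enabled_displays: int  # displays with G-SYNC Compatible turned on


def parse_version(text):
    """Turn '417.71' or '1.2a' into a tuple of ints, dropping letters."""
    parts = []
    for piece in text.split("."):
        digits = "".join(ch for ch in piece if ch.isdigit())
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def unmet_requirements(info):
    """Return a list of human-readable problems; empty means all checks pass."""
    problems = []
    if info.gpu_series < MIN_GPU_SERIES:
        problems.append("GPU is older than the NVIDIA 10 series")
    if parse_version(info.driver_version) < MIN_DRIVER:
        problems.append("driver is older than 417.71")
    if parse_version(info.displayport_version) < MIN_DISPLAYPORT:
        problems.append("DisplayPort cable is below 1.2a")
    if info.os_name.strip().lower() != REQUIRED_OS:
        problems.append("operating system is not Windows 10")
    if info.gsync_enabled_displays > 1:
        problems.append("more than one display has G-SYNC Compatible enabled")
    return problems


if __name__ == "__main__":
    rig = SystemInfo(20, "417.71", "1.2a", "Windows 10", 1)
    print(unmet_requirements(rig) or "all listed requirements met")
```

Passing these checks only mirrors the list; it says nothing about whether a particular monitor model behaves well with G-Sync Compatible enabled.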

22 Comments on MSI Monitors are Now G-Sync Compatible

So much for selling some weird premium form of Gsync at the end of the day, Huang. That ain't gonna fly. You lost this one, now get back to work on that driver TY.

If it is - FWIW - I paid 379 EUR for this monitor... look around for a quality VA high refresh 1080p panel today and I'm not so sure it's a premium at all ;) Regardless, there are some arguments to be made here:

- ULMB / strobe only works with fixed refresh rates, so it doesn't mix with Gsync or FreeSync at all. The best Huang could sell today is 'we have a very expensive strobing backlight'. And it's not even a USP in today's market. So it's a very awkward proposition to make, when all you offer is a display mode that only works in a very specific use case, and excludes the main selling point of an adaptive sync monitor. They also can't pull an AMD and call it Gsync 2, because there is nothing new to offer. All Gsync monitors have ULMB.

- There are also monitors without Gsync and with strobe, like mine (it's also not ULMB, but a slightly different tech/implementation).

- Back then it was one of the first VA gaming panels around. Hard to compare to begin with, most of what you had with high refresh was TN or astronomically priced IPS (equally, or more expensive than this one at the time, and without strobe).

Not a big risk to MSI to expand business.

Go look at NVDA stock price this morning. They cut forward guidance once again, it's down 12% at the moment.

:peace::peace::peace:

The gsync module experience is still superior, let's not ignore that. Is or was it ever worth it? Maybe not for most of us :)

But assuming this driver-enabled gsync experience is the same is wrong.

And this doesn't mean I don't agree with you. For example, Nvidia considers the Asus XG248Q Gsync Compatible on their official list. If you try Gsync on that monitor and compare it with, let's say, a Samsung FG73, the difference is clear. A Gsync module monitor still offers a superior VRR experience that can't be ignored.

You can also notice how many times a monitor without a Gsync module hits the ceiling, unless you cap to 120fps. On a Gsync module monitor you can get away with a 141fps cap and be assured it will never hit the ceiling. Hitting the ceiling will cause either:

- tearing

- input lag

It is more precise when you have a module, while with the driver the monitor adjustments are all over the place, again, depending on the model. You can notice it more on some compared to others.

You can visit Blur Busters if you are interested in reading about the differences. With that being said, Gsync is still too expensive and that's the problem. Getting just a good FreeSync monitor that works well with driver-enabled gsync is a much smarter option. But simply saying this driver Gsync is the same as the module one is not right, trust me.

Oh, compared to what? The stock of AMD, which is worth as much as $115 less than that of NVIDIA? LOL yeah, great job there. Oh, AMD is also currently at a 7% drop.

We all know the mining craze of 2018 HUGELY inflated the stock for NVIDIA and it has dropped because that craze is long over.

I don't think stock prices and G-Sync being free are all of a sudden related.

I run NV and G-sync. I haven't seen reviews saying the experience on NV and Freesync is favorable over NV and G-sync. They say it is the cheaper monitor option but if you want the best you will pay for the best. I am sure more YouTubers will be making more NV and Freesync videos as this ramps up onto more monitors.

Wait for the right deals and don't buy things on day 1 release ;) I picked up mine on a killer deal and no regrets.

If it was equal, NVIDIA would or will stop making G-SYNC altogether, guess we will wait and see what happens. The price of G-sync monitors will likely drop as well.

It's not intended to, at least at present, tech hasn't advanced that far. You turn off G-Sync to use ULMB ... most find the break-off point to be between 70 and 80 *minimum* fps, but in speaking with users we've done builds for who game a lot more than I have time to ... most of them with the necessary GFX horsepower are using 1440p IPS AU Optronics 8 or 10 bit screens and using ULMB almost exclusively. It's not as if you use the 100 Hz or 120 Hz setting and if you have 90 fps all goes to hell. Look at the reviews on TFTCentral... not having ULMB is considered a major con.

3.5+ years old but still relevant ...

www.tftcentral.co.uk/articles/variable_refresh.htm

The only thing new about G-Sync that I can recall was adding windowed mode and making more ports available. Wasn't that 2 or II thing a mistake? I remember something about Dell or someone announcing and then retracting a claim in this regard ... but hey, I'm old :)

Not all G-Sync monitors have ULMB.

www.tftcentral.co.uk/reviews/asus_rog_swift_pg348q.htm#conclusion

www.tftcentral.co.uk/reviews/asus_rog_swift_pg27uq.htm#conclusion

As for ULMB/strobe. Yes I can only agree, I really don't miss the variable refresh until I drop under 65-70 FPS on my current monitor, and I have strobe active all the time. I consider strobing a much stronger feature than variable refresh for any high end spec rig tbh.