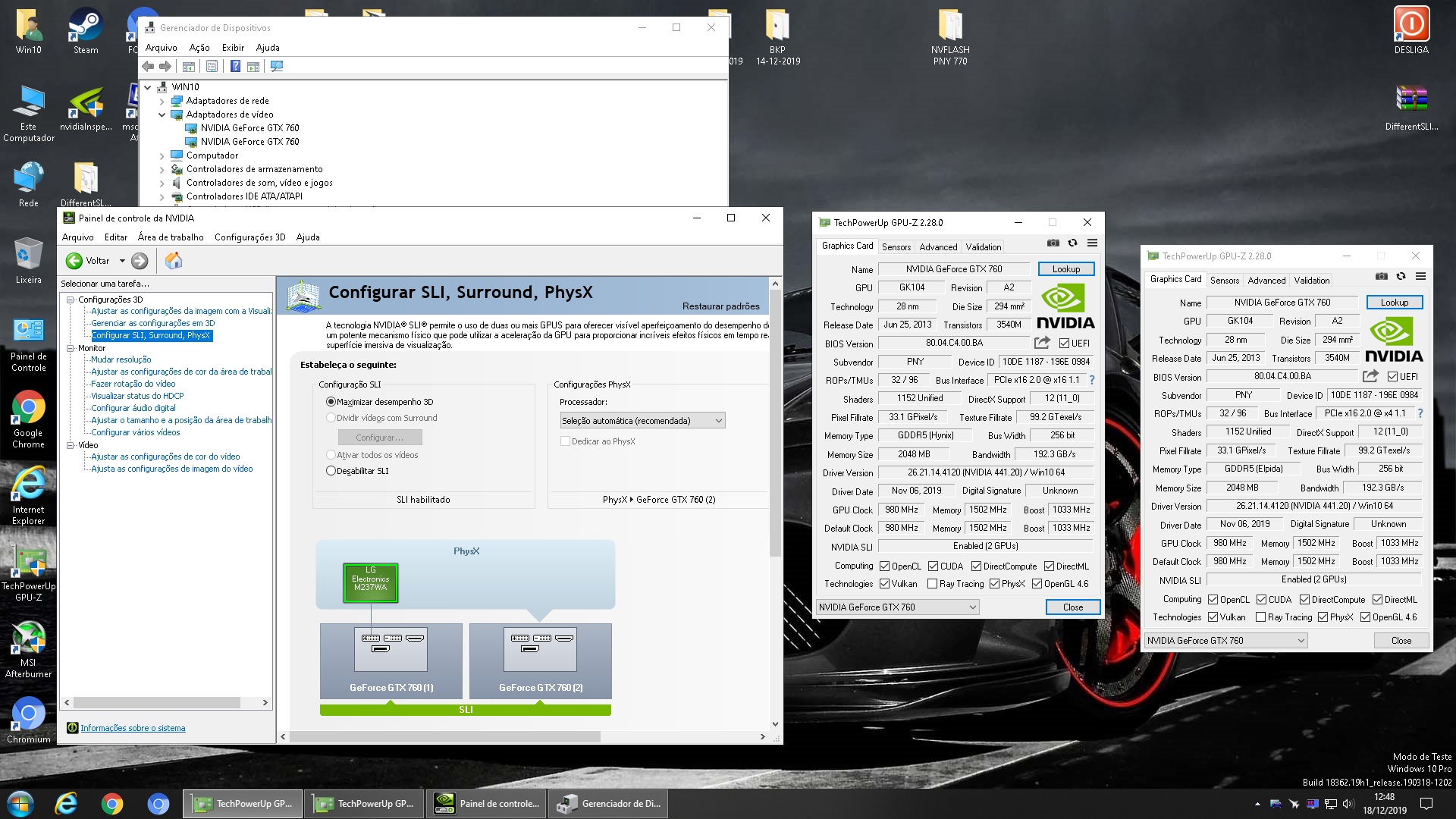

Not working for me. I followed all the steps, but no luck. If anyone has used DifferentSLIAuto successfully on a Chinese X79 motherboard, please let me know.

After studying a little, and with help from the forum, I was able to run two GTX 760s on a GA-Z77M-D3H.

I haven't tested the performance yet, but I'm looking forward to it.

Here is my contribution: I patched the nvlddmkm.sys file from driver version 441.41 (Windows 10 x64).

LINK:

https://www.sendspace.com/file/5wdlm7

An image and the already modified file are attached for download.

The installation procedure is the same as described in previous posts.

Thank you

View attachment 139419

View attachment 139421


SLI with different cards

- Thread starter anatolymik


- Joined

- Apr 25, 2017

- Messages

- 362 (0.13/day)

- Location

- Switzerland

| System Name | https://valid.x86.fr/6t2pb7 |
|---|---|
| Processor | AMD Ryzen 5 1600 |
| Motherboard | Gigabyte - GA-AB350M-Gaming 3 |
| Memory | Corsair - Vengeance LED DDR4-3000 16GB |
| Video Card(s) | https://www.techpowerup.com/gpudb/b4362/msi-gtx-1080-ti-gaming-x |
| Storage | Western Digital - Black PCIe 256GB SSD + 3x HDD |
| Display(s) | 42" TV @1080p (main) + 32" TV (side) |
| Case | Cooler Master HAF X NV-942 |
| Audio Device(s) | Line 6 KB37 |
| Power Supply | Thermaltake Toughpower XT 775W |
| Mouse | Roccat Kova / Logitech G27 Steering Wheel |
| Keyboard | Roccat Ryos TKL Pro |
| Software | Windows 10 Pro x64 v1803 |

What if I don't delete DifferentSLI and then switch to a different setup, e.g. from two 1060s to one 2070? Would that cause any errors or problems?

No.

But after going back to a single card, you'd better do a clean driver install anyway, just to get rid of test signing mode.

What is the worst possible scenario if I don't remove DifferentSLI when going back to a single card?

- Joined

- Apr 25, 2017

- Messages

- 362 (0.13/day)

- Location

- Switzerland


I don't expect any problems from doing that, but you probably wouldn't want to keep test signing mode active for nothing.

So you would at least revert that by installing an unmodified driver and running:

    bcdedit.exe /set loadoptions ENABLE_INTEGRITY_CHECKS
    bcdedit.exe /set NOINTEGRITYCHECKS OFF
    bcdedit.exe /set TESTSIGNING OFF
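If you would rather script the revert, here is a minimal Python sketch that wraps exactly the three commands above. The function name and the dry-run switch are my own additions for illustration; it only prints the commands unless you explicitly turn the dry run off, and it would need an elevated prompt to actually run them.

```python
import subprocess

# The three commands from the post above, as argument lists.
REVERT_COMMANDS = [
    ["bcdedit.exe", "/set", "loadoptions", "ENABLE_INTEGRITY_CHECKS"],
    ["bcdedit.exe", "/set", "NOINTEGRITYCHECKS", "OFF"],
    ["bcdedit.exe", "/set", "TESTSIGNING", "OFF"],
]


def revert_test_signing(run=subprocess.run, dry_run=True):
    """Print each command; execute it only when dry_run is False.

    `run` is injectable (hypothetical convenience) so the function can be
    exercised without touching the boot configuration.
    """
    for cmd in REVERT_COMMANDS:
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError if bcdedit reports failure
            run(cmd, check=True)
    return REVERT_COMMANDS


if __name__ == "__main__":
    # Dry run by default: nothing is changed, the commands are only listed.
    revert_test_signing(dry_run=True)
```

A reboot is still needed afterwards for the boot options to take effect.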


Raul Chartouni

New Member

- Joined

- Oct 29, 2019

- Messages

- 2 (0.00/day)

In my previous post I shared the nvlddmkm.sys file from version 441.41.

But I had crashes in some games.

So I edited 441.20 instead, and now it works fine.

Link:

https://www.sendspace.com/file/ifsmug

Driver for Windows 10 64-bit.

The only problem I'm having now is with GRID 2019.

It's a DirectX 12 game, and I think this API doesn't support multi-GPU.

As shown in the image, GPU 2 is not being used.

If anyone knows a way around this, please share.

Thank you


- Joined

- Mar 27, 2019

- Messages

- 12 (0.01/day)

I wrote a small Python script that should be able to automatically generate an SLI-hacked driver file for all recent versions (I tested back to 391.35 and up to the current one, 441.66). You can get it here. Note that this only patches the .sys file for you; it doesn't install it (you still need to use the DifferentSLIAuto batch script), and it doesn't fix driver signature issues with anticheat. I've seen that come up again and again in this thread, and as I've discussed before, the permanent fix for it is https://github.com/Mattiwatti/EfiGuard.

Use the script by invoking it with the .sys file as an argument, e.g. python FreeSLI.py nvlddmkm.sys.

It will produce another file, nvlddmkm_SLI.sys, in the same directory. Back up your original, then rename this file to nvlddmkm.sys and run the DifferentSLIAuto install script as normal.

An explanation of how it works at the assembly level is in the comments of the Python source. Basically, I disassembled the stock driver file and a hacked one modified with Pretentious's bytes, and examined the actual assembly that his byte patch was changing. I did this on a recent driver as well as on the oldest one he provided, 391.35. Interestingly, I found that his byte patch changed sometime during the r410 branch. He used to replace the JNZ and BTS instructions with a single MOV, which was sufficient for the bypass to work. Later, he started replacing the preceding TEST instruction as well, and letting the last two bytes of the BTS instruction fall off into a new instruction, namely AND AL, 0xe. I'm not sure why he changed this, but my theory is that this side effect probably doesn't matter, since the AL register isn't used for a while afterwards. Regardless, I decided to use his old set of instructions, since they should leave the other registers and flags in a state closer to what they would have been had the hack not taken place. If it is sufficient to enable SLI, I prefer this solution to his newer one.

After that it's a simple matter of opening the file in a memory map, finding a common marker nearby, then modifying the bytes at the proper offset.

Note that this is not doing anything intelligent with function tracing. It is not a universal solution and will break if there are significant changes to the byte marker used to locate the correct instructions. It is, however, encouraging that the code has been stable enough to keep this marker for the better part of 2 years now. I'd guess that Nvidia has an extremely stable toolchain for compiling their driver, so unless they make changes to this function directly, it will probably keep working. When it stops working... I guess I'll try my hand at patch diffing in Ghidra.

Usual disclaimers apply: I make no promises this will work, I'm not responsible for your 2080 Tis catching on fire, etc.
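For readers who want to see the shape of the "memory map, marker, offset" step described above, here is a minimal sketch. It is not the FreeSLI.py source: the marker bytes, the offset, and the patch bytes below are placeholders made up for illustration, and the function name is hypothetical. Only the general technique (copy the file, mmap the copy, locate a unique marker, overwrite bytes at a fixed distance from it) follows the description in the post.

```python
import mmap
import shutil
from pathlib import Path

# PLACEHOLDERS for illustration only. These are NOT the real driver bytes.
MARKER = bytes.fromhex("DEADBEEF00112233")  # hypothetical byte pattern near the patch site
PATCH_OFFSET = 8                            # hypothetical distance from marker start to patch site
PATCH = bytes.fromhex("9090")               # hypothetical replacement bytes


def patch_copy(src, marker=MARKER, offset=PATCH_OFFSET, patch=PATCH):
    """Write a patched copy next to `src` (name gets an _SLI suffix) and return its path."""
    src = Path(src)
    dst = src.with_name(f"{src.stem}_SLI{src.suffix}")
    shutil.copyfile(src, dst)  # never modify the original in place
    try:
        with open(dst, "r+b") as fh, mmap.mmap(fh.fileno(), 0) as mm:
            pos = mm.find(marker)
            if pos == -1:
                raise ValueError("marker not found; this build is not supported")
            if mm.find(marker, pos + 1) != -1:
                raise ValueError("marker occurs more than once; refusing to guess")
            start = pos + offset
            if start + len(patch) > len(mm):
                raise ValueError("patch site lies beyond the end of the file")
            # Slice assignment must keep the length identical, which it does here.
            mm[start:start + len(patch)] = patch
            mm.flush()
    except Exception:
        # Do not leave an unpatched file with the _SLI name lying around.
        dst.unlink(missing_ok=True)
        raise
    return dst
```

Refusing to patch when the marker is missing or ambiguous is the main safety net of this approach, since a blind write at a stale offset would corrupt the driver.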


- Joined

- Mar 27, 2019

- Messages

- 12 (0.01/day)

What Python version should I run for this? If I run the latest 3.8.1-amd64, it does not generate the _SLI.sys file (I tried both relative and absolute paths from the command prompt).

I'm running Windows 10. P.S. Could you share the .sys file for 441.66 by any chance?

EDIT: Managed to run the script, generate the alternate .sys, and run DifferentSLIAuto (install.cmd with the modified driver path, as admin, in safe mode, UAC off).

Sadly, the SLI option does not show.

I'm trying to get two 2080 Tis to run in SLI with one in x8 and one in x4. I'm using a riser on an X570 board, and the PCIe 3.0/4.0 mix is causing issues.

I was hoping this would 'force' SLI to work with one card in x4.

I've tested with 2x1080Ti's but I have a 2080Ti in waiting and will be receiving another one sometime this week which I plan to test with the hacked driver. NVLink may be doing something different, but I would wager that the issue with your setup is sadly physical. My testing case is a virtual machine passing through two fully-enabled x16 slots on a motherboard with SLI-compatible hardware; the only leap is tricking the driver into enabling it on the virtualized chipset. Have you gotten your setup to work using a manual patch with bytes provided by Pretentious? My application is based entirely off his method and it has some limitations; for instance, the old DifferentSLIAuto was generally more successful at getting mismatched SLI to work.

stunts513

New Member

- Joined

- Dec 24, 2019

- Messages

- 6 (0.00/day)

@DonnerPartyOf1

Nice script, it worked like a charm on the latest driver release for me, apart from Shadow of the Tomb Raider not wanting to initialize correctly, but I've always had issues with that game to some degree. I'm curious: I am also running a virtual machine, KVM with two 1070s in SLI. Have you had any issues with fullscreen games making the display driver go unstable? It happens to me more often than not and it is getting annoying. It has been doing it since before I tested your script, so I'm just wondering, since I don't think many people are using this method for virtualization. Usually when it happens, the driver goes unstable and stable again several times in a row, and then the VM is forced to reset.


- Joined

- Mar 27, 2019

- Messages

- 12 (0.01/day)


It would be difficult to diagnose without knowing more about the system. Are you using EfiGuard to load the hacked driver on-the-fly? I noted that doing so for the first time on installing a new driver version would often cause system instability, but subsequent attempts at the same would work fine after rebooting the VM.

A frequent source of crash-chaining is slow interrupt and DPC handling since the driver basically freezes while these routines catch up, then unfreezes when they do, just to freeze again on the next tick. In SLI the driver has to maintain these time-critical routines on both cards and keep them synced, so fast delivery of them out and into the VM is paramount... which can be affected by many things, highest on the list are:

- Use of message-signaled interrupts in the guest

- Proper interrupt mapping between guest and host (what host system platform are you on? Are you using AVIC/APICv? Are you certain they're configured properly? If you're not, are you using vAPIC? Which ioapic driver are you using? Are you using irqbalance in a manner that is consistent with these features?)

- Timer support (are you passing through an invariant TSC clock to the VM? HPET or no HPET? If using SynIC, also using STimer?)

- Resource isolation (are you using a dedicated emulator thread? IOThread? Dedicated vCPUs on a realtime scheduler? Did you make those physical cores tickless? Force the kernel to not run callbacks on them?)

There's a lot of shit that you can tweak on KVM. Gaming on metal is all about throughput; virtualized gaming is all about latency and accuracy.

stunts513

New Member

- Joined

- Dec 24, 2019

- Messages

- 6 (0.00/day)

OK, let's see how much of this I can answer; I haven't played around with the hypervisor in a while for some of this. In a way this reminded me of a problem I had that I'm now pretty sure is related. The short version: my audio has always glitched out on the VM every so often. I started running DPC-related tests at the time but never fixed it, because a Windows build update crashed the machine and I forgot about it.

The setup I have is a Ryzen 1700X based computer I built, running CentOS 7 (planning on upgrading to 8 soon). I do have EfiGuard running as well; it was totally useless as a gaming VM without it.

The guest was not using message-signaled interrupts for the GPU, based on the output from lspci while the VM is running (Capabilities: [68] MSI: Enable- Count=1/1 Maskable- 64bit+)

though I did just enable it in the guest for the GPUs after reading up on it. I think the latency went down a bit, but it still spikes at random and when games are trying to initialize.

Here's an example when launching Shadow of the Tomb Raider (before I get a GPU hung error):

View attachment 140766

Fair warning: I haven't played around with some of the settings you were referring to. I recognize them somewhat, so my answers may be a bit vague.

I'm a bit thrown off by AVIC. I'm assuming that since I have an AMD based PC I should be using it. I'm not that familiar with it, but I think I read it's only available in Linux kernel 4.7 and later unless it was backported, and since I'm on CentOS, I'm still using an older 3.10 kernel.

As to the ioapic driver, I'm also a bit confused on that, because I do see vapic on in my configuration but I'm not seeing ioapic in there. If I was supposed to define that in features, I didn't.

I'm seeing hpet as "not present" as well.

To be less vague, I'm going to pastebin my config, if that's alright.

https://pastebin.com/Gz1g3k7D

Any advice is appreciated. Hopefully I didn't trainwreck that info too much.
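As a side note on the lspci line quoted in the post above: the `Enable-` / `Enable+` suffix is what tells you whether MSI is currently active for the device. A minimal Python sketch for reading that state out of pasted `lspci -vv` text could look like this; the function name is hypothetical, and it assumes the capability lines follow the same `MSI: Enable±` / `MSI-X: Enable±` layout as the line quoted in the post.

```python
import re

# Matches "MSI: Enable+" / "MSI: Enable-" and the MSI-X variant.
MSI_RE = re.compile(r"\bMSI(?P<x>-X)?: Enable(?P<state>[+-])")


def msi_state(lspci_text):
    """Return a dict such as {"MSI": False} from lspci -vv capability lines."""
    result = {}
    for match in MSI_RE.finditer(lspci_text):
        kind = "MSI-X" if match.group("x") else "MSI"
        result[kind] = match.group("state") == "+"
    return result


line = "Capabilities: [68] MSI: Enable- Count=1/1 Maskable- 64bit+"
print(msi_state(line))
```

For the line from the post this reports MSI as disabled, which matches what was observed before MSI was switched on in the guest.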

- Joined

- Mar 27, 2019

- Messages

- 12 (0.01/day)


Use LatencyMon instead of DPC Lat Checker. LatencyMon tells you the exact ISR causing the long latency instead of making you guess. I'd bet money it's the Nvidia driver.

You should set up 2MB hugepages to reduce some pressure on the MMU.
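To size the hugepage pool, the arithmetic is just guest memory divided by the page size. A small sketch, assuming a hypothetical 16 GiB guest (substitute the memory size from your own domain config); the function name and the optional headroom parameter are mine:

```python
import math

HUGEPAGE_MIB = 2  # size of one hugepage in MiB, per the 2MB suggestion above


def hugepages_needed(guest_mib, headroom_pages=0):
    """Number of 2 MiB pages needed to back a guest of `guest_mib` MiB."""
    return math.ceil(guest_mib / HUGEPAGE_MIB) + headroom_pages


# Assumed example: a 16 GiB guest.
pages = hugepages_needed(16 * 1024)
print(f"vm.nr_hugepages = {pages}")
```

The resulting number is what you would set for the vm.nr_hugepages sysctl on the host before starting the VM.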

I don't have an AMD system (anymore, I used to run a 1950X but now it's a 7920X) so I can only tell you what I have gleaned from research. The first thing is that you need to distro hop or upgrade ASAP, CentOS 7 is way too old for this platform. They only get the bare minimum backports from upstream to make Ryzen work at all, you have none of the Ryzen-specific optimizations that have been made to KVM since then. I don't know the exact version of QEMU currently in the RH repo, but it's probably ancient; I saw a reference to QEMU 2.12 being used in CentOS this year and the current version is 4.2.0. The same applies here, QEMU has gotten a ton of updates specifically for Ryzen. Zen SMT implementation didn't even work right at all until QEMU 3.0 released.

For VFIO I recommend Arch or a derivative distro, though I know it can be scary to jump into a rolling-release. Personally I've only had an update break my system once, and it was because I was running Antergos which fucked up their localization settings when they pushed out their last dying breath update. When I switched all my packages to raw Arch and fixed my language, everything was good again. Otherwise I've only broken my system through my own stupidity (owning and using an Nvidia GPU on Linux, expecting their driver to survive a kernel update)

You should enable AVIC when you upgrade your kernel to one that supports it. Then in Libvirt you should disable vAPIC. vAPIC is a paravirtualized device that performs similar functions to what AVIC does in hardware and they are incompatible; therefore, turn off vAPIC to get faster hardware interrupt control support. Luckily for you, SynIC and therefore STimers can still work on AMD systems using AVIC, whereas on Intel systems using the comparable APICv tech, these enlightenments are incompatible. So you can enable those in your hyperv block too.
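Once you are on a newer kernel, one quick way to see whether AVIC is actually switched on is to read the kvm_amd module parameter from sysfs. This is a rough sketch, assuming the host loads kvm_amd and that it exposes an avic parameter at the path below; older kernels may not have it at all, in which case the function returns None. The function name is hypothetical.

```python
from pathlib import Path

# Assumed location of the parameter on a host with kvm_amd loaded.
AVIC_PARAM = Path("/sys/module/kvm_amd/parameters/avic")


def avic_enabled(param_path=AVIC_PARAM):
    """Return True/False for the module parameter, or None if it is unavailable."""
    try:
        value = Path(param_path).read_text().strip()
    except OSError:
        # Module not loaded, parameter missing, or not readable.
        return None
    # Depending on kernel version the value is shown as 1/0 or Y/N.
    return value in ("1", "Y", "y")


print("AVIC enabled:", avic_enabled())
```

If it reports False, the parameter is usually set as a module option when kvm_amd is loaded; check your distribution's documentation for where module options live.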

The ioapic driver might not be available for changing in your old version of libvirt, but it basically has 2 states: split = APIC split between userspace and kernel space; kvm = all APIC done in kernel space. The kvm mode can perform slightly better, but you can only use ioapic=kvm with AVIC and SynIC on very recent distributions; there was a fix that got put into the kernel just 2-3 months ago that enabled this combination. Before that, AVIC + SynIC only worked properly in split ioapic mode. On top of all that, split ioapic mode has been known to cause issues with the Nvidia driver, so you should strive to use ioapic=kvm if possible.

You should dedicate specific physical cores to the vCPUs in order to prevent threadwalking (use vcpupin). You also need to set a couple of CPU features that tell Windows how to handle the cache architecture and SMT on Zen. I'm also passing through an invariant TSC for timekeeping here; you should do that as well if your system has a stable TSC:

    <cpu mode='host-passthrough' check='none'>
      <topology sockets='1' cores='5' threads='2'/>
      <cache mode='passthrough'/>
      <feature policy='require' name='invtsc'/>
      <feature policy='require' name='topoext'/>
    </cpu>

The topoext feature exposes the Zen SMT arch and CCX split to the guest.

Can you afford to dedicate 5 entire cores to the VM while it's running? Consider using a real-time scheduler (vcpusched with fifo, priority 1) and process isolation: cpuset shielding, disabling kernel scheduling timer ticks on the dedicated cores, preventing RCU callbacks on those cores, and polling for RCU on the host cores instead of generating them via interrupt from the guest cores. Check the kernel options nohz_full=cset, rcu_nocbs=cset, and rcu_nocb_poll, where cset is the list of dedicated cores.
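To make the kernel options concrete, here is a small sketch that turns a set of dedicated host CPUs into the corresponding boot parameters. The CPU numbers in the example (6 through 15) are an assumption for illustration only; check `lscpu -e` on your own host to see which logical CPUs are SMT siblings before picking a set. The helper names are mine.

```python
def cpu_list(cpus):
    """Collapse CPU numbers into kernel list syntax, e.g. [2, 3, 4, 8] -> '2-4,8'."""
    ranges = []
    for cpu in sorted(set(cpus)):
        if ranges and cpu == ranges[-1][1] + 1:
            ranges[-1][1] = cpu  # extend the current run
        else:
            ranges.append([cpu, cpu])  # start a new run
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


def isolation_params(guest_cpus):
    """Build the nohz_full / rcu_nocbs / rcu_nocb_poll options for the guest CPUs."""
    cset = cpu_list(guest_cpus)
    return f"nohz_full={cset} rcu_nocbs={cset} rcu_nocb_poll"


# Assumed example: logical CPUs 6-15 are reserved for the VM.
print(isolation_params(range(6, 16)))
```

The printed string is what you would append to the kernel command line in your bootloader config.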

Can you afford to dedicate even more cores to your VM while it's running? Consider using emulatorpin to dedicate a thread for emulation processes. Also consider using iothread to dedicate a thread for your disk I/O.
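The pinning pieces mentioned so far (vcpupin, emulatorpin, vcpusched) all live in the cputune block of the domain XML. Writing ten vcpupin lines by hand is error-prone, so here is a rough sketch that generates the block. The host CPU numbers are an assumed layout, not a recommendation, and the function name is hypothetical; verify the element names and attributes against the Libvirt domain XML documentation for your version.

```python
import xml.etree.ElementTree as ET


def build_cputune(host_cpus, emulator_cpus):
    """Return a <cputune> block pinning vCPU i to host_cpus[i].

    host_cpus:     list of host logical CPU numbers, one per vCPU
    emulator_cpus: cpuset string for the emulator thread, e.g. "4-5"
    """
    cputune = ET.Element("cputune")
    for vcpu, host in enumerate(host_cpus):
        ET.SubElement(cputune, "vcpupin", vcpu=str(vcpu), cpuset=str(host))
    ET.SubElement(cputune, "emulatorpin", cpuset=emulator_cpus)
    # Real-time FIFO scheduling at priority 1 for all vCPUs, as suggested above.
    ET.SubElement(
        cputune,
        "vcpusched",
        vcpus=f"0-{len(host_cpus) - 1}",
        scheduler="fifo",
        priority="1",
    )
    return ET.tostring(cputune, encoding="unicode")


# Assumed example: 10 vCPUs (5 cores x 2 threads) on host CPUs 6-15,
# with the emulator thread on host CPUs 4-5.
print(build_cputune(list(range(6, 16)), "4-5"))
```

The vCPU count has to match the topology in the cpu block (5 cores with 2 threads each gives 10 vCPUs), and sibling vCPUs should land on sibling host threads.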

Unfortunately any advice I would have regarding the use of irqbalance is dependent on whether or not AVIC supports posted interrupts like Intel's APICv, but I can't find info on that. You could experiment with rebalancing kvm interrupts both exclusively onto and exclusively off of your vCPUs if nothing else works, but you have a lot to do before hitting this point.

Note that for any of the phrases I italicized in this post, you should be able to find more info on how to use them on the Libvirt domain XML page here.

Also, go join and ask questions at the /r/vfio subreddit.

stunts513

New Member

- Joined

- Dec 24, 2019

- Messages

- 6 (0.00/day)

Use LatencyMon instead of DPC Lat Checker. LatencyMon tells you the exact ISR causing the long latency instead of making you guess. I'd bet money it's the Nvidia driver.

You should set up 2MB hugepages to reduce some pressure on the MMU.

I don't have an AMD system (anymore, I used to run a 1950X but now it's a 7920X) so I can only tell you what I have gleaned from research. The first thing is that you need to distro hop or upgrade ASAP, CentOS 7 is way too old for this platform. They only get the bare minimum backports from upstream to make Ryzen work at all, you have none of the Ryzen-specific optimizations that have been made to KVM since then. I don't know the exact version of QEMU currently in the RH repo, but it's probably ancient; I saw a reference to QEMU 2.12 being used in CentOS this year and the current version is 4.2.0. The same applies here, QEMU has gotten a ton of updates specifically for Ryzen. Zen SMT implementation didn't even work right at all until QEMU 3.0 released.

For VFIO I recommend Arch or a derivative distro, though I know it can be scary to jump into a rolling-release. Personally I've only had an update break my system once, and it was because I was running Antergos which fucked up their localization settings when they pushed out their last dying breath update. When I switched all my packages to raw Arch and fixed my language, everything was good again. Otherwise I've only broken my system through my own stupidity (owning and using an Nvidia GPU on Linux, expecting their driver to survive a kernel update)

You should enable AVIC when you upgrade your kernel to one that supports it. Then in Libvirt you should disable vAPIC. vAPIC is a paravirtualized device that performs similar functions to what AVIC does in hardware and they are incompatible; therefore, turn off vAPIC to get faster hardware interrupt control support. Luckily for you, SynIC and therefore STimers can still work on AMD systems using AVIC, whereas on Intel systems using the comparable APICv tech, these enlightenments are incompatible. So you can enable those in your hyperv block too.

The ioapic driver might not be available for changing in your old version of libvirt, but it basically has 2 states. split = APIC split between userspace and kernel space; kvm = all APIC done in kernel space. KVM can perform slightly better but you can only use ioapic=kvm with AVIC and SynIC on very recent distributions, there was a fix that got put into the kernel just 2-3 months ago that enabled this combination. Before that, AVIC + SynIC only worked properly in split ioapic mode. On top of all that, split ioapic mode has been known to cause issues with the Nvidia driver, so you should strive to use KVM if possible.

You should dedicate specific physical cores to your vCPUs in order to prevent threadwalking (use vcpupin). You also need to set a couple of CPU features that tell Windows how to handle the cache architecture and SMT on Zen. I'm also passing through an invariant TSC for timekeeping here; you should do that as well if your system has a stable TSC:

<cpu mode='host-passthrough' check='none'>
  <topology sockets='1' cores='5' threads='2'/>
  <cache mode='passthrough'/>
  <feature policy='require' name='invtsc'/>
  <feature policy='require' name='topoext'/>
</cpu>

The topoext feature exposes the Zen SMT arch and CCX split to the guest.
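To go with the vcpupin advice above, here is a small sketch that generates the pinning lines for a 5-core, 2-thread guest. The host numbering (cores 1-5 with their SMT siblings at +6) is purely an assumption for illustration; check your real sibling pairs with `lscpu -e` before copying anything. `vcpupin_xml` is a hypothetical helper, not part of libvirt.

```python
def vcpupin_xml(sibling_pairs):
    """Build a libvirt <cputune> fragment from (thread_a, thread_b) host sibling pairs.

    Consecutive vCPUs are pinned to the two threads of one physical core,
    which matches a guest topology declared with threads='2'.
    """
    lines = ["<cputune>"]
    vcpu = 0
    for pair in sibling_pairs:
        for host_cpu in pair:
            lines.append(f"  <vcpupin vcpu='{vcpu}' cpuset='{host_cpu}'/>")
            vcpu += 1
    lines.append("</cputune>")
    return "\n".join(lines)


if __name__ == "__main__":
    # Assumed host layout: physical cores 1-5, SMT sibling of core N is N + 6.
    pairs = [(core, core + 6) for core in range(1, 6)]
    print(vcpupin_xml(pairs))
```

Keeping both threads of a host core together under adjacent vCPU numbers is the point of the exercise: the guest scheduler then sees sibling threads where they really are.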

Can you afford to dedicate 5 entire cores to the VM while it's running? Consider using a real-time scheduler (vcpusched with fifo, priority 1) and process isolation: cpuset shielding, disabling kernel scheduling timer ticks on the dedicated cores, preventing RCU callbacks on those cores, and polling for RCU on the host cores instead of generating them via interrupt from the guest cores. Check the kernel options nohz_full=cset, rcu_nocbs=cset, and rcu_nocb_poll.

Can you afford to dedicate even more cores to your VM while it's running? Consider using emulatorpin to dedicate a thread for emulation processes. Also consider using iothread to dedicate a thread for your disk I/O.

Unfortunately any advice I would have regarding the use of irqbalance is dependent on whether or not AVIC supports posted interrupts like Intel's APICv, but I can't find info on that. You could experiment with rebalancing kvm interrupts both exclusively onto and exclusively off of your vCPUs if nothing else works, but you have a lot to do before hitting this point.

Worth noting: you should be able to find more info on how to use any of the phrases I italicized in this post on the Libvirt domain XML page here.

Also, go join and ask questions at the /r/vfio subreddit.

Thanks, you have definitely given me some ideas to think about. I was also using LatencyMon; I just only posted the pics from DPC Lat Checker. I liked its graph style a bit better, but yeah, it's lacking in useful diagnostic info.

The highest reported DPC routine is usually either that or the DirectX graphics kernel.

I'm not sure how I feel about changing distros. I've wanted to try out Arch at some point, but the primary purpose of my hypervisor is that it runs my network. It runs a Windows server and a pfSense router for the other machines. The gaming machine was more like leftover resources I had to play with. I just upgraded the machine to 32 GB of RAM and got an NVMe drive that I'm going to migrate the OS and boot drives over to, as most of the boot drives are sitting on some partitions on a 4 TB array that I don't really want mixed with what the array was created for. I have another machine running CentOS 8 lying around; I might check its repos and see what they are using for QEMU on 8. I think CentOS 7 is literally on version 2.0. This will definitely make me want to upgrade sooner rather than later. I'm just going to do a fresh install on the NVMe drive and set it up based on the original OS. Depending on what is in the CentOS 8 repos I might consider switching distros; I just don't want to have to worry about the server side of the hypervisor going down after an update, as I will lose internet.

Thanks again for all the advice, i will be putting it to use and hopefully i can get rid of this annoying latency.


After studying a little, and with help from the forum, I managed to get two GTX 760s working in a GA-Z77M-D3H.

I haven't tested the performance yet, but I'm looking forward to it.

Here is my contribution:

I built a modified nvlddmkm.sys file from driver version 441.41 (Windows 10 x64).

LINK:

https://www.sendspace.com/file/5wdlm7

An image and the already-modified file are attached for download.

The installation procedure is the same as described in previous posts.

Thank you

View attachment 139419

View attachment 139421

Good morning Raul Chartouni, how are you?

I'm trying to put two GTX 970s in SLI (this GPU has groups that make it unworkable).

I'll try using your .sys file. Could you explain the procedure you followed in more detail, for example whether you installed in safe mode and which version of DifferentSLIAuto you used?

Thanks!

I've tested with 2x1080Ti's but I have a 2080Ti in waiting and will be receiving another one sometime this week which I plan to test with the hacked driver. NVLink may be doing something different, but I would wager that the issue with your setup is sadly physical. My testing case is a virtual machine passing through two fully-enabled x16 slots on a motherboard with SLI-compatible hardware; the only leap is tricking the driver into enabling it on the virtualized chipset. Have you gotten your setup to work using a manual patch with bytes provided by Pretentious? My application is based entirely off his method and it has some limitations; for instance, the old DifferentSLIAuto was generally more successful at getting mismatched SLI to work.

I managed to get my bifurcated slots running at x8x8 by providing more power to the 12v connector on the C_Payme riser card.

SLI/NVLINK still doesn't show up in my control panel though.

The manual patch by Pretentious does not seem to work, but I suspect that my Nvidia drivers auto-update (which I tried to disable based on the hardware_id using the Windows Group Policy manager).

I'd love to hear about your attempts once you get that second 2080ti.

Here are my system specs:

- MB: Asrock X570 Phantom Gaming-ITX/TB3

- CPU: Ryzen 9 3950x

- GPU1: EVGA 2080 Ti XC Ultra

- GPU2: EVGA 2080 Ti XC Ultra

- RAM: 32Gb G.SKILL Trident Z Neo 3600 ( PC4 28800)

- NVMe: Patriot VP4100 M.2 1TB

- SSD: 2x Samsung 860 EV



Your file didn't work for me.

I guess this file differs somewhat between user systems.

I say that because I modified mine with a hex editor, as you did, and it works fine for 441.20!

Thank you all, especially @P!nkpanther for the tutorials.

Just to explain better: EVGA built incompatible GTX 970 variants, as noted below:

So, with DifferentSLIAuto I got an EVGA GTX 970 SC (04G-P4-2974-KR) working together with an EVGA GTX 970 SSC (04G-P4-3975-KR).

We fixed this EVGA mistake!

Thank you all, again!

- Joined

- Dec 8, 2018

- Messages

- 59 (0.03/day)

| Processor | 2x Intel Xeon E5-2680 v2 |
|---|---|
| Motherboard | ASUSTeK COMPUTER INC. Z9PE-D8 WS |
| Memory | SAMSUNG DDR3-1600MHz ECC 128GB |
| Video Card(s) | 2x ASUS GEFORCE® GTX 1080 TI 11GB TURBO |
| Display(s) | SAMSUNG 32" UJ590 UHD 4k QLED |
| Case | Carbide Series™ Air 540 High Airflow ATX Cube Case |
| Power Supply | EVGA 1000 GQ, 80+ GOLD 1000W, Semi Modular |
| Mouse | MX MASTER 2S |
| Keyboard | Azio Mk Mac Wired USB Backlit Mechanical Keyboard |
| Software | Ubuntu 18.04 / [KVM - Windows 10 1809 / OSX 10.14] |

I wrote a small python script that should be able to automatically generate an SLI-hacked driver file for all recent versions (I tested back to 391.35 and up to current, 441.66). You can get it here. Note that this only patches the .sys file for you, it doesn't install it (you still need to use the DifferentSLIAuto batch script) and it doesn't fix driver signature issues with anticheat (I've seen this again and again in this thread but I've discussed it before, the permanent fix for this is https://github.com/Mattiwatti/EfiGuard.)

Use the script by invoking it with the .sys file as an argument, e.g. python FreeSLI.py nvlddmkm.sys.

It will produce another file, nvlddmkm_SLI.sys in the same directory. Backup your original, then rename this file to nvlddmkm.sys and execute the DifferentSLIAuto install script as normal.

An explanation of how it works on an assembly level is available commented in the python source. Basically what I did was disassemble the driver file and a hacked one modified with Pretentious's bytes and examined the actual assembly that his bytecode modification was changing. I did this on a recent driver as well as on the oldest that he provided, 391.35. Interestingly, I found that his bytecode modifications changed sometime during the r410 branch. He used to replace the JNZ and BTS instructions with a single MOV, which was sufficient for the bypass to work. Later, he started replacing the preceding TEST instruction as well, and allowing the last two bytes of the BTS instruction to fall off into a new instruction, that being AND AL, 0xe. I'm not sure why he changed this, but I theorized that this side effect probably does not matter since the AL register isn't used for a while after. Regardless, I decided to use his old set of instructions since they should leave the other registers and flags in a state closer to what they should have been in had the hack not taken place. If it is sufficient to enable SLI, I prefer this solution to his newer one.

After that it's a simple matter of opening the file in a memory map, finding a common marker nearby, then modifying at a proper offset.
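For readers who want to see the shape of that last step, here is a minimal sketch of a marker-and-offset patch using Python's mmap module. It is not the FreeSLI.py source: `MARKER`, `PATCH_OFFSET` and `PATCH` are made-up placeholder values, and `patch_copy` is a hypothetical function name. The real marker, offset and replacement bytes are documented in the script's own comments.

```python
import mmap
import shutil

# Placeholder values for illustration only; NOT the real driver bytes.
MARKER = bytes.fromhex("deadbeefcafe")  # byte sequence assumed to sit near the target
PATCH_OFFSET = 8                        # assumed distance from marker start to target
PATCH = bytes.fromhex("9090")           # assumed replacement bytes


def patch_copy(src: str, dst: str) -> int:
    """Copy src to dst, then overwrite len(PATCH) bytes relative to the first marker hit.

    Returns the absolute file offset that was patched. The source file is
    never modified, so the original stays available as a backup.
    """
    shutil.copyfile(src, dst)
    with open(dst, "r+b") as f, mmap.mmap(f.fileno(), 0) as mm:
        pos = mm.find(MARKER)
        if pos < 0:
            raise ValueError("marker not found; the driver layout may have changed")
        target = pos + PATCH_OFFSET
        if target + len(PATCH) > len(mm):
            raise ValueError("patch would run past the end of the file")
        # Slice assignment on an mmap requires the same length on both sides.
        mm[target:target + len(PATCH)] = PATCH
        mm.flush()
    return target
```

Failing loudly when the marker is missing matters here, because silently writing bytes at a stale offset into a kernel driver is a good way to get a boot loop.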

Note that this is not doing anything intelligent with function tracing. This does not represent a universal solution and will break if there are significant changes to the bytecode marker used to locate the correct instructions. It is, however, encouraging that the code is stable enough to have kept this marker for the better part of 2 years now. I'd guess that Nvidia has an extremely stable toolchain for compiling their driver, so unless they make changes to this function directly it will probably keep working. When it stops working... I guess I'll try my hand at patch diffing in Ghidra.

Usual disclaimers apply, I make no promises this will work, I'm not responsible for your 2080Tis catching on fire, etc.

Well done, happy to see someone take initiative! As for the different approach with the instructions I think at some point the driver started to use a different register right before the TEST but I don't recall much more other than that


Post your libvirt / qemu config and I will take a look. I had the same issue in the past. Now my highest interrupt is usually under 120 and DPC under 500 (500 is the occasional peak; it stays below that point most of the time, even while gaming on a Xeon 2680 v2).

On a different topic, does anyone happen to know if NVIDIA virtualization is disabled on consumer cards on a hardware or driver level? They are pissing me off by not allowing me to use GPU direct RDMA on a 1080 TI so I am probably going to tackle that in the near future.


stunts513


Thanks! I posted it above, but I have been tweaking it. I'll post the updated version, but it still isn't working great currently.

https://pastebin.com/eu4xigfK

I may also be switching my distro, as I double-checked and I was sort of reading the wrong package info. CentOS 7 apparently ships with qemu-kvm 1.5.3, but I was using qemu-kvm-ev, which I think is basically upstream testing or something, so I was running version 2.12. The fun thing is that it looks like CentOS 8 ships with 2.12 and there's no qemu-kvm-ev package, so yeah. I may switch over to Ubuntu Server 19.10, because I was running it in a VM for testing among others; a base install uses only about 150 MB of RAM at idle, and it ships with QEMU 4.0 and Linux kernel 5.3, whereas its LTS variant is more comparable to CentOS in its QEMU and kernel versions. Any further suggestions are much appreciated, though. Hopefully I can get the new install going soon; I have to fix my truck this weekend though, so who knows.


I managed to cram it all into a Sliger SM580 case with 2 280mm radiators and EKWB blocks, so would love to make this work


While I wait to see whether manobroda's test of his 2080 Tis works out, I did find this article that describes the process on the X370 chipset (also with bifurcated ITX):

Dropbox



Sorry, I did not notice you had posted your config; I have the attention span of a 5-year-old.

Here's a list of tips that I can give you. Personally, on my system I am able to achieve around 99% of host baseline performance, regardless of whether I use NUMA or a single CPU.

- Exceptionally important: isolate cores from the kernel. There are two approaches:

  - Use [isolcpus nohz_full rcu_nocbs] for the cores you want to expose to your VM in your /etc/default/grub. Example from mine:

    Code:

    isolcpus=10,11,12,13,14,15,16,17,18,19,30,31,32,33,34,35,36,37,38,39 nohz_full=10,11,12,13,14,15,16,17,18,19,30,31,32,33,34,35,36,37,38,39 rcu_nocbs=10,11,12,13,14,15,16,17,18,19,30,31,32,33,34,35,36,37,38,39

    The issue with this approach is that the cores won't be used by your Linux applications, so if you want to move cores between guest and host this is not a good approach; it does give the best performance boost/latency.

  - Cgroups via CSET: similar to isolcpus, except you can make a CPU mask to isolate the cores between applications on demand. This gives more flexibility, but there will be kernel threads that stick to those cores even when you decide to give them to qemu/kvm.

- Memory-wise, huge pages can help overall performance. I did not notice a difference latency-wise, but it doesn't hurt.

- QEMU version 4.0 would be ideal, as there are a couple of extra hyperv parameters that seem to help, namely:

  Code:

  <hyperv>
    <relaxed state='on'/>
    <vapic state='on'/>
    <spinlocks state='on' retries='8191'/>
    <vpindex state='on'/>
    <runtime state='on'/>
    <synic state='on'/>
    <stimer state='on'/>
    <reset state='on'/>
    <vendor_id state='on' value='whatever'/>
  </hyperv>

- I use the Q35 chipset:

  Code:

  <os>
    <type arch='x86_64' machine='pc-q35-4.1'>hvm</type>
    <loader readonly='yes' type='pflash'>/var/lib/libvirt/images/OVMF_CODE.fd</loader>
    <nvram>/var/lib/libvirt/images/OVMF_VARS.fd</nvram>
    <bootmenu enable='no'/>
  </os>

- Clock: I seem to recall combining kvmclock and hypervclock and disabling TSC inside Windows? I could be wrong, though:

  Code:

  <clock offset='localtime'>
    <timer name='rtc' present='no' tickpolicy='catchup' track='guest'/>
    <timer name='pit' present='no' tickpolicy='delay'/>
    <timer name='hpet' present='no'/>
    <timer name='kvmclock' present='yes'/>
    <timer name='hypervclock' present='yes'/>
  </clock>

- VCPU pinning is also important. That said, some processors perform better if you pin the logical core but not the hyperthread. I personally pin everything and it works fine, but that is a matter of experimentation:

  Code:

  <vcpu placement='static' cpuset='10-19,30-39'>6</vcpu>
  <cputune>
    <vcpupin vcpu='0' cpuset='11'/>
    <vcpupin vcpu='1' cpuset='12'/>
    <vcpupin vcpu='2' cpuset='13'/>
    <vcpupin vcpu='3' cpuset='14'/>
    <vcpupin vcpu='4' cpuset='15'/>
    <vcpupin vcpu='5' cpuset='16'/>
    <emulatorpin cpuset='18-19,38-39'/>
    <vcpusched vcpus='0' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='1' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='2' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='3' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='4' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='5' scheduler='fifo' priority='1'/>
  </cputune>

- Depending on your processor, you might want to disable the Spectre/Meltdown mitigations (https://www.grc.com/inspectre.htm) inside Windows, as Linux is already providing updated microcode with the patches. I forgot whether there is a performance difference from doing this or not.

Let me know if you need further clarification on any of those points. As for what distro to use, I would say go for Manjaro instead of Ubuntu if you want to put in the time to learn Arch (it's worth it). I would still recompile the kernel (regardless of which distro you decide to use) with:

Code:

#CONFIG_HZ_300=y
CONFIG_HZ_1000=y
CONFIG_HZ=1000

I run my own patched versions of both KVM and QEMU (I have a Mac OSX VM working with 64 cores and some monkey-patched NUMA support applied to https://github.com/apple/darwin-xnu; QEMU and KVM don't play nice with some of the MSR interrupts that Apple uses... it was a pain in the ass to reverse engineer), but I don't think I patched much in terms of performance other than compiling with the --enable-jemalloc flag. Jemalloc might make a difference; honestly, I don't remember.

I strongly advise you to use something like GeekBench 5 (or 4 if you have older hardware) to see how your setup is performing compared to others. As a reference, with my Xeon 2680 v2 I'm still #1 (the highest score is on Linux, but if you look up Windows or OSX my scores should also be relatively high; they are not listed under my username as I only have a Linux license):

https://browser.geekbench.com/v4/cpu/search?dir=desc&q=2680+v2&sort=multicore_score

Hope some of these tips were helpful, and disregard any typos; I can't be bothered to proofread my own stuff.
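A side note on the isolcpus example above: the kernel's CPU-list parameters also accept ranges, so the long comma-separated lists can be written as 10-19,30-39. Here is a small sketch for building such a string from a list of core numbers; `compress_cpu_list` is a hypothetical helper, not part of any tool mentioned in this thread.

```python
def compress_cpu_list(cpus):
    """Turn an iterable of CPU numbers into a compact list such as '10-19,30-39'."""
    ordered = sorted(set(cpus))
    if not ordered:
        return ""
    ranges = []
    start = prev = ordered[0]
    for cpu in ordered[1:]:
        if cpu == prev + 1:
            prev = cpu  # still inside the current run of consecutive CPUs
            continue
        ranges.append((start, prev))
        start = prev = cpu
    ranges.append((start, prev))
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


if __name__ == "__main__":
    isolated = list(range(10, 20)) + list(range(30, 40))
    cpu_list = compress_cpu_list(isolated)
    print(f"isolcpus={cpu_list} nohz_full={cpu_list} rcu_nocbs={cpu_list}")
```

The shorter form is easier to compare against the cpuset='10-19,30-39' attribute in the libvirt config, which makes mismatches between the two harder to miss.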

- Joined

- Mar 27, 2019

- Messages

- 12 (0.01/day)


I'm typing this message from inside a Windows 10 Q35 virtual machine with 2x2080Tis and SLI enabled on 441.66 (latest as of today) hacked using my Python utility. So there's something else going wrong on your end.


If you're referring to the for-real enterprise virtualization stuff, I'm fairly certain it's disabled on hardware level. Nvidia drives that capability with a proprietary hardware scheduler, not SR-IOV like AMD's offerings. I'd assume they either don't etch GeForce dies with the controller at all, or fuse it off after.

I would assume the opposite for RDMA however; it's just a CUDA function. I see no reason for it to require specific hardware. Most likely a driver limitation.



Thank you. Yeah, based on what I am seeing, it sounds like you're right. I'll probably pursue this through the use of NTB; my goal was to use a remote GPU from inside a VM on a different machine as a rendering device. I haven't found many success stories online for that, except for using SR-IOV with the FirePro series, but even so that is local virtualization =/

I'll figure something out... eventually. Worst case scenario, I will patch Looking Glass to use RDMA and forward everything through a Mellanox.

stunts513


Thanks for more advice. Hopefully I can put all of this to work. I'm still trying to get everything up and running; I had already started setting everything up on Ubuntu Server 19.10. I got the basic VMs that handle my network up, but the stupid gaming VM is giving me hell... Every time I enable the code 43 workaround I get a BSOD on boot, even with the ioapic driver="kvm" fix on, so yeah... Maybe eventually I will have good news. I'm probably going to head to either Reddit or Freenode in the meantime and see what I can figure out about that. I've been going through the web for a solution for a bit and I'm still no closer to solving it.


Can you please link the post with the instructions for installing the older drivers on Win10?

You need the old patcher and driver (1.7.1 & 388.71), as outlined in this post (start reading below the video): https://www.techpowerup.com/forums/threads/sli-with-different-cards.158907/post-3968942

MB - Asus Prime B360 Plus GPU GTX1060 6gb + GTX1060 3gb

Help me please.