Is there a chart showing 1440p and 4k results?

Since all the reviews are showing the 4060 could lose to a 3060 in some games above 1080p, maybe we should take those into account before calling it 【Not a turd】

If a product is really technically superior in every aspect, it should outperform the previous generation in every aspect.

If it only outperforms the previous generation in some specific scenarios but loses in others, I would call it a sidegrade instead of an upgrade.

Yeah, it's a bit of a side-grade. But that's somewhat true in most cases. Massive jumps in the same segment in a single generation, the kind that would cause a person to seriously consider an upgrade, are the exception rather than the rule. General advice is usually to skip at least one gen. 1440p and 4k charts are available, but 60-series cards are targeted mainly at the 1080p market, so I don't consider those results terribly relevant.

Not to mention it is consistently losing to the 6700XT, which is the competitor's price-competitive last-generation offering atm.

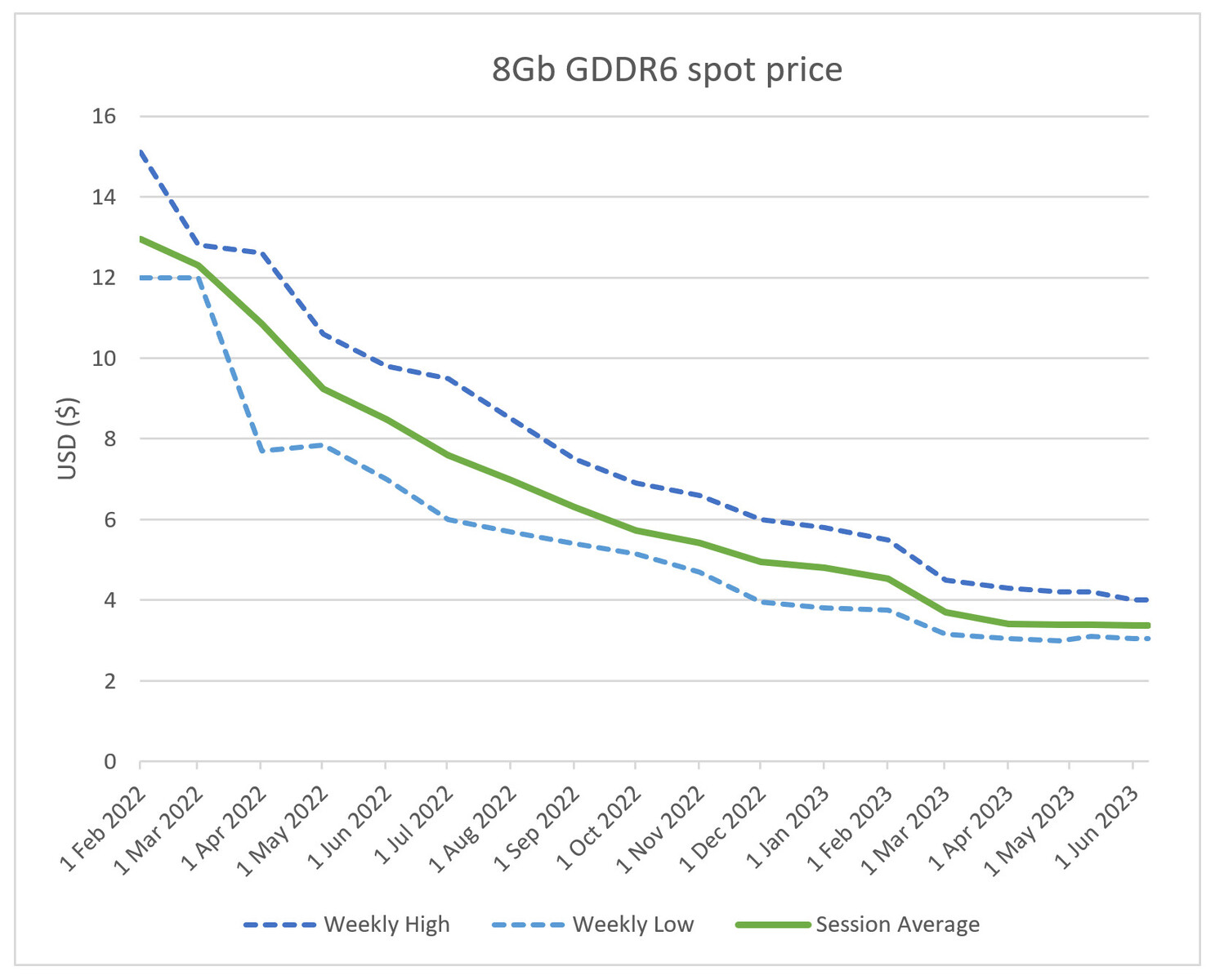

The 6700 XT def smacks the 4060 around in $/perf, but one needs to pick a comparison metric. The easiest is product segment, i.e. comparing only 6-segment cards against each other as I did above. Price is also an option, either RRP or street. Power envelope can also be used. The only one of those in which the 4060 and 6700 XT are similar is street price. The 6700 XT is one (or two) tiers up, had a launch price $180 higher (though street is more like $350 atm), and has twice the rated power. Starting at Maxwell, 80-series cards went from 165 - 180 - 215 - 320W. The 4080 is considered impressive vs. the 3080 because it performs 30% better within the same power envelope, and it costs more to boot. A lot more. Yet the 4060 accomplishes equal or better performance to the 3060 for 30% less power while costing less, and it gets decried as a turd and a waste of sand.
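To put rough numbers on that efficiency comparison, here is a minimal sketch using only the ratios quoted in the post (30% more performance at the same power for the 4080, equal performance at 30% less power for the 4060). The helper name `perf_per_watt_gain` is made up for illustration, and "equal performance" is taken as the conservative case.

```python
def perf_per_watt_gain(perf_ratio: float, power_ratio: float) -> float:
    """Relative perf/W of a new card vs. an old one, given new/old ratios."""
    return perf_ratio / power_ratio

# 4080 vs 3080: ~30% more performance in the same power envelope.
gain_80 = perf_per_watt_gain(1.30, 1.00)

# 4060 vs 3060: equal performance (conservative case) at 30% less power.
gain_60 = perf_per_watt_gain(1.00, 0.70)

print(f"4080 vs 3080: {gain_80:.2f}x perf/W")
print(f"4060 vs 3060: {gain_60:.2f}x perf/W")
```

On those assumptions the 4060's generational efficiency gain (about 1.43x) comes out larger than the 4080's (1.30x), which is the point being made.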

4090 has 435% more cores than 4060,

3090ti has 200% more cores than 3060

3090ti has 320% more cores than 3050

The jacket just can't milk buyers like during the mining crisis, so now he is selling something that can't even be called a 4050 as a 4060
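For anyone wanting to check the core-count percentages in that post, a quick sketch. The CUDA core counts below are assumptions taken from public spec sheets, not from the thread (16384 for the 4090, 3072 for the 4060, 10752 for the 3090 Ti, 3584 for the 3060, 2560 for the 8 GB 3050), and `percent_more` is just an illustrative helper.

```python
# CUDA core counts from public spec sheets (assumed, not stated in the thread).
CORES = {
    "4090": 16384,
    "4060": 3072,
    "3090ti": 10752,
    "3060": 3584,
    "3050": 2560,
}

def percent_more(big: str, small: str) -> float:
    """Return how many percent more cores `big` has than `small`."""
    return (CORES[big] / CORES[small] - 1.0) * 100.0

for big, small in [("4090", "4060"), ("3090ti", "3060"), ("3090ti", "3050")]:
    print(f"{big} vs {small}: {percent_more(big, small):.0f}% more cores")
```

With those counts the 4090 vs 4060 figure lands at roughly 433%, close to the 435% quoted, and the other two match at 200% and 320%.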

You're letting Nvidia recalibrate the performance scale. 90-class cards are outliers when considering historical norms. 50-series cards Maxwell to Turing were sub-100W, sub-$150 cards that could only manage 1080p60 with reduced settings in contemporary titles. The Palit 4060 Dual (chosen because it runs at reference clocks) pulls a 96fps average in the current test battery at 1080p, with only two titles averaging below 60. At 120 watts. With RT, Nvidia decided they could tack on an extra hundred bucks and hundred watts to everything and move every tier upmarket. And we let them.

EDIT: typo