Apr 10th, 2025 07:16 EDT

change timezone

Latest GPU Drivers

New Forum Posts

- Looking for input on fan placement for my Define R5 (9)

- Problem "Vu meter Windows 11 24h2 missing" (0)

- MSI 4090 Pump Out Is Real (6)

- How is the Gainward Phoenix Model in terms of quality? (5)

- RX 9000 series GPU Owners Club (277)

- OEM and Retail GPU (11)

- Downgrading bios on asrock A320 board (2)

- Will you buy a RTX 5090? (478)

- Star Citizen (2515)

- [Golden Sample] RTX 5080 – 3300 MHz @ 1.020 V (Stock Curve) – Ultra-Stable & Efficient (45)

Popular Reviews

- The Last Of Us Part 2 Performance Benchmark Review - 30 GPUs Compared

- ASRock Z890 Taichi OCF Review

- MCHOSE L7 Pro Review

- Sapphire Radeon RX 9070 XT Pulse Review

- PowerColor Radeon RX 9070 Hellhound Review

- Upcoming Hardware Launches 2025 (Updated Apr 2025)

- Sapphire Radeon RX 9070 XT Nitro+ Review - Beating NVIDIA

- Acer Predator GM9000 2 TB Review

- ASUS GeForce RTX 5080 Astral OC Review

- UPERFECT UStation Delta Max Review - Two Screens In One

Controversial News Posts

- NVIDIA GeForce RTX 5060 Ti 16 GB SKU Likely Launching at $499, According to Supply Chain Leak (174)

- MSI Doesn't Plan Radeon RX 9000 Series GPUs, Skips AMD RDNA 4 Generation Entirely (146)

- Microsoft Introduces Copilot for Gaming (124)

- AMD Radeon RX 9070 XT Reportedly Outperforms RTX 5080 Through Undervolting (119)

- NVIDIA Reportedly Prepares GeForce RTX 5060 and RTX 5060 Ti Unveil Tomorrow (115)

- Nintendo Confirms That Switch 2 Joy-Cons Will Not Utilize Hall Effect Stick Technology (100)

- Over 200,000 Sold Radeon RX 9070 and RX 9070 XT GPUs? AMD Says No Number was Given (100)

- Nintendo Switch 2 Launches June 5 at $449.99 with New Hardware and Games (99)

News Posts matching #Stanford

Quantum Machines OPX+ Platform Enabled Breaking of Entanglement Qubit Bottleneck, via Multiplexing

Quantum networks—where entanglement is distributed across distant nodes—promise to revolutionize quantum computing, communication, and sensing. However, a major bottleneck has been scalability, as the entanglement rate in most existing systems is limited by a network design of a single qubit per node. A new study, led by Prof. A. Faraon at Caltech and conducted by A. Ruskuc et al., recently published in Nature (ref: 1-2), presents a groundbreaking solution: multiplexed entanglement using multiple emitters in quantum network nodes. By harnessing rare-earth ions coupled to nanophotonic cavities, researchers at Caltech and Stanford have demonstrated a scalable platform that significantly enhances entanglement rates and network efficiency. Let's take a closer look at the two key challenges they tackled—multiplexing to boost entanglement rates and dynamic control strategies to ensure qubit indistinguishability—and how they overcame them.

Breaking the Entanglement Bottleneck via Multiplexing

One of the biggest challenges in scaling quantum networks is the entanglement rate bottleneck, which arises due to the fundamental constraints of long-distance quantum communication. When two distant qubits are entangled via photon interference, the rate of entanglement distribution is typically limited by the speed of light and the node separation distance. In typical systems with a single qubit per node, this rate scales as c/L (where c is the speed of light and L is the distance between nodes), leading to long waiting times between successful entanglement events. This severely limits the scalability of quantum networks.
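To make the c/L scaling concrete, the following minimal sketch evaluates that rate scale for a few node separations. It is an illustration only: the function names are hypothetical, the vacuum speed of light is used (signals in optical fiber travel slower), any order-one prefactor is ignored, and the linear N-fold gain from multiplexing is an idealized assumption, not a figure taken from the study.

```python
# Speed of light in vacuum, metres per second (exact SI value).
C_VACUUM_M_PER_S = 299_792_458.0


def single_qubit_rate_hz(node_separation_m: float,
                         signal_speed_m_per_s: float = C_VACUUM_M_PER_S) -> float:
    """Rate scale c/L for a node holding a single qubit."""
    if node_separation_m <= 0:
        raise ValueError("node separation must be positive")
    return signal_speed_m_per_s / node_separation_m


def multiplexed_rate_hz(node_separation_m: float, emitters_per_node: int) -> float:
    """Idealized assumption: N emitters per node give N times the c/L rate."""
    if emitters_per_node < 1:
        raise ValueError("need at least one emitter per node")
    return emitters_per_node * single_qubit_rate_hz(node_separation_m)


if __name__ == "__main__":
    for distance_km in (1, 10, 100):
        rate = single_qubit_rate_hz(distance_km * 1_000)
        wait_us = 1e6 / rate  # time between attempts, in microseconds
        print(f"{distance_km:>4} km: c/L = {rate:,.0f} per second, "
              f"about {wait_us:.1f} us per attempt")
```

Because the rate falls as 1/L, every tenfold increase in separation costs a tenfold drop in the rate, which is why adding emitters per node is attractive.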

Jensen Huang Will Discuss AI's Future at NVIDIA GTC 2024

NVIDIA's GTC 2024 AI conference will set the stage for another leap forward in AI. At the heart of this highly anticipated event: the opening keynote by Jensen Huang, NVIDIA's visionary founder and CEO, who speaks on Monday, March 18, at 1 p.m. Pacific, at the SAP Center in San Jose, California.

Planning Your GTC Experience

There are two ways to watch. Register to attend GTC in person to secure a spot for an immersive experience at the SAP Center. The center is a short walk from the San Jose Convention Center, where the rest of the conference takes place. Doors open at 11 a.m., and badge pickup starts at 10:30 a.m. The keynote will also be livestreamed at www.nvidia.com/gtc/keynote/.

Jensen Huang to Unveil Latest AI Breakthroughs at GTC 2024 Conference

NVIDIA today announced it will host its flagship GTC 2024 conference at the San Jose Convention Center from March 18-21. More than 300,000 people are expected to register to attend in person or virtually. NVIDIA founder and CEO Jensen Huang will deliver the keynote from the SAP Center on Monday, March 18, at 1 p.m. Pacific time. It will be livestreamed and available on demand. Registration is not required to view the keynote online. Since Huang first highlighted machine learning in his 2014 GTC keynote, NVIDIA has been at the forefront of the AI revolution. The company's platforms have played a crucial role in enabling AI across numerous domains including large language models, biology, cybersecurity, data center and cloud computing, conversational AI, networking, physics, robotics, and quantum, scientific and edge computing.

The event's 900 sessions and over 300 exhibitors will showcase how organizations are deploying NVIDIA platforms to achieve remarkable breakthroughs across industries, including aerospace, agriculture, automotive and transportation, cloud services, financial services, healthcare and life sciences, manufacturing, retail and telecommunications. "Generative AI has moved to center stage as governments, industries and organizations everywhere look to harness its transformative capabilities," Huang said. "GTC has become the world's most important AI conference because the entire ecosystem is there to share knowledge and advance the state of the art. Come join us."

OpenAI Degrades GPT-4 Performance While GPT-3.5 Gets Better

When OpenAI announced its GPT-4 model, it first became available in ChatGPT, behind the paywall for premium users. GPT-4 is the latest installment in the Generative Pretrained Transformer (GPT) family of Large Language Models (LLMs). It aims to be a more capable version of GPT-3.5, which powered ChatGPT at launch and was already capable. However, it seems that the performance of GPT-4 has been steadily dropping since its introduction. Many users noted the regression, and now researchers from Stanford University and UC Berkeley have benchmarked GPT-4's performance in March 2023 against its performance in June 2023 on tasks such as solving math problems, visual reasoning, code generation, and answering sensitive questions.

The results? The paper shows that GPT-4 performance has degraded significantly in all the tasks. This could be attributed to efforts to improve stability, lower the massive compute demand, and more. Unexpectedly, GPT-3.5 experienced a significant uplift over the same period. Below, you can see the examples benchmarked by the researchers, which also compare GPT-4 and GPT-3.5 performance in all cases.

NVIDIA Founder and CEO Jensen Huang to Receive Prestigious Robert N. Noyce Award

The Semiconductor Industry Association (SIA) today announced Jensen Huang, founder and CEO of NVIDIA and a trailblazer in building accelerated computing platforms, is the 2021 recipient of the industry's highest honor, the Robert N. Noyce Award. SIA presents the Noyce Award annually in recognition of a leader who has made outstanding contributions to the semiconductor industry in technology or public policy. Huang will accept the award at the SIA Awards Dinner on Nov. 18, 2021.

"Jensen Huang's extraordinary vision and tireless execution have greatly strengthened our industry, revolutionized computing, and advanced artificial intelligence," said John Neuffer, SIA president and CEO. "Jensen's accomplishments have fueled countless innovations—from gaming to scientific computing to self-driving cars—and he continues to advance technologies that will transform our industry and the world. We're pleased to recognize Jensen with the 2021 Robert N. Noyce Award for his many achievements in advancing semiconductor technology."

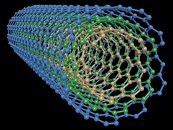

MIT, Stanford Partner Towards Making CPU-Memory BUSes Obsolete

Graphene has been hailed for some time now as the natural successor to silicon, today's most used medium for semiconductor technology. However, even before such exotic alternatives to current semiconductor technology are employed (and we are still a long way from that future, at least when it comes to mass production), engineers and researchers seem to be increasing their focus on one specific part of computing: internal communication between components.

Typically, communication between a computer's Central Processing Unit (CPU) and a system's memory (usually DRAM) has occurred through a bus, which is essentially a communication highway between the data stored in DRAM and the data that the CPU needs to process or has just finished processing. Even the fastest CPU and RAM are only as fast as the bus, and recent workloads have been increasing the amount of data to be processed (and thus transferred) by orders of magnitude. As such, engineers have been trying to figure out ways of increasing communication speed between the CPU and the memory subsystem, as it looks increasingly likely that the next bottleneck in HPC will come not from a lack of CPU speed or memory throughput, but from the communication between the two.
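The "only as fast as the bus" point can be sketched as a simple slowest-stage model. This is a rough illustration under stated assumptions: the function names are hypothetical, the throughput figures are made-up placeholders and not measurements of any real system, and effects such as caching and latency are ignored.

```python
def effective_throughput_gb_s(cpu_gb_s: float, bus_gb_s: float, memory_gb_s: float) -> float:
    """Sustained data rate of a CPU-bus-memory chain, limited by its slowest stage."""
    return min(cpu_gb_s, bus_gb_s, memory_gb_s)


def transfer_time_s(data_gb: float, cpu_gb_s: float, bus_gb_s: float, memory_gb_s: float) -> float:
    """Time to move data_gb through the chain at the effective throughput."""
    return data_gb / effective_throughput_gb_s(cpu_gb_s, bus_gb_s, memory_gb_s)


if __name__ == "__main__":
    # Hypothetical figures: the CPU could consume 200 GB/s and the memory
    # could supply 100 GB/s, but the bus carries only 50 GB/s.
    rate = effective_throughput_gb_s(cpu_gb_s=200.0, bus_gb_s=50.0, memory_gb_s=100.0)
    seconds = transfer_time_s(100.0, cpu_gb_s=200.0, bus_gb_s=50.0, memory_gb_s=100.0)
    print(f"effective rate: {rate} GB/s, 100 GB takes {seconds} s")
```

In this model, speeding up the CPU or the memory changes nothing until the bus itself gets faster, which is the bottleneck the paragraph describes.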
