Monday, July 10th 2017

MIT, Stanford Partner Towards Making CPU-Memory BUSes Obsolete

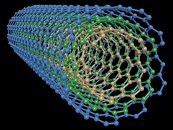

Graphene has been hailed for some time now as the natural successor to silicon, today's most used medium for semiconductor technology. However, even before such exotic alternatives to current semiconductor technology are employed (and we are still way off that future, at least when it comes to mass production), engineers and researchers seem to be increasing their focus on one specific part of computing: internal communication between components.

Typically, communication between a computer's Central Processing Unit (CPU) and a system's memory (usually DRAM) has occurred through a bus, which is essentially a communication highway between the data stored in the DRAM and the data that the CPU needs to process or has just finished processing. The fastest CPU and RAM are still only as fast as the bus, and recent workloads have been increasing the amount of data to be processed (and thus transferred) by orders of magnitude. As such, engineers have been trying to figure out ways of increasing communication speed between the CPU and the memory subsystem, as it is looking increasingly likely that the next bottlenecks in HPC will come not from a lack of CPU speed or memory throughput, but from the communication between those two.

The MIT and Stanford researchers' solution? Do away with the bus entirely, by entwining the CPU and memory together so closely that there is no need for performance-bottlenecking buses. According to the lead author of the research paper, Max Shulaker, "The RRAM and carbon nanotubes are built vertically over one another, making a new, dense 3-D computer architecture with interleaving layers of logic and memory. By inserting ultradense wires between these layers, this 3-D architecture promises to address the communication bottleneck." As a proof of concept (let's call it PoC for the Mass Effect: Andromeda fans out there), the team has produced a small-scale carbon nanotube (CNT) computer, and get this: it was actually capable of running programs, running a basic multitasking operating system, and executing MIPS instructions. The pairing with RRAM (Resistive Random Access Memory) is a feat unto itself, as H.-S. Philip Wong, a co-author of the research, says that "RRAM can be denser, faster, and more energy-efficient compared to DRAM." In between the logic and memory layers are "ultradense" wires that provide communication, which is "more than an order of magnitude" faster and more energy efficient than silicon.

Granted, the new system likely won't break speed records soon - it ran at 1 kHz. However, the researchers behind the paper claim that achieving higher speeds is a more trivial task than the actual development of this unit. As they put it, the speed limit "(...) is not due to the limitations of the CNT technology or our design methodology, but instead is caused by capacitive loading introduced by the measurement setup, the 1-µm minimum lithographic feature size possible in our academic fabrication facility, and CNT density and contact resistance." Compare the university's 1-µm minimum lithographic feature size with those currently achieved by state-of-the-art foundries the likes of Intel and Samsung, and you're likely to respect the scientists' achievement even more. Even so, "the researchers integrated over 1 million RRAM cells and 2 million carbon nanotube field-effect transistors, making the most complex nanoelectronic system ever made with emerging nanotechnologies."

One of the key benefits of fabricating the new computer chips from carbon nanotubes stems from the much lower temperatures involved in fabrication - and that is what makes such a marriage between a CPU and its memory all but impossible with silicon. Silicon chip creation requires temperatures up to 1,000 degrees Celsius, so it's difficult to make multiple layers and 3D structures without damaging prior layers. However, "carbon nanotube circuits and RRAM memory can be fabricated at much lower temperatures, below 200°C", says Shulaker, which enables the stacking and interlinking of memory and CPU through fine wires.
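To put rough numbers on the bus bottleneck described above, here is a minimal sketch. It assumes a simple model that is not taken from the research paper: peak bus bandwidth is bus width times transfer rate, and sustained throughput is capped by whichever is slower, the CPU or the data the bus can deliver. The function names, the 500 GFLOP/s CPU, and the 0.25 FLOP-per-byte workload are all hypothetical, chosen only for illustration.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, transfers_per_second: float) -> float:
    """Peak bus bandwidth in GB/s: bytes moved per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_second / 1e9


def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float, flops_per_byte: float) -> float:
    """Simplified upper bound on throughput: the slower of compute and data delivery."""
    return min(peak_gflops, bandwidth_gb_s * flops_per_byte)


if __name__ == "__main__":
    # Assumed example: a 128-bit (dual-channel) memory bus at 2400 mega-transfers per second.
    bandwidth = peak_bandwidth_gb_s(128, 2.4e9)
    # Assumed example: a CPU with 500 GFLOP/s peak running a workload that
    # performs 0.25 floating-point operations per byte fetched from memory.
    bound = attainable_gflops(500.0, bandwidth, 0.25)
    print(f"peak bandwidth: {bandwidth:.1f} GB/s")
    print(f"attainable throughput: {bound:.1f} of 500.0 GFLOP/s")
```

Under these assumed figures the bus, not the CPU, sets the ceiling, which is the situation the 3-D logic-and-memory stacking aims to avoid.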

Sources:

TechSpot, Nature, News @ MIT


11 Comments on MIT, Stanford Partner Towards Making CPU-Memory BUSes Obsolete

That's how I feel after reading this. Thanks, Bill.

Those elements are standardized for CPUs and the satellite elements they connect to.

On a GPU board you can cram in whatever you want, as long as the final I/O is PCI-e compatible and transmissible.

So I think it's not very odd that on a GPU you can have a 2048-bit bus while on a CPU you still get 256-bit.

That said, there are also other factors to account for, like path density on the MoBo and such.

Joke aside, it's quite impressive in this state of tech development.

Trace length is a limiting factor, and together with the slot width for DDR3 and DDR4 you get longer traces on the edges, and since DDR is still a parallel interface, the shorter traces need to be lengthened so they are all the same length. This is simpler when the memory comes as smaller chips you can place around the GPU.

The slot for DDR3 and DDR4 is also not as good as a soldered connection.

Another reason is that one does not need the super wide bus. A 290X has a wider bus than a GTX 1080, just as an i7 920 has a wider bus than an i7 7700K. On modern CPUs the latency is just as important as the memory bandwidth, and while bandwidth has seen explosive growth over the last decade, latency has not improved nearly as much, which is why we have CPUs with L3 and some with L4 cache now.

So in summary: because it's difficult and the gains are not that big, we are on 128- to 256-bit buses for consumer CPUs.