Thursday, September 6th 2018

NVIDIA TU106 Chip Support Added to HWiNFO, Could Power GeForce RTX 2060

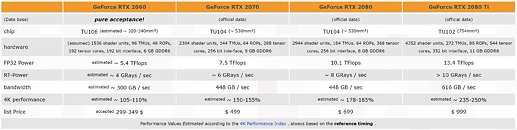

We are all still waiting to see how NVIDIA's RTX 2000 series of GPUs will fare in independent reviews, but that has not stopped the rumor mill from extrapolating. There have been alleged leaks of the RTX 2080 Ti's performance, and now HWiNFO has added support for an unannounced NVIDIA Turing microarchitecture chip, the TU106. As a reminder, the currently announced members of the RTX series are based on TU102 (RTX 2080 Ti) and TU104 (RTX 2080, RTX 2070). Based on NVIDIA's history, it is logical to expect a smaller die for upcoming RTX cards, and we may well see an RTX 2060 using the TU106 chip.

This addition to HWiNFO is to be taken with a grain of salt, however, as its developers have been wrong before. Only recently, they added support for what was, at the time, speculated to be the NVIDIA Volta microarchitecture, which we now know as Turing. This has not stopped others from speculating further, as 3DCenter.org has given its best estimates of how TU106 may fare in terms of die size, shader and TMU count, and more. Given that TSMC's 7 nm node will likely be preoccupied with Apple iPhone production through the end of this year, NVIDIA may well use the same 12 nm FinFET process that TU102 and TU104 are manufactured on. The mainstream GPU segment is NVIDIA's bread and butter for gross revenue, so we may see an announcement, and possibly even retail availability, towards the end of Q4 2018 to target holiday shoppers.

Source:

HWiNFO Changelog

56 Comments on NVIDIA TU106 Chip Support Added to HWiNFO, Could Power GeForce RTX 2060

Best example: the 8600 series all over again... too slow for anything to be useful (but a good HTPC card in retrospect).

If Navi is good enough, we may get a GTX 2060 (without RT cores), which would refresh the Pascal high-ish end stuff while offering better non-ray-traced capabilities (DLSS, for example).

It will give NV the best position market-wise, since it will mean they can address both the ray-traced part (RTX series) and those who want the best, relatively cheap stuff without that unfinished/not-fast-enough ray tracing.

I guess they are not posting names for criticism

And if there is a TU106 2060, it will be 128-bit at 14 Gbps, which is a little faster than 192-bit at 9 Gbps (GDDR6 vs GDDR5, of course).
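
The comparison in this comment can be checked with quick arithmetic. This is only a rough sketch, assuming peak memory bandwidth is the bus width in bytes multiplied by the per-pin data rate; the function name `bandwidth_gb_per_s` is made up for illustration, and the 128-bit/14 Gbps configuration is the commenter's guess, not a confirmed spec.

```python
def bandwidth_gb_per_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Assumption: bandwidth = (bus width in bits / 8) * per-pin data rate in Gbps.
    """
    return bus_width_bits / 8 * data_rate_gbps


# Speculated configuration from the comment: 128-bit GDDR6 at 14 Gbps
gddr6_128bit = bandwidth_gb_per_s(128, 14)

# Comparison point from the comment: 192-bit GDDR5 at 9 Gbps
gddr5_192bit = bandwidth_gb_per_s(192, 9)

print(f"128-bit @ 14 Gbps: {gddr6_128bit} GB/s")  # 224.0 GB/s
print(f"192-bit @ 9 Gbps:  {gddr5_192bit} GB/s")  # 216.0 GB/s
```

On these assumptions the narrower GDDR6 bus comes out roughly 4% ahead, which matches "a little faster".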

RTX2080 TU104

RTX2070 TU106 (no nvlink)

This is akin to Ferrari putting their name to a 1-litre 75bhp "supercar". Doubtful anyone is going to trust Nvidia after this... even the fanboys are going to have a hard time defending them. But then this is what happens when you don't have any competition in the marketplace... it was inevitable.

However, it could show just how potent Nvidia's shaders and core are when unhindered by the requirements of Tensor and RT cores, i.e., it could possibly be the first to ship above 2 GHz; maybe that's its thing.

:banghead:

Having RTX features on the x60 card seems pointless unless the Tomb Raider RT demo was extremely lacking in optimization.

Nvidia “Hold my beer, here’s the 2060”

I told you, they're scam cores, and it's all for marketing. Real time reflections aren't new, but robbing this much perf is lol

It's time for AMD to have the devs add Vega support and release an RT driver lol. I'm practically convinced that AMD perf can't be worse.