Monday, November 2nd 2020

AMD Releases Even More RX 6900 XT and RX 6800 XT Benchmarks Tested on Ryzen 9 5900X

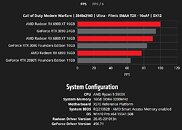

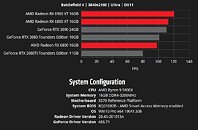

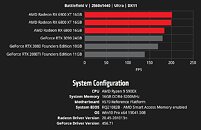

AMD sent ripples with its late-October event launching the Radeon RX 6000 series RDNA2 "Big Navi" graphics cards, when it claimed that the top RX 6000 series parts compete with the very fastest GeForce "Ampere" RTX 30-series graphics cards, marking the company's return to the high-end graphics market. In its announcement press deck, AMD showed the $579 RX 6800 beating the RTX 2080 Ti (essentially the RTX 3070), the $649 RX 6800 XT trading blows with the $699 RTX 3080, and the top $999 RX 6900 XT performing in the same league as the $1,499 RTX 3090. Over the weekend, the company released even more benchmarks, with the RX 6000 series GPUs and their NVIDIA competition tested by AMD on a platform powered by the Ryzen 9 5900X "Zen 3" 12-core processor.
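Since the matchups above are framed around price, a quick sketch of the price gaps may help put them in perspective. It uses only the launch prices quoted in AMD's announcement for the two head-to-head pairings and deliberately does not model performance, since AMD's figures are vendor-supplied. The names `MATCHUPS` and `price_gap` are hypothetical, chosen for illustration.

```python
# Launch prices (USD) as quoted in AMD's announcement, paired as AMD framed the matchups.
# Only prices are compared; AMD's own performance claims are not modeled here.
MATCHUPS = {
    "RX 6800 XT vs RTX 3080": (649, 699),
    "RX 6900 XT vs RTX 3090": (999, 1499),
}


def price_gap(amd_price: int, nvidia_price: int) -> tuple[int, float]:
    """Return the dollar saving and that saving as a percentage of the NVIDIA price."""
    saving = nvidia_price - amd_price
    return saving, 100.0 * saving / nvidia_price


if __name__ == "__main__":
    for name, (amd, nvidia) in MATCHUPS.items():
        saving, pct = price_gap(amd, nvidia)
        print(f"{name}: ${saving} cheaper ({pct:.1f}% below the NVIDIA card)")
```

By this arithmetic the RX 6800 XT undercuts the RTX 3080 by $50 (about 7%), while the RX 6900 XT undercuts the RTX 3090 by $500 (about a third), which is why the top-end comparison drew the most attention.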

AMD released its benchmark numbers as interactive bar graphs on its website. You can select from ten real-world games, two resolutions (1440p and 4K UHD), game-settings presets, and even the 3D API for certain tests. Among the games are Battlefield V, Call of Duty: Modern Warfare (2019), Tom Clancy's The Division 2, Borderlands 3, DOOM Eternal, Forza Horizon 4, Gears 5, Resident Evil 3, Shadow of the Tomb Raider, and Wolfenstein: Youngblood. In several of these tests, the RX 6800 XT and RX 6900 XT are shown taking the fight to NVIDIA's high-end RTX 3080 and RTX 3090, while the RX 6800 is shown to be significantly faster than the RTX 2080 Ti (roughly the RTX 3070's level). The Ryzen 9 5900X itself is claimed to be a faster gaming processor than Intel's Core i9-10900K, and it features a PCI-Express 4.0 interface for these next-gen GPUs. Find more results and the interactive graphs in the source link below.

Source: AMD Gaming Benchmarks

147 Comments on AMD Releases Even More RX 6900 XT and RX 6800 XT Benchmarks Tested on Ryzen 9 5900X

Thanks a lot for sharing the results.

Looks very promising.

Waiting for TPU's review, especially of the 6800 XT, to decide whether it will be my next GPU. :rolleyes:

I would be interested to see how the performance is with Zen 2 without SAM.

When NVIDIA launched their new RTX generation, I thought it would be really tough for AMD to match it, but looking at the benchmarks so far, it is really impressive to see AMD catching up to NVIDIA, at least in non-DXR workloads. :)

Let's hope these will be available in larger quantities on release day and won't sell out within minutes, so that one can buy after reading the reviews instead of watching them disappear from online stores while still reading. :roll:

PP 280 vs 190

TP 720 vs 556.

LoL. AMD is more future-proof for long-term use!

P.S. The RX 6800 XT is also better than the RTX 3090 if we rely only on a comparison of these numbers.

Fine wine? A couple of percent more overall, in a title or two? I wouldn't hold my breath for that. And the numbers you quoted don't support your conclusion.

And you can't predict a GPU's future performance gains or losses against another product; far too many factors are involved.


10 GB may fall short at 4K in a few years... but by then, you'll want another GPU anyway. Even DOOM on Nightmare doesn't eclipse 10 GB at 4K.

I'm giving up. ;)

A more likely scenario, though, is that in that form (brute-force path tracing) it will never take off.

Smaller chips, lower power consumption, slower (and cheaper) VRAM but more of it, at a lower price and with better perf/$ than the competition.

Catching up, eh? :)

Another is that GCN's balance between fill rate/texture rate and compute performance leaned a bit more toward compute. NVIDIA, on the other hand, focused a bit more on fill rate.

Each generation of games shifted the load from fill rate to compute, using more and more compute power and putting AMD GPUs in a better position. But not really enough to make a card last much longer. Also, low-end cards were outclassed anyway, and high-end cards were bought by people with money who would probably replace them as soon as it made sense.

It looks like AMD, with Navi, moved to a more balanced setup, whereas NVIDIA is going down the heavy-compute path. We will see in the future which balance is better, but right now it's too early to tell.

So in the end, it doesn't really matter. A good strategy is to buy a PC at a price that lets you afford another one at the same price in 3-4 years; that way you will always be in good shape. If paying $500 for a card every 3-4 years is too much, buy something cheaper and that's it.

There is a good chance that in 4 years, that $500 card will be beaten by a $250 card anyway, even more so as GPUs move to chiplet designs, which should drive a good increase in performance.

Yes, the scheduler was flexible, as announced at launch, but instruction reordering doesn't necessarily unlock its full performance. IPC was still 0.25, and now that it is 1, that is a lot in comparison. They have all these baked-in instructions doing the intrinsic tuning for them in hardware. The ISA has moved a long way from where GCN was. Plus, they have mesh shaders, which sidestep the triangle-pixel-size vs. wavefront-thread cost problem by dealing with it in hardware. Performance really suffered with triangles covering fewer than 64 pixels. Not any more.
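One way to read the "IPC was 0.25, now it is 1" figure is as a per-wavefront issue rate: on GCN, a 64-wide wavefront executes on a 16-lane SIMD, so each vector instruction occupies four clocks, whereas on RDNA a 32-wide wavefront executes on a 32-lane SIMD in a single clock. The sketch below is a simplified model under that assumption only; it ignores latency, dual issue, cache behavior and scheduling, and `issue_rate` is a hypothetical helper, not an AMD tool.

```python
import math


def issue_rate(wave_size: int, simd_width: int) -> float:
    """Vector instructions issued per clock for one wavefront.

    Simplified model: each pass over simd_width lanes takes one clock, so a
    wavefront needs ceil(wave_size / simd_width) clocks per instruction.
    """
    cycles_per_instruction = math.ceil(wave_size / simd_width)
    return 1.0 / cycles_per_instruction


# GCN: wave64 on a SIMD16 takes 4 clocks per vector instruction.
gcn = issue_rate(wave_size=64, simd_width=16)
# RDNA: wave32 on a SIMD32 takes 1 clock per vector instruction.
rdna = issue_rate(wave_size=32, simd_width=32)

print(f"GCN:  {gcn} instructions/clock per wavefront")
print(f"RDNA: {rdna} instructions/clock per wavefront")
```

Under this model the ratio is exactly the 4x jump the comment describes; real-world gains are smaller because whole-chip throughput also depends on how many wavefronts are in flight.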

Epic on UE4: "It was optimized for NVIDIA GPUs."

Epic today demos UE5 on an RDNA2 chip running in the weaker of the two next-gen consoles and spits on Huang's RT altogether, even though it is supported even in UE4.

There is more fun to come.

A recent demo of the XSeX vs. the 3080 was commented on by a greenboi as "merely 2080 Ti levels".

That is where next-gen consoles are ahead of 98-99% of the PC GPU market.

It was a rhetorical question.

Did NVIDIA bring more performance at times? Yes, of course, but that doesn't preclude AMD having good support for its features.

And GCN looked pretty effing capable until a few years after the last-gen consoles came out, around the Maxwell era, no?