Monday, November 2nd 2020

AMD Releases Even More RX 6900 XT and RX 6800 XT Benchmarks Tested on Ryzen 9 5900X

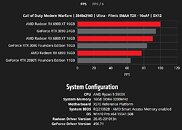

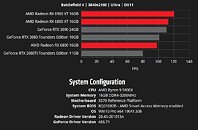

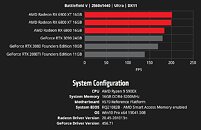

AMD sent ripples at its late-October event launching the Radeon RX 6000 series RDNA2 "Big Navi" graphics cards, when it claimed that the top RX 6000 series parts compete with the very fastest GeForce "Ampere" RTX 30-series graphics cards, marking the company's return to the high-end graphics market. In its announcement press-deck, AMD had shown the $579 RX 6800 beating the RTX 2080 Ti (essentially the RTX 3070), the $649 RX 6800 XT trading blows with the $699 RTX 3080, and the top $999 RX 6900 XT performing in the same league as the $1,499 RTX 3090. Over the weekend, the company released even more benchmarks, with the RX 6000 series GPUs and their competition from NVIDIA being tested by AMD on a platform powered by the Ryzen 9 5900X "Zen 3" 12-core processor.

AMD released its benchmark numbers as interactive bar graphs on its website. You can select from ten real-world games, two resolutions (1440p and 4K UHD), game settings presets, and, for certain tests, the 3D API. Among the games are Battlefield V, Call of Duty Modern Warfare (2019), Tom Clancy's The Division 2, Borderlands 3, DOOM Eternal, Forza Horizon 4, Gears 5, Resident Evil 3, Shadow of the Tomb Raider, and Wolfenstein Youngblood. In several of these tests, the RX 6800 XT and RX 6900 XT are shown taking the fight to NVIDIA's high-end RTX 3080 and RTX 3090, while the RX 6800 is shown to be significantly faster than the RTX 2080 Ti (roughly RTX 3070 scores). The Ryzen 9 5900X itself is claimed to be a faster gaming processor than Intel's Core i9-10900K, and features a PCI-Express 4.0 interface for these next-gen GPUs. Find more results and the interactive graphs in the source link below.

Source:

AMD Gaming Benchmarks

147 Comments on AMD Releases Even More RX 6900 XT and RX 6800 XT Benchmarks Tested on Ryzen 9 5900X

SOTR and Doom Eternal seem to be the more even benchmarks of them all, with the Foundation engine in SOTR being on DX12 (although it supports DLSS and RTX) and id Engine Tech 7 on Vulkan.

I'd like to see how well these do in the Quantic Dream engine or in the latest revision of Unreal Engine 4. My prediction is that the 6800 XT is match-for-match with the RTX 3080 and the RTX 3090 will be beaten due to the waaaaay more affordable price. The RX 5700 XT didn't really overclock great (2,000+ MHz only yielded at most 10 FPS with most models) as well, but we'll see how the 6800 XT works out.

I see (without all the boosts), AMD.... Wins/Ties/Wins/Wins/Wins/Loses/Loses. I'd also like to note the scale. 20% between tiers. so most of these wins (Doom, GOW5, Hitman) are really negligible. I'd call the cards about equal as well (unless you're overclocking with Rage and using X570/B550/5000 Series). But yeah, W/T/W/W/W/L/L with negligible differences between two of those wins.

RE: Overclocking, indeed, nobody has a lot of headroom these days. That said, if you look at TPU reviews, we are seeing a couple-few % depending on the model. With how close some of these AMD benchmarks are, that makes those titles a tie or flips them the other way negligibly, just like what we see in red only.

I can't believe in 1440p the middle RDNA2 card (6800XT) is faster than the 3090!! And this is without RAGE mode enabled:

videocardz.com/newz/amd-discloses-more-radeon-rx-6900xt-rx-6800xt-and-rx-6800-gaming-benchmarks

1= you talk raw price, you forgot there is also manufacturing to add

2= you forget that those are B2B price (no VAT) you have to add VAT to consumers

3= you forget about commercial margins.

when you add say 20% VAT on your 56 is ... 84

Honestly ... big fanboyism towards Nvidia right here. they give slightly better perf and double the ram for slightly more price but not much more that what the 8gb costs.

60 for me is low and i clearly feel the difference between my 144hz and a 60hz

however for having done a blind test on 100-120-144-240 past 120hz i cant see the difference anymore.

so for now im keeping my 144hz monitor, and i know that eventually if a decent smart TV 120hz 4K screen i can handle that but i couldnt go back to 60Hz

I mean there are a few reasons for jaguar and it's failure but they're arguably just the competition was better but damn that was a while ago.

www.amd.com/en/gaming/graphics-gaming-benchmarks

So the RX6800 relative to the RTX 3080 at 4K is 10.8% slower while at 1440p it's 5.2% slower. I suppose that means at 1080p the RX6800 should be about even to the RTX 3080 at that resolution at least in these types of benchmarks. In the case of RTRT it could be different of course still impressive for a card that's actually competing with the much cheaper RTX 3070. It looks like that resolution comparative difference is more pronounced on the RX6800 than with the RX6800XT and RX6900XT the additional performance gained relative to the RTX 3080 is lower for those higher up models. The fact that it's 5.2% slower in relative terms dropping from 4K to 1440p and also is not 5.4% slower is telling as well. The RX6800 will likely compete quite well against the RTX 3080 at 1080p resolution perhaps from the looks of things if the results continue to fall in line at 1080p all while being much cheaper.
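For anyone wanting to redo the "X% slower" arithmetic from the bar graphs, here is a minimal sketch. The function name `percent_slower` and the frame rates in the example are placeholders made up for illustration; they are not AMD's published numbers, so plug in the values read off the charts.

```python
def percent_slower(fps: float, reference_fps: float) -> float:
    """How much slower `fps` is than `reference_fps`, as a percentage
    of the reference card's frame rate."""
    if reference_fps <= 0:
        raise ValueError("reference_fps must be positive")
    return (reference_fps - fps) / reference_fps * 100.0


# Placeholder frame rates (hypothetical, NOT taken from AMD's charts).
card_fps = 90.0
reference_card_fps = 100.0

print(f"{percent_slower(card_fps, reference_card_fps):.1f}% slower")
```

Note that the percentage is taken relative to the reference card, so "A is 10% slower than B" and "B is 10% faster than A" are not the same statement.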

I wish to say ray tracing is a success but let us be real, unless consoles make it to the mainstream, it won't be.

Exclusivity is good and all, but it doesn't pay the bills. I have anecdotal reference of just how pitiful the situation is right now. I'm sure you have watched the webcasters talking the same market insider pitches on how little, just a drop in the bucket, sponsorships are next to the big bucks. Unless sales move forward, the number of ray tracing consumers are just hunting for nvidia sponsored titles. Have they yet caught on to their big break, or are they that many?

1: Can we please have an update of this chart for the three SKUs since it appears that this is based on 6800 (little big Navi)?

2: Do you think this indicates that the lower TGP/CU units in the consoles do indeed compare to 2080Ti?

3: Do the console numbers give an indication of the lower SKUs in the RX 6000 product stack?

4: Do the PS and Xbox SoCs pave the way for the 6000G APUs?

5: Do the PS5 and XBox get a 5nm refresh in a couple of years?

| CUs | Frequency | Platform |
|---|---|---|
| 20 | 1.565 GHz | Xbox Series S (6500? equivalent to 5700) |
| 36 | 2.23 GHz | PlayStation 5 (6600?) |
| 52 | 1.825 GHz | Xbox Series X (6700?) |
| 60 | 1.815/2.105 GHz | 6800 |
| 72 | 2.015/2.25 GHz | 6800XT |
| 80 | 2.015/2.25 GHz | 6900 |
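To put the CU counts and clocks above on one scale, here is a rough sketch that turns them into theoretical peak FP32 throughput. It assumes the usual RDNA2 figures of 64 stream processors per CU and 2 FP32 operations per clock (one fused multiply-add); those constants and the helper name `peak_tflops` are not from the thread. For the Radeon cards the higher of the two listed clocks is used. Peak TFLOPS is only a paper number and says nothing about real game performance.

```python
# Assumptions (not from the thread): 64 stream processors per CU and
# 2 FP32 operations per clock (one fused multiply-add) on RDNA2.
SHADERS_PER_CU = 64
FLOPS_PER_SHADER_PER_CLOCK = 2


def peak_tflops(cus: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS."""
    gflops = cus * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK * clock_ghz
    return gflops / 1000.0


# (CUs, clock in GHz) copied from the figures above; for the Radeon cards
# the second (higher) of the two listed clocks is used.
PARTS = {
    "Xbox Series S": (20, 1.565),
    "PlayStation 5": (36, 2.23),
    "Xbox Series X": (52, 1.825),
    "6800": (60, 2.105),
    "6800XT": (72, 2.25),
    "6900": (80, 2.25),
}

for name, (cus, clock) in PARTS.items():
    print(f"{name:14s} {cus:3d} CUs @ {clock:.3f} GHz -> "
          f"{peak_tflops(cus, clock):5.2f} TFLOPS")
```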

And you can't compare console SOCs to PC components CPU/GPU. Console's package of CPU and GPU combined is completely custom, designed for a console with its constraints of power draw and heat output, and price.