Wednesday, April 21st 2021

DirectStorage API Works Even with PCIe Gen3 NVMe SSDs

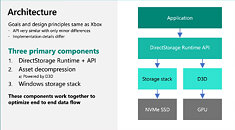

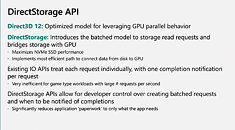

Microsoft on Tuesday, in a developer presentation, confirmed that the DirectStorage API, designed to speed up the storage sub-system, is compatible even with NVMe SSDs that use the PCI-Express Gen 3 host interface. It also confirmed that all GPUs compatible with DirectX 12 support the feature. A feature making its way to the PC from consoles, DirectStorage enables the GPU to directly access an NVMe storage device, paving the way for GPU-accelerated decompression of game assets.

This reduces latency at the storage sub-system level and offloads the CPU. According to Microsoft, any DirectX 12-compatible GPU technically supports DirectStorage, though the company recommends DirectX 12 Ultimate GPUs "for the best experience." GPU-accelerated decompression of game assets is handled via compute shaders. In addition to reducing latency, DirectStorage is said to accelerate the Sampler Feedback feature in DirectX 12 Ultimate. More slides from the presentation follow.
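Here is a rough back-of-the-envelope sketch of why a Gen3 drive can still be useful here. PCIe Gen3 and Gen4 both use 128b/130b encoding at 8 and 16 GT/s per lane, so an x4 NVMe link tops out around 3.9 and 7.9 GB/s before packet overhead. The second function is a simplified model. It assumes, hypothetically, that asset throughput is capped by whichever is slower: compressed data coming off the drive and expanding by the compression ratio, or the decompressor itself. The drive speed, compression ratio, and decompressor rates are made-up illustrative numbers, not figures from Microsoft's presentation.

```python
# Back-of-the-envelope PCIe link and asset-throughput model (illustrative only).


def pcie_link_gb_per_s(gt_per_s: float, lanes: int) -> float:
    """Raw one-direction link bandwidth in GB/s for PCIe Gen3/Gen4.

    Both generations use 128b/130b line encoding. Packet/protocol overhead
    is ignored, so real drives top out somewhat lower than this.
    """
    payload_bits_per_s = gt_per_s * 1e9 * lanes * 128 / 130
    return payload_bits_per_s / 8 / 1e9


def effective_asset_rate(read_gb_per_s: float, compression_ratio: float,
                         decompress_gb_per_s: float) -> float:
    """Uncompressed asset GB delivered per second (simplified, hypothetical model).

    Compressed data arrives at read_gb_per_s and expands by compression_ratio,
    but the output can never exceed what the decompressor (CPU or GPU) produces.
    """
    return min(read_gb_per_s * compression_ratio, decompress_gb_per_s)


if __name__ == "__main__":
    gen3 = pcie_link_gb_per_s(8, 4)   # Gen3 x4
    gen4 = pcie_link_gb_per_s(16, 4)  # Gen4 x4
    print(f"Gen3 x4: {gen3:.2f} GB/s, Gen4 x4: {gen4:.2f} GB/s")

    # Made-up numbers: a 3.5 GB/s Gen3 drive and 2:1 compression,
    # with a 1.5 GB/s CPU decompressor vs. a 10 GB/s GPU decompressor.
    print(effective_asset_rate(3.5, 2.0, 1.5))   # decompressor-bound: 1.5
    print(effective_asset_rate(3.5, 2.0, 10.0))  # drive-bound: 7.0
```

Under these assumptions, moving decompression to a faster unit matters more than the link generation until the drive itself becomes the bottleneck.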

Source:

NEPBB (Reddit)


76 Comments on DirectStorage API Works Even with PCIe Gen3 NVMe SSDs

you were a poet but you didn't even know it

also, looks like it's time to upgrade to gen4 nvme after all.

Gen Three

NVMee

SSDEee

it just all rhymes :P

Also, when MicroShaft says "current GPUs" are compatible, what does "current" mean? Does this only apply to DX12 GPUs, or to DX11 GPUs as well?

So depending on the implementation, it could increase framerates by freeing up the CPU cycles the main game thread spends decompressing assets for the GPU. It could also help loading times: instead of the CPU decompressing assets and then passing them on to the GPU, the GPU can take care of that while the CPU handles things like level building, AI creation, etc.
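The idea of moving decompression off the main game thread can be illustrated with a CPU-only analogy. This is not DirectStorage code. Worker threads and Python's `zlib` stand in for GPU compute shaders, and `load_assets` and `main_thread_work` are hypothetical names. The main thread queues the decompression jobs, carries on with its own work, and only collects the results when it needs them.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def load_assets(compressed_blobs, main_thread_work):
    """Decompress assets on worker threads while the caller keeps working.

    Analogy only: the worker threads stand in for GPU compute shaders, and
    zlib stands in for whatever codec a real game would use.
    """
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Queue every decompression job without waiting for any of them.
        futures = [pool.submit(zlib.decompress, blob) for blob in compressed_blobs]
        # The "main game thread" is free to do other work in the meantime.
        other_result = main_thread_work()
        # Block only when the decompressed assets are actually needed.
        assets = [f.result() for f in futures]
    return assets, other_result


if __name__ == "__main__":
    blobs = [zlib.compress(b"texture" * 1000), zlib.compress(b"mesh" * 500)]
    assets, status = load_assets(blobs, lambda: "level built")
    print([len(a) for a in assets], status)  # [7000, 2000] level built
```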

Now the question is whether this works for drives running in NVMe mode through the PCH.

My gut feeling is most likely not, since going through the PCH naturally adds a latency penalty that would nullify the benefits.

Also this might cause security issues, as the PCH is connected to a lot of external IO that could potentially be attacked.

So most likely it just works on drives connected directly to the "northbridge"/uncore/soc/IO die.

So it should let textures stream across fast, get decompressed and smashed open fast, and generally make load times and texture pop-in go away.

- 1.0e (January 2013)

- 1.1b (July 2014)

- 1.2 (November 2014)

- 1.2a (October 2015)

- 1.2b (June 2016)

- 1.2.1 (June 2016)

- 1.3 (May 2017)

- 1.3a (October 2017)

- 1.3b (May 2018)

- 1.3c (May 2018)

- 1.3d (March 2019)

- 1.4 (June 2019)

- 1.4a (March 2020)

- 1.4b (September 2020)

I'm going to assume they are starting it at 1.2.

Devs will not go back and implement this in older titles; it's not worth spending the resources on that.

It will be interesting to see how this pans out over time. It's certainly a boon that AMD created the APUs for the XBONE and PS5, so we PC gamers will also reap the rewards of simpler (and better) game ports. This DX12/DirectStorage/XBONE update will likely pass through as well, since the XBONE is of course essentially just a PC now. The future is bright.

The key is that the feature requires a DX12-capable GPU, not that the game has to run in DX12 mode.

(At this stage, no one knows if the feature will be rolled back to older GPUs; we've only got a few vague clues.)

For reference: a 1080p screen at 21.5 inches (around 102 PPI, a bit above the 92 PPI of a 1080p 24-inch screen), my phone's 1280x720 5.7-inch screen scaled to match its real-world size against my display at the upper right, and Firefox Responsive Design Mode on the lower right to show what it would look like if phones didn't have high-pixel-density displays. The giant black/white circles are the size of my fingertip on the screen.
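The PPI figures above come from dividing a screen's diagonal length in pixels by its diagonal size in inches. A quick sketch (the function name `ppi` is just for illustration):

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


if __name__ == "__main__":
    print(f"{ppi(1920, 1080, 21.5):.0f}")  # 21.5" 1080p monitor: 102
    print(f"{ppi(1920, 1080, 24):.0f}")    # 24" 1080p monitor: 92
    print(f"{ppi(1280, 720, 5.7):.0f}")    # 5.7" 720p phone: 258
```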

Your issue is that you need or want to be able to see a massive amount of content (otherwise you wouldn't have a 4K screen), have a small desk, and want to use normal scaling. You can't have all three. Something's gotta give. You gotta step down your resolution, get a bigger desk, or get used to scaling.
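The trade-off can be put in numbers. Assuming percentage-based UI scaling like the option in Windows display settings, the usable workspace is roughly the physical resolution divided by the scale factor. The function name `logical_resolution` is hypothetical and only for illustration.

```python
def logical_resolution(width_px: int, height_px: int, scale_percent: int) -> tuple[int, int]:
    """Approximate usable workspace after UI scaling (physical pixels / scale factor)."""
    factor = scale_percent / 100
    return round(width_px / factor), round(height_px / factor)


if __name__ == "__main__":
    for scale in (100, 150, 200):
        print(scale, logical_resolution(3840, 2160, scale))
    # 100 (3840, 2160)
    # 150 (2560, 1440)
    # 200 (1920, 1080)
```

In other words, a 4K panel at 150% shows about as much content as a native 1440p panel, just rendered more sharply.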

I can honestly see this working on DX11 cards in theory, but as with anything in the tech world, unless it's pushing something new or shiny, you will pretty much never see it backported en masse.

Older GPUs don't even get driver updates anymore, so even if M$ makes them work somehow they won't get the driver needed.