Thursday, July 17th 2025

AMD Radeon AI PRO R9700 GPU Arrives on July 23rd

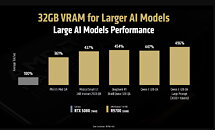

AMD confirmed today that its RDNA 4‑based Radeon AI PRO R9700 GPU will reach retail on Wednesday, July 23. Built on the Navi 48 die with a full 32 GB of GDDR6 memory and supporting PCIe 5.0, the R9700 is specifically tuned for lower‑precision calculations and demanding AI workloads. According to AMD, it delivers up to 496% faster inference on large transformer models compared to NVIDIA's desktop RTX 5080, at roughly half the price of the forthcoming RTX PRO "Blackwell" series. At launch, the Radeon AI PRO R9700 will only be offered inside turnkey workstations from OEM partners such as Boxx and Velocity Micro. Enthusiasts who wish to install the card themselves can expect standalone boards from ASRock, PowerColor, and other add‑in‑board vendors later in Q3. Early listings suggest a price of around $1,250, placing it above the $599 RX 9070 XT yet considerably below competing NVIDIA workstation GPUs. Retailers are already accepting pre-orders.

Designed for AI professionals who require more than what consumer‑grade hardware can provide, the Radeon AI PRO R9700 excels at natural language processing, text‑to‑image generation, generative design, and other high‑complexity tasks that rely on large models or memory‑intensive pipelines. Its 32 GB of VRAM allows production-scale inference, local fine-tuning, and multi-modal workflows to run entirely on-premises, improving performance, reducing latency, and enhancing data security compared to cloud-based solutions. Full compatibility with AMD's open ROCm 6.3 platform provides developers with access to leading frameworks, including PyTorch, ONNX Runtime, and TensorFlow. This enables AI models to be built, tested, and deployed efficiently on local workstations. The card's compact dual-slot design and blower-style cooler ensure reliable front-to-back airflow in dense multi-GPU configurations, making it simple to expand memory capacity, deploy parallel inference pipelines, and sustain high-throughput AI infrastructure in enterprise environments.
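To put the 32 GB figure in context, a rough back-of-the-envelope estimate of model weight memory is parameter count times bytes per parameter. The sketch below is illustrative only: the function names, the 20% overhead allowance for activations and cache, and the example model sizes are assumptions for the sake of the arithmetic, not figures from AMD.

```python
# Rough estimate: weight memory = parameter count x bytes per parameter.
# The overhead fraction and model sizes below are illustrative assumptions.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}
VRAM_GB = 32.0  # capacity quoted for the R9700 in the article


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Approximate memory for model weights only, in GB (10^9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits_in_vram(params_billion: float, precision: str,
                 vram_gb: float = VRAM_GB, overhead: float = 0.2) -> bool:
    """True if weights plus an assumed overhead fraction fit in vram_gb."""
    needed = weight_footprint_gb(params_billion, precision) * (1.0 + overhead)
    return needed <= vram_gb


if __name__ == "__main__":
    # Hypothetical model sizes, in billions of parameters.
    for size in (8, 14, 32, 70):
        for precision in BYTES_PER_PARAM:
            gb = weight_footprint_gb(size, precision)
            verdict = "fits" if fits_in_vram(size, precision) else "too large"
            print(f"{size:>3}B @ {precision:<4}: {gb:6.1f} GB weights, {verdict}")
```

Under these assumptions, a model in the 30-billion-parameter range would need quantized weights to fit on a single card, while larger models would have to be split across several cards. Real-world usage depends heavily on context length, batch size, and the runtime in use.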

Sources:

AMD Blog, via VideoCardz

90 Comments on AMD Radeon AI PRO R9700 GPU Arrives on July 23rd

The initial announcement more than implied that many AIBs (pretty much all of AMD's partners) will be making R9700s. Plus, Gigabyte has an Aorus (gaming) branded R9700 listing up.

BTW: Tech-America has since pulled the listings.

Why not pick a model that's optimized for RDNA 4 and claim ~50% better performance? Everyone knows that first-party benchmarks and advertising claims are just marketing. We're willing to accept a certain amount of BS. But there's a difference between cherry-picking benchmarks where you perform better, and being so desperate to appear competitive you lose all credibility.

This is regarding productivity OFC, not gaming, where RDNA 4 is reasonably competitive vs Blackwell.

Beyond just the hardware, developer and software support for NVIDIA's CUDA architecture is so many orders of magnitude ahead it's not even funny. AMD has made some steps in the right direction recently, but they have a lot of catching up to do and NVIDIA has insane momentum.

Anything to show a bigger bar chart.

If it actually retails for that price, it will be a great option for those that have the knowledge and bandwidth to sort out some minor ROCm quirks, or that use stacks that support it already.

Even a $300 RTX 5060 or a used RTX 2060 will run CUDA software, though you might end up desiring more VRAM.

AMD needs to prove to software companies, developers, and users that they will continue to support cards many years after release, and that code will function on a 10-year-old CUDA card or a brand new one, like NVIDIA does.

What they're doing instead is trying to write translation layers like SCALE or ZLUDA so that CUDA will work on AMD. It's not working very well. The problem is NVIDIA released CUDA in 2007 and has been steadily improving it since then, while fostering developer and partner support by providing free resources, libraries and education, at great cost. That investment is what has paid off and continues to pay off as now everyone is pulling on the CUDA rope, whereas AMD skimping on support and development has meant they are irrelevant in the prosumer/workstation domain. Support is rarely dropped; even the recent fiasco over 32-bit PhysX being deprecated (due to 32-bit CUDA being dropped) with Blackwell only goes to show that the concept of an NVIDIA card not natively running everything is incredibly shocking to the community.

:laugh:

Making a forward thinking product before anyone else realised it was a good idea, then working on it continuously for ~20 years while retaining support for older architectures, promoting adoption, is "rigging the game".

Mhm.

I suppose AMD should have given the x86-64 to Intel instead of cross licensing it? I mean why patent or protect their IP? AMD should just give away everything for free. That's what they've been doing all this time right?

Businesses operate as businesses, more news at 7.

The key words there are "compelling" and "better". AMD and its partners would have to do how many millions/billions of man hours of development to get to the point where those words were accurate descriptions? I too enjoy some wishful thinking sometimes but doing so doesn't make me an optimist that these things will or even can happen.

Thankfully the GPU division isn't going to just die, because it's pulled along by AI/CDNA at hyperscale, hence why RDNA is being dropped next gen.

In most cases Nvidia is still the option that makes the most sense, but AMD products are getting traction and I do recommend you get updated on the ongoing progress for that, since your opinion seems to be quite outdated.

That's not meant to sustain AMD, but rather to enable folks to use an AMD product with their software stack. There's no point in having ROCm working flawlessly and really amazing and effective rack scale hardware solutions if you won't be able to hire anyone that can work on those.

Nvidia has done the same with GeForce and CUDA, enabling students and researchers to dip into the stack with consumer-grade hardware, and then move up the chain and make use of their bigger offerings as time goes, with Pro offerings for their workstations so they can develop stuff, and deploying such things on the big x100 chips, all with the same code.

AMD is now trying to do a similar thing, and UDNA is a clear example of this path. Get consumer-grade hardware that enables the common person to dip their toes into ROCm (this is the part they are mostly lacking), allow them to have a better workstation option paid by their employer (product in the OP), and then deploy this on a big Instinct platform.

CUDA is not a single piece of software, and MANY parts of it are open sourced already. Your complaint is clearly from someone that just wants to bash at a company without any experience on the actual stack.

Technically having a similar capability ≠ equivalent to.

The market speaks for itself.

RTX 3090s/4090s are still snapped up for close to what people paid for them new, because they're just so useful.

Same goes for two to three generation old RTX Pro cards, which are also valuable on the second hand market because they too, are just so useful.

What's a Vega 64/AMD workstation card from a few generations ago worth now when support has already dropped?

Heck, even upstream PyTorch support is in place and you can make use of it as simply as if you were running CUDA.

That's unrelated to Nvidia itself. AMD's Instinct GPUs are also backordered by a LOT. Ofc their capacity planning was a fraction of Nvidia's, but still.

People are looking for accelerators right and left.

That's a wrong comparison. Vega 64 is worth as much as Pascal, no one cares about those and newer gens made them look like crap.

AMD only started having worthwhile hardware for compute with the 7000 generation/RDNA3, before that it was moot talking about it given that it lacked both hardware and software.

Back to your point:

So are the 7900xtx'es (which had a lower MSRP than the nvidia equivalent to begin with), and hence why I'm still considering another used 3090 instead of a 4090/7900xtx. But I'm in no rush and will wait for the 24GB battlemage and that r9700 before deciding.

If I were able to snatch a 7900xtx for cheap, I would have already bought a couple of those :laugh:

Viable (if that's true, which isn't clear) ≠ competitive.

Ask a dev whether they'd prefer a Vega 64 or a 1080 Ti. Are you really going to keep pretending they're even close to being equivalent for productivity software?

The 1080 Ti supports CUDA. The Vega 64 supports what?

Another false equivalence. The 7900XTXs are nowhere near as sought after as the 24/32 GB NVIDIA consumer cards for prosumer/workstation, beyond people who don't actually make money with their GPU and just like big VRAM numbers. The release of the 9070XT has made them essentially irrelevant for gaming too, though there are still hangers-on due to what, 5% better raster?

Again, CUDA still works on these cards, they're just not getting further developments.

It is starting to be viable. Just to be clear, so far we have been talking about AI and whatnot, for which Pytorch already has upstream ROCm support and even vLLM is getting first-grade support after AMD got their shit straight and is helping the devs.

If you were to say something like rendering (which is not my area), I'd have no opinion whatsoever, other than agreeing that OptiX simply mops the floor with AMD when it comes to Blender.

The 1080ti has no cooperative matrix support, and its performance is subpar, a 2060 manages to be over 2x faster for anything matrices.

Both are shit.

Do you know what folks on a really tight budget prefer? Cheaper and crappy GPUs with tons of VRAM, be it a P40 or a MI25. If not that, geforce pascal makes no sense whatsoever and you'd be better off with a 2060, so vega64 and 1080ti are equally irrelevant.

7900XTXs are simply almost impossible to find used in my market, and go for way more than 3090s, and priced way too close to 4090s (which doesn't make sense whatsoever).

Looking over on ebay US, 3090s are a bit cheaper than 7900XTXs, with the 4090s being way more expensive than both.

Now I'm confused. We are talking about used products. People making proper money as a business won't even be looking for those so the discussion of used products would be moot.

Hobbyists and small scale stuff would be the ones looking into those, no matter if nvidia or AMD, and that's where the argument makes sense.

What does gaming have to do with anything we've discussed so far?

So? How's that relevant? I'm not talking about those lacking any sort of software support, rather that the hardware itself is useless, which applies for both vega64 and the 1080ti.

Again, all your points seem to be from someone that has no industry experience in that specific field. I'm not sure what you're even trying to argue for anymore lol

lol

They are comparing their best vs their best.