News Posts matching #SDK

Innodisk Proves AI Prowess with Launch of FPGA Machine Vision Platform

Innodisk, a leading global provider of industrial-grade flash storage, DRAM memory and embedded peripherals, has announced its latest step into the AI market, with the launch of EXMU-X261, an FPGA Machine Vision Platform. Powered by AMD's Xilinx Kria K26 SOM, which was designed to enable smart city and smart factory applications, Innodisk's FPGA Machine Vision Platform is set to lead the way for industrial system integrators looking to develop machine vision applications.

Automated defect inspection, a key machine vision application, is an essential technology in modern manufacturing. Automated visual inspection verifies that each product works as expected and meets specifications, which requires an inspection system that is both fast and highly accurate. Without AI, operators must inspect each product manually, taking an average of three seconds per item. With AI solutions such as Innodisk's FPGA Machine Vision Platform, product inspection in factories can be automated, and the result is not only faster and cheaper, but can be completely free of human error.

HaptX Introduces Industry's Most Advanced Haptic Gloves, Priced for Scalable Deployment

HaptX Inc., the leading provider of realistic haptic technology, today announced the availability of pre-orders of the company's new HaptX Gloves G1, a ground-breaking haptic device optimized for the enterprise metaverse. HaptX has engineered HaptX Gloves G1 with the features most requested by HaptX customers, including improved ergonomics, multiple glove sizes, wireless mobility, new and improved haptic functionality, and multiplayer collaboration, all priced as low as $4,500 per pair - a fraction of the cost of the award-winning HaptX Gloves DK2.

"With HaptX Gloves G1, we're making it possible for all organizations to leverage our lifelike haptics," said Jake Rubin, Founder and CEO of HaptX. "Touch is the cornerstone of the next generation of human-machine interface technologies, and the opportunities are endless." HaptX Gloves G1 leverages advances in materials science and the latest manufacturing techniques to deliver the first haptic gloves that fit like a conventional glove. The Gloves' digits, palm, and wrist are soft and flexible for uninhibited dexterity and comfort. Available in four sizes (Small, Medium, Large, and Extra Large), these Gloves offer the best fit and performance for all adult hands. Inside the Gloves are hundreds of microfluidic actuators that physically displace your skin, so when you touch and interact with virtual objects, the objects feel real.

Tap Systems Launches TapXR - A Wrist Worn Keyboard/Controller For AR and VR

Tap Systems is excited to announce the release of the new TapXR wearable keyboard and controller. The TapXR is the first wrist-wearable device that allows users to type, input commands, and navigate menus. It allows fast, accurate, discreet, and eyes-free texting and control with any Bluetooth device, including phones, tablets, smart TVs, and virtual and augmented reality headsets. TapXR works by sensing the user's finger taps on any surface and decoding them into digital signals.

While conventional hand gestures are slow, error-prone, and fatiguing, tapping is fast, accurate, and does not cause visual or physical fatigue. Tap users have achieved typing speeds of over 70 words per minute using one hand. And while hand tracking supports relatively few gestures and offers no haptic feedback, TapXR supports over 100 unique commands and is inherently tactile.

Intel Accelerates Developer Innovation with Open, Software-First Approach

On Day 2 of Intel Innovation, Intel illustrated how its efforts and investments to foster an open ecosystem catalyze community innovation, from silicon to systems to apps and across all levels of the software stack. Through an expanding array of platforms, tools and solutions, Intel is focused on helping developers become more productive and more capable of realizing their potential for positive social good. The company introduced new tools to support developers in artificial intelligence, security and quantum computing, and announced the first customers of its new Project Amber attestation service.

"We are making good on our software-first strategy by empowering an open ecosystem that will enable us to collectively and continuously innovate," said Intel Chief Technology Officer Greg Lavender. "We are committed members of the developer community and our breadth and depth of hardware and software assets facilitate the scaling of opportunities for all through co-innovation and collaboration."

NVIDIA Jetson AGX Orin 32GB Production Modules Now Available

Bringing new AI and robotics applications and products to market, or supporting existing ones, can be challenging for developers and enterprises. The NVIDIA Jetson AGX Orin 32 GB production module—available now—is here to help. Nearly three dozen technology providers in the NVIDIA Partner Network worldwide are offering commercially available products powered by the new module, which provides up to a 6x performance leap over the previous generation.

With a wide range of offerings from Jetson partners, developers can build and deploy feature-packed Orin-powered systems sporting cameras, sensors, software and connectivity suited for edge AI, robotics, AIoT and embedded applications. Production-ready systems with options for peripherals enable customers to tackle challenges in industries from manufacturing, retail and construction to agriculture, logistics, healthcare, smart cities, last-mile delivery and more.

Mobileye Launches EyeQ Kit: New SDK for Advanced Safety and Driver-Assistance Systems

Mobileye, an Intel company, has launched the EyeQ Kit - its first software development kit (SDK) for the EyeQ system-on-chip that powers driver-assistance and future autonomous technologies for automakers worldwide. Built to leverage the powerful and highly power-efficient architecture of the upcoming EyeQ 6 High and EyeQ Ultra processors, EyeQ Kit allows automakers to utilize Mobileye's proven core technology, while deploying their own differentiated code and human-machine interface tools on the EyeQ platform.

"EyeQ Kit allows our customers to benefit from the best of both worlds — Mobileye's proven and validated core technologies, along with their own expertise in delivering unique driver experiences and interfaces. As more core functions of vehicles are defined in software, we know our customers will want the flexibility and capacity they need to differentiate and define their brands through code."

- Prof. Amnon Shashua, Mobileye president and chief executive officer

Synopsys Introduces Industry's Highest Performance Neural Processor IP

Addressing increasing performance requirements for artificial intelligence (AI) systems on chip (SoCs), Synopsys, Inc. today announced its new neural processing unit (NPU) IP and toolchain that delivers the industry's highest performance and support for the latest, most complex neural network models. Synopsys DesignWare ARC NPX6 and NPX6FS NPU IP address the demands of real-time compute with ultra-low power consumption for AI applications. To accelerate application software development for the ARC NPX6 NPU IP, the new DesignWare ARC MetaWare MX Development Toolkit provides a comprehensive compilation environment with automatic neural network algorithm partitioning to maximize resource utilization.

"Based on our seamless experience integrating the Synopsys DesignWare ARC EV Processor IP into our successful NU4000 multi-core SoC, we have selected the new ARC NPX6 NPU IP to further strengthen the AI processing capabilities and efficiency of our products when executing the latest neural network models," said Dor Zepeniuk, CTO at Inuitive, a designer of powerful 3D and vision processors for advanced robotics, drones, augmented reality/virtual reality (AR/VR) devices and other edge AI and embedded vision applications. "In addition, the easy-to-use ARC MetaWare tools help us take maximum advantage of the processor hardware resources, ultimately helping us to meet our performance and time-to-market targets."

Report: AMD Radeon Software Could Alter CPU Settings Quietly

According to a recent investigation by the German publication Igor's Lab, AMD's Adrenalin GPU software can behave unexpectedly when the Ryzen Master software is integrated into it. Reportedly, the combination of the two can cause Adrenalin to change the CPU's PBO (Precision Boost Overdrive) and Precision Boost settings without the user's permission. Igor's Lab investigated a case in which Adrenalin automatically enabled PBO, or the "CPU OC" setting, when applying GPU profiles. This also happens when the GPU is in Default mode, which the software sets automatically.

These changes can happen without the user's knowledge. If a user applies custom voltage and frequency settings in the BIOS, Adrenalin can, and sometimes will, override them with arbitrary values, potentially affecting the CPU's stability. The software can also alter CPU power limits, since it has the means to do so. The problem only occurs when an AMD CPU is paired with an AMD GPU and the AMD Ryzen Master SDK is installed; other configurations are unaffected. There are workarounds: going back to the BIOS to re-apply CPU settings manually, or disabling PBO. A Reddit user found that creating new GPU tuning profiles, rather than loading older ones, also prevents Adrenalin from adjusting CPU settings. AMD has not commented on the issue, and it remains unclear why this is happening.

Intel XeSS Coming Early-Summer Alongside Arc 5 and Arc 7 Series

XeSS is arguably the most heavily promoted new graphics technology from Intel. A performance enhancement functionally similar to AMD FSR and NVIDIA DLSS, XeSS will be instrumental in helping Arc "Alchemist" GPUs deliver high performance with minimal loss of image quality, especially since Intel's first attempt at premium gaming GPUs also includes real-time ray tracing support to meet the DirectX 12 Ultimate specification.

Intel announced that XeSS won't debut with the Arc 3 series mobile GPUs that launched yesterday (March 30), but will instead arrive alongside the Arc 5 and Arc 7 series, slated for early summer (late May to early June). At launch, several AAA titles will be optimized for XeSS, including "Ghostwire: Tokyo," "Death Stranding," "Anvil," "Hitman III," and "GRID Legends."

Imagination Launches RISC-V CPU Family

Imagination Technologies announces Catapult, a RISC-V CPU product line designed from the ground up for next-generation heterogeneous compute needs. Based on RISC-V, the open-standard instruction set architecture (ISA) that is transforming processor design, Imagination's Catapult CPUs can be configured for performance, efficiency, or balanced profiles, making them suitable for a wide range of markets.

Backed by Imagination's 20 years of experience in delivering complex IP solutions, the new CPUs are supported by the rapidly expanding open-standard RISC-V ecosystem, which continues to shake up the embedded CPU industry by offering greater choice. Imagination's entry will let the RISC-V ecosystem offer a greater range of products, especially for heterogeneous systems. Customers now have an even wider choice of solutions built on the open RISC-V ISA, avoiding lock-in to proprietary architectures.

NVIDIA Announces Updated Open-Source Image Scaling SDK

For the past two years, NVIDIA has offered a driver-based spatial upscaler called NVIDIA Image Scaling and Sharpening that works with all games and requires no game or SDK integration. With the new November GeForce Game Ready Driver, we have improved the scaling and sharpening algorithm, which now uses a 6-tap filter with four directional scaling filters and adaptive sharpening filters to boost performance. We have also added an in-game sharpness slider, accessible via GeForce Experience, so you can adjust sharpness in real time.

In contrast to NVIDIA DLSS, the algorithm is non-AI and non-temporal, using only the current low-resolution frame rendered by the game as its input. While the resulting image quality is best-in-class compared with the scaling offered by monitors or other in-game scaling techniques, it lacks the temporal data and AI smarts of DLSS, which are required to deliver native-resolution detail and robust frame-to-frame stability. By combining NVIDIA DLSS and NVIDIA Image Scaling, developers get the best of both worlds: NVIDIA DLSS for the best image quality, and NVIDIA Image Scaling for cross-platform support.
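
To make the "6-tap filter" idea more concrete, here is a minimal one-dimensional sketch of 6-tap resampling using a Lanczos kernel with a = 3, which spans exactly six input pixels. This is an assumption for illustration only: NVIDIA Image Scaling uses its own directional filter coefficients and adaptive sharpening, neither of which is reproduced here, and the function names are hypothetical. Like NIS, though, it is purely spatial: each output pixel depends only on pixels of the current low-resolution frame, with no history from earlier frames.

```python
import math


def lanczos3(x: float) -> float:
    """Lanczos kernel with a = 3; non-zero over |x| < 3, i.e. six taps."""
    if x == 0.0:
        return 1.0
    if abs(x) >= 3.0:
        return 0.0
    px = math.pi * x
    return 3.0 * math.sin(px) * math.sin(px / 3.0) / (px * px)


def upscale_row(row: list[float], scale: float) -> list[float]:
    """Resample one row of pixels to len(row) * scale using 6 taps per output pixel.

    Hypothetical illustration of generic 6-tap spatial resampling, not NVIDIA's algorithm.
    """
    n_in = len(row)
    n_out = int(round(n_in * scale))
    out = []
    for i in range(n_out):
        # Map the centre of output pixel i back into input-pixel coordinates.
        src = (i + 0.5) / scale - 0.5
        base = math.floor(src)
        acc = 0.0
        wsum = 0.0
        for k in range(base - 2, base + 4):  # six taps: base-2 .. base+3
            w = lanczos3(src - k)
            # Clamp at the borders so edge pixels are reused instead of reading out of range.
            acc += w * row[min(max(k, 0), n_in - 1)]
            wsum += w
        # Normalise so a flat input stays flat after resampling.
        out.append(acc / wsum)
    return out
```

For a 2D frame, a filter like this would typically be applied along rows and then along columns (separable filtering); NIS instead uses direction-aware filters plus a sharpening pass, which this sketch does not attempt.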

AMD Enables FidelityFX Suite on Xbox Series X|S

AMD has announced that Microsoft's Xbox Series X|S now supports the company's FidelityFX suite. This move, which brings previously PC-centric technologies to Microsoft's latest-generation gaming consoles, will bring feature parity across RDNA 2-powered graphics, and will eventually enable support for AMD's FSR (FidelityFX Super Resolution), the company's upcoming competitor to NVIDIA's DLSS.

This means that, besides the technologies that are part of the DirectX 12 Ultimate specification (which the consoles obviously already support), developers now have access to AMD's FidelityFX technologies such as Contrast Adaptive Sharpening, Variable Rate Shading, the ray-traced shadow denoiser, Ambient Occlusion, and Screen Space Reflections. All of these AMD-led developments in the SDK allow for higher performance and/or better visual fidelity. However, the icing on the cake should be FSR support, which could help the Series X live up to its 8K claims (alongside high-refresh-rate 4K gaming), should FSR turn out to be in a similar performance-enhancing ballpark as NVIDIA's DLSS, which we can't really know for sure at this stage (and likely neither can AMD). No FidelityFX support has been announced for the PS5 at this time, which raises the question of whether it will eventually arrive, or whether Sony will enable a similar feature through its own development tools.

NVIDIA Extends Data Center Infrastructure Processing Roadmap with BlueField-3 DPU

NVIDIA today announced the NVIDIA BlueField-3 DPU, its next-generation data processing unit, designed to deliver the most powerful software-defined networking, storage, and cybersecurity acceleration capabilities available for data centers.

The first DPU built for AI and accelerated computing, BlueField-3 lets every enterprise deliver applications at any scale with industry-leading performance and data center security. It is optimized for multi-tenant, cloud-native environments, offering software-defined, hardware-accelerated networking, storage, security and management services at data-center scale.

Get Re-Connected With the Latest Version of EK-Loop Connect Software and Sensors

EK, the leading computer cooling solutions provider, is announcing the release of EK-Loop Connect software version 1.3.11. As a company dedicated to providing water cooling enthusiasts and PC builders with the best the market has to offer, EK reworked the EK-Loop Connect software after listening to customer feedback several months ago. Today, the new version of the software is officially ready and available for download.

"We were fortunate enough to have onboarded a team of software developers who were dedicated to the cause and managed to deliver functional software within the set deadline. We promised to deliver the software within 3 months, and here we are. The latest version of the EK-Loop Connect software was tested and confirmed by a small group of beta testers, and today we are ready to present it to the public," said Edvard König, founder of EK.

NVIDIA Reflex Feature Detailed: Vastly Reduces Input Latency, Measures End-to-End System Latency

NVIDIA Reflex is a new technology designed to minimize input latency in competitive e-sports games. When it arrives later this month, through patches to popular e-sports titles such as Fortnite, Apex Legends, and Valorant along with a GeForce driver update, the feature could improve input latency even without any specialized hardware. Input latency is the time it takes for a user input in a game (such as a mouse click) to be reflected as output on the screen; in an online shooter, for example, it is the time it takes for your mouse click to register as a gunshot and appear on-screen. The feature is compatible with any NVIDIA GeForce GPU from the GTX 900 series onward.

NVIDIA briefly detailed how this works. On the software side, the NVIDIA driver cooperates with a compatible game engine to optimize the game's 3D rendering pipeline. This is accomplished by dynamically reducing the render queue, so fewer frames are queued up for the GPU to render. NVIDIA claims that the technology can also keep the GPU perfectly in sync with the CPU (1:1 render queue), reducing the "back-pressure" on the GPU and letting the game sample mouse input at the last possible moment. NVIDIA is releasing Reflex to gamers as GeForce driver updates, and to game developers as the Reflex SDK. This allows developers to integrate the technology into their game engines, provide an in-game toggle for it, and display in-game performance metrics.
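
A back-of-the-envelope model shows why shrinking the render queue matters. This is a deliberately simplified sketch, not NVIDIA's latency model: it assumes a GPU-bound game in which the CPU stage and the GPU stage each take one frame time, and each frame already waiting in the render queue adds one more frame time between input sampling and the frame being finished. Display scan-out and peripheral latency are ignored, and the function name is hypothetical.

```python
def render_latency_ms(fps: float, queue_depth: int) -> float:
    """Rough input-to-finished-frame latency for a GPU-bound game (toy model).

    Assumptions (hypothetical, not NVIDIA's model): input is sampled when the CPU
    starts a frame (one frame time of CPU work), the submitted frame then waits
    behind `queue_depth` earlier frames (one frame time each), and finally the
    GPU renders it (one more frame time).
    """
    if fps <= 0 or queue_depth < 0:
        raise ValueError("fps must be positive and queue_depth non-negative")
    frame_ms = 1000.0 / fps
    return (1 + queue_depth + 1) * frame_ms


if __name__ == "__main__":
    # Compare progressively shallower render queues at 100 FPS.
    for depth in (3, 2, 1, 0):
        print(f"queue depth {depth}: ~{render_latency_ms(100.0, depth):.0f} ms")
```

Under these assumptions, each queued frame costs about 10 ms at 100 FPS, so draining a three-frame queue would cut the estimate from about 50 ms to about 20 ms. Real-world gains depend on the game, GPU load, and frame pacing.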

IBM Delivers Its Highest Quantum Volume to Date

Today, IBM unveiled a new milestone on its quantum computing roadmap, achieving the company's highest Quantum Volume to date. Combining a series of new software and hardware techniques to improve overall performance, IBM upgraded one of its newest 27-qubit client-deployed systems to achieve a Quantum Volume of 64. The company has made a total of 28 quantum computers available over the last four years through IBM Quantum Experience.

Achieving Quantum Advantage, the point at which certain information processing tasks can be performed more efficiently or cost-effectively on a quantum computer than on a classical one, will require improved quantum circuits, the building blocks of quantum applications. Quantum Volume measures the length and complexity of circuits: the higher the Quantum Volume, the greater the potential for exploring solutions to real-world problems across industry, government, and research.

To achieve this milestone, the company focused on a new set of techniques and improvements that used knowledge of the hardware to optimally run the Quantum Volume circuits. These hardware-aware methods are extensible and will improve any quantum circuit run on any IBM Quantum system, resulting in improvements to the experiments and applications which users can explore. These techniques will be available in upcoming releases and improvements to the IBM Cloud software services and the cross-platform open source software development kit (SDK) Qiskit.
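
For context, Quantum Volume is reported as a power of two. In IBM's published protocol, a machine runs random "square" circuits whose width (number of qubits) equals their depth; a size n passes when the measured heavy-output probability exceeds 2/3 with roughly two-sigma confidence, and the Quantum Volume is 2^n for the largest passing n. Quantum Volume 64 therefore corresponds to passing 6-qubit, depth-6 circuits. The sketch below only does that bookkeeping on already-measured results; the function names and the sample numbers are hypothetical, and it does not run circuits or call Qiskit.

```python
def passes_heavy_output_test(hop_mean: float, hop_stderr: float) -> bool:
    """Pass if the mean heavy-output probability clears 2/3 by about two standard errors."""
    return hop_mean - 2.0 * hop_stderr > 2.0 / 3.0


def quantum_volume(results: dict[int, tuple[float, float]]) -> int:
    """Map square-circuit size n -> (mean heavy-output probability, standard error) to QV.

    Returns 2**n for the largest size n that passes; 1 if nothing passes.
    """
    best = 0
    for n in sorted(results):
        mean, stderr = results[n]
        if passes_heavy_output_test(mean, stderr):
            best = n
    return 2 ** best


if __name__ == "__main__":
    # Made-up measurements for illustration only, not IBM's data.
    measured = {
        4: (0.78, 0.01),
        5: (0.74, 0.01),
        6: (0.71, 0.01),  # 0.71 - 2 * 0.01 = 0.69 > 2/3, so it passes
        7: (0.68, 0.01),  # 0.68 - 2 * 0.01 = 0.66 < 2/3, so it fails
    }
    print(quantum_volume(measured))
```

With these sample numbers, size 6 is the largest passing size, giving 2^6 = 64.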

Intel Hit by a Devastating Data Breach, Chip Designs, Code, Possible Backdoors Leaked

Intel on Thursday was hit by a massive data breach, with someone on Twitter posting links to an archive containing the dump: a 20-gigabyte treasure chest that includes, but is not limited to, Intel Management Engine bring-up guides, flashing tools, and samples; source code of the Consumer Electronics Firmware Development Kit (CEFDK); silicon and FSP source packages for various platforms; an assortment of development and debugging tools; Simics simulation for "Rocket Lake S" and other platforms; a wealth of roadmaps and other documents; schematics, documents, tools, and firmware for "Tiger Lake"; Intel Trace Hub + decoder files for various Intel ME versions; "Elkhart Lake" silicon reference and sample code; a Bootguard SDK; a "Snow Ridge" simulator; design schematics of various products; and more.

The most fascinating part of the leak is that the person behind it points to the possibility of Intel placing backdoors in its code and designs, a very tinfoil-hat theory, though a likely possibility in the post-9/11 world. Intel, in a comment to Tom's Hardware, denied that its security apparatus had been compromised, and instead blamed someone with access to this information for downloading the data. "We are investigating this situation. The information appears to come from the Intel Resource and Design Center, which hosts information for use by our customers, partners and other external parties who have registered for access. We believe an individual with access downloaded and shared this data," a company spokesperson said.

Matrox D1450 Graphics Card for High-Density Output Video Walls Now Shipping

Matrox is pleased to announce that the Matrox D-Series D1450 multi-display graphics card is now shipping. Purpose-built to power next-generation video walls, this new single-slot, quad-4K HDMI graphics card enables OEMs, system integrators, and AV installers to easily combine multiple D1450 boards to quickly deploy high-density-output video walls of up to 16 synchronized 4K displays. Along with a rich assortment of video wall software and developer tools for advanced custom control and application development, D1450 is ideal for a broad range of commercial and critical 24/7 applications, including control rooms, enterprises, industries, government, military, digital signage, broadcast, and more.

Advanced capabilities

Backed by innovative technology and deep industry expertise, D1450 delivers exceptional video and graphics performance on up to four 4K HDMI monitors from a single-slot card. OEMs, system integrators, and AV professionals can easily add—and synchronize—displays by framelocking up to four D-Series cards via board-to-board framelock cables. In addition, D1450 offers HDCP support to display copy-protected content, as well as Microsoft DirectX 12 and OpenGL support to run the latest professional applications.
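The 16-display ceiling is simple arithmetic: four HDMI outputs per card multiplied by up to four framelocked cards. The Python sketch below assigns each tile of a rows x cols wall to a (card, output) pair and computes the combined canvas size. It makes two assumptions: the panels are 3840x2160 (4K UHD), and tiles are assigned in row-major order. The function names and the mapping order are hypothetical and do not reflect Matrox's software or configuration format.

```python
OUTPUTS_PER_CARD = 4           # four 4K HDMI outputs per D1450
MAX_CARDS = 4                  # up to four framelocked D-Series cards
PANEL_W, PANEL_H = 3840, 2160  # assumption: 4K UHD panels

def map_wall(rows: int, cols: int) -> dict[tuple[int, int], tuple[int, int]]:
    """Hypothetical row-major assignment of wall tiles to (card, output) pairs."""
    tiles = rows * cols
    limit = OUTPUTS_PER_CARD * MAX_CARDS
    if tiles > limit:
        raise ValueError(f"{tiles} displays exceeds the {limit}-display limit")
    mapping = {}
    for i in range(tiles):
        row, col = divmod(i, cols)
        # divmod(i, 4) gives (card index, output index on that card)
        mapping[(row, col)] = divmod(i, OUTPUTS_PER_CARD)
    return mapping

def canvas_size(rows: int, cols: int) -> tuple[int, int]:
    """Total resolution spanned by a rows x cols wall of identical panels."""
    return cols * PANEL_W, rows * PANEL_H

wall = map_wall(4, 4)
print(len(wall), canvas_size(4, 4))
```

With this assumed ordering, each card drives one row of a 4x4 wall. A real installation may route outputs differently; framelocking only keeps the cards' refreshes in step.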

Dynics Announces AI-enabled Vision System Powered by NVIDIA T4 Tensor Core GPU

Dynics, Inc., a U.S.-based manufacturer of industrial-grade computer hardware, visualization software, network security, network monitoring and software-defined networking solutions, today announced the XiT4 Inference Server, which helps industrial manufacturing companies increase their yield and provide more consistent manufacturing quality.

Artificial intelligence (AI) is increasingly being integrated into modern manufacturing to improve and automate processes, including 3D vision applications. The XiT4 Inference Server, powered by the NVIDIA T4 Tensor Core GPUs, is a fan-less hardware platform for AI, machine learning and 3D vision applications. AI technology is allowing manufacturers to increase efficiency and throughput of their production, while also providing more consistent quality due to higher accuracy and repeatability. Additional benefits are fewer false negatives (test escapes) and fewer false positives, which reduce downstream re-inspection needs, all leading to lower costs of manufacturing.

Epic Online Services Launches with New Tools for Cross-Play and More

Epic Games today announces the launch of Epic Online Services, unlocking the ability to effortlessly scale games and unify player communities for all developers. First announced in December 2018, Epic Online Services are battle-tested and powered by the services built for Fortnite across seven major platforms (PlayStation, Xbox, Nintendo Switch, PC, Mac, iOS, and Android). Open to all developers, Epic Online Services is completely free and offers creators a single SDK to quickly and easily launch, operate, and scale their games across engines, stores, and platforms of their choice.

"At Epic, we believe in open, integrated platforms and in the future of gaming being a highly social and connected experience," said Chris Dyl, General Manager, Online Services, Epic Games. "Through Epic Online Services, we strive to help build a user-friendly ecosystem for both developers and players, where creators can benefit regardless of how they choose to build and publish their games, and where players can play games with their friends and enjoy the same quality experience regardless of the hardware they own."

"At Epic, we believe in open, integrated platforms and in the future of gaming being a highly social and connected experience," said Chris Dyl, General Manager, Online Services, Epic Games. "Through Epic Online Services, we strive to help build a user-friendly ecosystem for both developers and players, where creators can benefit regardless of how they choose to build and publish their games, and where players can play games with their friends and enjoy the same quality experience regardless of the hardware they own."

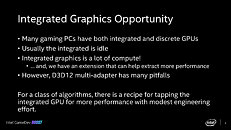

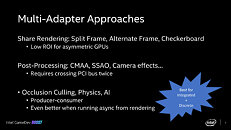

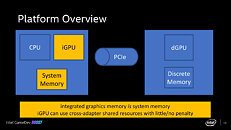

Intel iGPU+dGPU Multi-Adapter Tech Shows Promise Thanks to its Realistic Goals

Intel is revisiting the concept of asymmetric multi-GPU introduced with DirectX 12. The company posted an elaborate technical slide deck it originally planned to present to game developers at the now-cancelled GDC 2020. The technology shows promise because the company isn't insulting developers' intelligence by proposing that the otherwise dormant iGPU shoulder the game's entire rendering pipeline for a single-digit percentage performance boost. Rather, it has come up with innovative additions to the rendering path, such that only certain lightweight compute tasks of the game's rendering are passed on to the iGPU's execution units, letting it make a more meaningful contribution to overall performance. To that end, Intel is working on an SDK that can be integrated with existing game engines.

Microsoft DirectX 12 introduced the holy grail of multi-GPU technology under its Explicit Multi-Adapter specification. This allows game engines to send rendering traffic to any combination or make of GPUs that support the API, to achieve a performance uplift over a single GPU. This was met with a lukewarm reception from AMD and NVIDIA, and far too few DirectX 12 games actually support it. Intel proposes a specialization of the explicit multi-adapter approach, in which the iGPU's execution units process various low-bandwidth elements during both the rendering and post-processing stages, such as occlusion culling, AI, and game physics. Intel's method leverages cross-adapter shared resources sitting in system (main) memory, and D3D12 asynchronous compute, which creates separate processing queues for rendering and compute.
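The producer/consumer pattern behind this approach can be illustrated with a CPU-only toy model in Python. One thread plays the iGPU compute queue and another plays the dGPU render queue. A shared dictionary stands in for the cross-adapter buffer in system memory, and a monotonically increasing counter stands in for a fence. This is not Intel's SDK or the D3D12 API, where the equivalent objects are `ID3D12Fence` and the command queues' `Signal`/`Wait` calls. The culling function and all names below are hypothetical placeholders.

```python
import threading

class Fence:
    """Toy stand-in for a GPU fence: a counter that only moves forward."""

    def __init__(self) -> None:
        self._value = 0
        self._cond = threading.Condition()

    def signal(self, value: int) -> None:
        with self._cond:
            self._value = max(self._value, value)
            self._cond.notify_all()

    def wait(self, value: int) -> None:
        with self._cond:
            self._cond.wait_for(lambda: self._value >= value)

def visible_objects(frame: int, objects: list[int]) -> list[int]:
    # Hypothetical placeholder for occlusion culling; a real culler would test
    # bounding volumes against a depth buffer.
    return [obj for obj in objects if (obj + frame) % 3 != 0]

def run_frames(num_frames: int, objects: list[int]) -> list[tuple[int, int]]:
    shared: dict[int, list[int]] = {}  # stands in for a cross-adapter buffer
    fence = Fence()
    drawn: list[tuple[int, int]] = []

    def igpu_compute_queue() -> None:
        for frame in range(1, num_frames + 1):
            shared[frame] = visible_objects(frame, objects)
            fence.signal(frame)  # publish this frame's culling results

    def dgpu_render_queue() -> None:
        for frame in range(1, num_frames + 1):
            fence.wait(frame)  # never read results the iGPU hasn't finished
            drawn.append((frame, len(shared[frame])))  # "render" visible objects

    workers = [threading.Thread(target=igpu_compute_queue),
               threading.Thread(target=dgpu_render_queue)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return drawn

print(run_frames(3, list(range(10))))
```

The iGPU side never waits, so it can run ahead of the renderer, while the fence keeps the render side from consuming a frame's results early. A real engine would typically reuse a small ring of buffers instead of one entry per frame.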

Khronos Group Releases Vulkan Ray Tracing

Today, The Khronos Group, an open consortium of industry-leading companies creating advanced interoperability standards, announces the ratification and public release of the Vulkan Ray Tracing provisional extensions, creating the industry's first open, cross-vendor, cross-platform standard for ray tracing acceleration. Primarily focused on meeting desktop market demand for both real-time and offline rendering, the release of Vulkan Ray Tracing as provisional extensions enables the developer community to provide feedback before the specifications are finalized. Comments and feedback will be collected through the Vulkan GitHub Issues Tracker and Khronos Developer Slack. Developers are also encouraged to share comments with their preferred hardware vendors. The specifications are available today on the Vulkan Registry.

Ray tracing is a rendering technique that realistically simulates how light rays intersect and interact with scene geometry, materials, and light sources to generate photorealistic imagery. It is widely used for film and other production rendering and is beginning to be practical for real-time applications and games. Vulkan Ray Tracing seamlessly integrates a coherent ray tracing framework into the Vulkan API, enabling a flexible merging of rasterization and ray tracing acceleration. Vulkan Ray Tracing is designed to be hardware agnostic and so can be accelerated on both existing GPU compute and dedicated ray tracing cores if available.
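The core query that ray tracing APIs and hardware accelerate is "where does this ray first hit the scene geometry?" For illustration only, here is the widely used Möller–Trumbore ray/triangle intersection test in plain Python. It is not part of the Vulkan Ray Tracing API. In Vulkan, the implementation performs such tests against acceleration structures, either on GPU compute or on dedicated ray tracing cores. The function and helper names are our own.

```python
from typing import Optional

Vec3 = tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(origin: Vec3, direction: Vec3,
                 v0: Vec3, v1: Vec3, v2: Vec3, eps: float = 1e-9) -> Optional[float]:
    """Return distance t along the ray to the hit point, or None on a miss."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin

tri = ((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (0.0, 1.0, 0.0))
print(ray_triangle((0.0, 0.0, -1.0), (0.0, 0.0, 1.0), *tri))
```

A renderer fires many such rays per pixel and keeps the nearest hit. Acceleration structures exist so that most triangles are never tested at all.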

NVIDIA Files for "Hopper" and "Aerial" Trademarks

Confirming that a future NVIDIA graphics architecture will be codenamed "Hopper," the company has trademarked the term with the USPTO. The trademark application was filed as recently as December 4, and closely follows that of "Aerial," another trademark, which is an SDK for GPU-accelerated 5G vRANs (virtual radio access networks). Named after eminent computer scientist Grace Hopper, the new graphics architecture will reportedly be among the first GPU designs to use an MCM (a package with multiple GPU dies). It reportedly succeeds "Ampere," NVIDIA's next graphics architecture.

NVIDIA Announces New GeForce Experience Features Ahead of RTX Push

NVIDIA today announced new GeForce Experience features to be integrated and expanded in the wake of its RTX platform push. The new features include an increased number of Ansel-supporting titles (including the already released Prey and Vampyr, as well as the upcoming Metro Exodus and Shadow of the Tomb Raider), as well as RTX-exclusive features being implemented into the company's gaming companion application.

There are also some features being implemented that gamers will be able to take advantage of without explicit Ansel SDK integration by the game's developer, which NVIDIA says will bring Ansel support (in some shape or form) to over 200 titles (150 more than the over 50 titles already supported via the SDK). And capitalizing on Battlefield V's relevance to the gaming crowd, NVIDIA also announced support for Ansel and its Highlights feature in the upcoming title.

Microsoft Releases DirectX Raytracing - NVIDIA Volta-based RTX Adds Real-Time Capability

Microsoft today announced an extension to its DirectX 12 API, DirectX Raytracing, which provides components designed to make real-time ray tracing easier to implement and uses compute shaders under the hood for wide graphics card compatibility. NVIDIA feels that its "Volta" graphics architecture has enough computational power on tap to make real-time ray tracing available to the masses. The company has hence collaborated with Microsoft to develop the NVIDIA RTX technology as an interoperable part of the DirectX Raytracing (DXR) API, along with a few turnkey effects, which will be made available through the company's next-generation GameWorks SDK program, under GameWorks Ray Tracing, as a ray-tracing denoiser module for the API.

Real-time ray tracing has long been regarded as a silver bullet for getting lifelike lighting, reflections, and shadows right. Ray tracing is already big in the real-estate industry for showcasing photorealistic interactive renderings of property under development, but has stayed away from gaming, which tends to be more demanding, with larger scenes, more objects, and rapid camera movements. Movies with big production budgets use ray-tracing render farms to pre-render each frame. Movies have, hence, used ray-traced visual effects for years now, since they are not interactive content, and their studios are willing to spend vast amounts of time and money to painstakingly render each frame using hundreds of rays per pixel.
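The reason for hundreds of rays per pixel is that a pixel's lighting is usually estimated by averaging random ray samples (Monte Carlo integration), and the noise of that average falls only as 1/sqrt(N) in the sample count N. The toy Python sketch below estimates one pixel's visibility of a light. It assumes a hypothetical 70% chance that any given shadow ray reaches the light, then measures how much the estimate scatters across repeated trials. Quadrupling the rays per pixel roughly halves the noise. This is why real-time renderers tend to pair a few rays per pixel with a denoiser instead. The numbers and function names are illustrative assumptions, not measurements.

```python
import random
import statistics

TRUE_VISIBILITY = 0.7  # hypothetical: 70% of shadow rays reach the light

def estimate_pixel(rays: int, rng: random.Random) -> float:
    """Average of `rays` binary shadow-ray results (1 = light visible)."""
    hits = sum(1 for _ in range(rays) if rng.random() < TRUE_VISIBILITY)
    return hits / rays

def noise(rays: int, trials: int = 2000, seed: int = 1) -> float:
    """Standard deviation of the pixel estimate across independent trials."""
    rng = random.Random(seed)
    return statistics.pstdev([estimate_pixel(rays, rng) for _ in range(trials)])

for n in (1, 4, 16, 64, 256):
    # Theory for a Bernoulli estimator: sqrt(p * (1 - p) / n)
    theory = (TRUE_VISIBILITY * (1 - TRUE_VISIBILITY) / n) ** 0.5
    print(f"{n:4d} rays/pixel: measured noise {noise(n):.4f}, theory {theory:.4f}")
```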
