Wednesday, March 25th 2020

Intel iGPU+dGPU Multi-Adapter Tech Shows Promise Thanks to its Realistic Goals

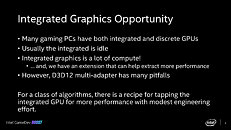

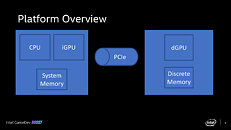

Intel is revisiting the concept of asymmetric multi-GPU introduced with DirectX 12. The company posted an elaborate technical slide-deck it originally planned to present to game developers at the now-cancelled GDC 2020. The technology shows promise because the company isn't insulting developers' intelligence by proposing that the iGPU lying dormant be made to shoulder the game's entire rendering pipeline for a single-digit percentage performance boost. Rather, it has come up with innovative augmentations to the rendering path, such that only certain lightweight compute aspects of the game's rendering are passed on to the iGPU's execution units, so it makes a more meaningful contribution to overall performance. To that effect, Intel is working on an SDK that can be integrated with existing game engines.
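The argument above can be made concrete with a rough back-of-the-envelope model. This is only a sketch and is not taken from Intel's slides: the `Workload` fields, the millisecond figures, and the assumption that the two GPUs overlap perfectly in steady state are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical per-frame timings in milliseconds (illustrative only)."""
    render_ms: float        # main rendering work on the dGPU
    offload_dgpu_ms: float  # the lightweight compute task, if run on the dGPU
    offload_igpu_ms: float  # the same task, if run on the slower iGPU
    copy_ms: float = 0.0    # cost of handing the result across adapters


def frame_time_single(w: Workload) -> float:
    # Single GPU: the dGPU does the rendering and the compute task back to back.
    return w.render_ms + w.offload_dgpu_ms


def frame_time_offloaded(w: Workload) -> float:
    # Assumed ideal overlap: the iGPU works on its task while the dGPU renders,
    # so the frame is bounded by whichever side finishes last.
    return max(w.render_ms, w.offload_igpu_ms + w.copy_ms)


def speedup(w: Workload) -> float:
    return frame_time_single(w) / frame_time_offloaded(w)


if __name__ == "__main__":
    light = Workload(render_ms=14.0, offload_dgpu_ms=2.0, offload_igpu_ms=6.0, copy_ms=0.5)
    heavy = Workload(render_ms=14.0, offload_dgpu_ms=2.0, offload_igpu_ms=20.0, copy_ms=0.5)
    print(f"light task: {speedup(light):.2f}x")  # iGPU finishes within the dGPU's frame
    print(f"heavy task: {speedup(heavy):.2f}x")  # iGPU becomes the bottleneck
```

Under these made-up numbers, the lightweight task gives a modest gain because the iGPU finishes while the dGPU is still rendering. The heavier task makes the iGPU the bottleneck and the frame gets slower, which is why the choice of what to offload matters.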

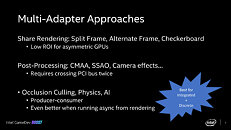

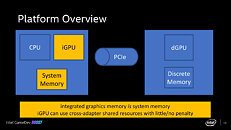

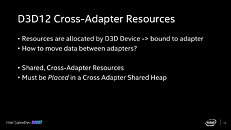

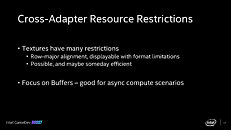

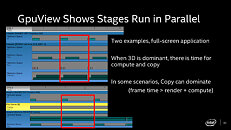

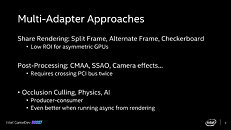

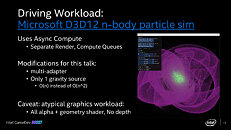

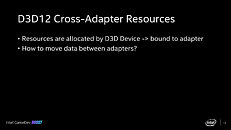

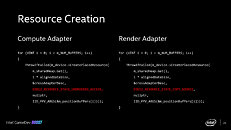

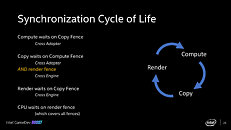

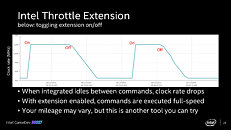

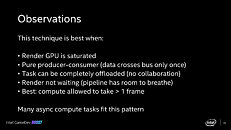

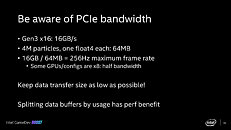

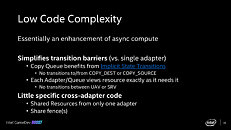

Microsoft DirectX 12 introduced the holy grail of multi-GPU technology under its Explicit Multi-Adapter specification. This allows game engines to send rendering traffic to any combination or make of GPUs that support the API, to achieve a performance uplift over a single GPU. This was met with a lukewarm reception from AMD and NVIDIA, and far too few DirectX 12 games actually support it. Intel proposes a specialization of the explicit multi-adapter approach, in which the iGPU's execution units are made to process various low-bandwidth elements during both the rendering and post-processing stages, such as occlusion culling, AI, and game physics. Intel's method leverages cross-adapter shared resources sitting in system memory (main memory), and D3D12 asynchronous compute, which creates separate processing queues for rendering and compute.

Intel developed easy-to-integrate code for game engine developers to adopt the new tech, including code for creating shared heaps and cross-adapter resources. The presentation also includes examples of how to leverage async compute and get the lightweight rendering and compute paths to work with as little latency as possible. Intel also developed code for cross-adapter synchronization, called Intel Command Queue Throttle. This piece of code is meant to ensure performance and low frame times when the load is inconsistent between the iGPU and dGPU.

All current Intel Graphics drivers include support for the extension, and Intel has started giving out headers for the extension through its developer support. Intel notes that its method can be used for various kinds of async compute tasks such as shadows, AI, mesh deformation, and physics. Load on the system's PCIe and memory bandwidth is minimized because the iGPU isn't made to handle heavyweight resources such as texture filtering.

Intel iGPUs are approaching the 1 TFLOPS compute power barrier, with Gen11 and the upcoming Xe-based iGPU debuting with "Tiger Lake." That's a lot of compute power not to take advantage of. Intel's tech can prove particularly useful with notebooks that have entry-level through mid-range discrete GPUs, as all Intel mobile processors pack iGPUs and implement dynamic switching between iGPU and dGPU.

The complete Intel presentation follows.
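For a sense of where a figure around 1 TFLOPS comes from, theoretical peak FP32 throughput is usually estimated as execution units × FLOPs per EU per clock × clock rate. The short sketch below applies that formula to a commonly cited Gen11 GT2 configuration. The specific numbers (64 EUs, 16 FP32 FLOPs per EU per clock, 1.1 GHz peak clock) are assumptions that do not come from the article, and sustained clocks in real notebooks are typically lower.

```python
def peak_fp32_tflops(execution_units: int,
                     flops_per_eu_per_clock: int,
                     clock_ghz: float) -> float:
    """Theoretical peak = EUs x FLOPs per EU per clock x clock rate (GHz)."""
    gflops = execution_units * flops_per_eu_per_clock * clock_ghz
    return gflops / 1000.0


# Assumed Gen11 GT2 configuration (not stated in the article):
# 64 EUs, 16 FP32 FLOPs per EU per clock (two SIMD-4 FPUs, FMA counted
# as two operations), and a 1.1 GHz peak clock.
gen11 = peak_fp32_tflops(64, 16, 1.1)
print(f"{gen11:.2f} TFLOPS")  # prints "1.13 TFLOPS"
```

This is a peak figure only. It says nothing about how much of that throughput a game can actually reach through shared system memory.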

28 Comments on Intel iGPU+dGPU Multi-Adapter Tech Shows Promise Thanks to its Realistic Goals

Besides MS's Edge, not a single browser uses AMD's hardware decoder. But sadly it will be replaced by Chrome's inefficient engine.

www.amd.com/en/technologies/radeon-dual-graphics-faq

www.amd.com/en/support/kb/faq/dh-017

en.wikipedia.org/wiki/AMD_Hybrid_Graphics

The biggest problem by far is that in a lot of circumstances distributing the compute is counterproductive: the gap between the different levels of performance makes stalls inevitable. The smaller the gap, the better the performance, which basically makes the combination of iGPU+dGPU the worst contender for this sort of stuff.

The same problem existed with CPU+GPU hybrid compute. Nothing really works that way; most things are either fully GPU accelerated or run on just the CPU.

The main concern I have about the concept of using the iGPU (whether it's on AMD or Intel) in a hybrid manner is how much of this will adversely affect performance of the CPU. Intel already throttles when it gets too toasty, and that's mostly just pure CPU work. AMD APUs also have bottleneck limitations, but assuming the next-gen APU is more like the PS5's via Infinity Arch, what about heat limitations for it?

And for that matter, will gaming companies really be interested in pursuing and optimizing games to work over such a niche distributed GPU method when they didn't do so when the tech was first available? I mean sure, a few might just because Intel sponsored a game, but it seems like an overall waste of time if they have to build in the feature for it instead of letting the system distribute it intelligently (i.e., it's up to the CPU/APU + GPU to intelligently distribute the resources necessary).

Also, this is something different compared to Hybrid CrossFire. Under DX12 we could see something much better compared to what Hybrid Graphics was giving us. If Intel's approach works, and probably works in combination with AMD and Nvidia discrete cards, it will be proof that AMD and Nvidia just decided to ignore it.

So I guess you can either choose between your CPU boosting to max and iGPU doing nothing, or CPU and iGPU both running but at lower speeds? Not seeing the value proposition TBH.

software.intel.com/en-us/articles/multi-adapter-support-in-directx-12

www.gpucheck.com/compare/amd-radeon-r9-fury-x-vs-amd-radeon-vii/intel-core-i7-4790k-4-00ghz-vs-intel-core-i7-9700k-3-60ghz/

They must do something and be kept busy up to 80-90%, rather than sitting lazy.

Besides, what are you winning here? Just buy a decent GPU. We all know that any realtime critical task needs to be done as close to the hardware as possible, yet here we are making a full round trip back to the CPU to win what, 5-10% fps?

This is already dead imo, but maybe Intel can pull out rabbits if they combine this with new Xe sauce. End result: you will need an Intel CPU (lol, in 2020-2021?!) and an Intel Xe GPU (which?) to make a dent.

That's not how it works obviously. It's the IGP doing the work, not the CPU cycles you have sitting idle while gaming.

In my newer Pascal/G-Sync notebook the iGPU is hidden at BIOS level; and my old Maxwell/Broadwell/Optimus notebook refuses to run it.

So I'm out of the race...

Intel now is a different story. It starts with zero market share, which will definitely jump thanks to the major OEMs getting nice deals on the GPUs if they buy them as a nice little package with the CPUs. Intel's offerings will also have lower performance compared to AMD and Nvidia offerings, not to mention probably a shorter list of features. So Intel is in a position where it will try every way possible to extend that feature list and probably close the gap in performance with its competitors. And multi-adapter support can do both: offer something that the competition doesn't, and also improve performance when combining an Intel iGPU and a discrete Intel GPU, compared to combining an Intel iGPU, which will be doing nothing in 3D gaming, with an AMD or Nvidia discrete GPU.

P.S. "Combining two Intel GPUs for the ultimate performance in games"

That's a nice marketing line on the box of a laptop, don't you think? And many consumers will never think to ask what kind of GPUs. 2 GPUs vs 1 GPU does sound better.