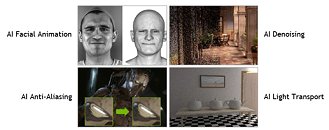

Microsoft today announced DirectX Raytracing (DXR), an extension to its DirectX 12 API that provides components designed to make real-time ray-tracing easier to implement, and that uses compute shaders under the hood for wide graphics card compatibility. NVIDIA feels that its "Volta" graphics architecture has enough computational power on tap to make real-time ray-tracing available to the masses. The company has hence collaborated with Microsoft to develop the NVIDIA RTX technology as an interoperable part of the DXR API, along with a few turnkey effects, which will be made available through the company's next-generation GameWorks SDK program, under GameWorks Ray Tracing, as a ray-tracing denoiser module for the API.

Real-time ray-tracing has long been regarded as a silver bullet for getting lifelike lighting, reflections, and shadows right. Ray-tracing is already big in the real-estate industry, for showcasing photorealistic interactive renderings of property under development, but has stayed away from gaming, which tends to be more intense, with larger scenes, more objects, and rapid camera movements. Movies with big production budgets use render farms to ray-trace each frame ahead of time. Since a movie is not interactive content, studios are willing to spend vast amounts of time and money to painstakingly render each frame using hundreds of rays per pixel, which is why movies have used ray-traced visual effects for years now.