Tuesday, August 1st 2017

NVIDIA Announces OptiX 5.0 SDK - AI-Enhanced Ray Tracing

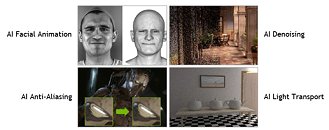

At SIGGRAPH 2017, NVIDIA introduced the latest version of OptiX, its AI-based, GPU-enabled ray-tracing API. The company has been at the forefront of GPU-powered AI efforts in a number of areas, including facial animation, anti-aliasing, denoising, and light transport. OptiX 5.0 brings a renewed focus on AI-based denoising.

AI training is still a brute-force exercise with finesse applied at the end: NVIDIA took tens of thousands of image pairs, each consisting of a render at one sample per pixel and a companion render of the same scene at 4,000 rays per pixel, and used them to train the AI to predict what a denoised image looks like. Going by the numbers NVIDIA used for its training, this means that in theory, users deploying OptiX 5.0 only need to render one sample per pixel of a given image, instead of the 4,000 rays per pixel that would be needed for its final presentation. Based on what it has learned, the AI then fills in the blanks to finalize the image, saving the need to render all that extra data. NVIDIA quotes a 157x improvement in render time using a DGX Station with OptiX 5.0 against the same render on a CPU-based platform (2 x E5-2699 v4 @ 2.20GHz). The OptiX 5.0 release also includes provisions for GPU-accelerated motion blur, which should do away with the need to render a frame multiple times and then apply a blur filter across a composite of the different frames. NVIDIA said OptiX 5.0 will be available in November. Check the press release after the break.

Running OptiX 5.0 on the NVIDIA DGX Station -- the company's recently introduced deskside AI workstation -- will give designers, artists and other content-creation professionals the rendering capability of 150 standard CPU-based servers. This access to GPU-powered accelerated computing will provide extraordinary ability to iterate and innovate with speed and performance, at a fraction of the cost.

"Developers using our platform can enable millions of artists and designers to access the capabilities of a render farm right at their desk," said Bob Pette, Vice President, Professional Visualization, NVIDIA. "By creating OptiX-based applications, they can bring the extraordinary power of AI to their customers, enhancing their creativity and dramatically improving productivity."
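To give a feel for why the training pairs described above combine a one-sample render with a 4,000-sample reference, here is a minimal Python sketch of how noise in a sampled pixel value shrinks as the sample count grows. It is a toy illustration only and does not use the OptiX API: the names `render_pixel`, `rms_error` and `TRUE_RADIANCE` are hypothetical, and uniform random numbers stand in for traced rays.

```python
import random

# Assumption for illustration: each "ray" returns a uniform random value in [0, 1),
# so the converged pixel value is the mean of that distribution.
TRUE_RADIANCE = 0.5


def render_pixel(spp, rng):
    """Average `spp` random samples (a toy stand-in for tracing rays through a pixel)."""
    return sum(rng.random() for _ in range(spp)) / spp


def rms_error(spp, trials=200, seed=0):
    """Root-mean-square error of the pixel estimate over many independent renders."""
    rng = random.Random(seed)
    squared = [(render_pixel(spp, rng) - TRUE_RADIANCE) ** 2 for _ in range(trials)]
    return (sum(squared) / len(squared)) ** 0.5


if __name__ == "__main__":
    for spp in (1, 4000):
        print(f"{spp:>5} spp: RMS error ~ {rms_error(spp):.4f}")
```

In this toy model the error falls roughly with the square root of the sample count, which is why the one-sample image is very grainy and the 4,000-sample image can serve as a clean reference for the same scene.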

OptiX 5.0's new ray tracing capabilities will speed up the process required to visualize designs or characters, dramatically increasing a creative professional's ability to interact with their content. It features new AI denoising capability to accelerate the removal of graininess from images, and brings GPU-accelerated motion blur for realistic animation effects.

OptiX 5.0 will be available at no cost to registered developers in November.

Rendering Appliance Powers AI Workflows

By running NVIDIA OptiX 5.0 on a DGX Station, content creators can significantly accelerate training, inference and rendering. A whisper-quiet system that fits under a desk, NVIDIA DGX Station uses the latest NVIDIA Volta-generation GPUs, making it the most powerful AI rendering system available.

To achieve equivalent rendering performance of a DGX Station, content creators would need access to a render farm with more than 150 servers that require some 200 kilowatts of power, compared with 1.5 kilowatts for a DGX Station. The cost for purchasing and operating that render farm would reach $4 million over three years compared with less than $75,000 for a DGX Station.
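For a rough sense of scale, the power and cost figures quoted above can be turned into ratios. The short Python sketch below does only that arithmetic; the constant and function names are hypothetical, and because the press release gives approximate figures ("some 200 kilowatts", "less than $75,000"), the results are ballpark values rather than measurements.

```python
# Figures quoted in the press release (approximate, as stated there).
FARM_POWER_KW = 200.0      # "some 200 kilowatts" for the render farm
DGX_POWER_KW = 1.5         # DGX Station
FARM_COST_USD = 4_000_000  # purchase and operation over three years
DGX_COST_USD = 75_000      # "less than $75,000", so treated here as an upper bound


def ratio(farm, dgx):
    """How many times larger the render-farm figure is than the DGX Station figure."""
    return farm / dgx


if __name__ == "__main__":
    print(f"Power: ~{ratio(FARM_POWER_KW, DGX_POWER_KW):.0f}x")
    print(f"Cost:  at least ~{ratio(FARM_COST_USD, DGX_COST_USD):.0f}x")
```

With these inputs the render farm draws roughly 133 times the power, and costs at least about 53 times as much, as the DGX Station.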

Industry Support for AI-based Graphics

NVIDIA is working with many of the world's most important technology companies and creative visionaries from Hollywood studios to set the course for the use of AI for rendering, design, character generation and the creation of virtual worlds. They voiced broad support for the company's latest innovations:

- "AI is transforming industries everywhere. We're excited to see how NVIDIA's new AI technologies will improve the filmmaking process." -- Steve May, Vice President and CTO, Pixar

- "We're big fans of NVIDIA OptiX. It greatly reduced our development cost while porting the ray tracing core of our Clarisse renderer to NVIDIA GPUs and offers extremely fast performance. With the potential to significantly decrease rendering times with AI-accelerated denoising, OptiX 5 is very promising as it can become a game changer in production workflows." -- Nicolas Guiard, Principal Engineer, Isotropix

- "AI has the potential to turbocharge the creative process. We see a future where our artists' creativity is unleashed with AI -- a future where paintbrushes can truly 'think' and empower artists to create images and experiences we could hardly imagine just a few years ago. At Technicolor, we share NVIDIA's vision to chart a path that enhances the toolset for creatives to deepen audience experiences." -- Sutha Kamal, Vice President, Technology Strategy, Technicolor

Sources:

NVIDIA News, NVIDIA @ Developer News, via Tom's Hardware