Monday, September 24th 2012

AMD FX "Vishera" Processor Pricing Revealed

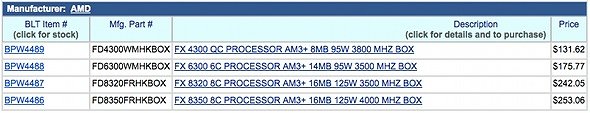

AMD's upcoming second-generation FX "Vishera" multi-core CPUs are likely to appeal to a variety of budget-conscious buyers, if a price list leaked from US retailer BLT is accurate. The list includes pricing for the first four models AMD will launch sometime in October, including the flagship FX-8350. The FX-8350 leads the pack with eight cores, a 4.00 GHz clock speed, and 16 MB of total cache. It is priced at US $253.06. It is followed by another eight-core chip, the FX-8320, clocked at 3.50 GHz and priced at $242.05.

Trailing the two eight-core chips is the FX-6300, with six cores, a 3.50 GHz clock speed, 14 MB of total cache, and a price tag of $175.77. The most affordable chip of the lot, the FX-4350, packs four cores, a 4.00 GHz clock speed, and 8 MB of total cache (likely achieved by halving the L3 cache as well). The FX-4350 is expected to go for $131.42. In all, the new lineup closely parallels the first-generation FX lineup of the FX-8150, FX-8120, FX-6100, and FX-4150.
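As a rough way to compare the leaked prices, the sketch below divides each list price by its core count, using only the figures quoted above. Dollars per core is an assumed, deliberately crude yardstick: it ignores clock speed, cache, and per-core performance, and the names `LINEUP` and `price_per_core` are illustrative only.

```python
# Leaked BLT list prices (US$) and core counts, as quoted in the article.
LINEUP = {
    "FX-8350": (253.06, 8),
    "FX-8320": (242.05, 8),
    "FX-6300": (175.77, 6),
    "FX-4350": (131.42, 4),
}


def price_per_core(price: float, cores: int) -> float:
    """Dollars paid per core; a crude metric that ignores clocks, cache and IPC."""
    return price / cores


if __name__ == "__main__":
    # Print the models ordered from cheapest to most expensive per core.
    for model, (price, cores) in sorted(
        LINEUP.items(), key=lambda item: price_per_core(*item[1])
    ):
        print(f"{model}: ${price_per_core(price, cores):.2f} per core")
```

On these numbers the FX-6300 comes out cheapest per core (about $29.30) and the FX-4350 the most expensive (about $32.86), although the FX-4350's higher clock is exactly what this metric leaves out.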

Source: HotHardware

221 Comments on AMD FX "Vishera" Processor Pricing Revealed

I like you; you are funny.

P.S. That question mark is grammatically incorrect, since you're not asking a question but rather making a statement.

Personally, I want AMD's upcoming Piledriver to draw less power, be cheaper than the competition, and maybe perform better in benchmark apps :nutkick:.

Bulldozer sucked; it was one of the biggest letdowns I have seen in my lifetime in computers. The idea is great: parallel computing is awesome. AMD, however, needs to step up its game for it to catch on. The server market is already turning its ears toward Bulldozer, since it works well with the right programs. Give them a few (mind you, I said a few) more generations and we will see the shoe on the other foot. Intel has had it happen to them on multiple occasions: AMD innovates and Intel copies.

I don't think good marketing matters for AMD; it's not like they could just churn out an extra million CPUs every month even if the demand were there. And they always sell out. People might want them to be present in every tier, but does AMD want that too? Why did AMD become fabless in the first place?

I would imagine the difference between rendering something in 1 hour rather than 1 hour and 20 minutes isn't a huge deal for most people. I would never say BD was completely unusable; in fact, it did pretty well in certain programs, but in my mind there was not enough reason to get it over Intel's offerings at the time. There were a lot of situations where Phenom II was better than Bulldozer (especially the 4xxx and 6xxx variants). Phenom II had the higher IPC, so with the same number of "cores" it performed better clock for clock. The main exception was software optimized for Bulldozer's new instruction sets. It also depends greatly on the game: certain games are obviously far more CPU-dependent, primarily RTS games and MMOs.

Parallel computing is definitely the future, but it's a slow progression, because software developers are slow to support more cores when doing so would increase development costs. As for innovation, AMD definitely innovated a lot; its implementation of x86-64 was definitely the smarter move. The way I see it, historically AMD introduces new concepts but Intel really executes them.

From my experience, I deduced that multi-GPU setups with video cards built around nVidia GPUs work a lot less well on AMD systems than setups with cards built around AMD GPUs. If history is any guide, Intel may have paid nVidia a fortune to make that happen... Now, I'm not saying that is the case; I'm just saying Intel has such a history of monopolistic, illegal, rotten, evil business practices that it wouldn't surprise me overly if it were.

Either way, using multiple GPUs will tend to move the bottleneck from the (single) GPU to the CPU, so that's where the CPU's power becomes more relevant.

Still, in my case it wasn't simply a situation where the second GTX 570 didn't add any performance; it made everything stutter like complete madness.

To the posts above and below: I can't vouch for nVidia on AMD, as I haven't used it, but I have run CrossFire on AMD (this one) and Intel platforms with minimal issues (mostly app-specific) for years now, and the main thing CrossFire users need to know is to turn AFR on. This brings a near doubling of FPS in all but nVidia-optimized games and Metro 2033, but it is also the most crash-prone mode.

Then he came to my workshop and tried my computer. After 6 hours of torturing it, he just commented, "Did you use a highly overclocked Ivy Bridge?" :p All in all, if you CrossFired or SLI'd high-end GPUs, the choice was obvious.

But when it came to the mainstream, i.e. my system with just two HD 7850s, there was no noticeable difference from a highly overclocked 2600K + GTX 680 in 1080p gaming :)

I also extract a lot of .rar files and do a lot of video conversion with Handbrake. The i7 was dramatically faster in extraction and compression. That said, the FX-8120 was great in Handbrake, and overclocked it matched the i7. As for photo editing, it would be hard to tell a difference.

In gaming, though, Bulldozer really does suck in comparison. If you REALLY can't wrap your head around that, take a look at some of the Borderlands 2 CPU scaling benchmarks or the multi-GPU benchmarks (tweaktown.com). There are tons and tons of benchmarks, not just "bars and graphs", that prove this. It really DOES just suck and is behind even the Phenom II.

However, I will note that everybody's gaming experience will be based on their own standards. Some people will be fine with 30fps and claim they see no difference from 60fps (they probably need their eyes checked).