Monday, September 30th 2013

Radeon R9 290X Clock Speeds Surface, Benchmarked

The Radeon R9 290X is looking increasingly good on paper. Most of its rumored specifications, and SEP pricing, were reported late last week, but clock speeds eluded us. A source who goes by the name Grant Kim, with access to a Radeon R9 290X sample, disclosed its clock speeds and ran a few tests for us. To begin with, the GPU core is clocked at 1050 MHz. There is no dynamic-overclocking feature, but the chip can lower its clocks, taking load and temperatures into account. The memory is clocked at 1125 MHz (4.50 GHz GDDR5-effective). At that speed, the chip churns out 288 GB/s of memory bandwidth over its 512-bit wide memory interface. These clock speeds were reported to us by the GPU-Z client, so we give it the benefit of the doubt, even if it goes against the ">300 GB/s memory bandwidth" bullet point in AMD's presentation.
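The 288 GB/s figure follows directly from the reported clocks. A minimal sketch, assuming GDDR5's effective data rate is four times the clock GPU-Z reports (as the 1125 MHz / 4.50 GHz pair above implies); the function names are hypothetical:

```python
def gddr5_bandwidth_gbs(memory_clock_mhz: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    # Assumption: GDDR5 moves 4 transfers per pin per reported memory clock,
    # so 1125 MHz becomes an effective 4500 MT/s.
    effective_mtps = memory_clock_mhz * 4
    bus_width_bytes = bus_width_bits / 8
    # MT/s * bytes per transfer = MB/s; divide by 1000 for GB/s.
    return effective_mtps * bus_width_bytes / 1000


def clock_for_bandwidth_mhz(target_gbs: float, bus_width_bits: int) -> float:
    """Reported memory clock (MHz) needed to reach target_gbs on the given bus."""
    return target_gbs * 1000 / (bus_width_bits / 8) / 4


print(gddr5_bandwidth_gbs(1125, 512))      # 288.0
print(clock_for_bandwidth_mhz(300, 512))   # 1171.875
```

By the same arithmetic, the ">300 GB/s" bullet point would need the memory running at roughly 1172 MHz (about 4.69 GHz effective) or faster on the same 512-bit bus.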

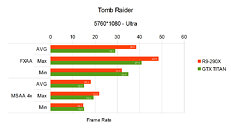

The tests run on the card include frame-rates and frame-latency for Aliens vs. Predator, Battlefield 3, Crysis 3, GRID 2, Tomb Raider (2013), RAGE, and TESV: Skyrim, in no-antialiasing, FXAA, and MSAA modes, at a resolution of 5760 x 1080 pixels. An NVIDIA GeForce GTX TITAN, running the latest WHQL driver, was pitted against it. We must remind you that at this resolution, AMD and NVIDIA GPUs tend to behave a little differently due to the way they handle multi-display setups, so it may be an apples-to-coconuts comparison. In Tomb Raider (2013), the R9 290X romps ahead of the GTX TITAN, with higher average, maximum, and minimum frame-rates in most tests.

RAGE

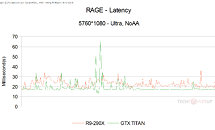

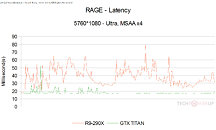

The OpenGL-based RAGE is a different beast. With AA turned off, the R9 290X puts out lower frame-rates overall, and higher frame latency (lower is better). It gets even more inconsistent with AA cranked up to 4x MSAA. Without AA, frame-latencies of both chips remain under 30 ms, with the GTX TITAN's being lower and more consistent. At 4x MSAA, the R9 290X is all over the place with frame latency.

TESV: Skyrim
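Frame latency and frame-rate are two views of the same measurement: a frame that takes t milliseconds corresponds to an instantaneous rate of 1000/t fps. A small sketch of the conversion (the helper names are ours, for illustration):

```python
def frame_time_to_fps(frame_time_ms: float) -> float:
    """Instantaneous frame-rate implied by a single frame's render time."""
    return 1000.0 / frame_time_ms


def fps_to_frame_time(fps: float) -> float:
    """Render time per frame, in milliseconds, at a steady frame-rate."""
    return 1000.0 / fps


print(round(frame_time_to_fps(30.0), 1))  # 33.3
print(round(fps_to_frame_time(60.0), 1))  # 16.7
```

So a frame latency under 30 ms means each frame is delivered at an instantaneous rate of roughly 33 fps or better.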

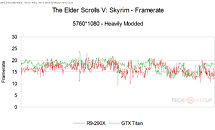

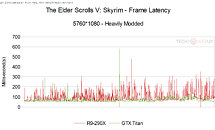

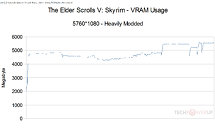

The tester somehow got the game to work at 5760 x 1080. With no AA, both chips put out similar frame-rates, with the GTX TITAN having a higher mean and the R9 290X spiking more often. In the frame-latency graph, the R9 290X has a bigger skyline than the GTX TITAN, which is not something to be proud of. As an added bonus, the game's VRAM usage was plotted throughout the test run.

GRID 2
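Two runs can share the same average frame-rate while one of them spikes far more, which is what a bigger skyline in a frame-latency graph shows. One common way to put a number on it is a high percentile of frame times plus a count of outlier frames. A minimal sketch; the 1.5x-median spike threshold and both frame-time traces are hypothetical, not taken from the tester's data:

```python
import math
import statistics


def latency_summary(frame_times_ms, spike_factor=1.5):
    """Summarise a frame-time log (milliseconds per frame)."""
    median = statistics.median(frame_times_ms)
    ordered = sorted(frame_times_ms)
    # Nearest-rank 99th percentile: at least 99% of frames are at or below this time.
    p99 = ordered[max(0, math.ceil(0.99 * len(ordered)) - 1)]
    # Hypothetical threshold: a "spike" is any frame slower than 1.5x the median.
    spikes = sum(1 for t in frame_times_ms if t > spike_factor * median)
    return {
        "mean_fps": 1000.0 / statistics.mean(frame_times_ms),
        "p99_ms": p99,
        "spikes": spikes,
    }


smooth = [20.0] * 10               # hypothetical: steady 20 ms frames
spiky = [15.0] * 8 + [40.0] * 2    # hypothetical: same total time, two hitches

print(latency_summary(smooth))  # {'mean_fps': 50.0, 'p99_ms': 20.0, 'spikes': 0}
print(latency_summary(spiky))   # {'mean_fps': 50.0, 'p99_ms': 40.0, 'spikes': 2}
```

Both traces average 50 fps, yet the second one's 99th-percentile frame time is twice as long, which is the kind of difference a frame-latency graph exposes and an average frame-rate hides.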

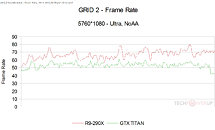

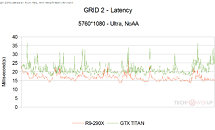

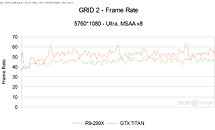

GRID 2 is a surprise package for the R9 290X. With no AA, the chip puts out significantly and consistently higher frame-rates than the GTX TITAN, and offers lower frame-latencies. Even with MSAA cranked all the way up to 8x, the R9 290X holds out pretty well on the frame-rate front, but not on frame-latency.

Crysis 3

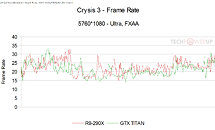

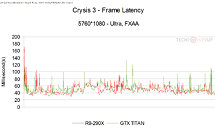

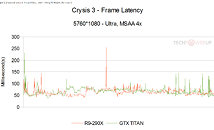

This CryEngine 3-based game offers MSAA and FXAA anti-aliasing, so it was tested only with one of the two enabled. With 4x MSAA, both chips offer similar frame-rates and frame-latencies. With FXAA enabled, the R9 290X offers higher frame-rates on average, and lower latencies.

Battlefield 3

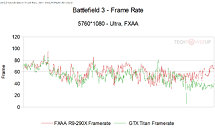

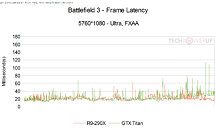

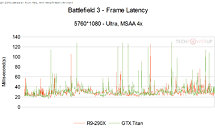

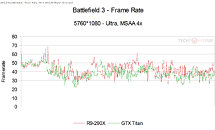

That leaves us with Battlefield 3, which, like Crysis 3, supports MSAA and FXAA. At 4x MSAA, the R9 290X offers higher frame-rates on average, and lower frame-latencies. It gets better for AMD's chip on both fronts with FXAA.

Overall, at 1050 MHz (core) and 4.50 GHz (memory), it's advantage AMD, judging by these graphs. Then again, we must remind you that this is 5760 x 1080 we're talking about. Many thanks to Grant Kim.

100 Comments on Radeon R9 290X Clock Speeds Surface, Benchmarked

There are other DC projects that make good use of GPUs, both BOINC and f@h.

I appreciate the excitement of course, and no doubt it will thankfully bring prices into more reasonable realms for all us mere mortals.

We will have to wait for the R9 290, a GTX 780 counterpart, for custom PCBs.

@ the Tomb Raider Benchmark Graph:

-FXAA-

GTX Titan, Min = 35.0 fps; Max = 40.9 fps; avg stated = 29.1 fps; actual avg = 37.95 fps.

error off = 23.32%.

This implies that the GTX Titan's avg is smaller than its minimum...

RX9-990, Min = 32.0 fps; Max = 48.6 fps; avg stated = 38.6 fps; actual avg = 40.30 fps.

error off = 4.22%.

Given the huge error in the GTX Titan's figures, this graph comes into question, and so does the author's ability.

-MSAA 4x-

GTX Titan, Min = 15.1 fps; Max = 19.4 fps; avg stated = 15.1 fps; actual avg = 17.25 fps.

error off = 12.46%

Here, the author states that the minimum fps is equal to the average... Again, this is another error...

RX9-990, Min = 14.7 fps; Max = 22.0 fps; avg stated = 18.2 fps; actual avg = 18.35 fps.

error off = 0.82%
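The check above can be reproduced in a few lines. The min/max midpoint is only a rough yardstick, not the true average; the firmer test is that a genuine average must lie strictly between the minimum and maximum (or equal both, if the frame-rate never changed). A sketch using the figures quoted above; the function name is hypothetical:

```python
def check_row(fps_min: float, fps_max: float, fps_avg_stated: float):
    """Return (midpoint, % deviation of stated avg from midpoint, plausible?)."""
    midpoint = (fps_min + fps_max) / 2
    deviation_pct = abs(midpoint - fps_avg_stated) / midpoint * 100
    if fps_min == fps_max:
        # A constant frame-rate: the average must equal it.
        plausible = fps_avg_stated == fps_min
    else:
        # Frames hit both extremes, so the true mean falls strictly between them.
        plausible = fps_min < fps_avg_stated < fps_max
    return round(midpoint, 2), round(deviation_pct, 2), plausible


rows = {
    "GTX Titan, FXAA": (35.0, 40.9, 29.1),
    "RX9-990, FXAA": (32.0, 48.6, 38.6),
    "GTX Titan, 4x MSAA": (15.1, 19.4, 15.1),
    "RX9-990, 4x MSAA": (14.7, 22.0, 18.2),
}
for name, row in rows.items():
    print(name, check_row(*row))
```

Run on these numbers, it flags both GTX Titan rows as impossible (an average below the minimum, and an average equal to the minimum while the maximum is higher) and leaves both RX9-990 rows plausible, regardless of how far they sit from the midpoint.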

I expected much, much more consistent and higher performance results... This card changes nothing and will be easily dominated by the EVGA GTX 780 Classified for just a slightly higher price.

Basically, it's no wonder AMD was hiding these cards as long as humanly possible... if they had a game changer, it'd have been available in the summer already. Yup, it's the bitter reality...

The other ones are perfectly possible. The average is not (Max_fps + Min_fps)/2... These are weighted averages. Imagine a situation where your game runs at 55 fps 99% of the time, and at 300 fps the other 1%. Is the average fps 177.5, or will it be closer to 55?
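The scenario above can be worked through directly. A benchmark's average frame-rate is normally total frames divided by total time, which is the same as weighting each frame-rate by the share of time spent at it. A short sketch of that calculation, assuming the run splits cleanly into the two phases described (the function name is hypothetical):

```python
def time_weighted_fps(phases):
    """phases: (share_of_run_time, fps) pairs; returns total frames / total time."""
    total_time = sum(share for share, _ in phases)
    total_frames = sum(share * fps for share, fps in phases)
    return total_frames / total_time


phases = [(0.99, 55.0), (0.01, 300.0)]
print(round(time_weighted_fps(phases), 2))   # 57.45
print((55.0 + 300.0) / 2)                    # 177.5, the naive min/max midpoint
```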

Yes, I think many of us have noted that your min can't be above your average.

Looks like the two rows got swapped, tbh...

Try googling the term "Kepler boost" and you will see what I meant by "cheating".

Nice bait though. :toast:

The graphics landscape is littered with "optimizations" from most anyone who ever released hardware.

As an amusing aside, why do a number of people howl about the max sustainable boost over the minimum guaranteed boost, yet always quote the lower specification numbers associated with the base frequency? For example, every man and his AMD-loving dog seems to put Titan's FP32 compute at 4500 GFLOPS (4.5 TFLOPS)... yet if you take the boost into consideration, i.e. just as in gaming benchmarks, the number is actually 5333 GFLOPS. Weird, huh?
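Peak FP32 figures like these come from shader count x 2 FLOPs per clock (one fused multiply-add) x clock speed. A sketch using the GTX TITAN's published 2688 CUDA cores and 837 MHz base clock (these two specs are not from the thread); the helper names are hypothetical:

```python
def fp32_gflops(shader_cores: int, clock_mhz: float) -> float:
    """Theoretical peak single-precision throughput in GFLOPS."""
    # One fused multiply-add per core per clock counts as 2 FLOPs.
    return shader_cores * 2 * clock_mhz / 1000


def clock_for_gflops(target_gflops: float, shader_cores: int) -> float:
    """Clock (MHz) needed to reach target_gflops with the given core count."""
    return target_gflops * 1000 / (shader_cores * 2)


print(fp32_gflops(2688, 837))                  # 4499.712
print(round(clock_for_gflops(5333, 2688)))     # 992
```

So the 4500 GFLOPS figure corresponds to the base clock, while 5333 GFLOPS corresponds to a sustained clock of roughly 992 MHz.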

Anyhow, we seem to be getting off the subject.

On the topic, the final specs ;)

Personally, I'm disappointed with the VRM settings :mad: The core clock will be hard to deal with; however, the memory could go much higher if Samsung/Hynix chips were used.

Hmm, well, they sell the Titan for 8490 Kr (= € 983,-)... which is normal.

[EDIT:

Naaa... price is already known to be $599, and their description translates:/EDIT]

That makes the card € 845.

Please behave appropriately.

Thank you.