Tuesday, May 19th 2015

Top-end AMD "Fiji" to Get Fancy SKU Name

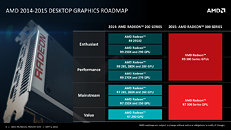

Weeks ago, we were the first to report that AMD could give its top SKU carved out of the upcoming "Fiji" silicon a fancy name à la GeForce GTX TITAN, breaking away from the R9 3xx mold. A new report by SweClockers confirms that AMD is carving out at least one flagship SKU based on the "Fiji" silicon, which will be given a fancy brand name. This product will go head-on against NVIDIA's GeForce GTX TITAN-X. We know that AMD is preparing two SKUs out of the fully-loaded "Fiji" silicon: an air-cooled variant with 4 GB of memory, and a liquid-cooled one with up to 8 GB of memory. We even got a glimpse of what this card could look like. AMD is expected to unveil its "Fiji"-based high-end graphics card at E3 (mid-June 2015), with a product launch a week after.

Source: SweClockers

56 Comments on Top-end AMD "Fiji" to Get Fancy SKU Name

So wait, if it's just the top-end SKU, this now leaves an interesting opening for where the other cards will sit. So are we expecting the top Fiji to be the only one receiving a name like that, with the cut-down variants filling the void below? To me that means we could be seeing something like the cut-down variant being the 390X, and the 390 might be the revamped R9 290X.

I guess this would help explain the rebranded R9 285 being where it is. Though it's a bit confusing, since by that logic a lot of card areas are being ignored, including possibly some cut-down silicon.

One month to go and I'm genuinely excited about this card.

Intel is just ripping people off as usual.

Memory manuf. have admitted to price fixing, again. They were sued before, but since laws don't matter anymore...

If these new GPUs are that good, I have a friend who will unload his three Titan X cards for a good price. Maybe I'll just get those.

Some people seem to think that the pricing structure has somehow escalated, when the fact is the consumer was somewhat spoiled for a brief number of generations thanks to the R600 debacle that led to AMD targeting the value-for-money segment with the HD 3870/4870/5870/6970... which accounts for less than three years of product releases out of the twenty years consumer 3D graphics have been generally available.

Well, if your needs and desires dictated the fortunes of the entire AMD company I could see the relevance, but the company needs to appeal to a wider market than just yourself. You see the market from a personal standpoint; I tend to look at it from the entire customer base.

For the record, I don't see a need for 4K either - especially with OS font issues, lack of native 4K video content, and the nascent state of the 4K monitor market (why bother jumping on the train early when the next wave of panels - and cards - should feature DP1.3 and a wider range of offerings in the 10-bit/IPS class).

AMD major fail with Fiji XT. A 290X with 8 GB is still gonna be the choice for 4K gamers.

Or the Titan X is a much better option. RIP AMD

A 980 or a 980 Ti with 6-8 GB is looking like a great option when they come out, until HBM becomes mature at HBM v2 and 8 GB is possible! And then we will have Pascal vs AMD's version (IF they stay open that long. As I said, I don't know how AMD are even still alive).

Nvidia have already stated that the shipping product will feature HBM2

One shouldn't be too quick to discount that both are working on higher stack counts for their pro line in any case, and that these would work their way down to the gaming line of products.

With HBM1, 8 stacks would give them 8 GB; with HBM2, 16 GB. It makes sense they would work towards a higher stack count, be it HBM1 or HBM2.
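The stack arithmetic in the comment above can be sketched as a quick calculation. The per-stack capacities here (1 GB for HBM1, 2 GB for HBM2) are assumptions inferred from the figures quoted in this thread, not vendor specifications, and `total_vram_gb` is a hypothetical helper name.

```python
# Per-stack capacities in GB, inferred from the figures quoted in this thread
# (assumptions for illustration, not vendor specifications).
GB_PER_STACK = {"HBM1": 1, "HBM2": 2}


def total_vram_gb(generation: str, stacks: int) -> int:
    """Total memory = number of stacks x capacity per stack."""
    if stacks < 1:
        raise ValueError("stacks must be at least 1")
    return GB_PER_STACK[generation] * stacks


# Configurations discussed in the thread: 4 stacks today, 8 stacks hoped for.
for generation, stacks in [("HBM1", 4), ("HBM1", 8), ("HBM2", 8)]:
    print(f"{generation} x {stacks} stacks = {total_vram_gb(generation, stacks)} GB")
```

Under these assumptions, a 4-stack HBM1 card lands at 4 GB, which is why an 8 GB card would need either twice the stacks or denser ones.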

which then they announced they would release Pascal with HBM2 in 2016.

But you can't market a single GPU as a 4K graphics card with only 4 GB. And if they say, oh, you need 2 to do 4K, then that's a complete waste. 290X in CrossFire did 4K. So what are we wasting all that extra horsepower on, if there is no memory there to drive the anti-aliasing and extra textures?

Again, they are saying compression tech is getting better etc. and less memory is needed. But I remain sceptical.

For me the 980 Ti will be a sweet buy if it's got 6-8 GB of RAM. And then if AMD remains alive I'll see what they offer with HBM2, and Pascal from Nvidia. That way there is real competition. HBM1 will be full of issues, I think: fragile links and heat rising to the top DRAM chip. Think Xbox 360 issues and a billion bucks in write-downs!

With consoles having a greater pool of memory to work with, it is probably a safe bet that VRAM usage won't remain static in the foreseeable future.

If you build quality, people will buy it.

If I perceive that it's worth it, (after reading reviews and comments from others who already have it) and I can get the cash together, I'll buy it.

The nVidia Pascal HBM design also has 4 stacks only but they will wait for HBM2 before using it.

My underlying point should still stand though considering Fiji is HBM and not HBM2 and using that interposer, correct? So there will not be an 8GB card out of the gate.