Wednesday, June 10th 2015

GFXBench Validation Confirms Stream Processor Count of Radeon Fury

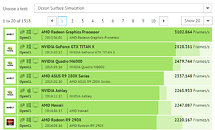

Someone with access to an AMD Radeon Fury sample put it through the compute performance test of GFXBench and submitted its score to the suite's online score database. Running on pre-launch drivers, the sample is identified simply as "AMD Radeon Graphics Processor." Because a GPGPU app is aware of how many compute units (CUs) a GPU has (so it can schedule its parallel processing workloads accordingly), GFXBench was able to report a plausible-sounding CU count of 64. Radeon Fury is based on Graphics Core Next (GCN), in which each CU holds 64 stream processors, so the stream processor count on the chip works out to 64 × 64 = 4,096.
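The arithmetic behind that figure can be sketched in a few lines of Python. This is only an illustration of the multiplication described above: the function name `stream_processor_count` is hypothetical, and the 64-stream-processors-per-CU constant is the GCN figure quoted in the article, not something read from the hardware.

```python
# Assumption: 64 stream processors per compute unit, the GCN figure
# quoted in the article. The function name is hypothetical.
STREAM_PROCESSORS_PER_CU = 64


def stream_processor_count(compute_units, sp_per_cu=STREAM_PROCESSORS_PER_CU):
    """Return the total stream processor count for a given CU count."""
    if compute_units < 0 or sp_per_cu < 0:
        raise ValueError("counts must be non-negative")
    return compute_units * sp_per_cu


# GFXBench reported 64 CUs for the Radeon Fury sample.
fury_sps = stream_processor_count(64)
print(fury_sps)  # 4096
```

The same helper applies to any other CU count a benchmark reports, as long as the per-CU figure for that architecture is known.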

Source: VideoCardz

39 Comments on GFXBench Validation Confirms Stream Processor Count of Radeon Fury

290X -> 380X

290 -> 380

285 -> 370

270X -> 360

There are different kinds of schedulers here. Maybe you mean work-group scheduling (which thread block/work group goes to which SMM), which can be done in the driver. I'm talking specifically about the warp instruction scheduler (that 4.5% of the SMM).

You are right about the GCN scheduler being totally independent ... that doesn't change the fact that you can have a real-world problem only solvable by code that can't feed those big vector processors in a GCN core optimally, for example something with lots of scalar instructions whose calculations depend on each other, plus lots of branching in the mix ... in that case, OpenCL source or CUDA port, it doesn't really matter; those frameworks are very similar.
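To make the branching point concrete, here is a deliberately simplified Python model of one 64-lane GCN wavefront hitting a two-way branch. It assumes that when lanes disagree, the hardware runs both paths back to back with the inactive lanes masked off. The function name and the cycle costs are hypothetical, and real hardware behavior is more nuanced than this sketch.

```python
# Simplified model, not a hardware simulator.
WAVEFRONT_SIZE = 64  # lanes per GCN wavefront


def lane_utilization(takes_branch_a, cost_a, cost_b):
    """Fraction of lane-cycles doing useful work for one wavefront.

    takes_branch_a: list of 64 booleans, one per lane.
    cost_a, cost_b: positive cycle counts for each branch path
    (hypothetical values chosen for illustration).
    """
    if len(takes_branch_a) != WAVEFRONT_SIZE:
        raise ValueError("expected one flag per lane")
    if cost_a <= 0 or cost_b <= 0:
        raise ValueError("path costs must be positive")
    lanes_a = sum(1 for flag in takes_branch_a if flag)
    lanes_b = WAVEFRONT_SIZE - lanes_a
    # A path is executed only if at least one lane takes it.
    cycles = (cost_a if lanes_a else 0) + (cost_b if lanes_b else 0)
    useful = lanes_a * cost_a + lanes_b * cost_b
    return useful / (WAVEFRONT_SIZE * cycles)


uniform = lane_utilization([True] * 64, 10, 10)
split = lane_utilization([True] * 32 + [False] * 32, 10, 10)
print(uniform, split)  # 1.0 0.5
```

Under this model a wavefront whose lanes all agree keeps every lane busy, while an even split between two equally expensive paths leaves half the lane-cycles idle, which is the kind of workload the comment describes as a poor fit for wide vector units.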

What does matter is optimizing specifically for one architecture over another, and I can't really say whether the chosen benchmarks are biased that way.

At any rate, it seems my R9 290, at 2 years old (chip-wise; I only got it last July), is still relevant enough for AMD to simply bump the clocks, add memory, and rebrand?

I get that NVIDIA famously took the 8800 GTS 512MB and rebranded it twice, but that was over a 2-year period. They could at least drop these down to 380X and 380; otherwise, wtf is Fury (XT) going to be named?

It's the same as how politicians pick and choose their statements: they say things that are technically correct, until you start looking at things as a whole and realize they cherry-picked things in their favor.

Had a look at the 8800 GTX: the G92 65 nm core was only rebranded to the 9800 GTX; the GTX+ was G92b, which was 55 nm.